Create APM rules and alerts

The Applications UI allows you to define rules to detect complex conditions within your APM data and trigger built-in actions when those conditions are met.

The following APM rules are supported:

| Rule | Description |
| --- | --- |
| APM Anomaly | Alert when the latency, throughput, or failed transaction rate of a service is anomalous. Anomaly rules can be set at the environment level, service level, and/or transaction type level. Read more in APM Anomaly rule. |
| Error count threshold | Alert when the number of errors in a service exceeds a defined threshold. Error count rules can be set at the environment level, service level, and error group level. Read more in Error count threshold rule. |
| Failed transaction rate threshold | Alert when the rate of transaction errors in a service exceeds a defined threshold. Read more in Failed transaction rate threshold rule. |
| Latency threshold | Alert when the latency or failed transaction rate is abnormal. Threshold rules can be as broad or as granular as you’d like, so you can define exactly when you want to be alerted, whether at the environment level, service name level, transaction type level, and/or transaction name level. Read more in Latency threshold rule. |
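Rules can also be created outside the flyout through Kibana's alerting HTTP API, which accepts a rule definition as JSON. The sketch below builds a request body for a latency threshold rule. It assumes the rule type IDs listed in `APM_RULE_TYPES`, the `apm` consumer, and the parameter names in `params` (`threshold`, `aggregationType`, `windowSize`, `windowUnit`, `environment`, `serviceName`, `transactionType`); check these against the documentation for your Kibana version. The service name `checkout`, the `production` environment, and the 1500 ms threshold are hypothetical examples.

```python
import json
import urllib.request

# Assumed rule type IDs for the four APM rules in the table above.
# Verify them against the rule types exposed by your Kibana version.
APM_RULE_TYPES = {
    "anomaly": "apm.anomaly",
    "error_count": "apm.error_rate",
    "failed_transaction_rate": "apm.transaction_error_rate",
    "latency": "apm.transaction_duration",
}


def build_latency_rule(service_name, environment, threshold_ms,
                       transaction_type="request", window_minutes=5,
                       check_interval="1m"):
    """Return a create-rule request body for a latency threshold rule.

    The parameter names are assumptions, not a confirmed schema.
    """
    return {
        "name": f"Latency threshold: {service_name}",
        "rule_type_id": APM_RULE_TYPES["latency"],
        "consumer": "apm",
        "schedule": {"interval": check_interval},  # how often the rule runs
        "params": {
            "serviceName": service_name,
            "environment": environment,
            "transactionType": transaction_type,
            "aggregationType": "95th",  # compare 95th percentile latency
            "threshold": threshold_ms,  # assumed to be in milliseconds
            "windowSize": window_minutes,
            "windowUnit": "m",
        },
        "actions": [],  # add connector actions here to send notifications
    }


def create_rule(kibana_url, api_key, body):
    """POST the rule body to Kibana and return the decoded JSON response."""
    req = urllib.request.Request(
        kibana_url.rstrip("/") + "/api/alerting/rule",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "kbn-xsrf": "true",  # Kibana rejects write requests without it
            "Authorization": f"ApiKey {api_key}",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Only prints the body; create_rule() is not called here.
    body = build_latency_rule("checkout", "production", threshold_ms=1500)
    print(json.dumps(body, indent=2))
```

Separating the pure `build_latency_rule` from the network call makes it easy to review or version-control rule definitions before sending them with `create_rule`.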

For a complete walkthrough of the Create rule flyout panel, including detailed information on each configurable property, see Kibana’s Create and manage rules.

View and manage rules and alerts in the Applications UI

Active alerts are displayed and grouped in multiple ways in the Applications UI.

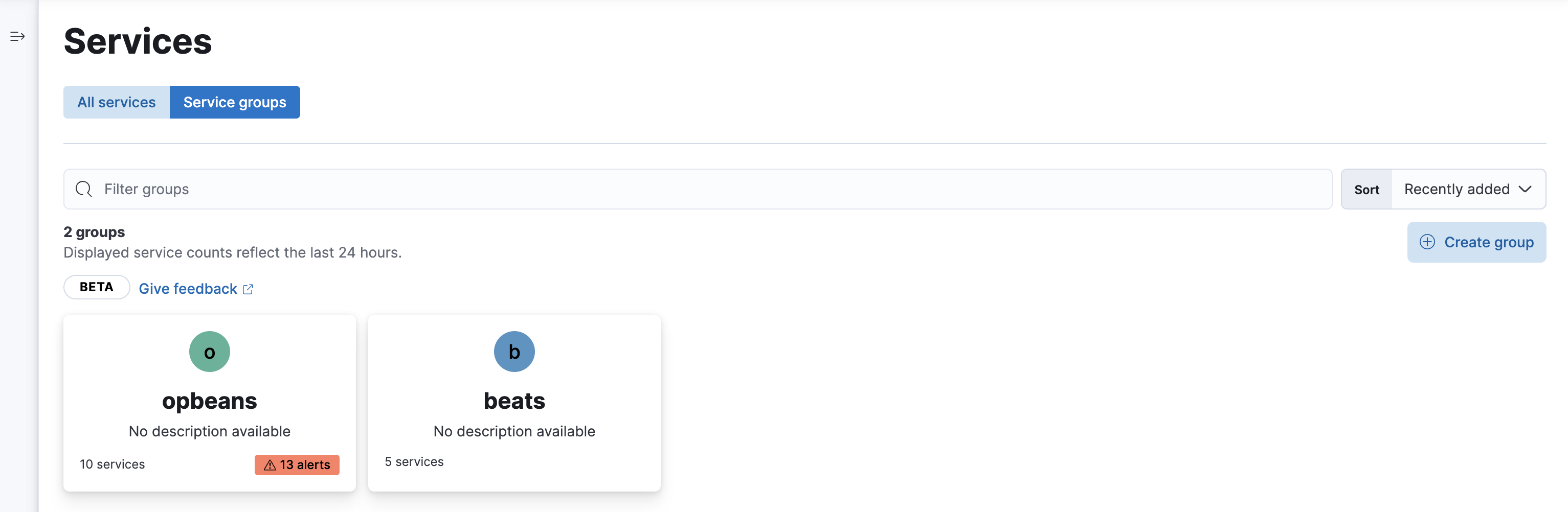

If you’re using the service groups feature, you can view alerts by service group. From the service group overview page, click the red alert indicator to open the Alerts tab with a predefined filter that matches the filter used when creating the service group.

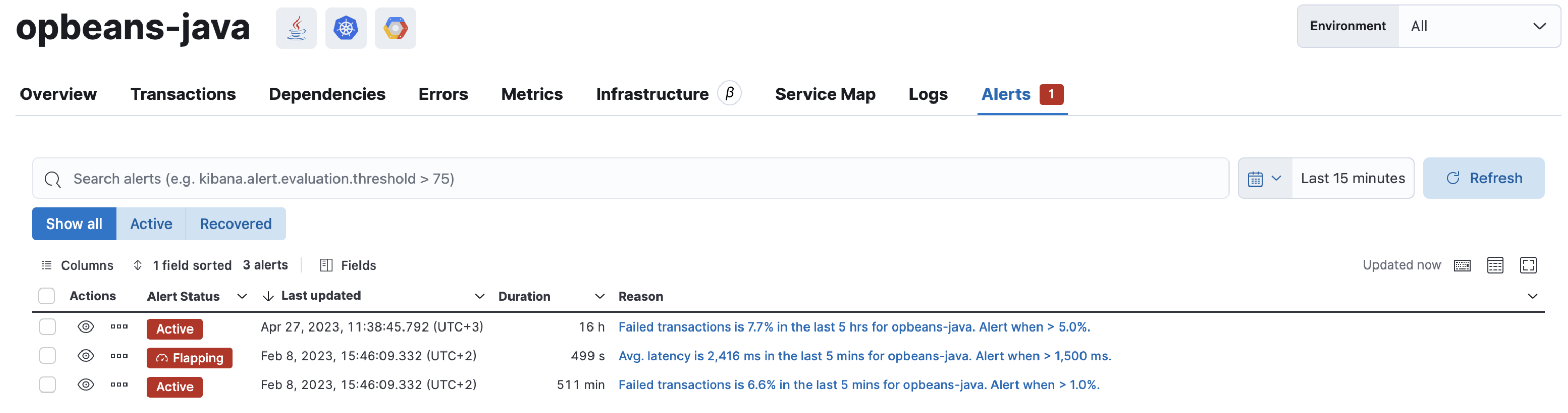

Alerts can be viewed within the context of any service. After selecting a service, go to the Alerts tab to view any alerts that are active for the selected service.

From the Applications UI, select Alerts and rules → Manage rules to open the Kibana Rules page. From this page, you can disable, mute, and delete APM rules.
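The same actions can be scripted against Kibana's alerting HTTP API. The sketch below maps each action on the Rules page to the HTTP method and path it is assumed to use in recent Kibana releases (`_disable`, `_enable`, `_mute_all`, `_unmute_all`, and `DELETE` on the rule resource). The Kibana URL, API key, and rule ID are hypothetical placeholders; confirm the endpoints for your version before relying on them.

```python
import urllib.parse
import urllib.request

# Assumed Kibana alerting API endpoints for the actions on the Rules page.
RULE_ACTIONS = {
    "disable": ("POST", "/api/alerting/rule/{id}/_disable"),
    "enable": ("POST", "/api/alerting/rule/{id}/_enable"),
    "mute": ("POST", "/api/alerting/rule/{id}/_mute_all"),
    "unmute": ("POST", "/api/alerting/rule/{id}/_unmute_all"),
    "delete": ("DELETE", "/api/alerting/rule/{id}"),
}


def build_rule_request(kibana_url, api_key, rule_id, action):
    """Build, but do not send, a request that applies `action` to a rule."""
    if action not in RULE_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    method, path = RULE_ACTIONS[action]
    # Percent-encode the rule ID so it is safe inside the URL path.
    safe_id = urllib.parse.quote(rule_id, safe="")
    return urllib.request.Request(
        kibana_url.rstrip("/") + path.format(id=safe_id),
        method=method,
        headers={
            "kbn-xsrf": "true",  # required by Kibana on write requests
            "Authorization": f"ApiKey {api_key}",
        },
    )


def apply_rule_action(kibana_url, api_key, rule_id, action):
    """Send the request and return the HTTP status code (2xx on success)."""
    req = build_rule_request(kibana_url, api_key, rule_id, action)
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

For example, `apply_rule_action("https://kibana.example.com:5601", key, rule_id, "mute")` would silence notifications for one rule without deleting it.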

See Alerting for more information.

If you are using an on-premises Elastic Stack deployment with security enabled, communication between Elasticsearch and Kibana must have TLS configured. For more information, see the alerting prerequisites.