Overview of image similarity search in Elasticsearch

Imagine being able to mimic a celebrity’s look with a screenshot. Users could use the image to quickly find clothing sold online that matches the style. But this is not the search experience of today.

Customers struggle to find what they need, and if they can’t, they'll leave. Some of them don't remember the name (keyword) of what they are looking for, but have an idea of what it looks like or have an actual image of it. With vector search, an integrated capability in Elastic, organizations can implement image similarity search, also known as reverse image search. This helps organizations create a more intuitive search experience so customers can find what they are looking for with just an image.

You don’t need to be a machine learning expert to implement this functionality in Elastic. That’s because vector search is already integrated into our scalable, highly performant platform. You also get integrations with application frameworks, making it easier to stand up interactive applications.

In this multi-part blog series, you’ll walk through how to build a prototype similarity search application in Elastic using your own set of images. The front end of this prototype application is implemented using Flask. It can serve as a blueprint for your own custom application.

- Part 1: 5 technical components of image similarity search

- Part 2: How to implement image similarity search in Elastic

In this overview blog, you’ll go behind the scenes to better understand the architecture required to apply vector search to image data with Elastic. If you’re actually more interested in semantic search on text rather than images, review the multi-blog series on natural language processing (NLP) to learn about text embeddings and vector search, named entity recognition (NER), sentiment analysis, and how to apply these techniques in Elastic. We’ll get started by stepping back a little and explaining how both similarity and semantic search are powered by vector search.

Semantic search and similarity search — both powered by vector search

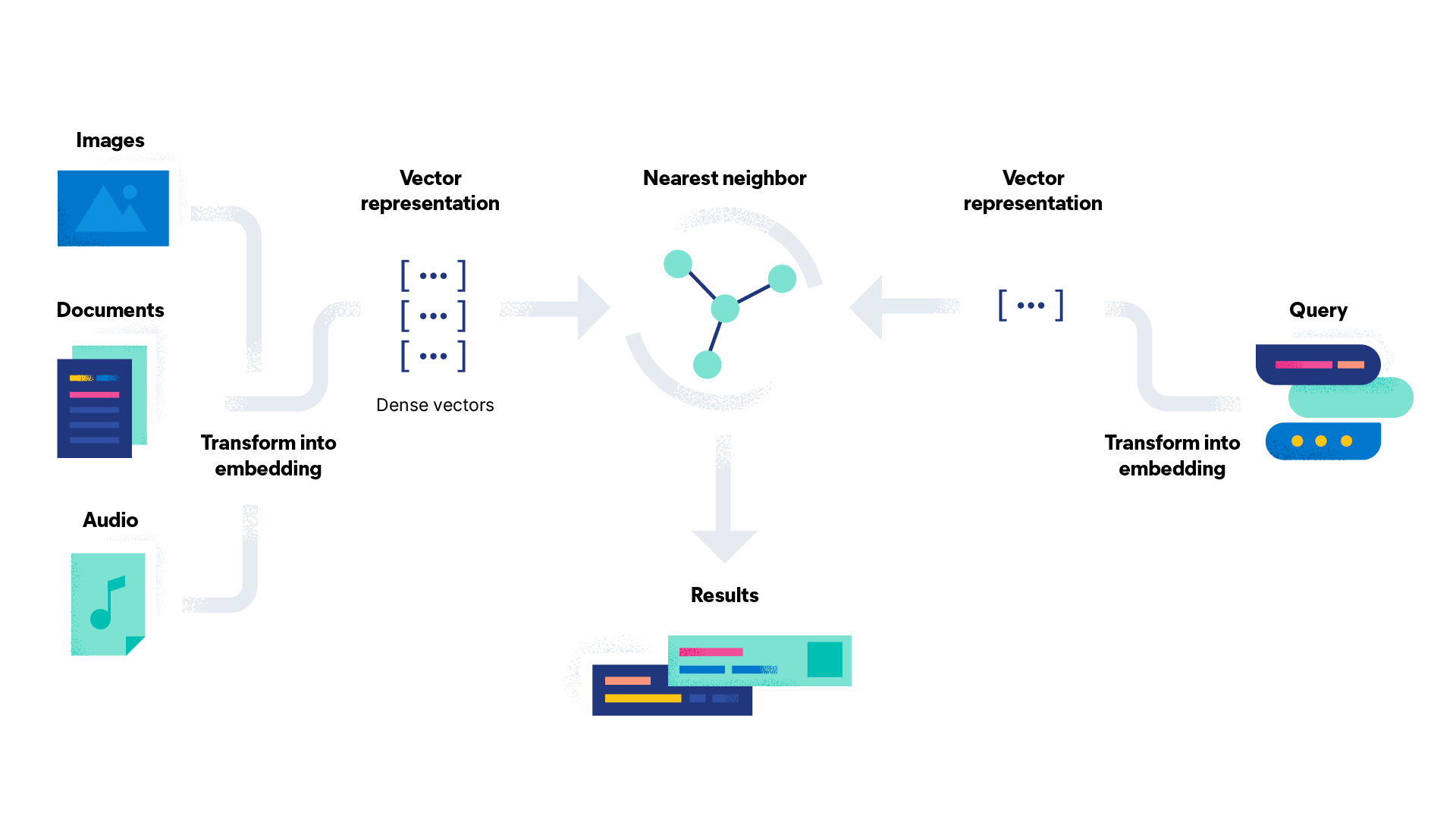

Vector search leverages machine learning (ML) to capture the meaning and context of unstructured data. It finds similar data using approximate nearest neighbor (ANN) algorithms. Compared to traditional text search (in Elastic, based on BM25 scoring), vector search can yield more relevant results and execute faster (without the need for extreme search engine optimizations).

This approach works not only with text data but also images and other types of unstructured data for which generic embedding models are available. For text data, it is commonly referred to as semantic search, while similarity search is frequently used in the context of images and audio.
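To make the idea of "finding similar data" concrete, here is a minimal sketch of exact nearest neighbor search using cosine similarity. It is only an illustration: the three-dimensional vectors and the item names are made up, and it scores every vector in turn, which is the brute-force computation that ANN algorithms approximate in order to scale to large datasets.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(query, vectors, k):
    """Exact kNN: score every stored vector, then keep the k best."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in vectors.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings"; real models produce hundreds of dimensions.
catalog = {
    "red_dress": [0.9, 0.1, 0.0],
    "crimson_gown": [0.8, 0.2, 0.1],
    "blue_jeans": [0.0, 0.2, 0.9],
}

query = [1.0, 0.0, 0.0]  # stands in for the embedding of the user's image
top = nearest_neighbors(query, catalog, k=2)
print([name for name, _ in top])
```

The two items whose vectors point in nearly the same direction as the query rank first, regardless of whether their names share any keywords with it.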

How do you generate vector embeddings for images?

Vector embeddings are numeric representations of data and its related context, stored in high-dimensional (dense) vectors. Models that generate embeddings are typically trained on millions of examples to deliver more relevant and accurate results.

For text data, BERT-like transformers are a popular choice for generating embeddings that work with many types of text, and they are available in public repositories like Hugging Face. Embedding models that work well on any type of image are a subject of ongoing research. The CLIP model, used by our teams to prototype the image similarity app, is distributed by OpenAI and provides a good starting point. For specialized use cases, advanced users may need to train a custom embedding model to achieve the desired performance. Next, you need the ability to search efficiently. Elastic supports the widely adopted HNSW-based approximate nearest neighbor search.
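As a rough sketch of what storing and searching image embeddings might look like, the snippet below builds an index mapping with a `dense_vector` field and a kNN search body as plain Python dictionaries. The field names (`image_id`, `image_embedding`) and the default values for `k` and `num_candidates` are assumptions chosen for illustration. The 512 dimensions match the output size of the CLIP ViT-B/32 variant; other models will differ. The top-level `knn` search option is assumed to be available, which is the case in recent 8.x releases.

```python
import json


def build_index_mapping(dims=512):
    """Mapping with an indexed dense_vector field (searched via HNSW)."""
    return {
        "mappings": {
            "properties": {
                # Hypothetical field names, chosen for this example only.
                "image_id": {"type": "keyword"},
                "image_embedding": {
                    "type": "dense_vector",
                    "dims": dims,
                    "index": True,
                    "similarity": "cosine",
                },
            }
        }
    }


def build_knn_search(query_vector, k=5, num_candidates=50):
    """Search body asking for the k approximate nearest neighbors."""
    if num_candidates < k:
        raise ValueError("num_candidates must be at least k")
    return {
        "knn": {
            "field": "image_embedding",
            "query_vector": list(query_vector),
            "k": k,
            "num_candidates": num_candidates,
        },
        "_source": ["image_id"],
    }


mapping = build_index_mapping()
search_body = build_knn_search([0.0] * 512, k=3, num_candidates=30)
print(json.dumps(mapping, indent=2))
```

A larger `num_candidates` makes each shard consider more candidates, which generally trades some speed for better recall.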

How similarity search powers innovative applications

How does similarity search power innovation? In our first example, users could take a screenshot and search to find a favorite celebrity’s outfit.

You can also use similarity search to:

- Suggest products that are similar to what other shoppers purchased.

- Find related existing designs or relevant templates from a library of visual design elements.

- Find songs you may like from popular music streaming services based on what you listened to recently.

- Search through huge datasets of unstructured and untagged images using a natural language description.

Architecture overview of image similarity app

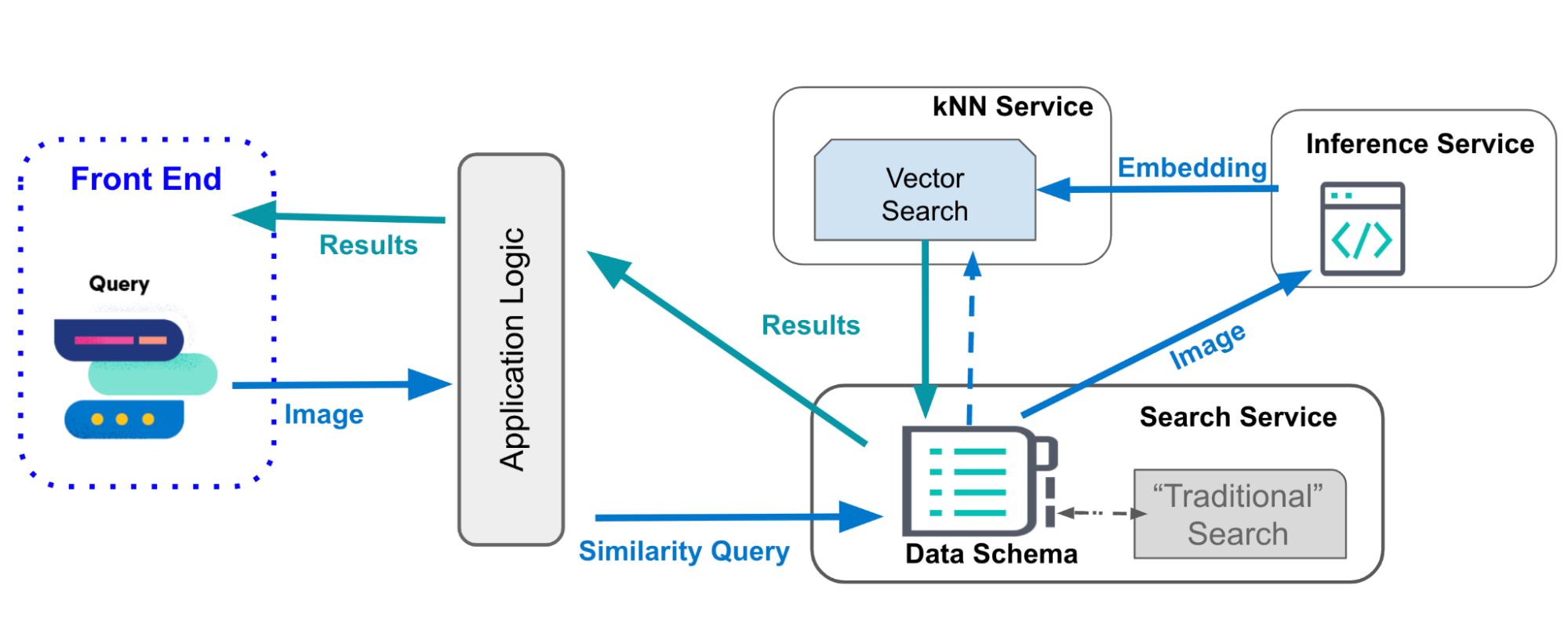

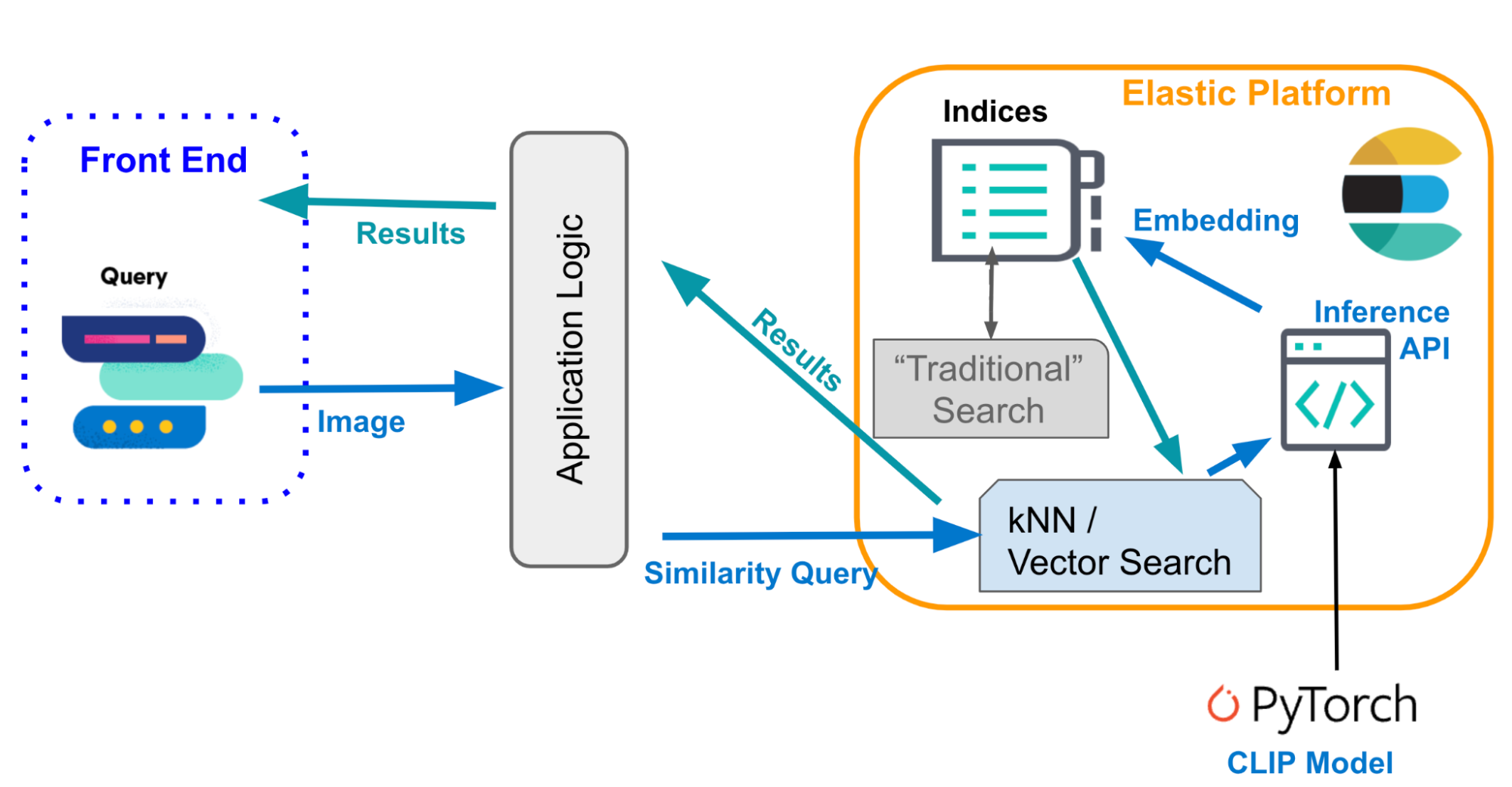

Making this kind of interactive application can seem complex. That’s especially true if you have been considering implementation within a traditional architecture, as shown in the first diagram above. The second diagram shows how Elastic significantly simplifies this architecture.

Most search application frameworks for searching your indexed data do not natively support the k-nearest neighbor (kNN) search needed for vector (similarity) search, nor the inference needed to apply NLP models. Therefore, the image similarity application needs to interact with multiple services: in addition to the core search service, it needs a kNN service. If text processing is involved, it will also need to interact with an NLP service, as shown in the first diagram. This can be complicated to build and maintain.

By contrast, when implementing image similarity search using the Elastic Platform, vector search and NLP are natively integrated. The application can communicate directly with all components involved. The Elasticsearch cluster can execute both kNN searches and NLP inference, as shown in the second diagram.
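One way to picture the simplified flow is that both steps of a text-to-image search, embedding the user's description and running the kNN search, become requests to the same cluster. The sketch below only builds those two requests as dictionaries and does not send them. The model ID, index name, and field names are hypothetical placeholders, and the shape of the inference response is an assumption based on how text embedding results are commonly returned, so check it against your Elasticsearch version.

```python
def build_infer_request(model_id, text):
    """Request asking a deployed text embedding model to vectorize the text."""
    return {
        "method": "POST",
        "path": f"/_ml/trained_models/{model_id}/_infer",
        "body": {"docs": [{"text_field": text}]},
    }


def extract_embedding(infer_response):
    """Pull the vector out of the (assumed) inference response shape."""
    return infer_response["inference_results"][0]["predicted_value"]


def build_knn_request(index, field, query_vector, k=5, num_candidates=50):
    """Request running an approximate kNN search against the same cluster."""
    return {
        "method": "POST",
        "path": f"/{index}/_search",
        "body": {
            "knn": {
                "field": field,
                "query_vector": query_vector,
                "k": k,
                "num_candidates": num_candidates,
            },
            "_source": ["image_id"],
        },
    }


# Hypothetical names, for illustration only.
infer_req = build_infer_request("my-clip-text-model", "red evening dress")

# Stand-in for what the cluster might return for the request above.
fake_response = {"inference_results": [{"predicted_value": [0.12, -0.07, 0.33]}]}

vector = extract_embedding(fake_response)
knn_req = build_knn_request("my-image-embeddings", "image_embedding", vector)
print(infer_req["path"], "->", knn_req["path"])
```

In the traditional architecture, the first request would go to a separate NLP service and the second to a separate kNN service, each with its own client, deployment, and monitoring.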

Why choose Elastic for image similarity search?

Implementing image similarity search in Elastic provides you with distinct advantages. With Elastic, you can…

Reduce application complexity. With Elastic you don’t need separate services for running kNN search and vectorizing your search input. Vector search and NLP inference endpoints are integrated within a scalable search platform. In other popular frameworks, applying deep neural networks and NLP models occurs separately from scaling searches on large data sets. This means you need to hire experts, add development time to your project, and set aside resources to manage it over time.

Scale with speed. In Elastic, you get both scale and speed. Models live alongside the nodes running search in the same cluster. This applies to on-premises clusters, and even more so if you deploy to the cloud. Elastic Cloud allows you to easily scale up and down, depending on your current search workload.

Reducing the number of services needed by an application has benefits beyond scaling. You can experience simplified performance monitoring, a smaller maintenance footprint, and fewer security vulnerabilities — to name a few. Future serverless architectures will take application simplicity to a whole new level.

What’s next?

Parts 1 and 2 in this series will provide more details about how to implement image similarity search in Elastic. They will include technical design considerations for each of the components in the high-level architecture plus actual code to implement the architecture in Elastic.

To get some hands-on experience with applying vector search within Elastic, sign up for our hands-on vector search workshop. Check out our virtual event hub to find and sign up for the next workshop. If you have questions about any of the concepts discussed in this series in the meantime, engage with our community in this discussion forum.

Originally published December 14, 2022; updated February 28, 2023.