Unified observability for your cloud ecosystem

As more applications and workloads move to the cloud, organizations are increasingly challenged to get the cross-platform visibility and analytics they need to proactively detect and resolve issues, especially in complex hybrid and multi-cloud ecosystems. Elastic can help.

Explore how easy it is to get started monitoring your infrastructure with OpenTelemetry in this 15-minute hands-on learning.

Try hands-on learning

Take a deep dive into the considerations you should keep in mind when choosing an observability solution.

Download the ebook

Migrating to the cloud? Understand the challenges organizations face when migrating and how Elastic Observability can help.

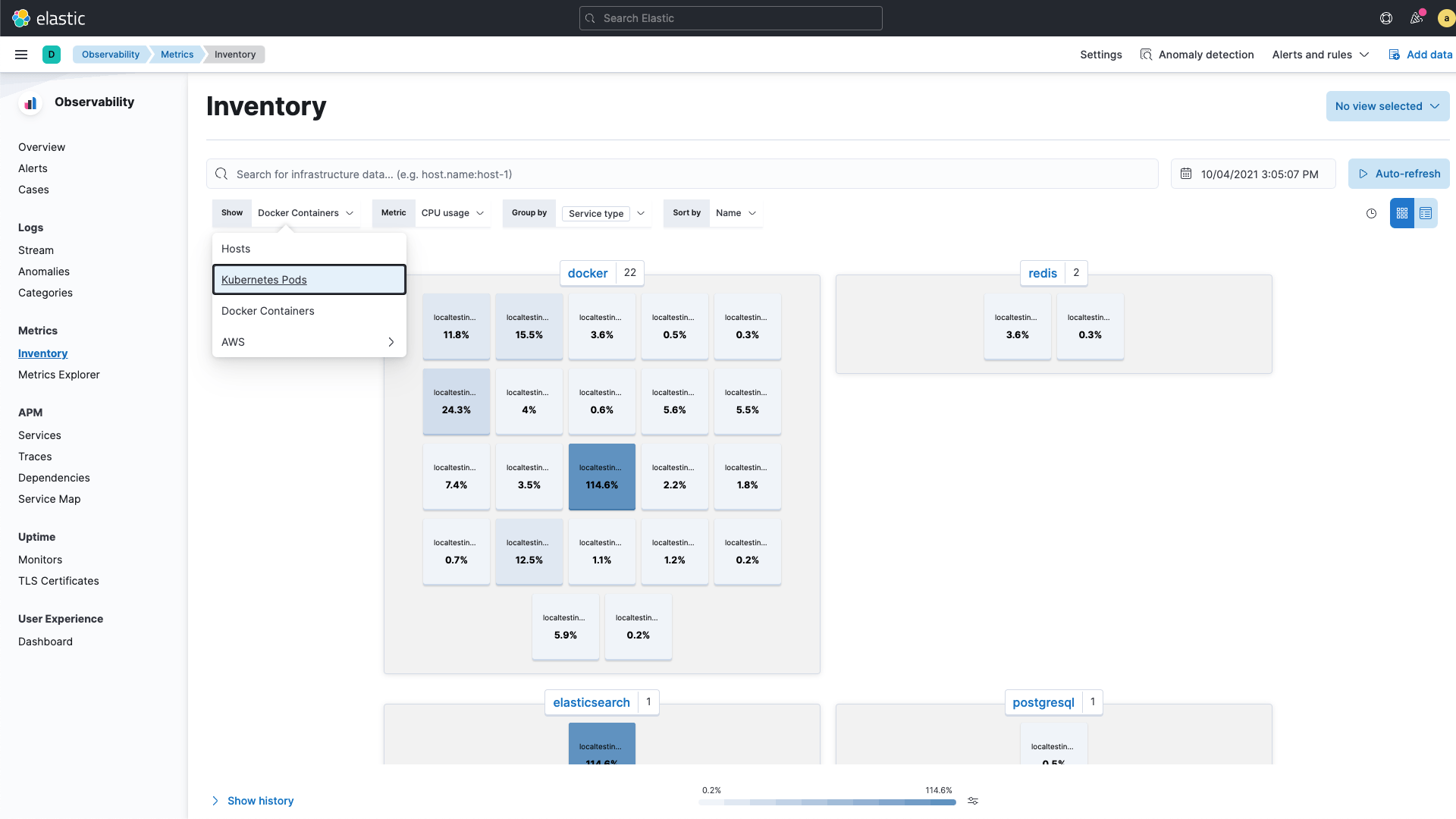

Watch now

From applications to containers to serverless

Get immediate operational visibility across cloud-native infrastructure and applications, including services, hosts, containers, Kubernetes pods, and serverless tiers.

Get the complete picture

Unify visibility across your on-premises and cloud environments. Ingest telemetry from applications, infrastructure, and more, with 200+ out-of-the-box integrations for popular services and platforms.

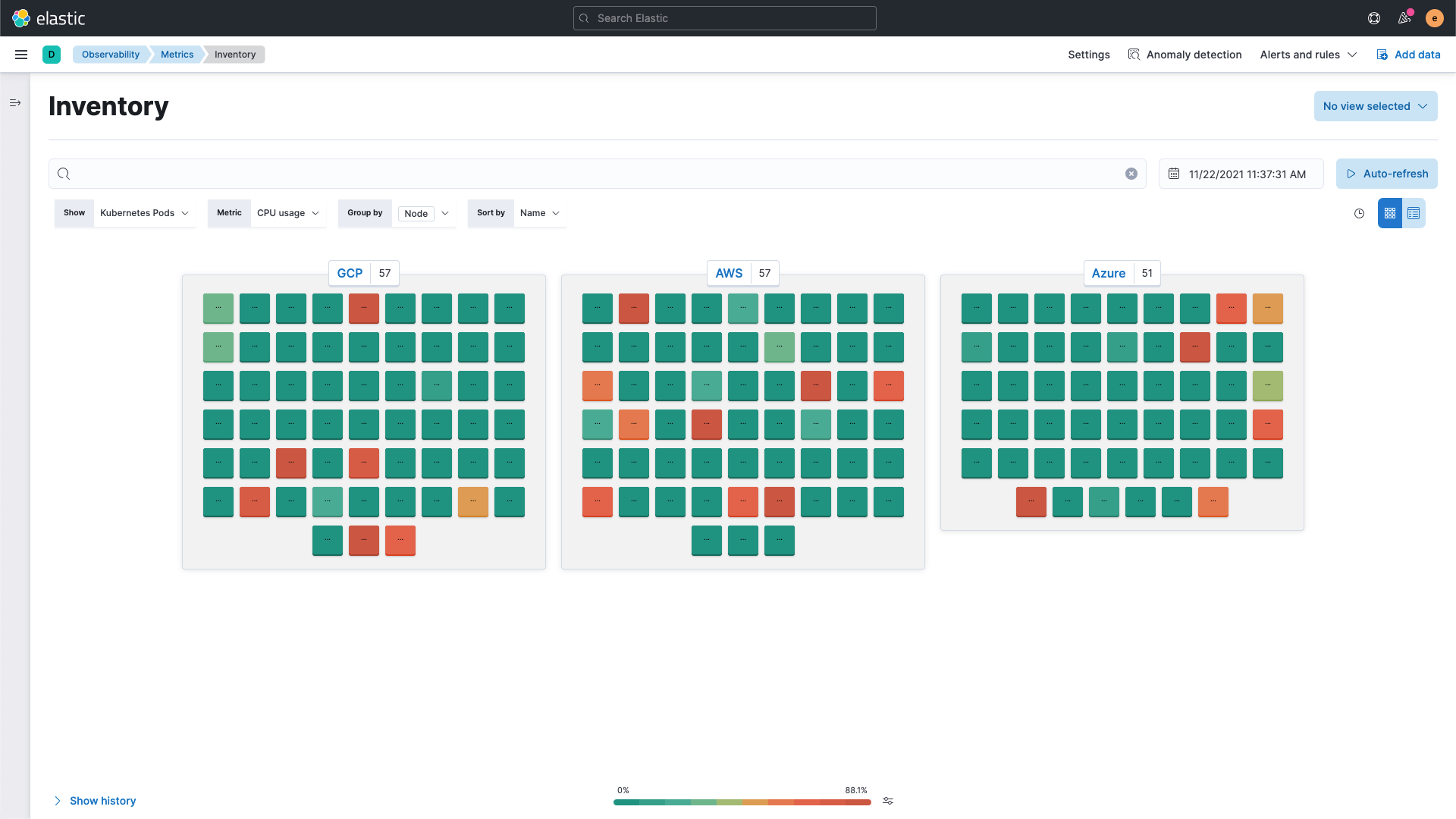

Monitor cloud-based infrastructure

Track performance across a broad range of cloud services from Amazon Web Services, Google Cloud, and Microsoft Azure. Drive efficiency with agentless data ingestion through native integrations within the cloud console.

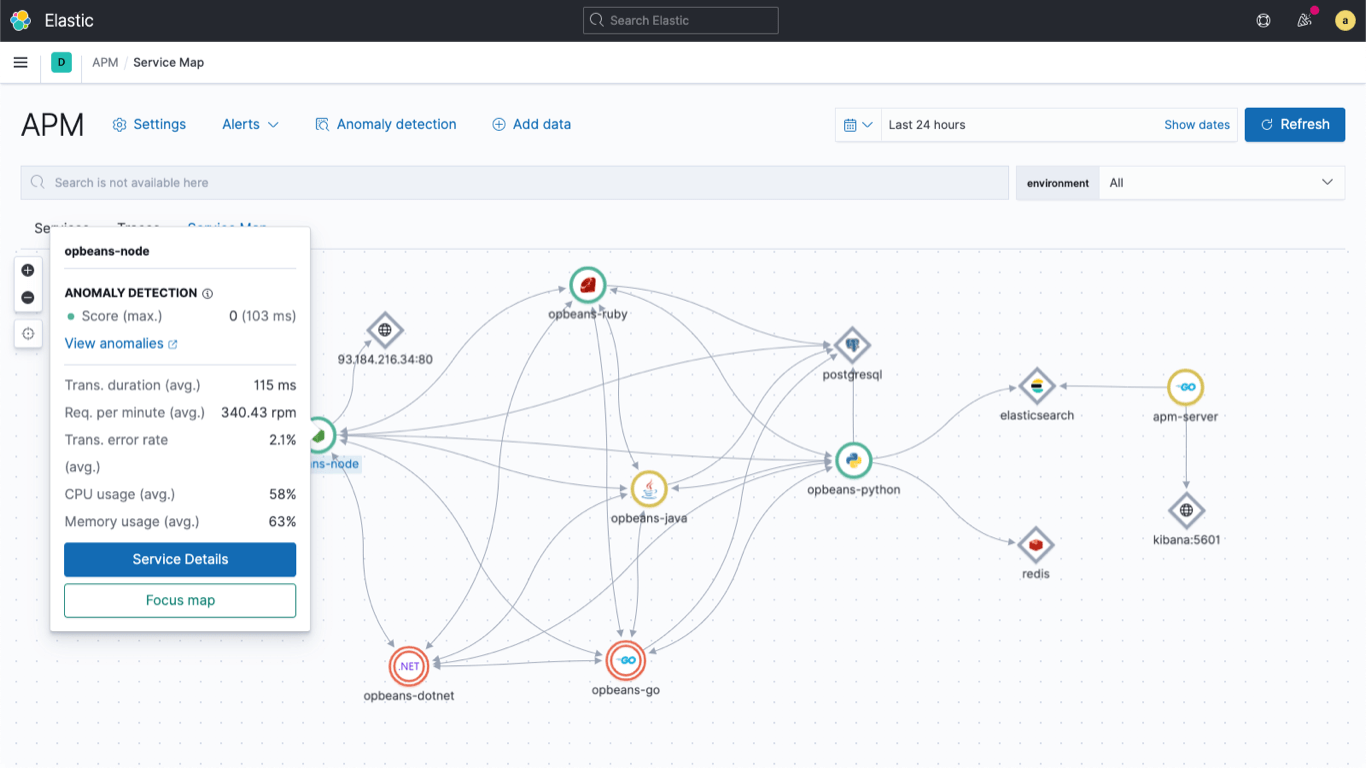

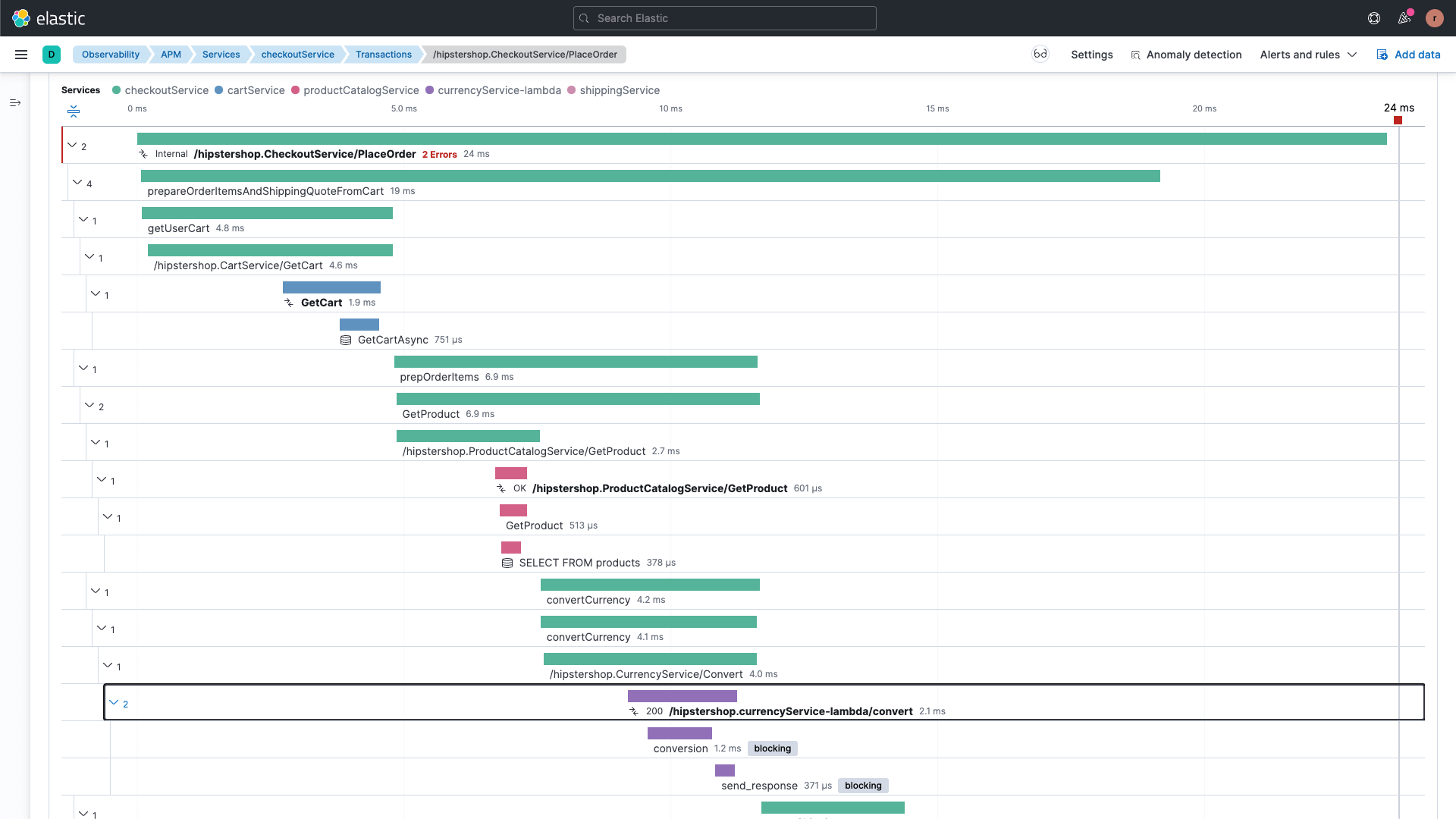

Troubleshoot application errors and performance

Understand complex microservices architectures and get deep application profiling with end-to-end distributed tracing. Map application service dependencies spanning multiple cloud or hybrid environments.

Turn data into action

Get the visibility you need with out-of-the-box dashboards, alerts, and ingest pipelines for extracting structured fields. Detect anomalous behavior with scalable machine learning and automatically correlate transaction performance to determine root cause. Send alerts to your notification tool of choice.

A cloud experience built by Elastic

As the company behind Elasticsearch, we bring our features, support, search, and security to your clusters in the cloud.

Observability

Unify your logs, metrics, and APM traces at scale in a single stack.

Security

Prevent, detect, and respond to threats — quickly and at scale. Because while you observe, why not protect?

Search

Powerful, modern search experiences for your workplace, website, or apps.