Is it a good time(stamp) for centralized logging?

With almost all deployed software systems consisting of multiple moving parts, it’s hard to find arguments against centralized aggregation of log entries. Deployment technologies like lightweight virtualization, Kubernetes, and serverless computing tend to spread out the components of a system across a large number of runtime primitives. Gaining visibility into the state and history of such systems is as important as ever but can also be more difficult than ever.

While the consensus about the necessity of centralized logging is strong, the opinions about the format and content of the log entries are manyfold and can border on the philosophical. In this article we will focus on one of the central pieces of information in every log entry: the timestamp. We will take a look at the fundamental whys and hows of timestamps and try to derive some recommendations and best practices.

Why include timestamps?

The inclusion of timestamps in itself is a pretty universal feature in most logging solutions and for good reason: the timestamp gives each log entry a point in time and thereby defines an ordering of events. Such order might be achievable without a timestamp in a simple system with a single log stream simply by preserving the sequence in which the log entries were emitted. In systems producing multiple log streams, though, the timestamps play a critical role in correlating entries across streams and in deriving a global order.

Knowing the order of events is not an end in itself, though. Leaving aside philosophical and physical considerations of the nature of causal relationships, it has proven itself to be indispensable when investigating the causality of events.

Time representation

This is a good time to introduce an obvious assumption we’ll make for the rest of this text: regardless of whether the log format is unstructured or highly structured, the timestamp needs to be available as a separate datum. In pure text logs, this means it must be parsed from the log message or inferred in some other way. In structured logs, it is usually emitted in a well-known field from the start.

In order to store and transmit the time, each implementation must inevitably choose a technical representation. That choice dictates several important properties and limitations of the time handling aspects of the system:

- Point of reference: In computing, time values are usually stored and transmitted as counts of time units starting from a well-defined reference time. That reference can relate to technical properties such as the ticks of a processor since it has been powered up or based on an external calendar, such as the UNIX epoch.

- Precision: The size of a time unit defines the precision or granularity with which a point in time can be addressed and thereby define the lower limit of what would be considered “simultaneous” in a system. A sufficiently high precision is also important to ensure stability in the ordering of events. Usual precisions range from seconds to nanoseconds.

- Range of values: No known memory technology can hold values of infinite size, so there will be an earliest and latest time that can be represented in the implementation.

When communicating time values between parts of the system, it is important that senders and receivers share common assumptions about these properties or that the representation explicitly includes information about that. An example of the latter would be the inclusion of a time zone or of a time measurement unit.

There are two especially common encodings of time values that each have different limitations in regard to these degrees of freedom: numbers and strings.

Numeric representations

Many operating systems and programming languages use numeric representations of time values. The POSIX time probably is the most famous example of that, as it’s used by UNIX and related operating systems as well as many C dialects, Java, JavaScript, and many other languages. Another example would be UUID version 1 or the Network Time Protocol.

Even though there are slight differences, the numeric representations often have very space-efficient binary encodings and are trivial to parse due to their rigid structure. The assumed epoch is usually implicit.

Elasticsearch also stores the values of date and date_nanos fields efficiently as numeric values normalized to UTC internally, even though they can be ingested and read in string representations. The range of representable values of date_nanos fields is smaller in exchange for the increased precision.

Examples

- POSIX time:

1580227855 - JavaScript time:

1580227855778 - UUID version 1:

4826e8e2-41e8-11ea-b77f-2e728ce88125

String representations

The representation of time as strings is common in ASCII-based serialization mechanisms of network protocols and document formats intended for exchange between different systems. The prevalent format is specified by ISO 8601 and the derived, but more strict, RFC 3339. Among the advantages of these two formats are the flexibility to include offset information and leap seconds and to cover a large range of date values. Conveniently, its lexicographic order is the same as its time order. It’s also often chosen because it’s easily readable by humans, which is often important for logging use cases.

The flip side of that power and flexibility is the need for a significantly more complex parser, which is required to convert the string to a numeric time value to perform time arithmetic and conversion.

When indexing or querying date fields, the Elasticsearch API takes care of converting the date to and from the efficient internal representation if a format string is supplied in the mapping or the query. The format string can refer to one of the numerous built-in formats or it can be a custom format.

Examples

- RFC 3339 with an offset:

2020-01-28T17:12:15.775+01:00 - RFC 3339 in UTC:

2020-01-28T16:12:15.775Z - SMTP date:

2 Nov 81 22:33:44

General recommendations

In the best case, the above might have been a mildly interesting primer on handling time values in software. What would be some useful recommendations that we can offer for handling such timestamps in log aggregation scenarios? What should we keep in mind when formatting the timestamps in logs?

- Include and maintain explicit time zone offset information. Explicit is better than implicit, as the Zen of Python puts it. If the time zone is omitted or discarded too early, correct interpretation of time values becomes a guessing game. In the worst case, it leads to hard-to-discover bugs with costly mitigations. RFC 3339 provides a robust and concise format to use, that is universally known and human-readable.

- Otherwise, normalize to location-independent universal time. If it’s not feasible to include time zone information, agree on a common reference frame for all involved systems. The numeric POSIX time is a good smallest common denominator for that purpose.

- Choose and maintain sufficient precision. The appropriate precision required might ultimately vary between high-precision industrial environments to low-volume batch-oriented systems. But across them all, it is impossible to recover lost precision at a later stage in the pipeline. As such, the precision should only be reduced if technical limitations demand it. An ambiguous order of events in the logs might hinder investigations or even lead to false conclusions about the causality.

- Otherwise, add a sequence counter. If the timestamps can not be produced with sufficient precision to ensure an absolute ordering, consider artificially adding a monotonically increasing counter to the event as early as possible in the pipeline to break the tie between “simultaneous” events.

- Synchronize time across systems. All of the above is not sufficient to ensure that log events from different machines can be correlated correctly — their clocks need to be synchronized to within use case–specific tolerances, which usually relates to the required precision of the time values. A common mechanism for clock synchronization is the Network Time Protocol already mentioned above.

- Otherwise, rewrite the timestamp with a consistent time value at the earliest opportunity. If technical limitations make it impossible to synchronize the clocks of the entity emitting the log event, it is sufficient for some applications to add or rewrite the timestamp to a synchronized and consistent value at a later stage in the log processing pipeline. This is what Logstash and Filebeat do when they receive events without timestamps. The efficacy of that highly depends on properties of the specific deployment scenarios, though, such as log event transmission jitter and latency. The closer the rewriting entity is to the source the more accurate the timing in most cases.

Time to shine

With all of the above in mind, you’re well equipped to steer clear of a major pitfall when setting up a centralized logging system. It enables you to correlate log events from a wide variety of sources and make use of state-of-the-art tooling such as Elastic Observability.

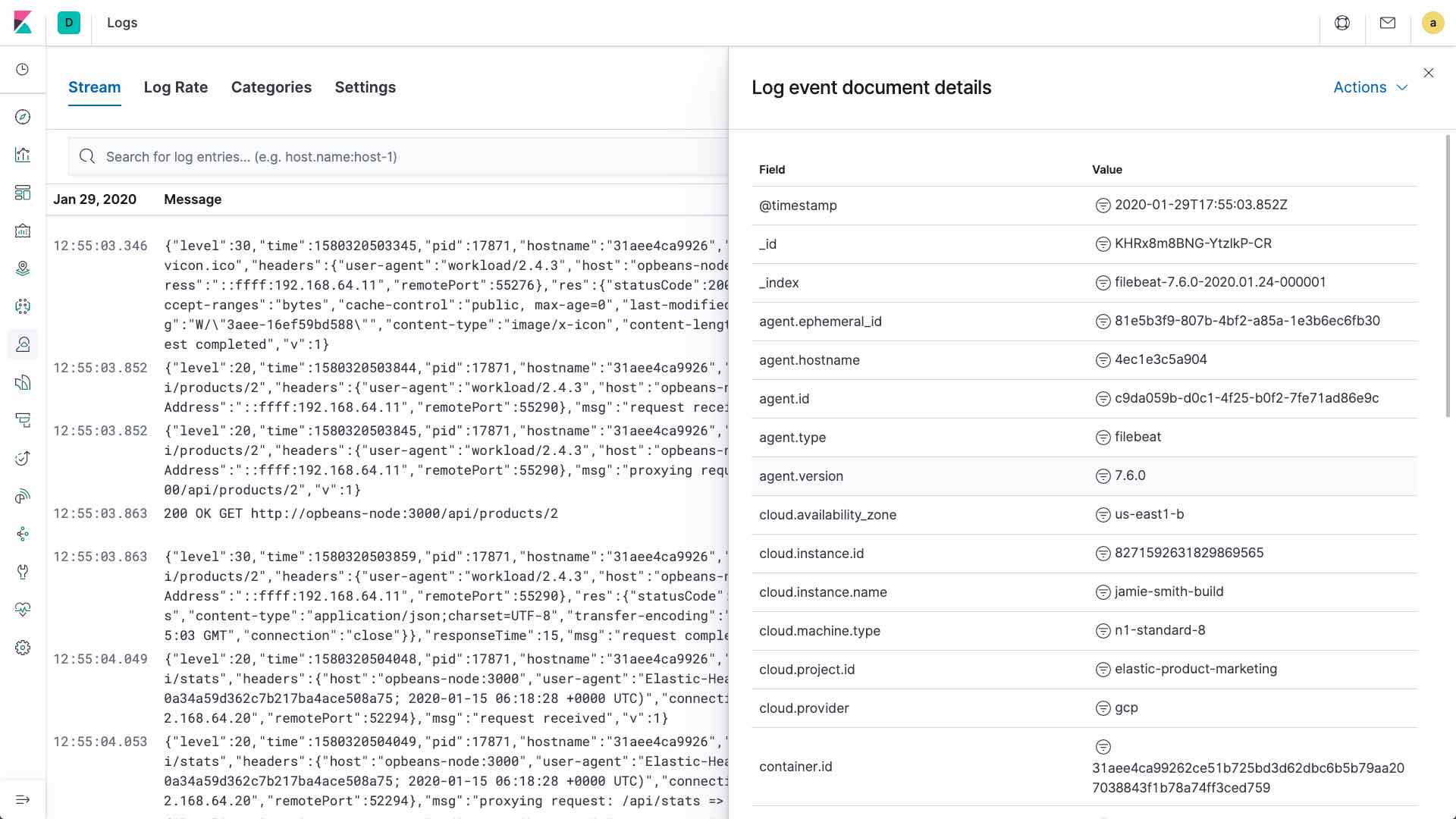

Filebeat and Logstash know how to parse your log event’s timestamps to forward them as Elastic Common Schema (ECS)–compliant documents. Elasticsearch can parse the timestamps to make them available for efficient date queries and aggregations. And the Logs UI can live-tail your log events and their messages and employ machine learning to provide insights into large volumes of events.

As we’ve seen above, it’s a complex topic with many facets, but with the right tooling and community it can be mastered. Poke around in our logging demo or spin up a free trial of Elasticsearch Service to see how easy log analytics can be. And as always stop by and exchange insights with the community and the developers in our community forum, or drop in at our community slack for a chat. And remember, timestamps are your friends!