Tracing history: The generative AI revolution in SIEM

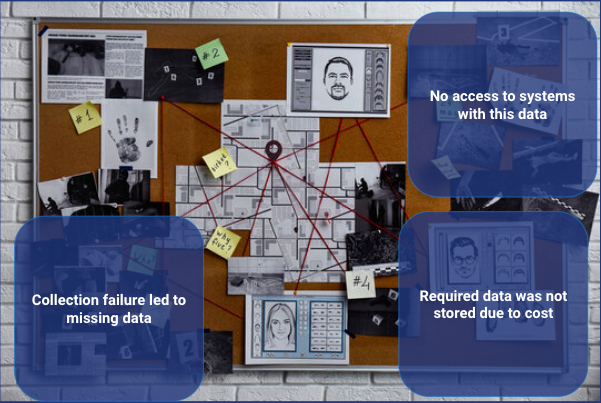

The cybersecurity domain mirrors the physical space, with the security operations center (SOC) acting as your digital police department. Cybersecurity analysts are like the police, working to deter cybercriminals from attacking their organization or to stop them in their tracks if they try. When an attack occurs, incident responders, akin to digital detectives, piece together clues from many different sources to determine the order and details of events before building a remediation plan. To accomplish this goal, teams stitch together numerous products (sometimes dozens) to determine the full scope of an attack and identify how to stop the threat before damage and loss occur to their business.

In the early days of cybersecurity, analysts realized that centralizing evidence streamlines digital investigations. Otherwise, they would spend most of their time trying to gather the required data from those aforementioned products separately — requesting access to log files, scraping affected systems for information, and then tying this disparate data together manually.

I remember using a tool in my forensic days called “log2timeline” to organize data into a time series format that was also color coded for the type of activity, like file creation, logon, etc. Early SANS training courses taught the power of this tool and timelining in general for analysis. This was quite literally an Excel macro that would sort data into a “super” timeline. It was revolutionary, providing a simple way to organize so much data, but it took a long time to produce.

Now, imagine if detectives had to wait days before accessing a crime scene or if the evidence in a room was off limits to them until they could find the right person to provide permissions. That is the life of a cybersecurity analyst.

During my SOC career, I was continually surprised at how little time senior analysts spent doing analytic work. Most of their time was spent managing data, like pursuing data sources and sifting logs for the relevant information.

In the early 2000s, products emerged to centralize “security logs” for the security team. This technology quickly became a staple in the SOC and (after a few evolutions of naming) eventually came to be called security information and event management (SIEM). This product promised to lift the fog surrounding our data, giving teams a central place to store and analyze their organizations’ security-related information. In part one of this three-part series, we will cover the first three major phases of SIEM evolution.

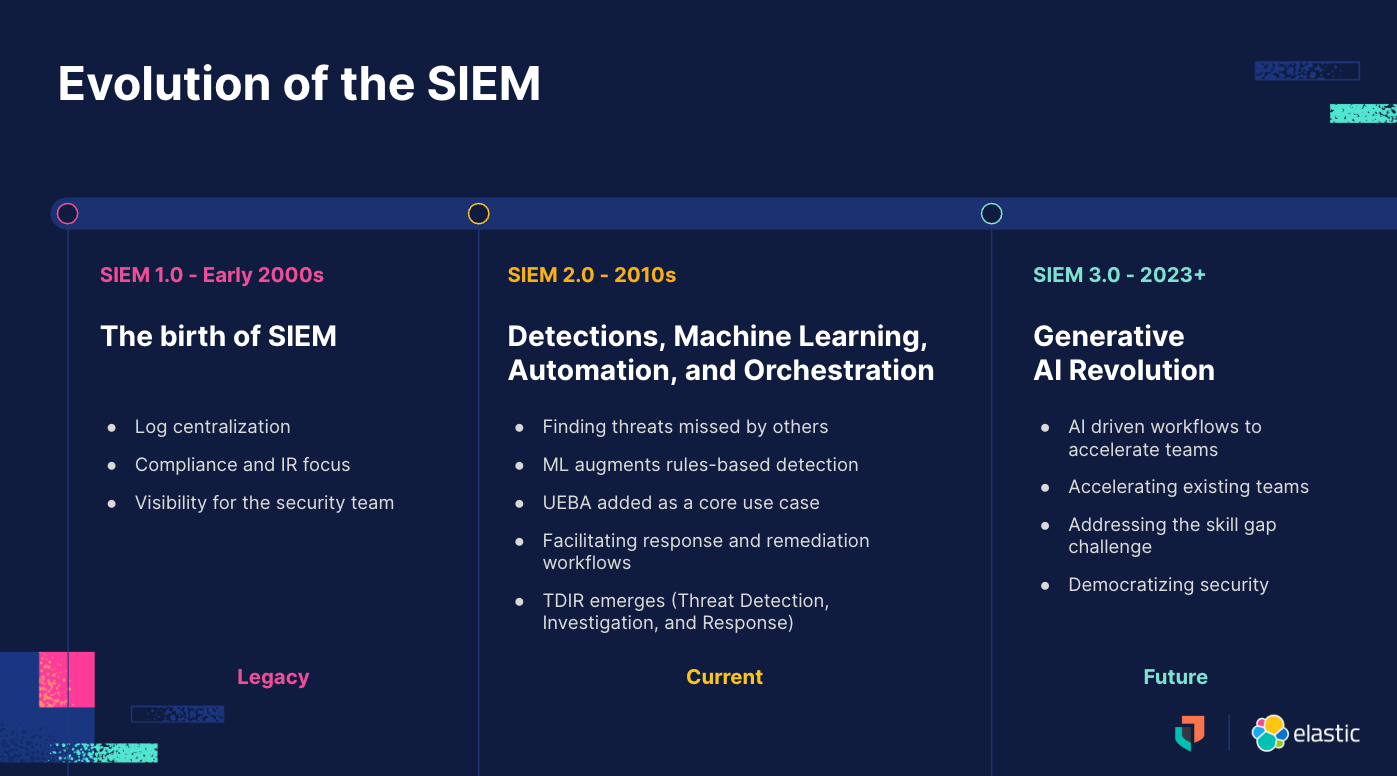

SIEM 1.0 — Early 2000s

Operational collection and compliance

This initial iteration of security log collection was defined as SEM (security event management) or SIM (security information management). It collected a combination of log data, or the digital records of system activity, along with event data. This was a game changer for analysts, as they now had a system within their control that contained the data needed to solve the digital crime. Basically, security teams now had their own data silo. This product revolution was mostly driven by a need to collect data in case something happened, like maintaining a forensic log and being able to demonstrate to auditors and investigators that indeed these logs were being collected. This compliance use case drove the adoption of central security event collection.

There were challenges with this new type of product. The SOC now needed security engineers to manage large amounts of data. They also needed the budget to collect and store this information, as they were copying data from numerous other systems into a monolithic, centralized system. But the benefits were clear: accelerating detection and remediation by slashing the time spent collecting and sorting data from across the business. Once notified of an attack, incident responders could get to work almost instantly.

SIEM 2.0 - 2010s

Detection builds upon collection

The next advance was to apply detection logic at the centralized SIEM layer. A SIEM combined the event data of a SEM with the information data of a SIM. The compliance and evidentiary collection power of SEM/SIMs was strong, but after almost a decade of just collecting and reviewing data, analysts realized they could do far more with centralized information. Instead of merely consolidating alerts from other systems and providing a central system of record for collected logs and events, SIEMs now enabled analysis across many data sources. Detection engineers could operate from a new perspective, spotting threats that may have been missed by a point solution analyzing only one data source, like your antivirus or network firewall.
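To make cross-source detection concrete, here is a minimal Python sketch. The event fields (`source`, `host`, `ts`, `signal`), the sample events, and the five-minute window are all illustrative assumptions, not any SIEM's schema or rule language. It flags a host only when two different point solutions report on it close together in time, a pattern neither product could see on its own.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical normalized events; field names and values are assumptions.
EVENTS = [
    {"source": "firewall", "host": "ws-17", "ts": datetime(2023, 5, 1, 9, 0), "signal": "outbound to rare domain"},
    {"source": "antivirus", "host": "ws-17", "ts": datetime(2023, 5, 1, 9, 3), "signal": "suspicious script blocked"},
    {"source": "firewall", "host": "ws-22", "ts": datetime(2023, 5, 1, 9, 10), "signal": "outbound to rare domain"},
    {"source": "antivirus", "host": "ws-30", "ts": datetime(2023, 5, 1, 14, 0), "signal": "suspicious script blocked"},
]

def has_cross_source_pair(host_events, window):
    """True if two events from different sources occur within `window`."""
    ordered = sorted(host_events, key=lambda e: e["ts"])
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second["ts"] - first["ts"] > window:
                break  # later events are even further away
            if second["source"] != first["source"]:
                return True
    return False

def correlate(events, window=timedelta(minutes=5)):
    """Return the hosts where at least two distinct sources fired together."""
    by_host = defaultdict(list)
    for event in events:
        by_host[event["host"]].append(event)
    return sorted(
        host for host, host_events in by_host.items()
        if has_cross_source_pair(host_events, window)
    )

print(correlate(EVENTS))
```

Only `ws-17` has both a firewall and an antivirus event inside the window, so it is the only host this sketch surfaces.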

This evolution came with plenty of challenges. SIEMs created a greater need for subject matter experts and prebuilt rules. They also centrally collected alerts from numerous point solutions, each of which already produced a lot of false positives on its own, so aggregating them compounded the problem. SIEM analysts had to review the collective network and desktop alerts, paired with an entirely new set of detection alerts from the SIEM itself. This led SIEM analysts to ask an often-repeated question: “Where do I start?” Your SIEM now contained the sum of all other system alerts in the network plus the alerts it generated on its own. Needless to say, this was incredibly overwhelming.

The promise of machine learning

Machine learning (ML) promised to improve the detection of unknown threats with less required upkeep. The goal was to identify abnormal behavior, rather than depending on hard-coded rules to find every threat.

Before ML, detection engineers had to analyze an attack that had already happened or one that could happen (courtesy of first-party research) and write detections for that potential occurrence. For example, if an attack was discovered that took advantage of specific arguments sent to a Windows process, one could write a rule looking for those arguments to be invoked on execution. But the adversary could merely change the order of arguments or invoke them differently to avoid this brittle detection. And, if there were legitimate uses of those arguments, it would take days (maybe weeks) of tuning to remove these false positives from the detection logic.
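The brittleness described above can be sketched in a few lines of Python. The argument names and command lines below are chosen only for illustration, and both functions are hypothetical stand-ins rather than a real detection rule format. The first rule matches one exact spelling and ordering; the second tolerates reordering and case changes.

```python
import shlex

# Hypothetical suspicious arguments, chosen only for illustration.
SUSPICIOUS_ARGS = {"-nop", "-enc"}

def brittle_rule(command_line: str) -> bool:
    """Matches only one exact spelling and ordering of the arguments."""
    return "-nop -enc" in command_line

def order_insensitive_rule(command_line: str) -> bool:
    """Matches when every suspicious argument appears, in any order or case."""
    tokens = {token.lower() for token in shlex.split(command_line)}
    return SUSPICIOUS_ARGS <= tokens

original = "powershell.exe -nop -enc SQBFAFgA"
reordered = "powershell.exe -ENC SQBFAFgA -NoP"

for cmd in (original, reordered):
    print(cmd, brittle_rule(cmd), order_insensitive_rule(cmd))
```

Even the second rule stays fragile: an adversary who spells an argument differently slips past it, and any legitimate script that uses both arguments becomes a false positive that someone has to tune out.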

Machine learning promised to greatly reduce this challenge, specifically in two ways:

- “Unsupervised” ML-based anomaly detection: Analysts only had to decide in what area to look for unknown behaviors, such as in logins, in process executions, and in access to S3 buckets. Then the ML engine learned the NORMAL behavior for these areas and flagged what was unusual. SANS DFIR made a famous poster in 2014 that said "Know Abnormal…Find Evil."

- Trained or “supervised” ML models: Human analysts can see something and connect the dots on how it resembles an attack they have previously observed. These experts learn how an attack took place and apply that knowledge to finding unknown attacks that follow a similar progression. Traditionally, they used this expertise in threat hunting to help find threats their security products may have missed. Now, with machine learning, they could craft trained model detections that learn from previous attacks and find new ones that behave similarly. Focusing on the behavior, and not only on atomic indicators like hashes, strings in files, and URLs, allows for detections with a longer shelf life and a higher rate of attack detection.
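As a rough illustration of the supervised idea, the sketch below trains on labeled past behavior and classifies new behavior by similarity. It uses a nearest-centroid classifier purely for brevity; that choice, the three behavioral features, and every number in the training data are assumptions for illustration, not what any SIEM product ships.

```python
from math import dist
from statistics import fmean

# Hypothetical labeled history. Each vector is assumed to be pre-scaled to 0..1:
# (failed-login ratio, share of rarely seen processes, outbound data volume).
TRAINING = [
    ((0.9, 0.8, 0.7), "attack"),
    ((0.8, 0.9, 0.9), "attack"),
    ((0.1, 0.1, 0.2), "benign"),
    ((0.2, 0.0, 0.1), "benign"),
    ((0.0, 0.2, 0.3), "benign"),
]

def train(samples):
    """Compute one centroid (mean feature vector) per label."""
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }

def classify(centroids, features):
    """Assign the label whose centroid is closest to the new behavior."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train(TRAINING)
print(classify(centroids, (0.7, 0.6, 0.8)))
print(classify(centroids, (0.1, 0.2, 0.1)))
```

Because the model compares behavior rather than hashes or URLs, a new attack that behaves like earlier ones lands near the "attack" centroid even though none of its atomic indicators have been seen before.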

The identification of abnormal activity, or outlier analysis, allowed security teams to quickly identify the “weird” and investigate it. Weird could be a user logging in from a strange location at a strange time, which sometimes would be an adversary that had stolen credentials to access the network. But sometimes it was Sally on vacation logging in to fix a network issue at 2:00 a.m. While the false positives increased, the ability to discover brand new, previously unknown threats was reason enough to devote additional effort to triaging false positives. The era of user and entity behavior analytics (UEBA) had begun, and modern SIEMs are powered by both rule-based and machine learning detection technologies.
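A minimal sketch of outlier analysis on login times follows. It learns a per-user baseline and flags logins that fall far outside it. The login history, the z-score approach, and the threshold of 3.0 are illustrative assumptions; production UEBA models are considerably more sophisticated, and this sketch ignores details such as hours wrapping around midnight.

```python
from statistics import mean, stdev

# Hypothetical login history: hour of day (0-23) for each past login.
HISTORY = {
    "sally": [9, 9, 10, 8, 9, 10, 9, 8, 10, 9],
    "omar": [22, 23, 22, 21, 23, 22, 22, 23, 21, 22],
}

def build_baseline(history):
    """Learn each user's normal login hour as a (mean, standard deviation) pair."""
    return {user: (mean(hours), stdev(hours)) for user, hours in history.items()}

def is_unusual(baseline, user, hour, threshold=3.0):
    """Flag a login whose hour is more than `threshold` deviations from normal."""
    avg, spread = baseline[user]
    if spread == 0:
        # No variation in the history: anything different is unusual.
        return hour != avg
    return abs(hour - avg) / spread > threshold

baseline = build_baseline(HISTORY)
print(is_unusual(baseline, "sally", 2))   # a 2:00 a.m. login
print(is_unusual(baseline, "sally", 10))  # a mid-morning login
print(is_unusual(baseline, "omar", 22))   # a night-shift login
```

The flag says only that the 2:00 a.m. login is abnormal for this user. Whether it is stolen credentials or Sally on vacation is still a triage decision for an analyst.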

Moving from reactive to proactive

As we’ve seen, SIEMs used to be historical reports of problems rather than actual end-to-end solutions. SIEMs could alert you to an issue, but then you were on your own to clean it up. This changed with the entrance of SOAR: security orchestration, automation, and response. This new product line was created to fill a feature gap in SIEMs. SOAR products provided a place to collect and organize the steps an analyst wanted to perform for remediation, as well as connectors to the rest of the ecosystem to initiate the response. In our police department analogy, SOARs were like traffic cops directing all the other systems to execute commands. They were the glue connecting the discovery of the attack in the SIEM to the response actions of the other systems.
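The two SOAR ingredients described above, an ordered set of remediation steps and connectors that carry them out, can be sketched as follows. The `Playbook` class and the three connector functions are hypothetical and exist only for this example; real connectors would call an endpoint, identity, or ticketing API rather than return strings.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Playbook:
    """An ordered list of response steps, each bound to a connector function."""
    name: str
    steps: list[tuple[str, Callable[[dict], str]]] = field(default_factory=list)

    def step(self, description: str, action: Callable[[dict], str]) -> "Playbook":
        """Append a step and return the playbook so calls can be chained."""
        self.steps.append((description, action))
        return self

    def run(self, alert: dict) -> list[str]:
        """Execute every step in order and return an audit trail."""
        return [f"{description}: {action(alert)}" for description, action in self.steps]

# Hypothetical connectors; they only return strings so the sketch stays runnable.
def isolate_host(alert: dict) -> str:
    return f"isolated {alert['host']}"

def disable_account(alert: dict) -> str:
    return f"disabled {alert['user']}"

def open_ticket(alert: dict) -> str:
    return f"ticket opened for rule '{alert['rule']}'"

playbook = (
    Playbook("stolen-credentials")
    .step("Contain endpoint", isolate_host)
    .step("Revoke access", disable_account)
    .step("Track incident", open_ticket)
)

alert = {"host": "ws-17", "user": "sally", "rule": "unusual login"}
for line in playbook.run(alert):
    print(line)
```

Keeping the steps as data, separate from the connectors, is what lets an analyst reorder or reuse a response plan without touching the integrations.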

Like UEBA, the ability to organize response plans and kick off actions from a central location has become an expectation of modern SIEMs. Now in the SIEM 2.0 lifecycle, it is expected that SIEMs can collect data at scale across the organization (the SIEM 1.0 foundation), detect new threats the point solutions may have missed and correlate across disparate systems using both rule-based and machine learning-based technologies, and allow for planning and execution of response plans (both SIEM 2.0 capabilities). In fact, a new acronym, TDIR (threat detection, investigation, and response), was coined to capture the ability to handle the full scope of an attack.

SIEM 3.0 — 2023 and beyond

The generative AI revolution in cybersecurity

SIEMs have become foundational in a SOC’s threat detection, triage, and investigation, despite failing to address a fundamental challenge: the massive skills shortage in cybersecurity. A March 2023 study commissioned by IBM and completed by Morning Consult found that SOC team members are “only getting to half of the alerts that they’re supposed to review within a typical workday.” That’s a 50% blind spot. Decades of incremental improvements to simplify workflows, automate routine steps, guide junior analysts, and more have helped — but not enough. With the advent of consumer-accessible generative artificial intelligence models with domain expertise in cybersecurity, this is changing quickly.

SIEMs have traditionally been very dependent on the human behind the screen: alerting, dashboarding, and threat hunting are all human-intensive operations. Even early AI efforts like AI co-pilots have depended on the ability of the analyst to use these co-pilots effectively. The revolution will happen when AI operates on behalf of the analyst, removing the requirement to “chat.” Imagine the system sifting through all the data, ignoring the irrelevant and identifying what's critical, discovering the specific attack, and crafting specific remediations, which in turn frees up your experts to focus on stopping the impact to the business.

The application of generative AI

For the first time, technology is learning from senior analysts and transferring that knowledge to junior members automatically. Generative AI now helps security practitioners develop organization-specific remediation plans, prioritize threats, write and curate detections, debug issues, and tackle other routine and time-consuming tasks. Generative AI promises to automate the feedback loop back into the SOC, enabling continual improvement day after day. We can now close the OODA (observe, orient, decide, act) loop with this automated feedback and learning.

Due to the nature of large language models (the science behind generative AI), we can finally leverage technology to reason across numerous data points, just like a human would — but with greater scale, higher speed, and broader understanding. Additionally, users can interact with large language models in natural language, rather than code or mathematics, and further reduce barriers to adoption. Never before has an analyst been able to ask questions in natural language, such as "Does my data contain any activity in any area that might represent a risk to my organization?" This is an unprecedented leap forward in the capabilities that can now be embedded in a SIEM for all members of a SOC. Generative AI has become a powerful and accurate digital SOC assistant.

The products that harness the AI revolution in security operations workflows will deliver SIEM 3.0.

Learn more about the evolution of SIEM

This blog post has reviewed the evolution of SIEM, from centrally collecting data to detecting threats at the organizational level and then to automation and orchestration for speeding up remediation. Now, in this third phase of SIEM technologies, we are finally tackling the massive skills shortage in cybersecurity.

In part two of this series, we will discuss the evolution of Elastic Security from a TDIR to the world’s first and only AI-driven security analytics offering. In the meantime, you can learn more about how security professionals have reacted to the emergence of generative AI with this ebook: Generative AI for cybersecurity: An optimistic but uncertain future. Stay tuned for part two!

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.