Zero Trust requires unified data

It’s vital to have a common understanding and shared context for complex technical topics. The previously adopted perimeter model of security has become outdated and inadequate. Zero Trust (ZT) is the current security model being designed and deployed across the US federal government. It’s important to point out that ZT is not a security solution itself. Instead, it’s a security methodology and framework that assumes threats exist both inside and outside of an environment.

The ZT security model has two parts: Zero Trust Architecture (ZTA) and Zero Trust Network Access (ZTNA). ZTA focuses on securing data and resources across an organization’s entire network, while ZTNA focuses specifically on securing access to applications and resources for remote users and third parties. Both are critical parts of the broader ZT methodology, and they must work together for the Zero Trust approach to succeed.

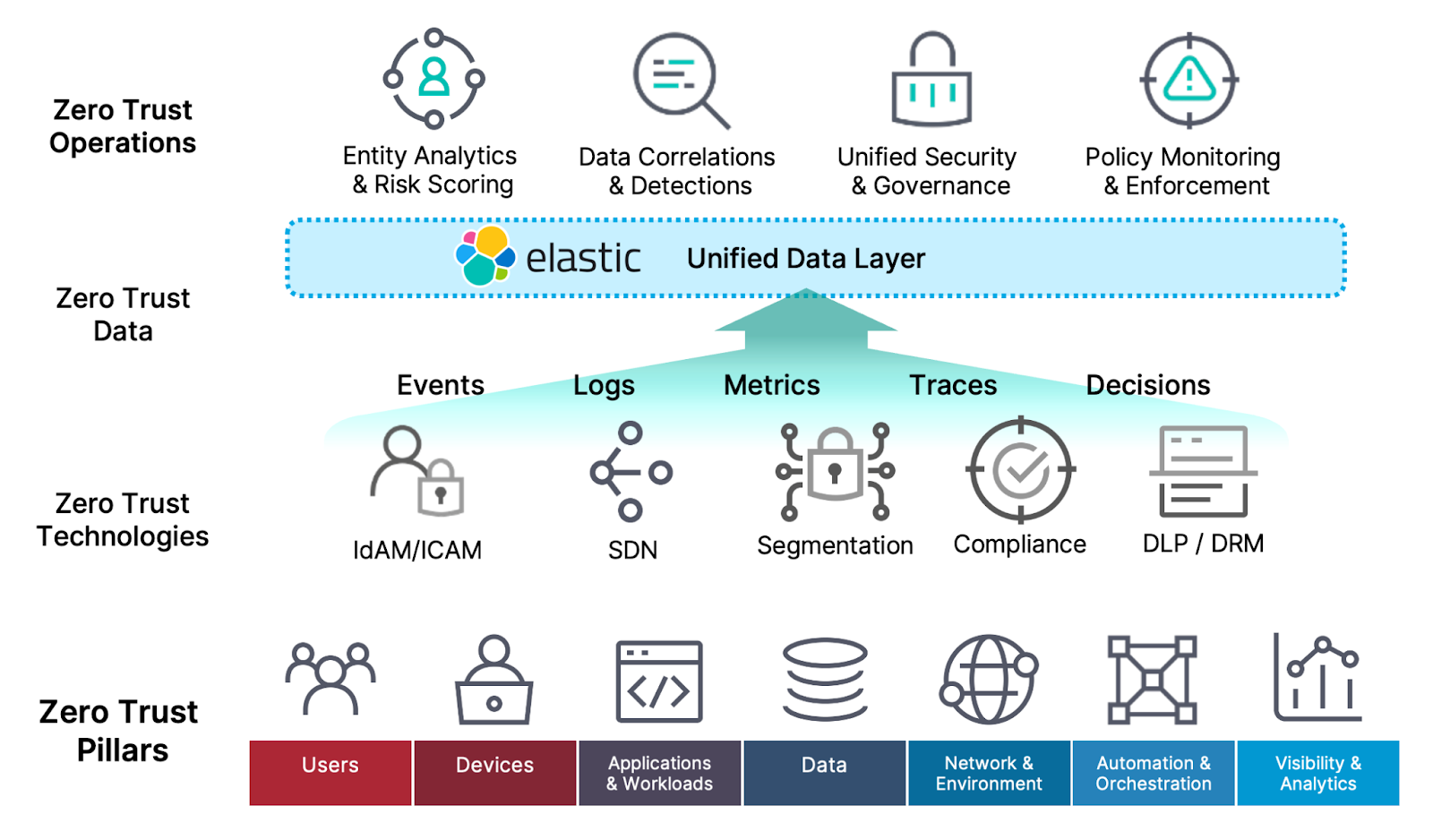

The operational surface area that a ZT methodology has to cover is huge — it encompasses the users, the endpoints they're using, the network perimeter systems, the systems routing requests internally, the applications and services being accessed, the data repositories servicing those applications, and the data itself residing within those repositories. It also includes the ZT policy components and a myriad of ancillary systems built around supporting and securing portions of those operational assets and transactions. This includes identity and permissions management systems, comply-to-connect and master data record systems, data loss prevention, user and entity behavior analysis, and many others.

Given this massive and complex surface area, it's extremely impractical (nigh impossible) to create from scratch and then maintain individual integrations to connect and share data among each of the systems, sub-systems, and applications that are involved in ZT operations. But those connection points are absolutely critical in designing an efficient, holistic approach.

Divided we fall: Disconnected data compromises speed and accuracy

A truly effective Zero Trust approach is only possible when all components and processes work together and can communicate in a shared data environment that can scale and support decisions at network speeds. The CISA Zero Trust Maturity Model states: “... cross-pillar coordination is required. However, this coordination can only be achieved with capabilities and dependencies compatible with one another and the enterprise-wide environment.” This indicates that the intention and the requirement are to observe all of these systems, users, and interactions collectively and to correlate activities across them all.

Because there is currently no Zero Trust interface standard for different portions of the IT landscape to conform to, the only viable option for achieving that level of communication across platforms, applications, networks, and boundaries is to integrate them all at the data layer. The one commonality that all components and layers of the organization share is that they all generate data. For Zero Trust to work, all of this data needs to be observable, available, and correlatable within a unified data plane.
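As a rough illustration of what integrating at the data layer can mean in practice, the Python sketch below maps records from three differently shaped sources onto one shared shape and then correlates them per user. The sample records, field names, and the five-minute window are all hypothetical, chosen only for the example; this is not a description of any particular product's ingestion pipeline.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical raw records from three separate systems, each with its own field names.
identity_log = [{"ts": "2024-05-01T12:00:00", "user": "alice", "action": "login"}]
endpoint_log = [{"time": "2024-05-01T12:00:30", "username": "alice", "posture": "noncompliant"}]
network_log = [{"timestamp": "2024-05-01T12:01:10", "src_user": "alice", "dest": "hr-db"}]


def normalize(record, source, ts_key, user_key):
    """Map a source-specific record onto a shared shape: source, time, user, details."""
    details = {k: v for k, v in record.items() if k not in (ts_key, user_key)}
    return {
        "source": source,
        "time": datetime.fromisoformat(record[ts_key]),
        "user": record[user_key],
        "details": details,
    }


def build_unified(identity, endpoint, network):
    """Merge all sources into one time-ordered list of normalized events."""
    events = [normalize(r, "identity", "ts", "user") for r in identity]
    events += [normalize(r, "endpoint", "time", "username") for r in endpoint]
    events += [normalize(r, "network", "timestamp", "src_user") for r in network]
    return sorted(events, key=lambda e: e["time"])


def correlate(events, window=timedelta(minutes=5)):
    """Return users whose events span more than one source within the time window."""
    by_user = defaultdict(list)
    for event in events:  # events are already time-ordered
        by_user[event["user"]].append(event)
    correlated = {}
    for user, user_events in by_user.items():
        span = user_events[-1]["time"] - user_events[0]["time"]
        sources = {e["source"] for e in user_events}
        if len(sources) > 1 and span <= window:
            correlated[user] = sorted(sources)
    return correlated


unified = build_unified(identity_log, endpoint_log, network_log)
correlated_users = correlate(unified)
```

Once every source lands in the same shape, a question such as "which users logged in from a noncompliant device and then touched a sensitive system" becomes a single query rather than three separate integrations.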

The defining principle of Zero Trust is "never trust, always verify," and all pillars are connected to the architecture by the data they share. That data is the foundation for the ZT decisions being made at any given time. Speed is a huge factor in making Zero Trust practical: ZT operations rely on fast and comprehensive access to all relevant data not only to make good policy-based decisions about trust in a timely manner, but also to validate that the decided-upon action was actually performed (the "always verify" part of the equation).

Zero Trust operations can't use a “fire and forget” mentality: every portion of the transaction chain needs to be monitored and analyzed to ensure the ZT policy was followed. It’s not good enough to passively collect logs to “check the box” on the security requirements.
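One way to picture the "always verify" step is as a reconciliation between what the policy engine decided and what the enforcement point actually did. The sketch below assumes, hypothetically, that both systems log a shared `request_id` and that both logs are available in the same data store; the record shapes are invented for illustration.

```python
def find_unenforced(decisions, enforcement_events):
    """Return (request_id, reason) pairs where enforcement is missing or mismatched."""
    observed = {e["request_id"]: e["action"] for e in enforcement_events}
    problems = []
    for decision in decisions:
        request_id = decision["request_id"]
        actual = observed.get(request_id)
        if actual is None:
            problems.append((request_id, "no enforcement record"))
        elif actual != decision["decision"]:
            problems.append((request_id, f"expected {decision['decision']}, saw {actual}"))
    return problems


# Hypothetical policy decisions and the actions the enforcement point reported.
decisions = [
    {"request_id": "r1", "decision": "deny"},
    {"request_id": "r2", "decision": "allow"},
    {"request_id": "r3", "decision": "deny"},
    {"request_id": "r4", "decision": "allow"},
]
enforcement_events = [
    {"request_id": "r1", "action": "deny"},
    {"request_id": "r2", "action": "allow"},
    {"request_id": "r3", "action": "allow"},  # policy said deny, traffic was allowed
    # r4 has no enforcement record at all
]

problems = find_unenforced(decisions, enforcement_events)
```

A check like this only works if decision and enforcement data are queryable side by side, which is the practical argument for a shared data layer.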

For organizations such as defense and intelligence agencies, which need strong security beyond traditional enterprise environments, Zero Trust must operate at the edge and without internet connections. In these cases, the only viable ZT model is one that works in disrupted, disconnected, intermittent, and low-bandwidth (DDIL) environments as well as it does in fully connected environments.

Together we stand: The value of a unified data layer for Zero Trust

Elastic shines when used as the unifying data foundation for Zero Trust operations. While Elastic's Search AI Platform has features, capabilities, and components that can directly support Zero Trust activities, the primary and unique value that Elastic provides for ZT is the ability to ingest data from any systems across all parts of the Zero Trust pillars (ZTA and ZTNA) into a shared data environment that operates at the speed of search.

A recent NSA white paper specifically highlights the value and importance of the data layer in a ZT architecture. Elastic can become the foundation of the Data pillar, enabling a single, unified data layer for:

Identifying and tracking valuable data throughout the organization

Identifying and adapting risk scoring to data sources and user interactions with valuable data

Labeling, tagging, cataloging, and governing data in a comprehensive way

Encrypting, protecting, and monitoring data

Providing a single data security layer for access control

Powering Zero Trust decision-making with access to all data at once

With Elasticsearch, you can index, query, and analyze disparate data streams at the volumes and speeds that Zero Trust requires. We offer hundreds of prebuilt integrations to collect data across the spectrum of services and applications, as well as the built-in query and analytics operations to enable near real-time cross-correlation on all those data streams. And because Elastic is a distributed-by-design platform, it provides the flexibility and scalability to work equally well in enterprise and DDIL environments.
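To make cross-correlation a little more concrete, here is a minimal sketch of a search request body in Elasticsearch Query DSL, built as a plain Python dictionary. It filters recent events for one user and counts them per data source. The field names follow Elastic Common Schema conventions (`user.name`, `@timestamp`, `event.dataset`), but whether your data uses them, and which index pattern you would send the request to, are assumptions you would need to check against your own deployment. The helper name `build_activity_query` is hypothetical.

```python
import json


def build_activity_query(user_name, minutes):
    """Build a search body: recent events for one user, counted per dataset."""
    return {
        "size": 0,  # return only the aggregation, not individual hits
        "query": {
            "bool": {
                "filter": [
                    {"term": {"user.name": user_name}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
        "aggs": {
            "events_per_source": {
                "terms": {"field": "event.dataset"}
            }
        },
    }


query_body = build_activity_query("alice", 15)
# Serialize to JSON, as it would be sent in the body of a _search request.
query_json = json.dumps(query_body, indent=2)
```

The resulting JSON could be sent as the body of a `_search` request against an index pattern that covers all ingested sources, so one request spans identity, endpoint, and network data at once.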

Greater than the sum

IT and security teams are often asked to “do more with less,” including with fewer security resources and less training. As the cyber landscape evolves and becomes increasingly complex, speed and agility are the foundation of success. We need to move away from limping through the daily review of thousands of simple alerts; we need our operators and analysts to focus on what matters and to be able to do so with agility and speed. Elastic provides integrated machine learning (unsupervised, supervised, and third-party model integration) to automate anomaly and pattern detection (such as behavioral, categorization, and temporal patterns) and alerting on all ingested data. The idea isn’t to replace people; it’s to make existing teams more efficient and better focused on what matters most.
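For readers unfamiliar with anomaly detection, the sketch below shows the basic idea with a deliberately simple stand-in: compare the latest value against a baseline built from recent history and flag it if it deviates by more than a chosen number of standard deviations. This is not how Elastic's machine learning jobs are implemented; the function name, the sample counts, and the threshold of 3.0 are assumptions made for the example.

```python
from statistics import mean, pstdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations from the baseline."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return latest != baseline
    return abs(latest - baseline) / spread > threshold


# Hypothetical hourly counts of file downloads by one user.
hourly_downloads = [10, 12, 11, 9, 10, 11, 10, 12, 9, 11, 10]

normal_hour = is_anomalous(hourly_downloads, 12)
spike_hour = is_anomalous(hourly_downloads, 95)
```

Here an hour with 12 downloads falls within the usual range, while an hour with 95 is flagged. The point is that an analyst reviews the one flagged hour instead of every hour.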

One of the core Zero Trust implementation principles is that you should start where you are. Elastic can be integrated with the different ZTA and ZTNA systems in a phased approach that makes use of existing investments, while steadily moving toward a unified data layer. Over time, Elastic’s search analytics platform allows those integrated systems to become the distributed data speed layer that integrates all components of the ecosystem and to power successful Zero Trust operations and decisions.

Future-proofing

Eventually, the Zero Trust design will encompass all types of data, not just events, logs, and metrics. It will have to make ZT decisions based on the human data living within our office documents and data repositories as well.

Elasticsearch is also a market-leading vector database with integrated and ever-expanding capabilities in combining semantic search modalities (vector search, NLP, NER, classification) with traditional lexical search, aggregations, and filtering operations. Lexical search is traditional keyword-based retrieval, whereas vector search enables finding information based on semantics or the meaning of the question. The combination of those two fundamental types of search (hybrid search) with the ability to aggregate and action data is extremely powerful. Elastic’s unique approach of providing an open, scalable, and general-purpose search analytics platform — with the full spectrum of data query, machine learning and AI model integrations, analysis, detection, alerting, and integration capabilities — enables organizations to future-proof all data operations and adapt easily to new sources and use cases.
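To show how lexical and vector results can be combined, the sketch below uses reciprocal rank fusion (RRF), one common technique for merging ranked lists. The text above does not say which fusion method is used, so treat RRF here as an illustrative choice. The document IDs, the two input rankings, and the constant `k=60` are assumptions for the example.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document IDs; each appearance adds 1 / (k + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for the same question from two retrieval methods.
lexical_results = ["doc_a", "doc_b", "doc_c"]  # keyword match order
vector_results = ["doc_c", "doc_a", "doc_d"]   # semantic similarity order

fused = reciprocal_rank_fusion([lexical_results, vector_results])
```

Documents that rank well in both lists (`doc_a`, `doc_c`) rise above those found by only one method, which is the practical benefit of hybrid search.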

Zero Trust is a long-term strategy and investment

Zero Trust as a practical methodology is still relatively new and evolving. The vast array of technologies waiting to be integrated into the Zero Trust framework varies widely and is unique to each organization. As noted in the CISA Zero Trust Maturity Model, “the path to zero trust is an incremental process that may take years to implement,” so it’s very likely that there will be an initial and ongoing investment required to implement the principles and maxims of Zero Trust.

The path to ZT success, given the evolving landscape and processes, requires agility. Realizing these goals is only possible if all Zero Trust operations are built upon a unified data layer providing the scalability, speed, and flexibility to adapt to the changing requirements. That is how we protect our vital information assets and operations in a rapidly changing security environment.

