What is a web crawler?

Web crawler definition

A web crawler is a digital search engine bot that uses copy and metadata to discover and index site pages. Also referred to as a spider bot, it "crawls" the world wide web (hence "spider" and "crawler") to learn what a given page is about. It then indexes the pages and stores the information for future searches.

Indexing refers to organizing data within a given schema or structure. It is a process that allows the search engine to match, with the use of indexed data, relevant search results to a query. As a result, a web crawler is a tool that facilitates web browsing.
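To make the idea of indexing concrete, here is a minimal sketch of an inverted index, one common structure for matching queries to pages. It is not how any particular search engine stores data. The page URLs and text, and the function names `tokenize`, `build_index`, and `search`, are all assumptions made for illustration.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical crawled pages: URL -> extracted text.
PAGES = {
    "https://example.com/crawlers": "A web crawler discovers and indexes pages",
    "https://example.com/scrapers": "A web scraper extracts data from pages",
}
```

Calling `search(build_index(PAGES), "web crawler")` looks up each query word in the index and intersects the matching sets, so only pages containing both words are returned.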

There is a distinction between internet web crawlers and enterprise web crawlers. An internet web crawler crawls the internet and continuously expands the crawl frontier by discovering new sites and indexing them. An enterprise web crawler crawls a given business website to index site data so the information is discoverable when queried by a user using the site's search function. It can also be used as a business tool that automates certain searches.

How does web crawling work?

Web crawling works by discovering new pages, indexing them, and then storing the information for future use. A crawler can also recrawl your content at specified intervals to keep your search results up to date.

Discovering and fetching pages

In order to gather information on as many online sites or pages as possible, a web crawler will move between links on pages.

A web crawler begins on a known URL, or seed URL, and then discovers and accesses new sites from the links on that page. It repeats this process over and over, constantly looking for new pages. Due to the enormous number of online pages, and the fact that information is continuously updated, this process can go on almost indefinitely.

Links that the crawler has found but not yet visited make up what is known as the crawl frontier. These hyperlinks are then visited in an order determined by a set of policies or crawling rules. These include selection policies, revisit policies, politeness policies, and parallelization policies.
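The seed URL and crawl frontier can be sketched as a simple breadth-first loop. This is only an illustration under stated assumptions: `fetch` is a hypothetical callable that returns a page's HTML (or `None`), and the `SITE` dictionary stands in for the network so the example runs offline. Real crawlers apply the policies named above instead of a plain first-in, first-out order.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_url, fetch, max_pages=100):
    """Breadth-first crawl from seed_url; returns URLs in visit order."""
    frontier = deque([seed_url])  # discovered but not yet visited
    seen = {seed_url}             # everything ever added to the frontier
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue  # page could not be fetched; skip it
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return visited


# Hypothetical in-memory site used in place of real HTTP requests.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c#top">C</a>',
    "https://example.com/b": '<a href="/">Home</a>',
    "https://example.com/c": "No links here",
}
```

Here `crawl("https://example.com/", SITE.get)` starts at the seed and keeps pulling URLs from the frontier until it is empty or `max_pages` is reached. The `seen` set is what stops the crawler from queuing the same page twice.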

A web crawler will consider the number of URLs linking to a given page and the number of visits to a given page — all in an effort to discover and index content that is important. The logic is that an oft-visited and cited page contains authoritative, high quality information. It is therefore important for the search engine to know the site, and to have the ability to make it discoverable.
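As a rough illustration of the first signal, the sketch below counts how many distinct pages link to each URL and orders URLs by that count. The link graph and the function name `rank_by_inlinks` are assumptions. Visit counts are left out, and production ranking signals are far more involved than a raw count.

```python
from collections import Counter


def rank_by_inlinks(link_graph):
    """Order URLs by how many distinct pages link to them, most first."""
    inlinks = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                inlinks[target] += 1
    # Sort by descending count, then by URL so ties are deterministic.
    return sorted(inlinks.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical link graph: page -> pages it links to.
LINK_GRAPH = {
    "/home": ["/pricing", "/blog"],
    "/blog": ["/pricing", "/blog/post"],
    "/blog/post": ["/pricing", "/blog"],
}
```

A crawler could use an ordering like this to decide which frontier URLs to visit first.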

Rendering, downloading, and indexing pages

Once a crawler bot discovers a new page, it renders the information on it, be it site copy or meta tags, downloads this information, and indexes it. Some web crawlers can only access or read public pages, while others have permissions to index authenticated pages. They are also beholden to robots.txt files and noindex meta tag requirements. A robots.txt file is a ledger of rules for a site, which determines what links a bot can follow and what information it can index. A noindex meta tag tells crawlers that the page it appears on should not be indexed.
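The sketch below shows one way a crawler might honor both mechanisms, using Python's standard `urllib.robotparser` for robots.txt rules and a small HTML parser for the robots meta tag. The robots.txt content, the `ExampleBot` user agent, and the helper names `build_robots` and `may_index` are assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_robots(robots_txt):
    """Parse robots.txt text into a rule checker without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RobotsMetaParser(HTMLParser):
    """Detect <meta name="robots" content="... noindex ...">."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = (attributes.get("content") or "").lower()
            directives = [d.strip() for d in content.split(",")]
            if "noindex" in directives:
                self.noindex = True


def may_index(html):
    """Return False when the page asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return not parser.noindex
```

A crawler would typically call `can_fetch` on the parsed rules before requesting a URL, and `may_index` after downloading the page but before adding it to the index.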

Rinse and repeat

The purpose of web crawlers is to index and download information about given sites. Crawlers are always expanding the crawl frontier, looking for new sites, pages, and updates. As a result, they continue to expand their search engine’s indexed data.

With the help of their spider bots, search engine algorithms can sort the indexes created by the crawlers so they can be fetched and ranked into results when queried.

Why is web crawling important?

Web crawling is important to businesses because it is key to search engine functionality. It lets search engines index information and know what sites and pages exist, so they can refer to this information when it's relevant to a query.

Discoverability

Web crawling is part of a successful SEO and search strategy, in part because it makes business websites and business information discoverable. Without an initial crawl, search engines cannot know your site or website data exists. An internal crawl of your site also helps you manage your site data, keeping it updated and relevant so the right information is discoverable when queried, and so that you reach the right audiences.

User satisfaction

Using an enterprise web crawler is also key to your business website's search functions. Because crawling indexes your site data (without the hassle), you are able to offer users a seamless search experience, and are more likely to convert them into customers.

Automation and time-saving

A web crawler automates data retrieval, and enables you to drive engagement to your website by crawling internally and externally. This way, you can focus on creating content, and making strategic changes where necessary. In short, web crawling — and your site's crawlability — is important to your business's success.

Key components of a web crawler

Web crawlers are essential search engine tools, so their specific components are considered proprietary information. They contribute to distinguishing search services and define search experience: your experience on Google is different from your experience on Yandex or Bing, for instance. Moreover, your search experience on your own website may vary from that of your competitors depending on how up-to-date, accurate, and relevant the information presented in your search results is.

Although different web crawlers work differently, be they Internet or enterprise crawler bots, they share a standard architecture and have similar capabilities. They receive a seed URL as input. From there, they can access more URLs along the crawl frontier, which is composed of a list of URLs that have yet to be visited by a crawl bot.

Based on a set of policies or crawl rules, such as politeness policies (how the bot avoids overloading a site's servers) and revisit policies (how often it checks pages for changes), the crawler will continue to visit new URLs.
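One common form of politeness policy is a minimum delay between requests to the same host. The sketch below computes when each URL may be fetched instead of actually sleeping, so it is easy to test. The class name `PolitenessScheduler` and the fixed `delay_seconds` value are assumptions, and real crawlers often adapt the delay per site.

```python
from urllib.parse import urlsplit


class PolitenessScheduler:
    """Track when each host may next be fetched, given a fixed per-host delay."""

    def __init__(self, delay_seconds=1.0):
        self.delay_seconds = delay_seconds
        self.next_allowed = {}  # host -> earliest time of the next request

    def schedule(self, url, now):
        """Return the time at which url may be fetched and reserve that slot."""
        host = urlsplit(url).netloc
        fetch_at = max(now, self.next_allowed.get(host, now))
        self.next_allowed[host] = fetch_at + self.delay_seconds
        return fetch_at


# Two URLs on the same host are spaced out; a different host is not delayed.
scheduler = PolitenessScheduler(delay_seconds=2.0)
first = scheduler.schedule("https://example.com/a", now=0.0)
second = scheduler.schedule("https://example.com/b", now=0.0)
other = scheduler.schedule("https://example.org/", now=0.0)
```

Keeping the delay per host means a crawler can stay busy across many sites while never sending rapid bursts of requests to any single one.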

From there, it must have the capacity to render the URL's information, download it at a high speed, index it, and store it in the engine for future use.

While Internet crawlers enable a web-wide search, enterprise web crawlers allow your content to be searchable on your site. Some of their capabilities include:

- Full visibility into crawl activity so you can keep track of crawl performance

- Programmability, which gives you control of the bot with flexible APIs

- Easy-to-use user interfaces

Types of web crawlers

Web crawlers can be programmed to fulfill different tasks. As such, different types of web crawlers exist.

Focused web crawler: A focused web crawler's goal is to crawl content focused on a parameter, such as content related to a single topic, or from a single type of domain. In order to do so, a focused web crawler will discern which hyperlinks to follow based on probability.

Incremental web crawler: An incremental web crawler is a type of crawler bot that revisits pages in order to update indexes. It replaces old links with new URLs where applicable. This process serves to reduce inconsistent document downloads.

Distributed crawler: Distributed crawlers spread crawling duties across multiple machines, which work on different websites simultaneously.

Parallel crawler: A parallel crawler is a type of crawl bot that runs multiple processes simultaneously — or in parallel — to increase download efficiency.
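As an illustration of the incremental approach, the sketch below compares a fingerprint of each freshly fetched page with the one stored from the previous crawl, so only new or changed pages need re-indexing. Hashing the full page content with SHA-256 is an assumption chosen for simplicity, as are the names `fingerprint` and `incremental_update`. Real crawlers may also rely on HTTP headers or other change signals.

```python
import hashlib


def fingerprint(content):
    """Return a stable hash of the page content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def incremental_update(stored, fetched):
    """Classify fetched pages against fingerprints from the previous crawl.

    stored:  dict of URL -> fingerprint (updated in place)
    fetched: dict of URL -> current page content
    Returns three URL lists: (new, changed, unchanged).
    """
    new, changed, unchanged = [], [], []
    for url in sorted(fetched):
        digest = fingerprint(fetched[url])
        if url not in stored:
            new.append(url)
        elif stored[url] != digest:
            changed.append(url)
        else:
            unchanged.append(url)
        stored[url] = digest  # remember the latest version
    return new, changed, unchanged
```

On a first crawl every page is reported as new. On later crawls, only the pages in the `new` and `changed` lists would need to be sent to the indexer.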

Popular search engine bots

The most popular crawler bots are Internet search engine bots. They include:

- Bingbot: Bing's crawler bot

- Googlebot: Google's crawler, made up of two bots, one for mobile platforms and the other for desktops

- DuckDuckBot: DuckDuckGo's bot

- Slurp: Yahoo Search's bot

- YandexBot: Yandex's bot

- Baiduspider: Baidu's search engine bot

Benefits of web crawling

While web crawling used by search engines provides a user-friendly search experience, business users benefit from web crawling in a number of ways.

The primary benefit of web crawling for business users is that it enables discoverability of their site, content, and data, and is, as such, essential to business SEO and search strategy. Crawling your site is also the easiest way to index data on your own website for your own search experience. And the good news is that a well-configured crawl runs in the background and should have little effect on your site's performance. Regular web crawling also helps you manage your site's performance and search experience, and helps ensure that it is ranking optimally.

Additional web crawling benefits include:

- Built-in reporting: Most web crawlers possess reporting or analytics features you can access. These reports can often be exported into spreadsheets or other readable formats and are helpful tools for managing your SEO and search strategy.

- Crawl parameters: As a site manager, you can set crawl frequency rules. You decide how often the spider bot crawls your site. Because the bot is automated, there is no need to manually pull crawl reports every time.

- Automated indexing: Using a web crawler on your site enables you to index your data automatically. You can control what data gets crawled and indexed, further automating the process.

- Lead generation: Crawling can help you gather insights on the market, find opportunities within it, and generate leads. As an automatic search tool, it speeds up a process that might otherwise be manual.

- Monitoring: Web crawlers can help you monitor mentions of your company on social media, and reduce your response time. When used for monitoring, a web crawler can be an effective PR tool.

Web crawling challenges and limitations

The primary challenge of web crawling is the sheer amount of data that exists and is continually produced or updated. Crawlers are continually looking for links, but are unlikely to discover everything ever produced. This is due in part to these challenges and limitations:

- Regular content updates: Search engine optimization strategies encourage companies to regularly update the content on their pages. Some companies use dynamic web pages, which automatically adjust their content based on visitor engagement. With regularly changing source code, web crawlers must revisit pages frequently in order to keep indexes up to date.

- Crawler traps: Some site structures, whether intentional or not, can trap spider bots. Separately, websites commonly use roadblocks such as robots.txt files or noindex meta tags to keep crawlers away from certain pages. Though these are intended to protect parts of a site from being crawled and indexed, misconfigured rules or endlessly generated links can trip up the crawler. When that happens, the bot can get trapped in a vicious crawling cycle that wastes the crawler's resources and your crawl budget.

- Bandwidth strain: When downloading and indexing large numbers of pages, web crawlers can consume a lot of network capacity, and therefore strain network bandwidth.

- Duplicate content: Duplicate content, whether the product of machine or human error, can result in inaccurate indexing. When crawlers visit duplicate pages, they only index and rank one page. Determining which to download and index is difficult for the bot, and counterproductive for the business.
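One partial mitigation for duplicate content is to normalize URLs before adding them to the frontier, so that trivially different addresses for the same page collapse into one. The sketch below is a minimal example under stated assumptions: the tracking parameter names in `TRACKING_PARAMS` are illustrative, and treating a trailing slash as insignificant is a simplification that does not hold for every site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of query parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url):
    """Return a canonical form of url for duplicate detection."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = parts.netloc.lower()
    path = parts.path.rstrip("/") or "/"  # assumption: trailing slash is noise
    query_pairs = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    query = urlencode(sorted(query_pairs))  # stable parameter order
    return urlunsplit((scheme, host, path, query, ""))  # drop the fragment


def deduplicate(urls):
    """Keep the first occurrence of each normalized URL, preserving order."""
    seen = set()
    unique = []
    for url in urls:
        key = normalize_url(url)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique
```

URL normalization only catches duplicates that differ in their address. Pages with identical content at unrelated URLs need content-based checks or canonical hints from the site itself.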

Web crawling vs. web scraping

The key difference between crawling and scraping is that web crawling is used for data indexing, whereas web scraping is used for data extraction.

Web scraping, also referred to as web harvesting, is generally more targeted than crawling. It can be performed on a small or large scale and is used to extract data and content from sites for market research, lead generation, or website testing. The terms web crawling and web scraping are sometimes used interchangeably, even though they describe different activities.

Where web crawlers are generally bound by rules such as robots.txt files and URL frontier policies, web scrapers may ignore permissions, download content illegally, and disregard any server strain their activities might be causing.
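To illustrate the extraction side of that distinction, the sketch below pulls a single targeted field, the page title, out of an HTML document instead of following links or building an index. The choice of field and the names `TitleScraper` and `scrape_title` are assumptions for illustration. A responsible scraper would still respect a site's rules and server capacity.

```python
from html.parser import HTMLParser


class TitleScraper(HTMLParser):
    """Extract only the text of the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_data(self, data):
        if self._in_title:
            self.title += data

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False


def scrape_title(html):
    """Return the page title, or None when the page has no <title>."""
    parser = TitleScraper()
    parser.feed(html)
    parser.close()
    return parser.title.strip() if parser.title is not None else None
```

Everything outside the targeted element is ignored, which is the main practical difference from a crawler that processes whole pages for indexing.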

Future trends in web crawling

Web crawlers are used by all search engines, and are a fairly mature technology. For this reason, few invest the time in building their own. What’s more, open-source web crawlers exist.

However, as the production of new data continues to grow exponentially, and as companies move more towards mining the possibilities of unstructured data, web crawl technology will evolve to meet the demand. Search functionalities are vital to businesses, and with the arrival of AI, enterprise web crawlers are the key to ensuring that generative AI gets the most relevant and up-to-date information by regularly crawling and indexing site data.

Businesses are also dedicating more of their budgets to web scraping to expand current use cases, which include market research, monitoring competitors, or even criminal investigations. Opimas predicts that spending will increase to $6 billion USD by 2025¹.

Web crawling with Elastic

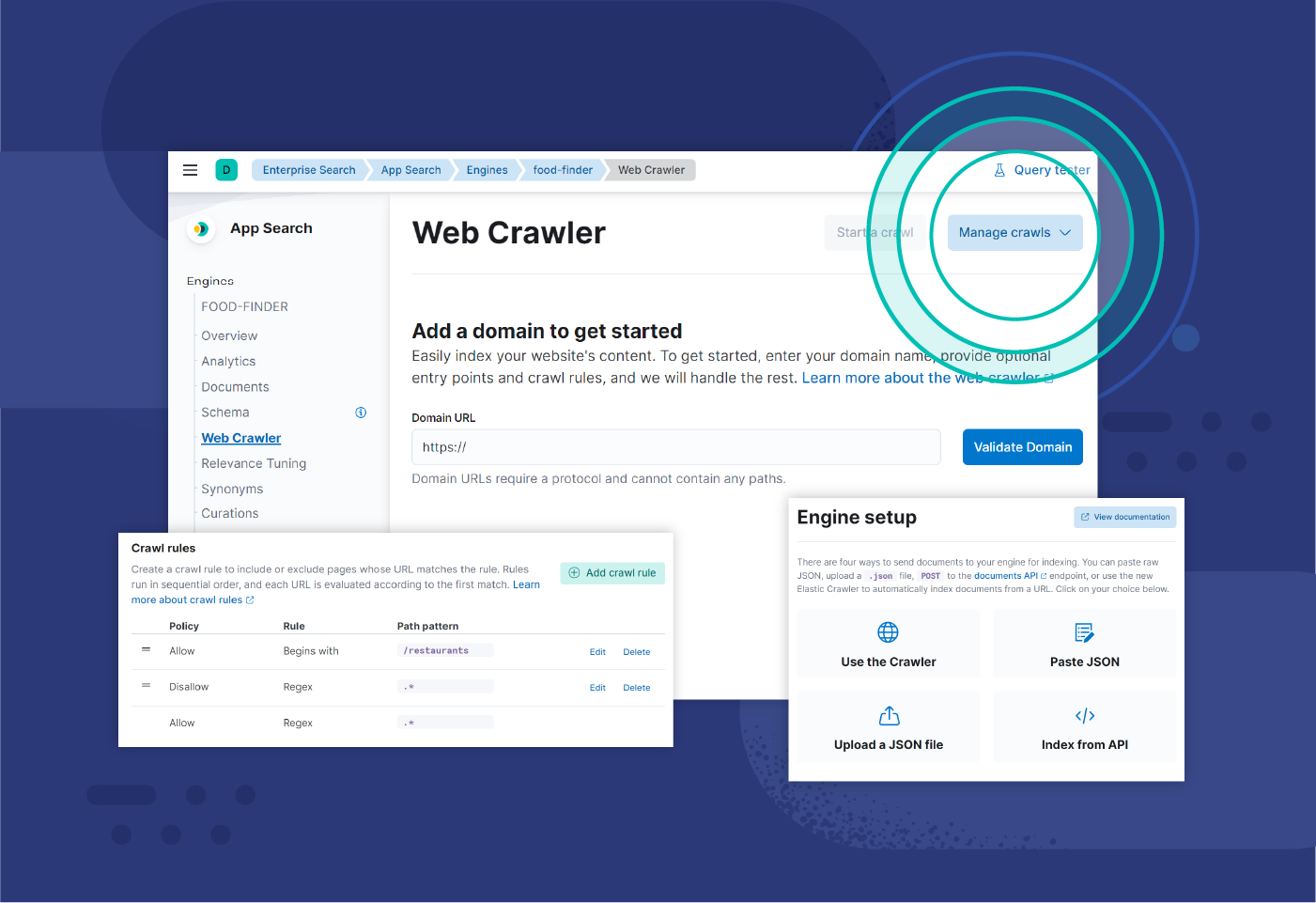

The Elastic web crawler is an Elasticsearch tool that lets developers easily index and sync content on their website. The crawler automatically handles indexing and is easy to control while being configurable and observable.

With Elastic's production-ready web crawler, you can schedule crawls to run automatically, configure rules, and crawl authenticated content and PDFs.

Footnotes

1 "What's the future of web scraping in 2023?", Apify Blog, January 2023