Ingest data directly from Google Pub/Sub into Elastic using Google Dataflow

Today we’re excited to announce the latest development in our ongoing partnership with Google Cloud. Now developers, site reliability engineers (SREs), and security analysts can ingest data from Google Pub/Sub to the Elastic Stack with just a few clicks in the Google Cloud Console. By leveraging Google Dataflow templates, Elastic makes it easy to stream events and logs from Google Cloud services like Google Cloud Audit, VPC Flow, or firewall into the Elastic Stack. This allows customers to simplify their data pipeline architecture, eliminate operational overhead, and reduce the time required for troubleshooting.

Many developers, SREs, and security analysts who use Google Cloud to develop applications and set up their infrastructure also use the Elastic Stack to troubleshoot, monitor, and identify security anomalies. Google and Elastic have worked together to provide an easy-to-use, frictionless way to ingest logs and events from applications and infrastructure in Google Cloud services to Elastic. And all of this is possible with just a few clicks in the Google Cloud Console, without ever installing any data shippers.

In this blog post, we’ll cover how to get started with agentless data ingestion from Google Pub/Sub to the Elastic Stack using Google Dataflow.

Skip the overhead

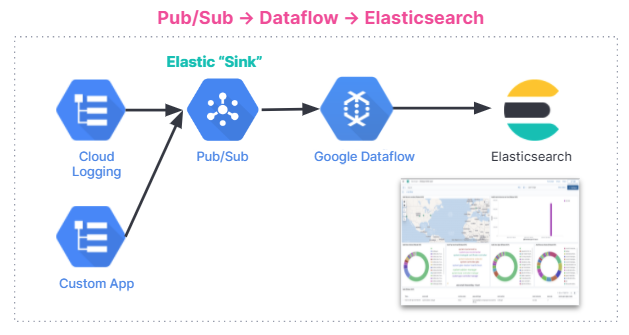

Pub/Sub is a popular serverless asynchronous messaging service used to stream data from Google Operations (formerly Stackdriver), applications built using Google Cloud services, or other use cases involving streaming data integration pipelines. Ingesting Google Cloud Audit, VPC Flow, or firewall logs to third-party analytics solutions like the Elastic Stack requires these logs to be shipped to Google Operations first, then Pub/Sub. Once the logs are in Pub/Sub, a Google Cloud user must decide on the ingestion method to ship messages stored in Google Pub/Sub to third-party analytics solutions.

A popular option for joint Google and Elastic users is to install Filebeat, Elastic Agent, or Fluentd on a Google Compute Engine VM (virtual machine), then use one of these data shippers to send data from Pub/Sub to the Elastic Stack. Provisioning a VM and installing data shippers adds process and management overhead. The ability to skip this step and ingest data directly from Pub/Sub to Elastic is valuable to many users, especially when it can be done with a few clicks in the Google Cloud Console. Now this is possible through a dropdown menu in Google Dataflow.

Streamline data ingest

Google Dataflow is a serverless data processing service based on Apache Beam. Dataflow can be used instead of Filebeat to ship logs directly from the Google Cloud Console. The Google and Elastic teams worked together to develop an out-of-the-box Dataflow template that pushes logs and events from Pub/Sub to Elastic. This template takes over, in a serverless manner, the lightweight processing (such as data format transformation) that Filebeat previously handled, with no other changes for users who already rely on Elasticsearch ingest pipelines.

In summary, logs and events flow from Google Cloud services through Google Operations to Pub/Sub, and the Dataflow template then pushes them to Elastic. The integration works for all users, regardless of whether they are using the Elastic Stack on Elastic Cloud, Elastic Cloud in the Google Cloud Marketplace, or a self-managed environment.

Get started

In this section, we’ll walk through a step-by-step tutorial on how to get started with the Dataflow template for analyzing GCP Audit Logs in the Elastic Stack.

Audit logs contain information that helps you answer the "where, how, and when" of operational changes that happen in your Google Cloud account. With our Pub/Sub template, you can stream audit logs from GCP to Elasticsearch and gather insights within seconds.

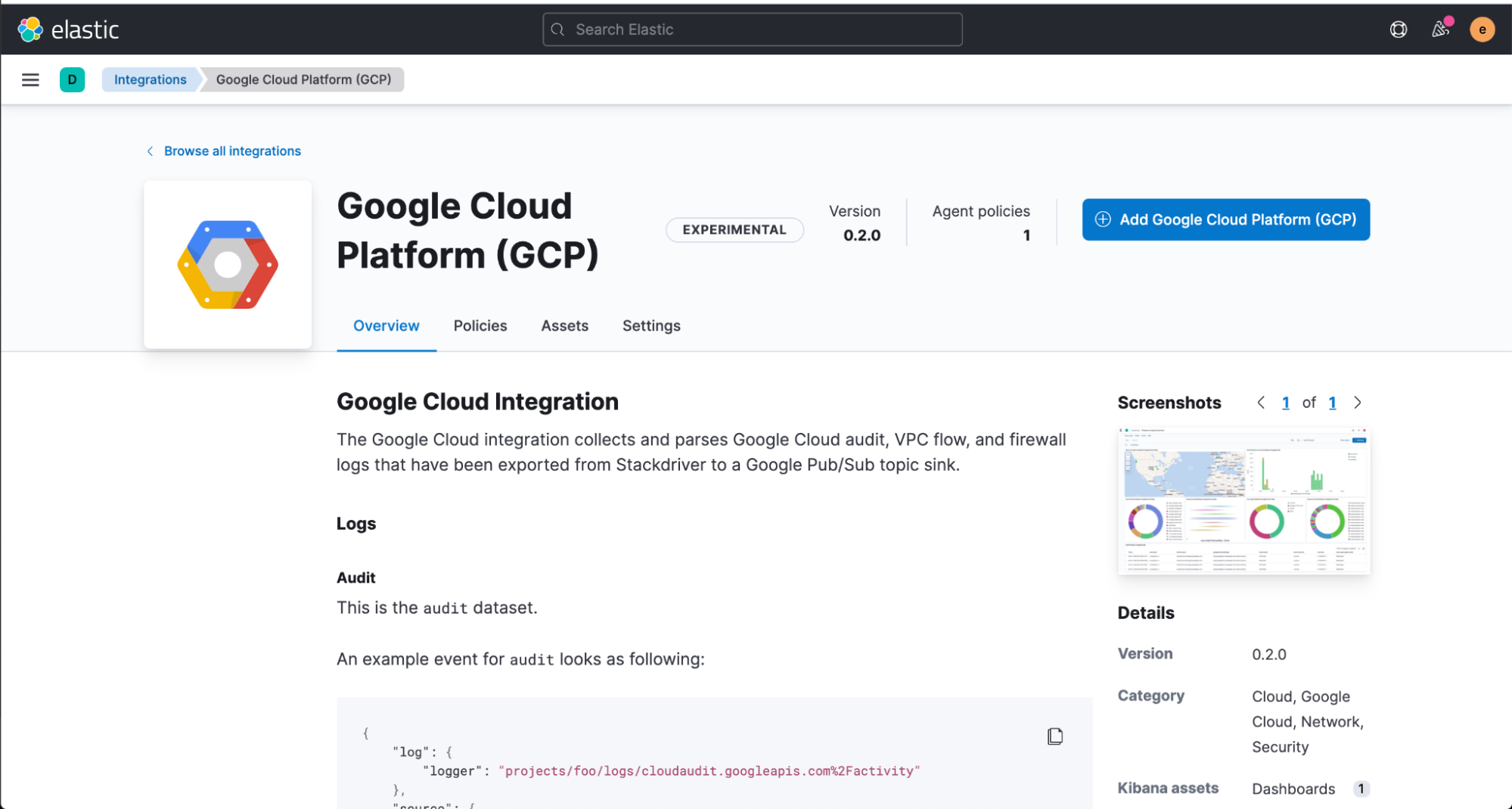

We’ll start by installing the Elastic GCP integration straight from the Kibana web UI. The integration contains prebuilt dashboards, ingest node configurations, and other assets that help you get the most out of the audit logs you ingest.

Before configuring the Dataflow template, create a Pub/Sub topic and subscription in the Google Cloud Console to receive the logs you send from Google Operations Suite.

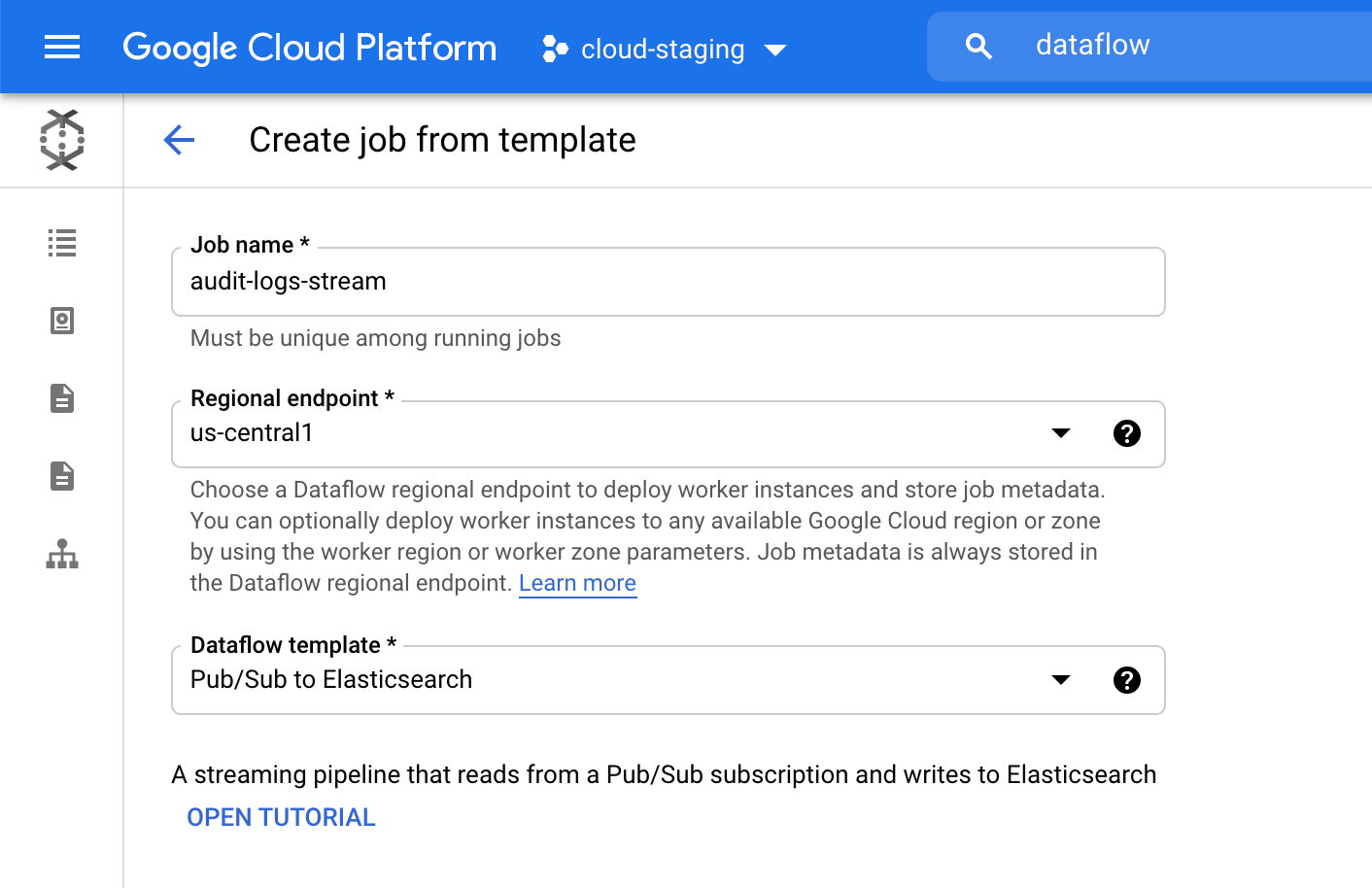
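If you prefer to script this step rather than click through the Console, the topic, subscription, and log routing can also be created with the gcloud CLI. The sketch below only builds the commands and prints them so you can review them first. The project, topic, subscription, and sink names are hypothetical placeholders, and the audit-log filter is an assumption that you should adjust to the logs you actually want to route.

```python
import shlex


def pubsub_setup_commands(project, topic, subscription, sink):
    """Build gcloud commands for a topic, a subscription, and a log sink.

    All names passed in are placeholders chosen by the caller.
    """
    topic_path = f"projects/{project}/topics/{topic}"
    # Assumption: Cloud Audit Logs are matched by this log-name substring.
    audit_filter = 'logName:"cloudaudit.googleapis.com"'
    return [
        # 1. Topic that will receive the exported log entries.
        ["gcloud", "pubsub", "topics", "create", topic, f"--project={project}"],
        # 2. Subscription that the Dataflow job will read from.
        ["gcloud", "pubsub", "subscriptions", "create", subscription,
         f"--topic={topic}", f"--project={project}"],
        # 3. Log sink that routes matching entries to the topic.
        ["gcloud", "logging", "sinks", "create", sink,
         f"pubsub.googleapis.com/{topic_path}",
         f"--log-filter={audit_filter}", f"--project={project}"],
    ]


if __name__ == "__main__":
    commands = pubsub_setup_commands(
        "my-project", "audit-logs", "audit-logs-sub", "audit-logs-sink"
    )
    for command in commands:
        # shlex.join quotes arguments so each line can be pasted into a shell.
        print(shlex.join(command))
```

After the sink is created, its writer identity still needs permission to publish to the topic, so plan for that extra step if you script the setup.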

Next, navigate to the Google Cloud Console to configure our Dataflow job.

In the Dataflow product, click “Create job from template” and select "Pub/Sub to Elasticsearch" from the Dataflow template dropdown menu.

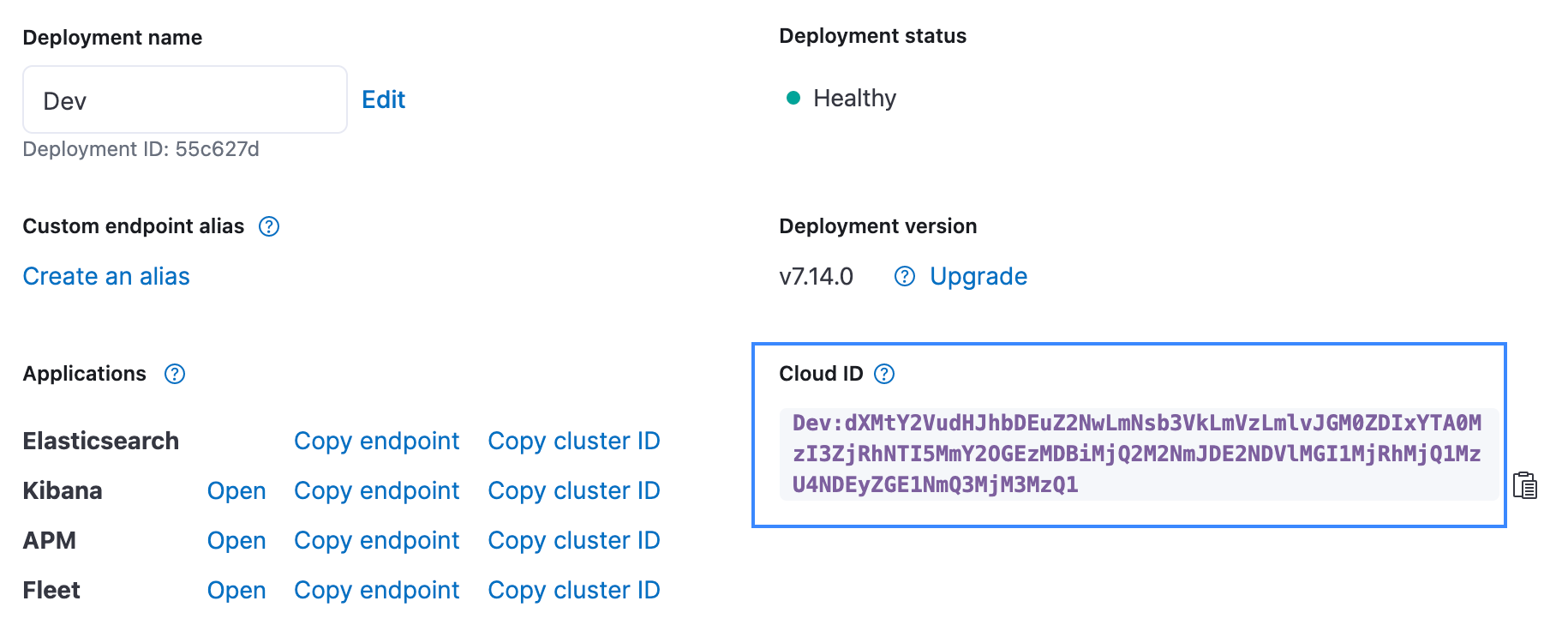

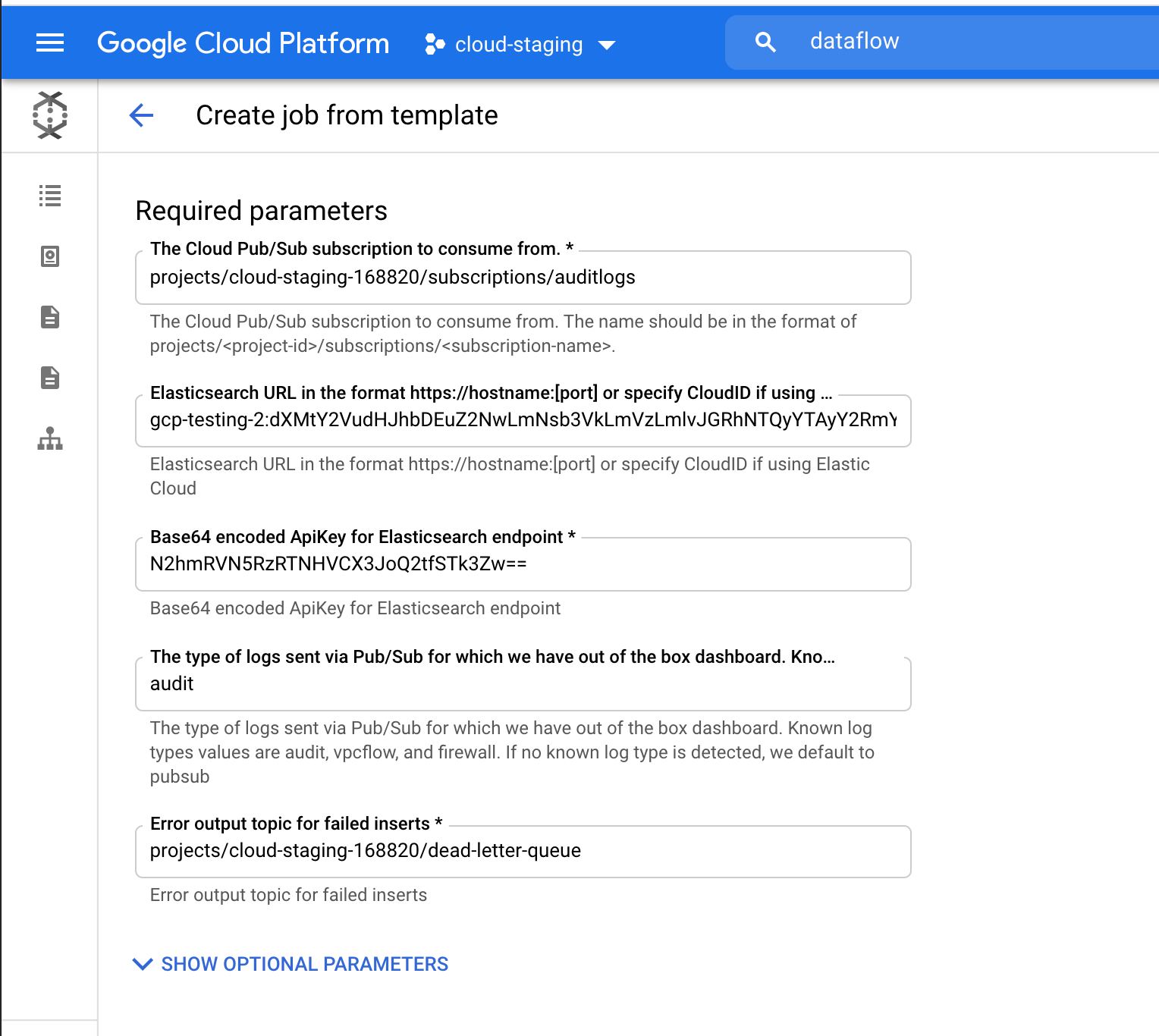

Fill in the required parameters, including your Cloud ID and Base64-encoded API key for Elasticsearch. Since we are streaming audit logs, add “audit” as the log type parameter. The Cloud ID can be found in the Elastic Cloud UI as shown below. The API key can be created using the Create API key API.
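The Base64-encoded API key mentioned above is the Base64 encoding of the `id` and `api_key` values from the Create API key response, joined by a colon. Recent Elasticsearch versions may also return this value directly in an `encoded` field, in which case no extra work is needed. A minimal sketch of the encoding, using placeholder values rather than real credentials:

```python
import base64


def encode_api_key(key_id, api_key):
    """Return the Base64 encoding of "id:api_key" as an ASCII string."""
    raw = f"{key_id}:{api_key}".encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    # Placeholder values; substitute the id and api_key from your own response.
    print(encode_api_key("example-id", "example-secret"))
```

Treat the encoded value as a secret: anyone who has it can authenticate with the privileges granted to that key.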

Click “Run Job” and wait for Dataflow to execute the template, which takes a few minutes. As you can see, you don’t need to leave the Google Cloud Console or manage agents!

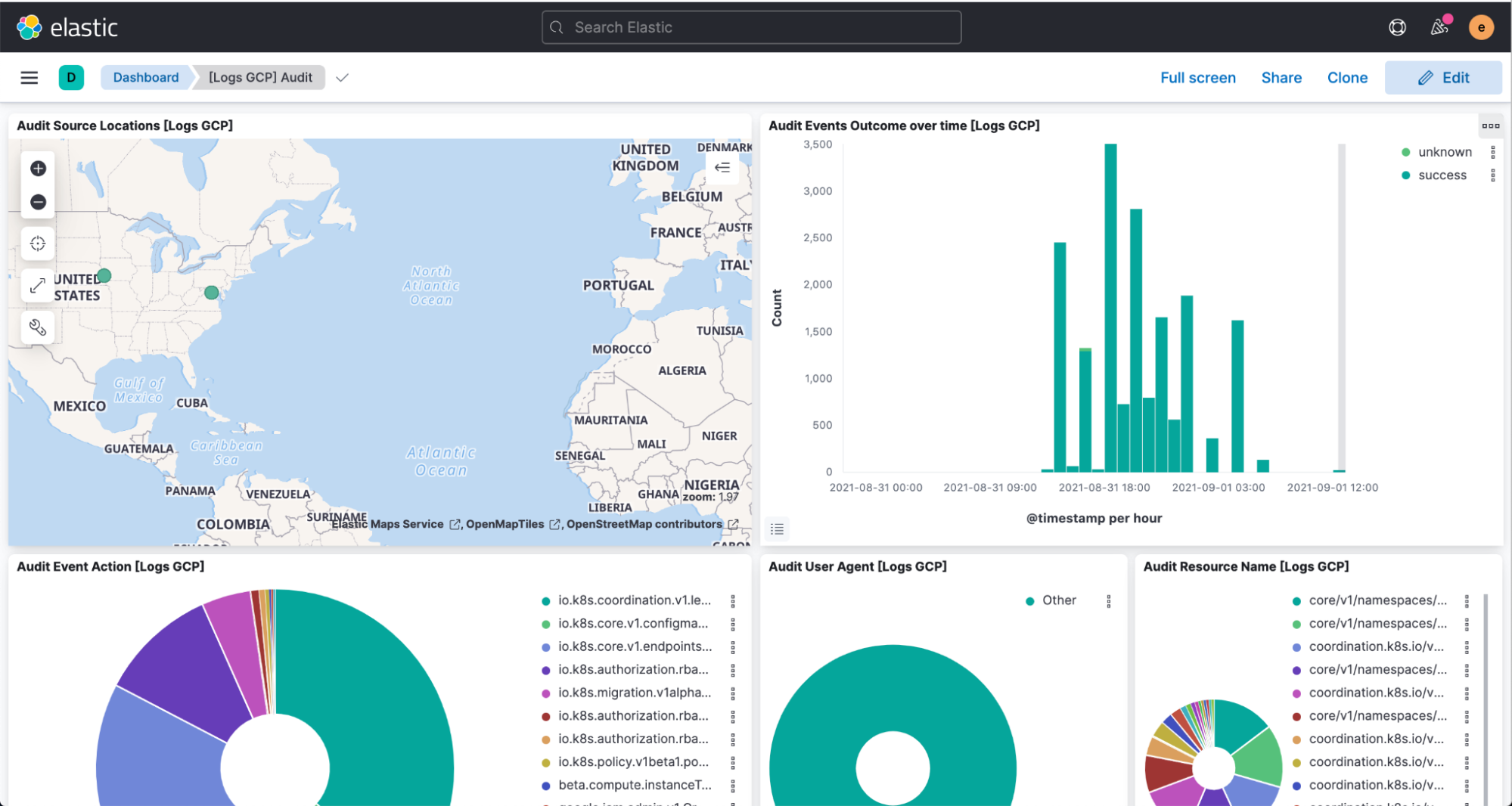
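For repeatable deployments, the same job can likely be launched from the gcloud CLI instead of the Console form. The sketch below assembles a `gcloud dataflow flex-template run` command without executing it. The template path and the parameter names (`inputSubscription`, `connectionUrl`, `apiKey`, `dataset`, `errorOutputTopic`) are assumptions based on how Google publishes its provided templates, so check them against Google’s documentation for the Pub/Sub to Elasticsearch template before running anything. All resource names are hypothetical.

```python
import shlex


def dataflow_run_command(job_name, project, region, subscription,
                         connection_url, api_key, error_topic, dataset="audit"):
    """Build a gcloud command that launches the Pub/Sub to Elasticsearch job."""
    # Assumed parameter names; verify against the template's documentation.
    params = {
        "inputSubscription": f"projects/{project}/subscriptions/{subscription}",
        "connectionUrl": connection_url,  # Cloud ID or Elasticsearch URL
        "apiKey": api_key,                # Base64-encoded API key
        "dataset": dataset,               # log type, "audit" in this tutorial
        "errorOutputTopic": f"projects/{project}/topics/{error_topic}",
    }
    # Assumed location of the Google-provided template.
    template = f"gs://dataflow-templates-{region}/latest/flex/PubSub_to_Elasticsearch"
    return [
        "gcloud", "dataflow", "flex-template", "run", job_name,
        f"--project={project}",
        f"--region={region}",
        f"--template-file-gcs-location={template}",
        "--parameters=" + ",".join(f"{k}={v}" for k, v in params.items()),
    ]


if __name__ == "__main__":
    command = dataflow_run_command(
        "audit-to-elastic", "my-project", "us-central1", "audit-logs-sub",
        "my-cloud-id", "my-encoded-api-key", "audit-logs-errors",
    )
    print(shlex.join(command))
```

Printing the command first makes it easy to confirm that no secret ends up in shell history or logs unintentionally.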

Now, navigate to Kibana to see your logs parsed and visualized in the [Logs GCP] dashboard.
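Besides the dashboard, a quick way to confirm that events are arriving is to ask Elasticsearch how many documents it holds for the audit data. The sketch below builds a request against the `_count` API, authenticated with the same Base64-encoded API key. The index pattern `logs-gcp.audit-*` is an assumption about where the integration writes audit events, the URL is a placeholder, and the key must have read access to those indices.

```python
import json
import urllib.request


def build_count_request(es_url, encoded_api_key, index_pattern="logs-gcp.audit-*"):
    """Build (but do not send) a GET request to the Elasticsearch _count API."""
    url = f"{es_url.rstrip('/')}/{index_pattern}/_count"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"ApiKey {encoded_api_key}"},
        method="GET",
    )


def count_documents(request):
    """Send the request and return the document count from the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)["count"]


if __name__ == "__main__":
    # Placeholder endpoint and key; nothing is sent over the network here.
    request = build_count_request("https://example.invalid:9243", "my-encoded-api-key")
    print(request.get_method(), request.full_url)
```

Calling `count_documents(request)` with a real endpoint a few minutes after the job starts should return a growing number if logs are flowing.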

Wrapping up

Elastic is constantly making it easier and more frictionless for customers to run where they want and use what they want — and this streamlined integration with Google Cloud is the latest example of that. Elastic Cloud extends the value of the Elastic Stack, allowing customers to do more, faster, making it the best way to experience our platform. For more information on the integration, visit Google’s documentation. To get started using Elastic on Google Cloud, visit the Google Cloud Marketplace or elastic.co.