Crafting prompt sandwiches for generative AI

Large language models (LLMs) can give notoriously inconsistent responses when asked the same question multiple times. For example, if you ask for help writing an Elasticsearch® query, the generated query is sometimes wrapped in an API call, even though you didn’t ask for one. This variability, sometimes subtle and sometimes dramatic, adds complexity when integrating generative AI into analyst workflows that expect responses in a specific format, such as queries. In the short term, LLM providers may offer a patchwork of vendor-specific techniques for improving the repeatability of responses.

Fortunately, in addition to these emerging vendor-specific techniques, there's a universal pattern for structuring prompts at the start of a conversation to steer it in the right direction. We'll craft a prompt sandwich to improve the quality and consistency of chats with your preferred LLM. We'll explore the parts of a prompt sandwich and illustrate its practical application in the Elastic AI Assistant.

The Elastic AI Assistant integrates Elastic Security detection alerts, events, rules, and data quality checks as context for generative AI chats. It helps you build prompt sandwiches and reduces privacy risks through field-level pseudonymization of values.


Beginning a new conversation with a prompt sandwich works with the most popular LLMs and sets the tone for high-quality, consistent responses because it:

- Steers the conversation and role of the assistant

- Provides context

- Asks a question or makes a request

Consider the following example of a prompt sandwich:

You are an expert in Elastic Security. I have an alert for a user named alice. How should I investigate it?

This prompt can be deconstructed into three vertically stacked parts, resembling a sandwich:

     _______________________________________________
    /                                               \
    | You are an expert in Elastic Security.        |  (1)
    \_______________________________________________/
    +-----------------------------------------------+
    | I have an alert for a user named alice.       |  (2)
    +-----------------------------------------------+
    /                                               \
    | How should I investigate it?                  |  (3)
    \_______________________________________________/

Each layer of the prompt sandwich has a name and a specific purpose:

- (1) System prompt

- (2) Context

- (3) User prompt

Each layer's unique purpose is outlined below:

| Layer | Purpose | Example |
|---|---|---|
| System prompt | Steers the conversation and role of the assistant | You are an expert in Elastic Security. |
| Context | Data or metadata, which may be structured or freeform | I have an alert for a user named alice. |
| User prompt | Asks a question or makes a request | How should I investigate it? |

When building and tuning prompts for repeatability, this three-layer format provides guidance on how to structure prompts and which parts to modify first.
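To make the three layers concrete, here is a minimal Python sketch that assembles a prompt sandwich into the role/content message list that many chat-style LLM APIs accept. The `PromptSandwich` class and its `to_messages` method are hypothetical names for illustration, and the message shape (a `system` message followed by one `user` message carrying the context and the question) is an assumption; check your provider's documentation for the format it actually expects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptSandwich:
    """Hypothetical container for the three layers of a prompt sandwich."""

    system_prompt: str  # (1) steers the conversation and the assistant's role
    context: str        # (2) data or metadata, structured or freeform
    user_prompt: str    # (3) the question or request

    def to_messages(self) -> list[dict[str, str]]:
        # Assumed message shape: a "system" message, then a single "user"
        # message with the context placed above the question.
        return [
            {"role": "system", "content": self.system_prompt},
            {"role": "user", "content": f"{self.context}\n\n{self.user_prompt}"},
        ]


sandwich = PromptSandwich(
    system_prompt="You are an expert in Elastic Security.",
    context="I have an alert for a user named alice.",
    user_prompt="How should I investigate it?",
)

for message in sandwich.to_messages():
    print(f"{message['role']}: {message['content']!r}")
```

Keeping the layers as separate fields, rather than one concatenated string, makes it easy to change one layer (usually the user prompt) while holding the others fixed.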

System prompt

System prompts, the top layer of the sandwich, frame the conversation and the assistant's role, using universal techniques like role prompting.

One system prompt may be combined with many different contexts and user prompts.

When building new prompts or tuning existing prompts to get more consistent responses, it’s often better to start with an existing system prompt and add specific instructions to the user prompt. System prompts are more reusable when details are kept out until they are necessary.
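The following sketch illustrates that advice: one unchanged, detail-free system prompt is paired with several contexts and user prompts, and a task-specific instruction is appended to the user prompt instead of being baked into the system prompt. `build_messages` is a hypothetical helper, the extra instruction is an invented example, and the role/content message format is an assumption modeled on common chat APIs.

```python
# Reused, detail-free system prompt shared by every conversation.
SYSTEM_PROMPT = "You are an expert in Elastic Security."


def build_messages(context: str, user_prompt: str, instruction: str = "") -> list[dict[str, str]]:
    """Hypothetical helper: pair the shared system prompt with one context and user prompt."""
    # Task-specific instructions go into the user prompt, keeping SYSTEM_PROMPT reusable.
    question = f"{user_prompt} {instruction}".strip()
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"{context}\n\n{question}"},
    ]


conversations = [
    build_messages("I have an alert for a user named alice.", "How should I investigate it?"),
    build_messages(
        "I have an alert for a user named alice.",
        "Summarize this alert.",
        instruction="Limit the summary to three sentences.",  # invented example instruction
    ),
]

# Every conversation starts from the same system prompt.
assert all(messages[0]["content"] == SYSTEM_PROMPT for messages in conversations)
print(conversations[1][1]["content"])
```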

Reasons to consider modifying or creating a new system prompt include:

- Repeating the same instructions in numerous user prompts

- Requesting the inclusion or exclusion of categories of answers

- Needing to explain results to diverse audiences, such as security analysts or executives
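When results must be explained to different audiences, one option is to derive a small set of system prompts from a shared base instead of repeating audience instructions in every user prompt. The sketch below assumes this approach; the audience names and prompt wording are hypothetical examples, not prompts that ship with any product.

```python
BASE_SYSTEM_PROMPT = "You are an expert in Elastic Security."

# Hypothetical audience-specific instructions layered on top of the shared base.
AUDIENCE_INSTRUCTIONS = {
    "analyst": "Include the specific fields, queries, and investigation steps an analyst would need.",
    "executive": "Avoid jargon and focus on business impact and recommended decisions.",
}


def system_prompt_for(audience: str) -> str:
    """Return a system prompt tailored to the audience, or the base prompt if unknown."""
    instruction = AUDIENCE_INSTRUCTIONS.get(audience)
    if instruction is None:
        return BASE_SYSTEM_PROMPT
    return f"{BASE_SYSTEM_PROMPT} {instruction}"


for audience in ("analyst", "executive", "auditor"):
    print(f"{audience}: {system_prompt_for(audience)}")
```

Falling back to the base prompt for unknown audiences keeps every conversation anchored to the same role, even when no tailored variant exists.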

Context

Context, the sandwich's middle layer, is typically data or metadata. It’s crucial to keep data privacy in mind when providing context in prompts to third-party LLMs. Most popular LLMs accept context data or metadata in structured formats like comma-separated values or as unstructured text.
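As an illustration of structured context, the sketch below serializes a few alert fields as comma-separated values with Python's standard `csv` module. The alert dictionary and its values are made up for this example; `user.name`, `host.name`, and `event.action` are Elastic Common Schema (ECS) field names, but the fields a real alert contains will vary, and `alert_to_csv` is a hypothetical helper.

```python
import csv
import io

# Hypothetical alert fields; real alerts contain many more fields.
alert = {
    "user.name": "alice",
    "host.name": "workstation-01",
    "event.action": "start",
}


def alert_to_csv(fields: dict[str, str]) -> str:
    """Serialize alert fields as two-column CSV (field,value) for use as prompt context."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["field", "value"])
    for name, value in fields.items():
        writer.writerow([name, value])
    return buffer.getvalue()


context = alert_to_csv(alert)
print(context)
```

Using `csv.writer` rather than joining strings by hand means values containing commas or quotes are escaped correctly.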

Context data may contain information that could potentially identify an individual. To reduce privacy risks, specific values may be replaced before they are sent as context in a chat. For example, jem, the value of a user.name field, may be replaced with a newly generated unique ID like 3a4a99d0-60a5-4ab9-99dd-b6786da5b8b2 when user.name is sent as context to the LLM. When a response is received from the LLM, the unique ID is replaced with the original value. This technique is an example of pseudonymization because re-identification of the data subject is impractical without additional information.
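The replace-then-restore round trip described above can be sketched in a few lines of Python. This is a minimal illustration, assuming plain string substitution and random UUIDs from the standard `uuid` module; `pseudonymize` and `restore` are hypothetical helpers, not the Elastic AI Assistant's implementation.

```python
import uuid


def pseudonymize(fields: dict[str, str], to_anonymize: set[str]) -> tuple[dict[str, str], dict[str, str]]:
    """Replace selected field values with random UUIDs.

    Returns the pseudonymized fields and a mapping of UUID -> original value,
    which stays local and is never sent to the LLM.
    """
    replacements: dict[str, str] = {}
    pseudonymized: dict[str, str] = {}
    for name, value in fields.items():
        if name in to_anonymize:
            pseudonym = str(uuid.uuid4())
            replacements[pseudonym] = value
            pseudonymized[name] = pseudonym
        else:
            pseudonymized[name] = value
    return pseudonymized, replacements


def restore(text: str, replacements: dict[str, str]) -> str:
    """Swap each UUID in an LLM response back to its original value."""
    for pseudonym, original in replacements.items():
        text = text.replace(pseudonym, original)
    return text


fields = {"user.name": "jem", "host.name": "workstation-01"}
sent, replacements = pseudonymize(fields, to_anonymize={"user.name"})
print(sent)  # user.name is now a UUID; host.name is unchanged

# Simulated LLM response that mentions the pseudonym.
response = f"Review recent logins for user {sent['user.name']}."
print(restore(response, replacements))  # prints: Review recent logins for user jem.
```

Because the UUID-to-value mapping never leaves the client, the LLM only ever sees the pseudonym.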

Later, we'll discuss how the Elastic AI Assistant's data anonymization feature offers customizable field-level options for including or excluding fields and generating pseudonymized values for some types of structured context, such as alerts.

User prompts

User prompts are the sandwich's bottom layer, where we ask questions such as "How should I investigate it?" or make requests such as "Summarize this alert."

When optimizing prompts for repeatability, consider requesting output in a specific format. For example, you can add the instruction "Format your output in markdown syntax." to a user prompt.
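A minimal sketch of that tip, assuming the format instruction is simply appended to the end of the user prompt, the layer the next paragraph recommends tuning first. `with_format_instruction` is a hypothetical helper; it skips the instruction if the prompt already contains it, so repeated tuning passes don't stack duplicates.

```python
FORMAT_INSTRUCTION = "Format your output in markdown syntax."


def with_format_instruction(user_prompt: str, instruction: str = FORMAT_INSTRUCTION) -> str:
    """Append an output-format instruction to a user prompt, avoiding duplicates."""
    prompt = user_prompt.rstrip()
    if instruction in prompt:
        return prompt
    return f"{prompt} {instruction}"


print(with_format_instruction("Summarize this alert."))
# prints: Summarize this alert. Format your output in markdown syntax.
```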

Typically, you'll create many more user prompts than system prompts. When refining prompts for consistency, the user prompt should be your starting point.

Building repeatable prompts in the Elastic AI Assistant

Now that you understand how to identify the layers in a prompt sandwich, let's examine a practical application of the technique to build repeatable prompts in the Elastic AI Assistant.

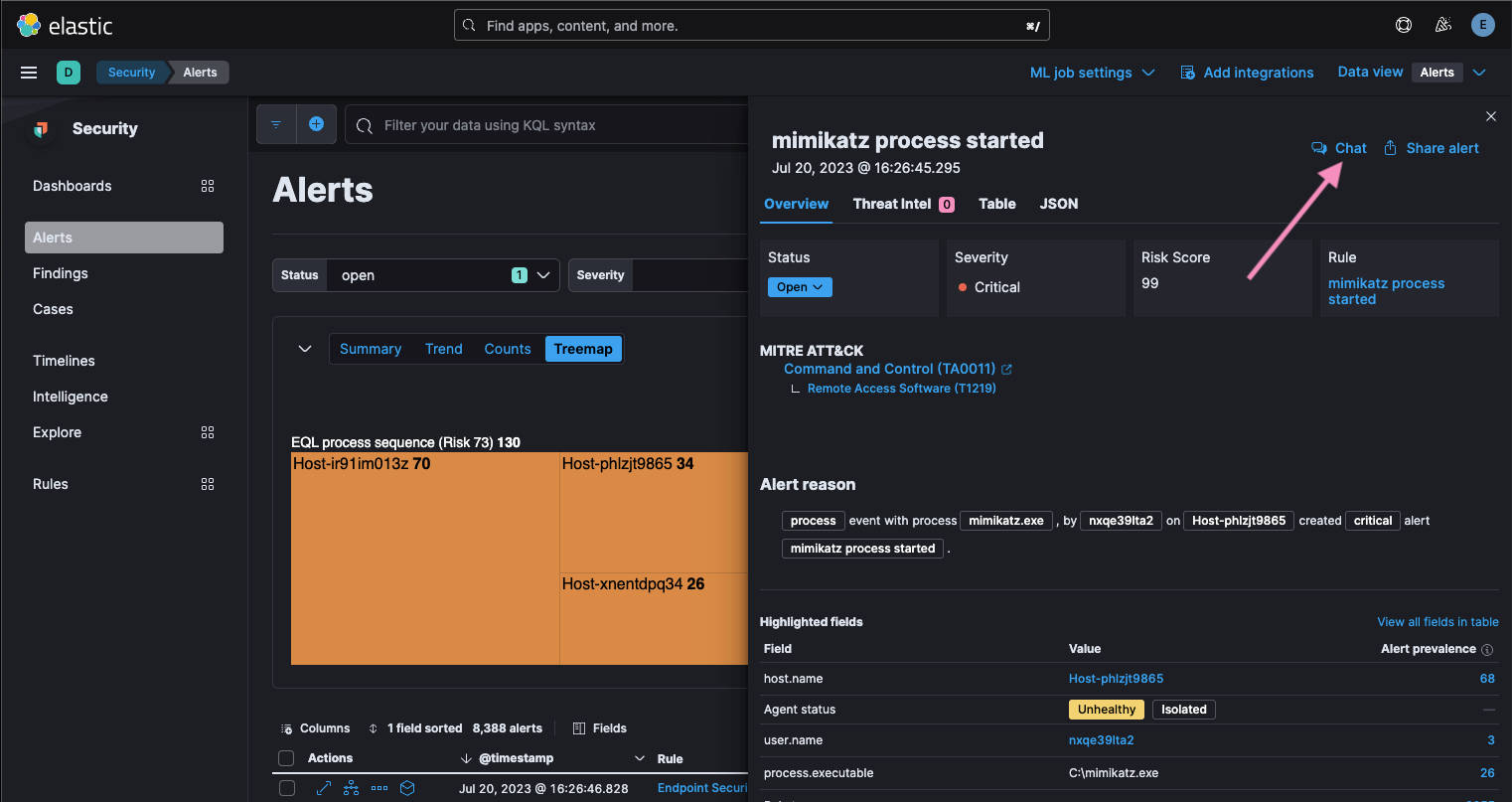

Clicking the Chat button throughout Elastic Security brings data, such as the example alert in the screenshot below, as context into a chat:

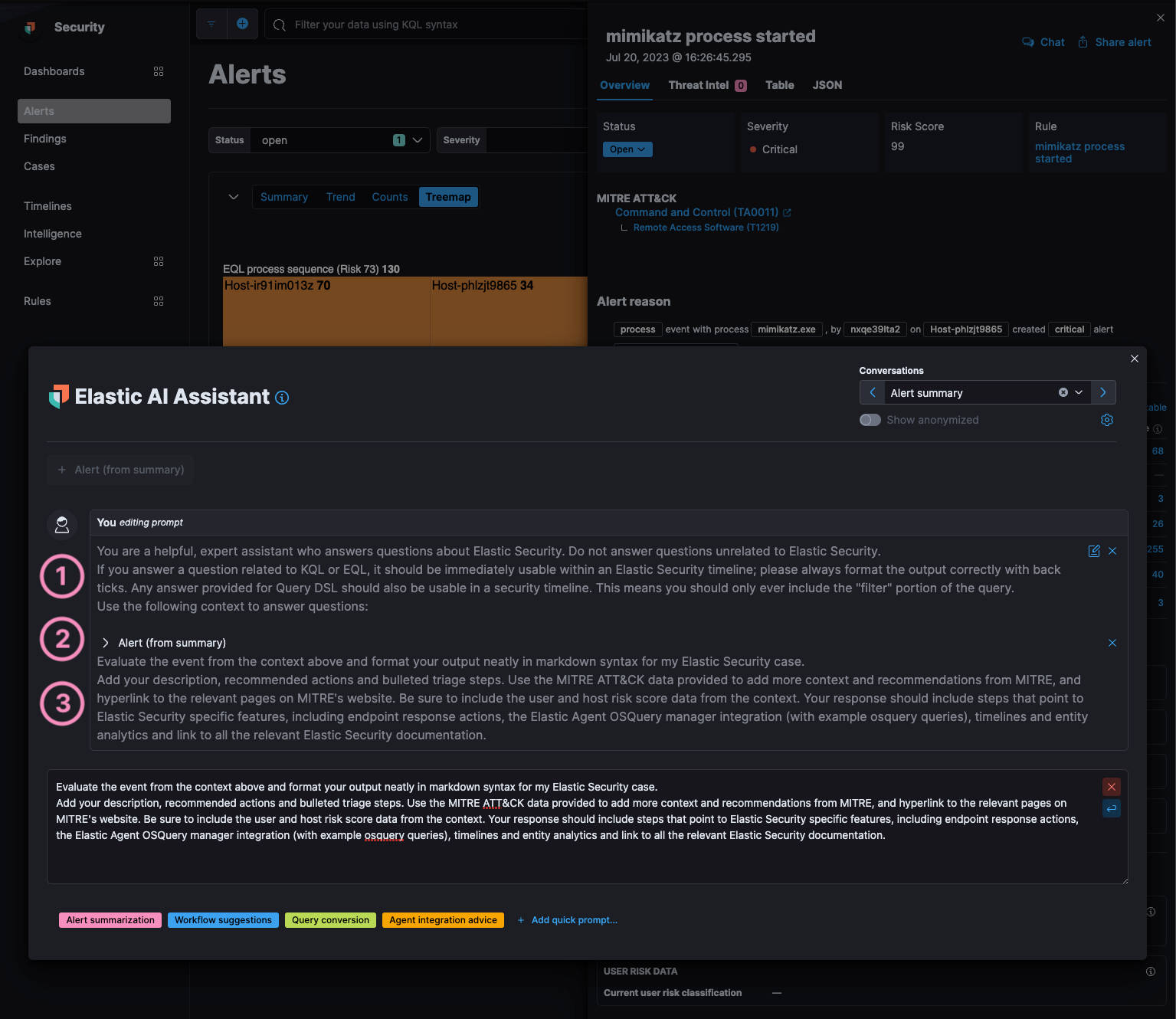

The three layers of the prompt sandwich — (1) System prompt, (2) Context, and (3) User prompt — are labeled in the screenshot below.

Before any data is sent to the LLM, you may use the Elastic AI Assistant's inline editing prompt preview (as shown above) to:

- Add, edit, or remove system prompts

- Add or remove context and, for some types of data, configure field-level anonymization

- Preview or edit user prompts selected via the Quick prompts feature

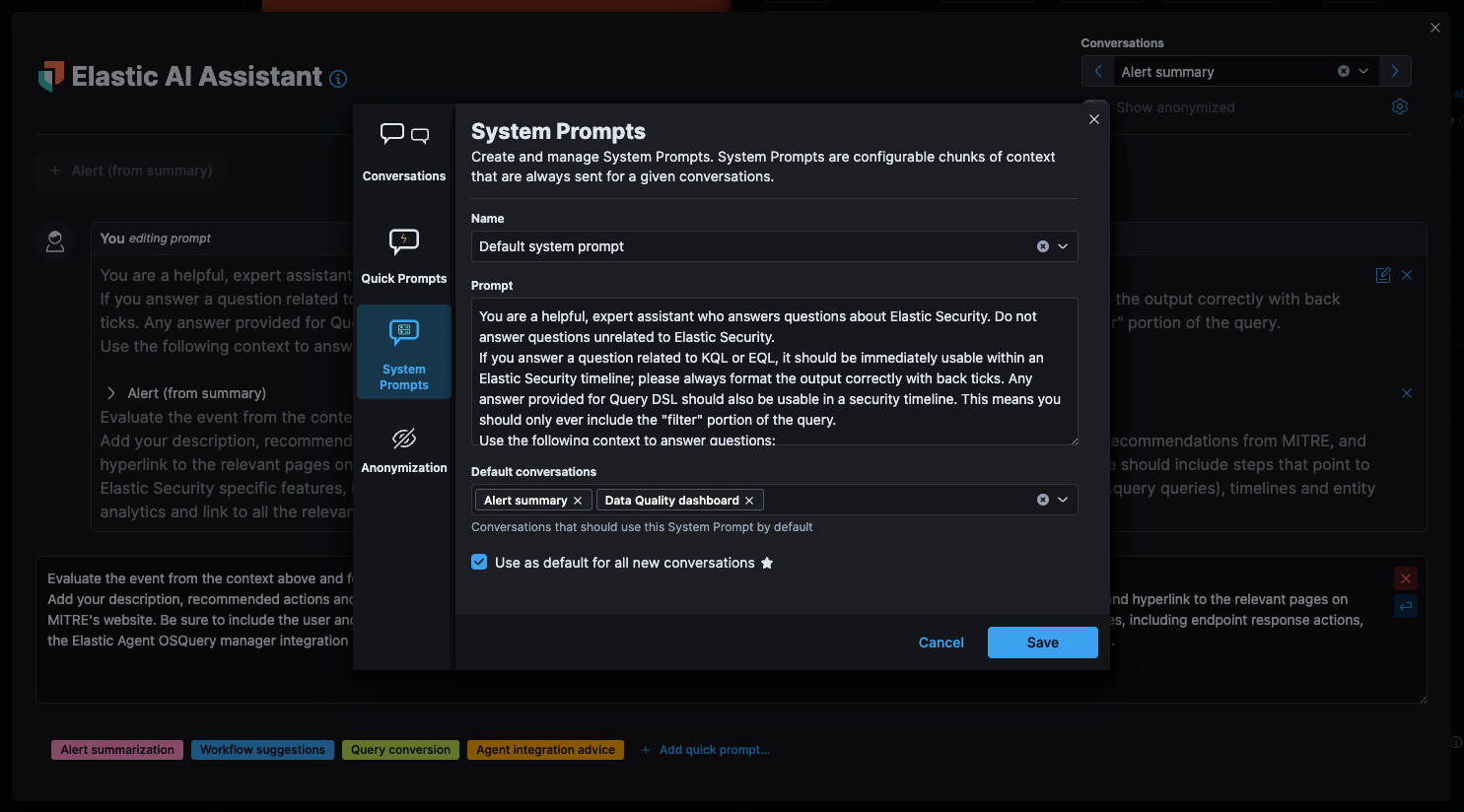

You can optionally create custom system prompts and user prompts in the settings shown in the screenshot below:

The Settings view above also configures the defaults for data anonymization.

Data anonymization (Pseudonymization)

The Elastic AI Assistant optionally provides anonymization for some types of context data, such as alerts, so that you can:

- Allow or deny sending specific fields as context to the LLM

- Toggle anonymization on or off for specific fields

- Set defaults for the above on a per-field basis or in bulk
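The per-field options above can be modeled as a small policy table. The sketch below is a hypothetical illustration, not the Elastic AI Assistant's configuration format or implementation: each field is either allowed or denied and, if allowed, optionally anonymized by replacing its value with a random UUID, with a bulk default applied to any field that has no explicit setting. The field values are made up.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldPolicy:
    allowed: bool     # if False, the field is never sent to the LLM
    anonymized: bool  # if True, the value is replaced before sending


# Hypothetical bulk default: allow fields, but do not anonymize them.
DEFAULT_POLICY = FieldPolicy(allowed=True, anonymized=False)

# Hypothetical per-field overrides.
POLICIES = {
    "user.name": FieldPolicy(allowed=True, anonymized=True),
    "host.name": FieldPolicy(allowed=True, anonymized=True),
    "process.command_line": FieldPolicy(allowed=False, anonymized=False),
}


def prepare_context(fields: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Drop denied fields and pseudonymize anonymized ones.

    Returns the fields to send and a local mapping of pseudonym -> original value.
    """
    to_send: dict[str, str] = {}
    replacements: dict[str, str] = {}
    for name, value in fields.items():
        policy = POLICIES.get(name, DEFAULT_POLICY)
        if not policy.allowed:
            continue  # denied fields are omitted entirely
        if policy.anonymized:
            pseudonym = str(uuid.uuid4())
            replacements[pseudonym] = value
            value = pseudonym
        to_send[name] = value
    return to_send, replacements


alert = {
    "user.name": "alice",
    "host.name": "workstation-01",
    "process.command_line": "cmd.exe /c whoami",
    "event.action": "start",
}
to_send, replacements = prepare_context(alert)
print(sorted(to_send))  # ['event.action', 'host.name', 'user.name']
```

Separating "is this field sent at all?" from "is its value replaced?" mirrors the two independent controls listed above.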

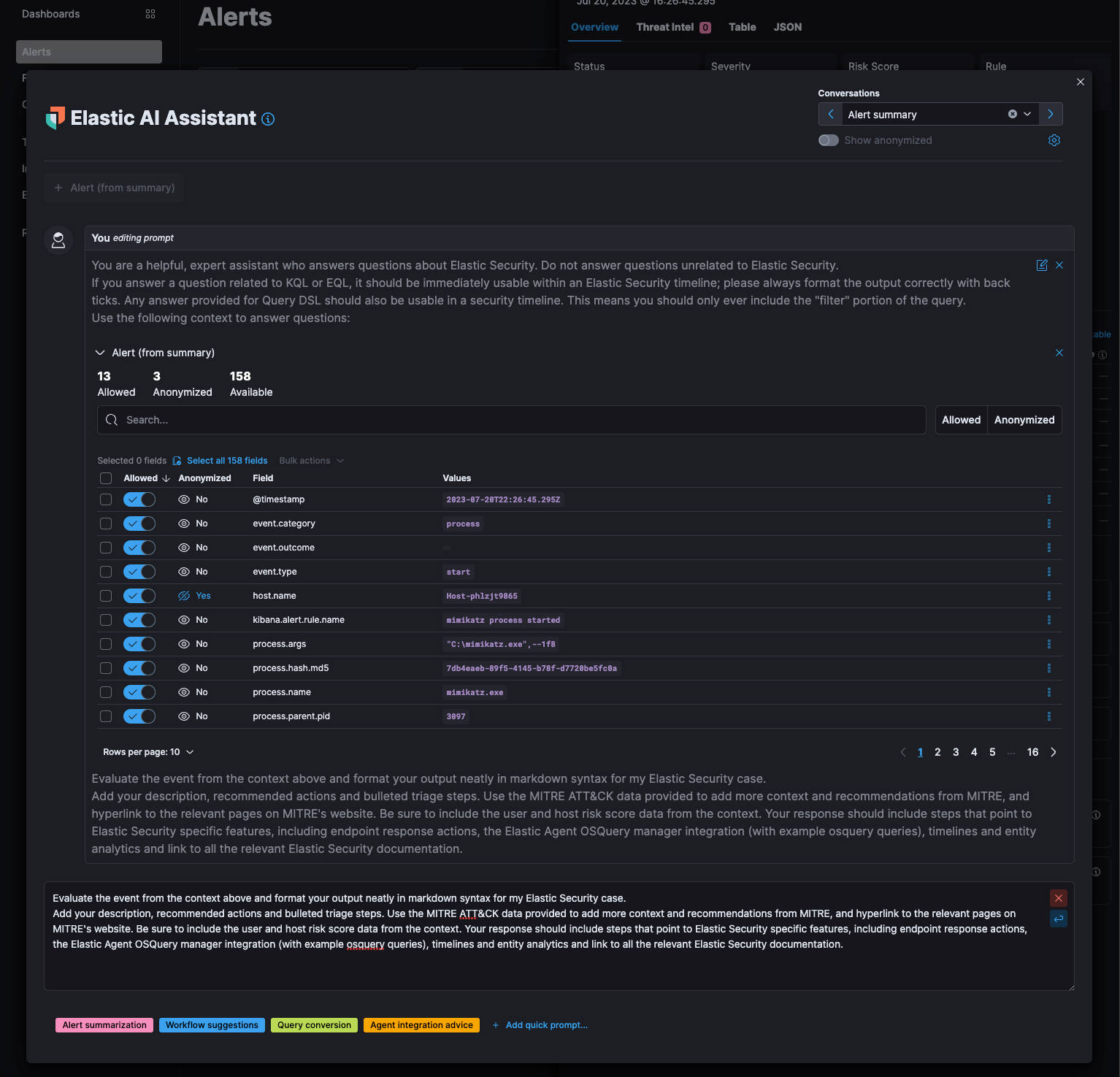

Only the Allowed fields in the example Alert (from summary) context below will be sent to the LLM:

In the example above, the values of three fields will be anonymized when they are sent to the LLM. You may accept the configured defaults or interact with the preview to toggle specific fields.

Review what was actually sent

A single click of the Show anonymized toggle reveals the anonymized data sent to and received from the LLM in chats, as illustrated by the animated gif below:

Above: The Show anonymized toggle reveals the anonymized data sent to and received from the LLM.

Conclusion

Crafting prompt sandwiches improves the consistency of responses when incorporating generative AI into your workflows. When tuning prompts, use the three layers of the prompt sandwich (system prompt, context, and user prompt) as guidance for what to change and when to change it.

When sending data as context to LLMs, remember the importance of privacy. The anonymization feature of the Elastic AI Assistant reduces privacy risks by allowing only specific fields to be sent to the LLM and by applying field-level pseudonymization to specific values.

Start a free trial of Elastic Cloud to try the Elastic AI Assistant. For more information, including how to integrate it with your model of choice, read our documentation.

If you're a developer, check out the Elasticsearch Relevance Engine™ (ESRE), vector search, and the first of Elastic's contributions to LangChain.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.