Avoid Shadow AI — Embrace generative AI in the SOC

You can’t open your LinkedIn feed without being inundated with generative AI articles. They cover the spectrum, from generative AI being a horrible evolution that will eliminate jobs, empower cyber criminals, and extract private data, to it being the greatest innovation in information technology since the World Wide Web. As with everything, the truth lies somewhere in the middle of the hyperbole, or as we like to say at Elastic®, “IT, depends.”

It’s pretty complicated to make some things simple, and even more complicated to make other things possible. We embrace and value the knowledge required to do both.

In our discussions with cybersecurity leaders, we consistently hear the question: Should we allow the use of generative AI within our organizations, even within cybersecurity?

However, we have seen a very similar impact before, with the consumerization of technology. Employees began using applications and technology not previously approved by the organization’s IT department, a practice that came to be called Shadow IT. The rise of Shadow IT has taught us that our users will find a way to leverage new technology.

As cybersecurity professionals, your team of motivated, talented experts will use every advantage they can find to combat cyber threats. This includes generative AI, whether they get your explicit approval or not. Therefore, the real question you need to ask yourself is: Should we be aware of the use of generative AI within our cybersecurity organization?

The dangers of Shadow AI

Like Shadow IT, Shadow AI is already in use within organizations that are not actively empowering their teams with explicit uses of generative AI to accelerate their daily operations. When employees choose to implement these kinds of processes on their own, it opens their company to a myriad of security and data risks.

When talking with CISOs across our global customer base, the consistent concern around embracing generative AI was how to maintain control of their data. It is too easy to accidentally send private information to a public large language model (LLM), and companies like Samsung have had cases of employee misuse and are now taking extra steps to control the use of generative AI through infosec policies.

Front door locks only keep honest people out of your home. Similarly, infosec policies only stop those that read (and follow) the instructions. In line with Zero Trust policies, the team at Elastic urges CISOs to embrace the potential business accelerations by enabling generative AI with strong controls through trusted solutions.

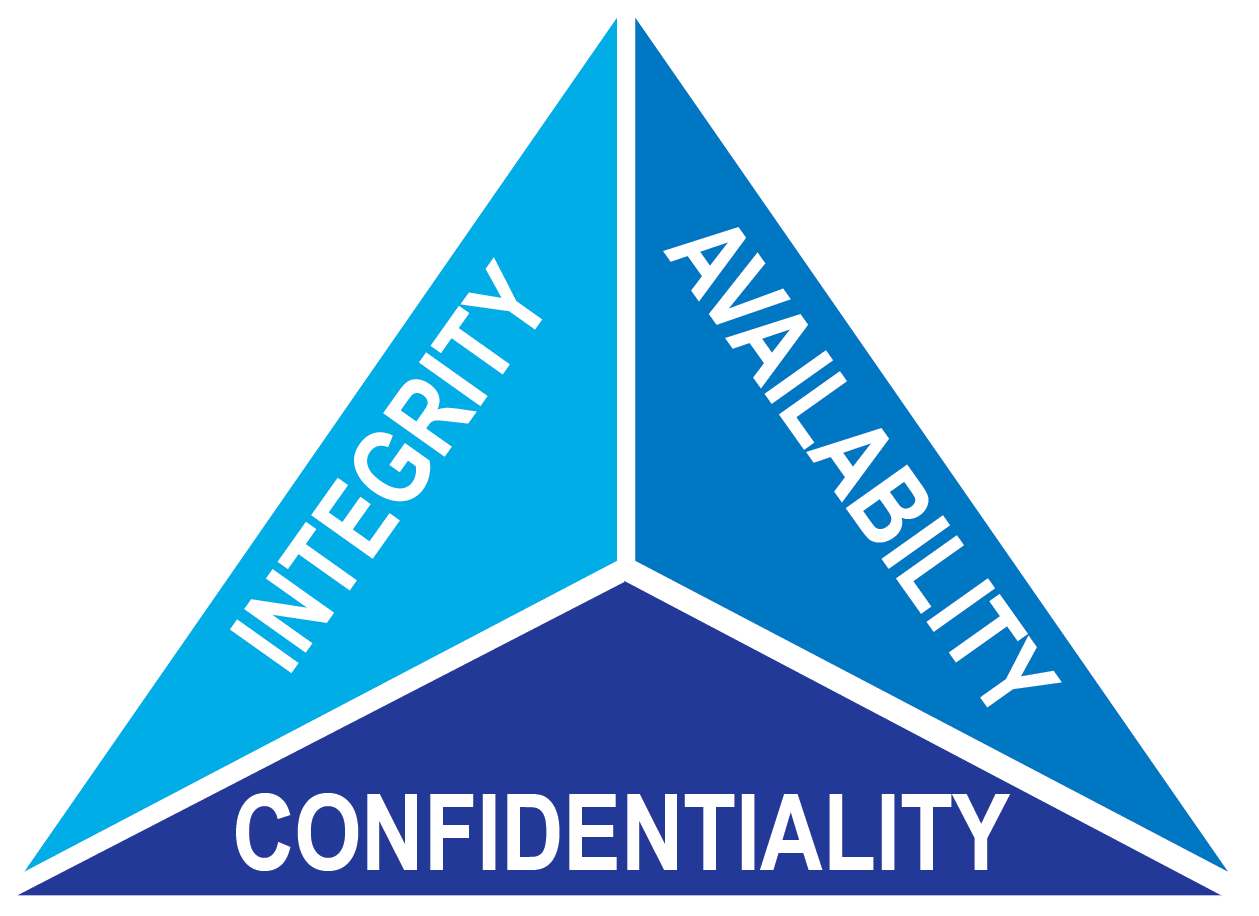

To choose which strong controls to implement, our team at Elastic encourages you to become familiar with the CIA triad (confidentiality, integrity, and availability), a foundational security model referenced throughout guidance from the US National Institute of Standards and Technology (NIST). Let’s evaluate the three principles of the CIA triad when applied to generative AI:

Confidentiality

CISOs need to be careful when implementing LLMs. Ensure the models you choose to employ are either hosted locally within your organization or are hosted by a vendor you trust that explicitly ensures the privacy of your interactions with the LLM. Microsoft Azure OpenAI is one such option because of its rigid focus on customer data and privacy. When selecting an LLM provider, you need to ensure that the data you send to the LLM (whether as questions, prompts, or context) will not be used in future pre-training or fine-tuning of new models.

Unfortunately, the user experience is so simple for most of these generative AI models (chat-like interfaces) that it’s easy to forget that you’re passing information outside of your organization. Additionally, robust generative AI applications can pull even more context directly from your system or environment, and add it to your question, meaning information you may not have even been aware of is being sent to these models.

These challenges guided us as we created the Elastic AI Assistant. While creating, we chose to follow the Elastic principle of complete transparency. Everything that the Elastic AI Assistant sends to an LLM is shown to the user.

We think this transparency is necessary, but not sufficient. So, the Elastic AI Assistant also enables admin-controlled context window anonymization. Through a simple user interface, users can select on a field-by-field basis to either restrict the sending of data or anonymize the information being shared with the LLM. These industry-first controls bring the field-level and document-level controls users have grown to love and expect in Elasticsearch to generative AI through the Elastic AI Assistant.
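To make the idea of field-by-field control concrete, here is a minimal Python sketch of filtering an alert before it is added to an LLM prompt. It is an illustration only and does not represent how the Elastic AI Assistant is implemented. The policy table, the function names (`build_context`, `pseudonym`, `restore`), the salt, and the sample field values are all hypothetical.

```python
import hashlib

# Hypothetical admin-defined policy: field name -> "allow", "anonymize", or "deny".
# Fields not listed here are treated as "deny" so nothing leaks by default.
FIELD_POLICY = {
    "event.action": "allow",
    "host.name": "anonymize",
    "user.name": "anonymize",
    "source.ip": "anonymize",
    "user.email": "deny",
}


def pseudonym(field, value, salt="demo-salt"):
    """Return a stable placeholder token for a sensitive value."""
    digest = hashlib.sha256(f"{salt}:{field}:{value}".encode()).hexdigest()[:8]
    return f"<{field}:{digest}>"


def build_context(alert, policy=FIELD_POLICY):
    """Apply the policy to one alert.

    Returns the context that may be sent to the LLM, plus a local-only
    mapping from placeholder tokens back to the real values.
    """
    context = {}
    reverse_map = {}
    for field, value in alert.items():
        action = policy.get(field, "deny")
        if action == "allow":
            context[field] = value
        elif action == "anonymize":
            token = pseudonym(field, str(value))
            context[field] = token
            reverse_map[token] = value
        # "deny" (or unknown field): drop the value entirely.
    return context, reverse_map


def restore(text, reverse_map):
    """Swap placeholder tokens in an LLM answer back to the real values."""
    for token, value in reverse_map.items():
        text = text.replace(token, str(value))
    return text
```

In this sketch the reverse mapping never leaves your environment, so the analyst still reads an answer with real host and user names while the model only ever sees placeholders. Showing `context` to the user before sending it would cover the transparency principle described above.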

Integrity

Generative AI can seem like magic, providing pinpoint-accurate information in seconds. However, it is flawed and can provide answers that are incorrect or misleading. So, not only is it key to ensure that the integrity of data is maintained through the many systems operating on the information, but we must also expand the scope of integrity here to ensure generative AI answers are not blindly followed.

Generative AI has the power to address the cyber skill gap that has plagued us since the term cybersecurity was first coined. It addresses this challenge by accelerating the rate at which our existing teams do their work, allowing them to accomplish more, and elevating new professionals to excel in more advanced roles.

Generative AI will expand our teams, not reduce them. Generative AI should not be thought of as a way to reduce the need for human intelligence. No one understands the impact on your business better than your analysts, and generative AI can speed up their processes, giving them more time to make the critical decisions and leading to a strong cyber posture everywhere.

Availability

Using a solution like Elastic Security to power generative AI ensures that the information sent to the LLM, and the answers retrieved from it, are retained for as long as your data lifecycle policy defines. This central “funnel” of generative AI operations ensures auditing and compliance can be maintained while also putting leading-edge technology in the hands of your practitioners. As mentioned earlier, users will find a way to use generative AI in your company, and we believe that way should be monitored for acceptable use.
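The central “funnel” idea can be sketched in a few lines of Python: every LLM call passes through one wrapper that records who asked what and what came back. This is a hypothetical illustration, not Elastic's implementation. The function name `audited_completion`, the record fields, and the list used as an audit store are assumptions; in practice the records would go to whatever retained, searchable store your data lifecycle covers.

```python
import json
import time


def audited_completion(prompt, user, send_to_llm, audit_log):
    """Send a prompt through a single choke point and record the exchange.

    send_to_llm is any callable that takes the prompt and returns the
    model's answer (hypothetical; swap in your provider's client call).
    audit_log is any object with an append() method.
    """
    record = {"timestamp": time.time(), "user": user, "prompt": prompt}
    try:
        record["response"] = send_to_llm(prompt)
        record["status"] = "ok"
    except Exception as exc:
        # Failed calls are audited too, then re-raised to the caller.
        record["response"] = None
        record["status"] = f"error: {exc}"
        raise
    finally:
        audit_log.append(json.dumps(record))
    return record["response"]
```

Because the wrapper is the only path to the model, acceptable-use monitoring becomes a query over the audit records rather than a hunt across individual browser sessions.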

To this point, it’s also important to keep your data within your control (local if possible). Utilizing LLMs that keep data within your ecosystem, like Microsoft Azure OpenAI, ensures you maintain control and availability. And eventually, the ideal way to use generative AI would be to keep all your data local. As LLMs get smaller over time via distillation and quantization techniques (especially the open source models), we are eager to start helping our customers run these LLMs locally in Elasticsearch via our “bring your own Transformer” functionality. We are already able to do this with smaller LLMs, and we anticipate those capabilities improving dramatically over the next year.

Avoid Shadow AI by embracing and empowering your team

Generative AI is changing how we operate within the SOC, and your teams can accomplish so much more through the help and guidance of this technology. Remember, if you try to block generative AI usage you will only strengthen the Shadow AI footprint in your organization. By embracing generative AI and ensuring it follows the same core principles as other processes within your SOC, you can strengthen the security posture of your organization, help your team to be more successful, and maintain control of your data.

Elastic can help enforce the guardrails on generative AI. For more information on how to integrate the Elastic AI Assistant with your model of choice and begin harnessing the power of generative AI, read our documentation.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.