Elasticsearch has native integrations with the industry-leading Gen AI tools and providers. Check out our webinars on going Beyond RAG Basics, or building prod-ready apps with the Elastic vector database.

To build the best search solutions for your use case, start a free cloud trial or try Elastic on your local machine now.

Previously published on the Google Cloud blog

For retailers, having a powerful search function for their website and mobile applications is crucial. However, most current search experiences are somewhat basic and limited, primarily returning a list of results ranked based on keyword relevance. And typically, customers only have a vague idea of what they want, rather than a specific item in mind. For instance, they might be searching for a nice dining room table but aren't sure about the style, size, shape, or other details. Not surprisingly, many customers leave a retail website after unsuccessful search attempts. This not only deprives them of a potentially satisfying shopping experience but also represents a lost opportunity for retailers.

Today, we are excited to present a new search experience for retailers using generative AI with Vertex AI and Elasticsearch®. This enhanced interface offers users an interactive, conversational experience that summarizes pertinent data and tailors responses based on each customer's unique needs, all while drawing upon the retailer's public and internal knowledge. That can include, for example, public knowledge from the retailer's domain expertise:

- Guidelines and common scenarios for solving your specific need

- Summary of the reviews for similar products matching your request

- Public references on how to build, combine, or use the products

Or, the knowledge can be private to the retailer:

- A user’s general location and local regulations that may apply to them

- Real-time updated product catalog, stock nearby, and prices

- Private documentation and knowledge

Instead of merely generating a simple list of relevant items, this advanced search experience functions much like a concierge sales representative with in-depth domain knowledge. It assists and guides customers throughout their entire purchase journey, creating a more engaging and efficient shopping experience.

Architecture

The diagram below illustrates the integration of Google Cloud’s generative AI services and Elastic’s search capabilities, which forms the foundation for this new user experience.

The life of a search query

In this architecture, a search query happens in two broad phases.

1. Initial query processing and enrichment

A user initiates the process by submitting a query or question on the search page.

This query, along with other relevant metadata like general geolocation, session information, or any other data deemed significant by the retailer, is then forwarded to Elastic Cloud.

Elastic Cloud uses this query and metadata to perform search on the domain-specific customer data, gathering rich context information in the process:

- Relevant real-time data from multiple internal company data sources (ERP, CRM, WMS, BigQuery, GCS, etc.) are continuously indexed into Elastic through its set of integrations, and related embeddings are created via your transformer model of choice or the built-in Elastic Learned Sparse EncodeR (ELSER). Inference can be automatically applied to every data stream through ingest pipelines.

- Embeddings are then stored as dense vectors in the Elastic’s vector database.

- Using the search API, Elasticsearch starts executing search among its indices with both text-search and vector search within a single call. Meanwhile it generates vectors on the user’s query text that it receives.

- Generated embeddings are compared via vector search to the dense vectors from previously ingested data with kNN (K-Nearest Neighbors Algorithm).

An output is produced combining both semantic and text-search results, with the Reciprocal Rank Fusion (RRF) hybrid ranking.

2. Produce results using generative AI

The original query and the newly obtained rich context information is forwarded to Vertex AI Conversation. Conversational AI is a collection of conversational AI tools, solutions, and APIs, both for designers and developers. In this design, we will be using Dialogflow CX in Vertex AI Conversation for the conversational AI and integrate with its API.

When using the Dialogflow CX API, your system needs to:

- Build an agent.

- Provide a user interface for end users.

- Call the Dialogflow API for each conversational turn to send end-user input to the API.

- Unless your agent responses are purely static (uncommon), host a webhook service to handle webhook-enabled fulfillment.

For more details on using the API, please refer to Dialogflow CX API Quickstart.

The Conversational AI module reaches the endpoints of the LLM model deployed in your Vertex AI tenant to generate the complete response in natural language, merging model knowledge with Elastic-provided private data. This is achieved by:

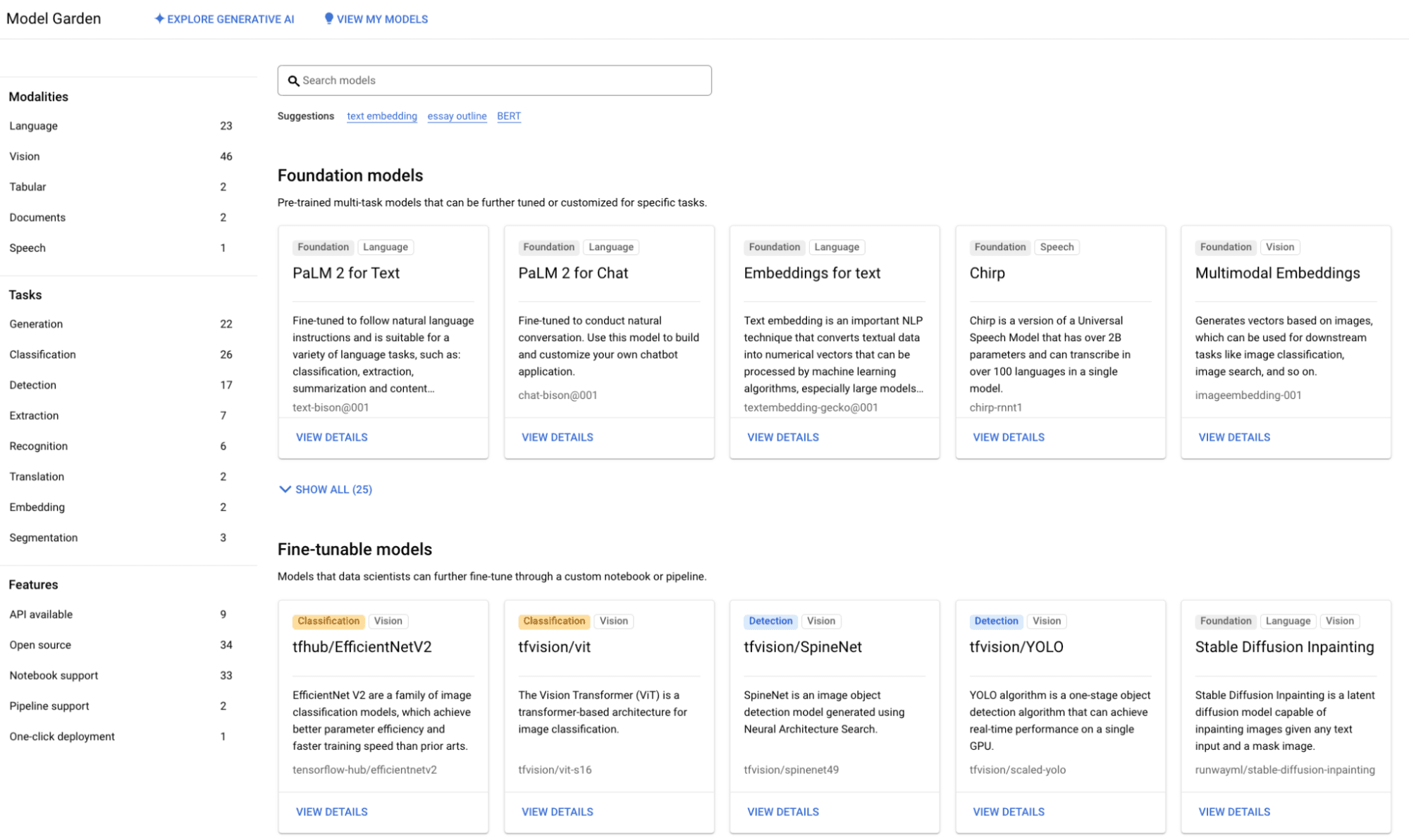

- Selecting your favorite model from Model Garden

- If needed, fine-tuning it on your domain tasks

- Deploying the model to an endpoint in your Google Cloud project

- Consuming the endpoint from the Dialogflow workflows

To manage data access control and ensure privacy, Vertex AI employs IAM for resource access management. You have the flexibility to regulate access at either the project or resource level. For more details, please refer to the Google documentation. Please refer to the section below for more details on this step.

Dialogflow make the chatbot experience actionable with conversational responses, providing relevant actions to the user depending on the context (for instance placing an order or navigate to content)

The response is relayed back to the user.

Elastic Cloud: Build context from enterprise data

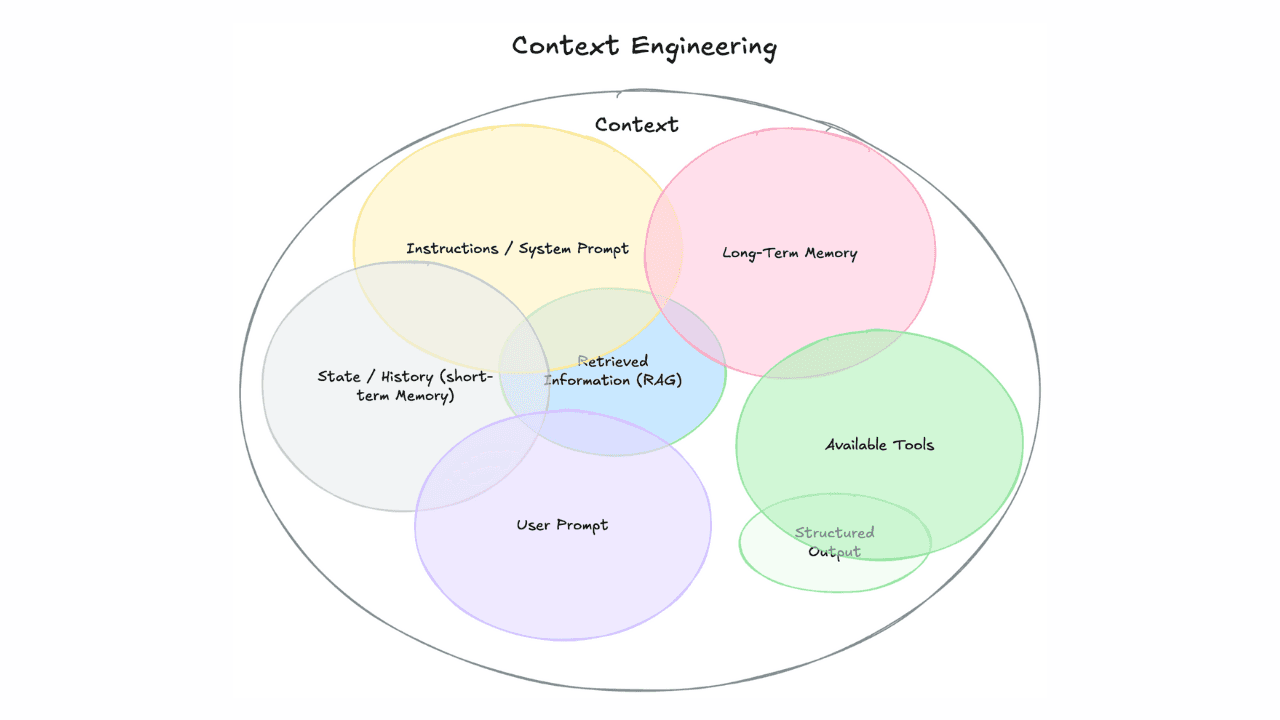

When using generative AI, context windows help to pass additional, user-prompted, real-time, private data to the model at query-time, in parallel with the question you’re submitting. This enables users to receive better answers as output, based on the public knowledge that the LLM is trained on, but also in the space of the specific domain you provided. Generative AI’s effectiveness highly depends on input engineering, and context really improves quality of results.

Once the user submits their question via your website search box, Elasticsearch digs into your internal knowledge base, searches for related content, and returns it for further processing on the awaiting generative model. Searching information inside your business, from multiple diverse data sources, is what Elasticsearch is designed for.

But when the context window size is limited, common models only allow processing of just a few thousands tokens. It’s therefore impossible to pass the whole enterprise data set to each query. Moreover, the bigger the query the user submits, the more performance and cost are impacted. You need to filter and find relevant context even before sending the query to generative AI.

The Elasticsearch Relevance EngineTM (ESRE) is a package built on years of R&D and expertise from Elastic in the search and AI space to help customers leverage the power of Elasticsearch with high-end machine learning features. ESRE enables relevant results retrieval from huge, heterogeneous data sets quickly using advanced vector search features on text, images, audio, and video, all while combining with classic keyword text-search on Elasticsearch indices. With ESRE, not only is every information retrieved relevant, but also secured thanks to document- and field-level security applicable to all content that Elastic handles.

With Elastic, you have the freedom of choosing and running your own machine learning (ML) models to create embeddings or for feature extraction, thus allowing full control and customization depending on your specific domain, language, and data type. Vertex AI can then help you create your transformer models to import into the Elastic platform. No worries if you want to get started quickly instead, with no internal ML skills: the out-of-the-box ELSER retrieval model lets you be up and running with just a few clicks.

Fine-tuning foundation models in Vertex AI

Prompt design strategies and context windows might not be sufficient for tailoring model behavior.

Model Garden: Vertex AI Model Garden is a rich repository of pre-constructed ML models that cater to a diverse set of use cases. These enterprise-ready models have been meticulously tested and optimized for enterprise applications' performance and precision needs. They offer user-friendly access through APIs, notebooks, and web services. Designed with scalability in mind, these models can handle large data volumes and serve numerous users. Furthermore, they are freely accessible and regularly updated with fresh models.

Model customization: Retailers may prefer to interact with foundational models to adapt them to their unique needs. Fine-tuning is an efficient and economical approach to enhance a model's task-specific performance or comply with distinct output requirements when instructions aren't adequate. But developing and training new foundational models from the ground up can be costly. Techniques such as efficient parameters tuning reduce overhead while implementing adapter layers atop existing LLMs. These layers supplement the models with your specific missing knowledge, maintaining their already established skills.

Security and privacy: While consumer AI assistants are typically robust, controlling their features and security isn’t always in your hands. Having complete insight into your data's usage and how it is stored is vital to compliance, privacy, and maintaining a competitive edge. Google’s Secure AI Framework provides clear industry standards for building and deploying AI technology in a responsible manner. This is why adopting generative AI for enterprises via Google’s Vertex AI capabilities is so smart.

Enterprise-grade solution: Vertex AI Generative AI Studio provides access to cutting-edge models and allows for navigation through various releases and modifications. Once customized, you can test, deploy, and integrate them with other applications and platforms hosted on Google Cloud. This can be done via the Vertex AI SDK or directly through the Google Cloud console, making it user friendly for almost anyone, even without specialized data-science expertise.

Responsible AI: With great power comes great responsibility. LLMs have the capacity to translate languages, condense text, and generate creative writing, code, and images, among many other tasks. However, as an emerging technology, its evolving abilities and applications may lead to potential misuse, misapplication, and unexpected consequences. These models can sometimes generate outputs that are unpredictable, including text that might be offensive, insensitive, or factually incorrect. Google is dedicated to promoting responsible use of this technology, providing safety features and filters to protect users and ensure compliance with its AI principles and commitment to responsible AI.

Next steps

Getting started with building your own generative AI-powered customer facing retail app has never been so easy: take a look at Elastic’s official GitHub repo that helps you create and run, with guided steps, an ecommerce search bar with ESRE and Vertex AI.

If you're interested in exploring further through code examples, Google Lab provides a comprehensive guide entitled Dialogflow CX: Build a retail virtual agent. For additional examples of Google generative AI designs, we encourage you to visit the Generative AI examples page.

Concerned about the usage of your Vertex AI models and services? Explore how Elastic provides an end-to-end observability platform that leverages all your logs, metrics, and traces directly from the Google Cloud operations suite thanks to native integrations.

Don't miss out on this groundbreaking innovation. We encourage you to explore and adopt this solution and start transforming your customers' shopping experiences. The best way to start is to create a free 14-day trial cluster on Elastic Cloud using your Google Cloud account, or easily subscribe to Elastic Cloud through Google Cloud Marketplace.