AI-driven SIEM that is open source and affordable

Elastic SIEM has proven itself in thousands of real-world environments and is powered by AI, so you can detect threats faster and scale without overspending.

Guided demo

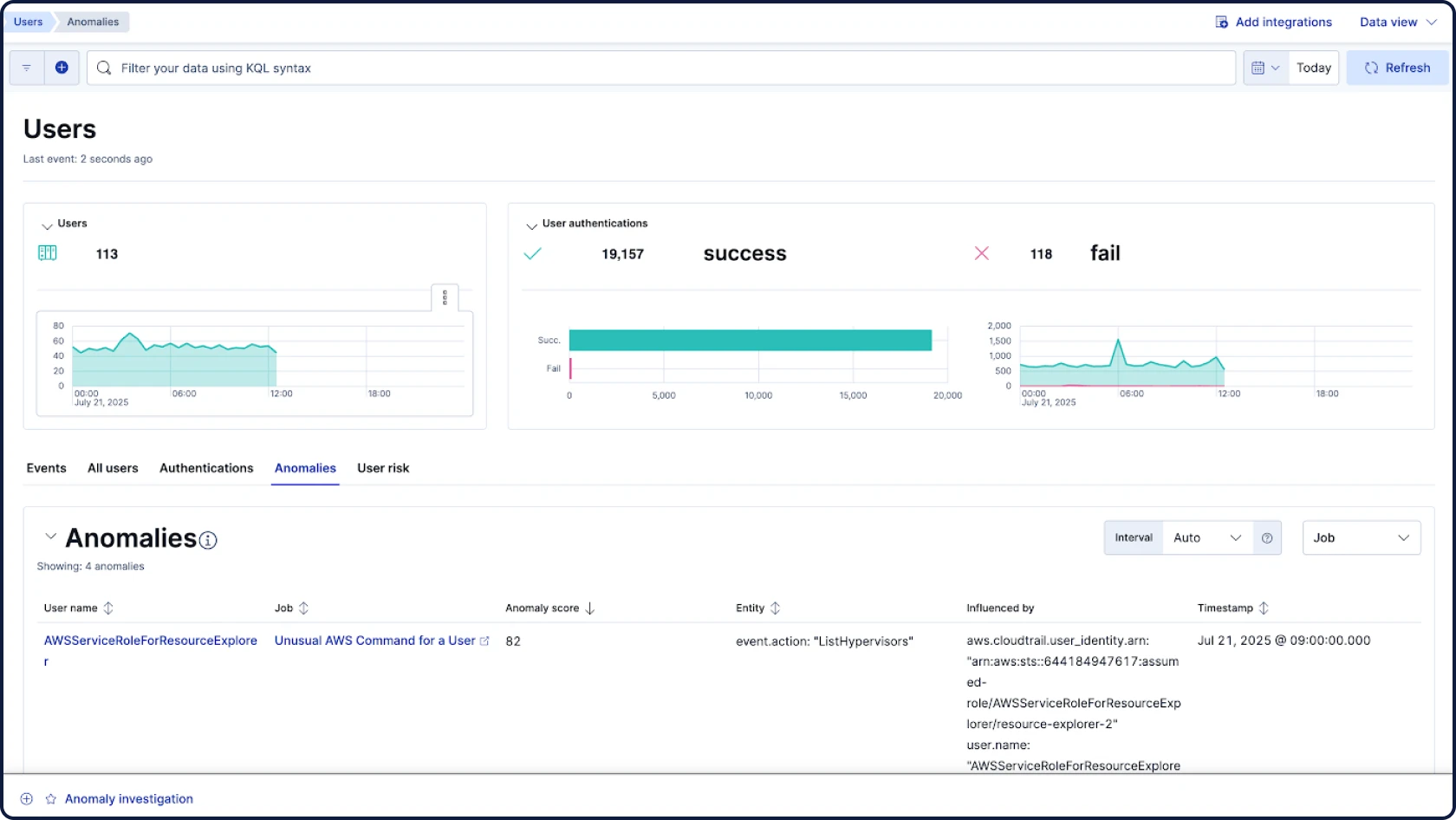

Find the threats lurking in your data

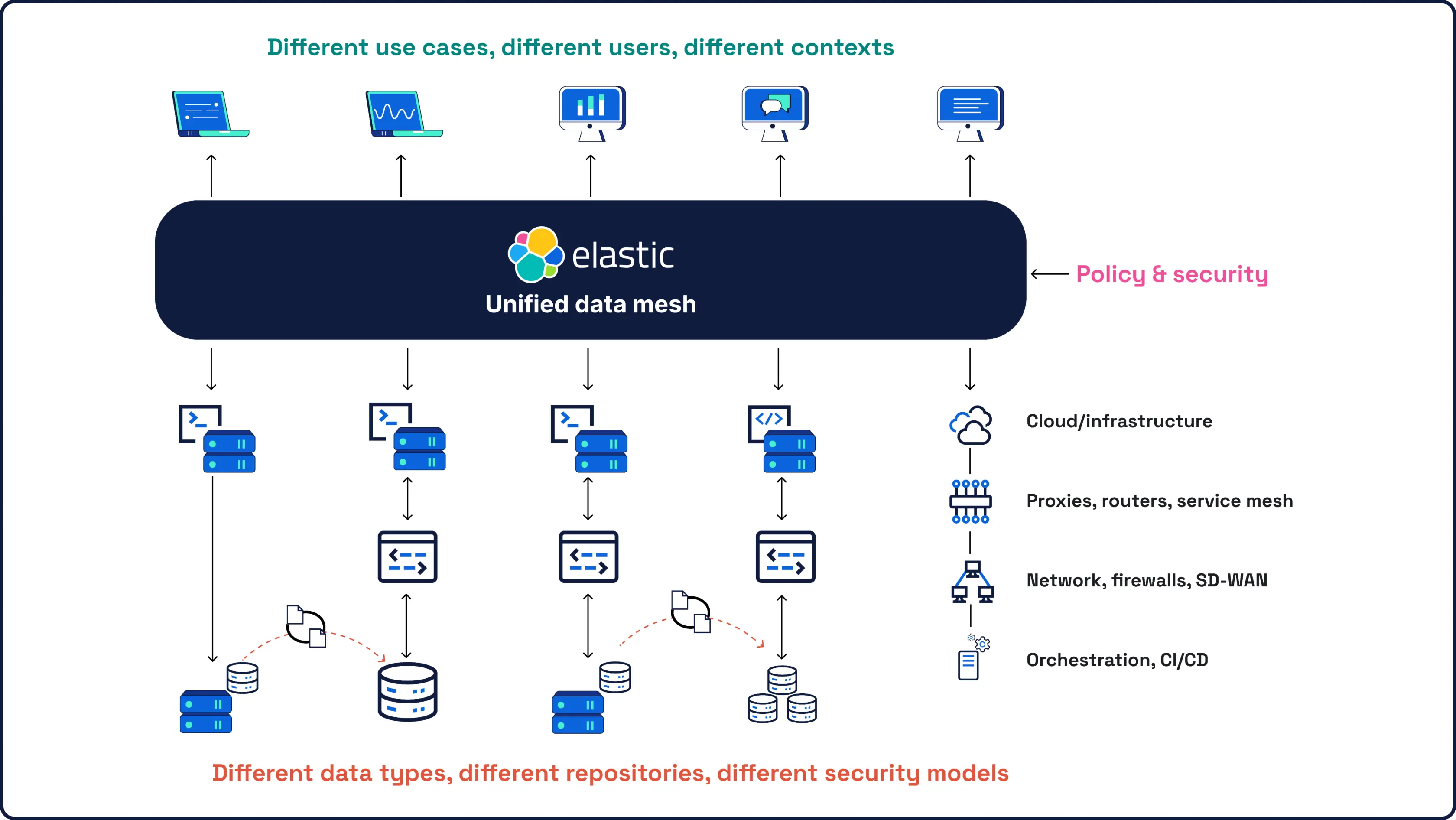

Security starts with data, and data is what we know best. As the company behind Elasticsearch, the world's leading open source search and analytics engine, we offer a SIEM with powerful, AI-driven detection and investigation for all your security data, at any scale.

HUNT & INVESTIGATE

SIEM for the SOC of tomorrow

All your security data, AI, and investigations on one open, extensible, and scalable platform

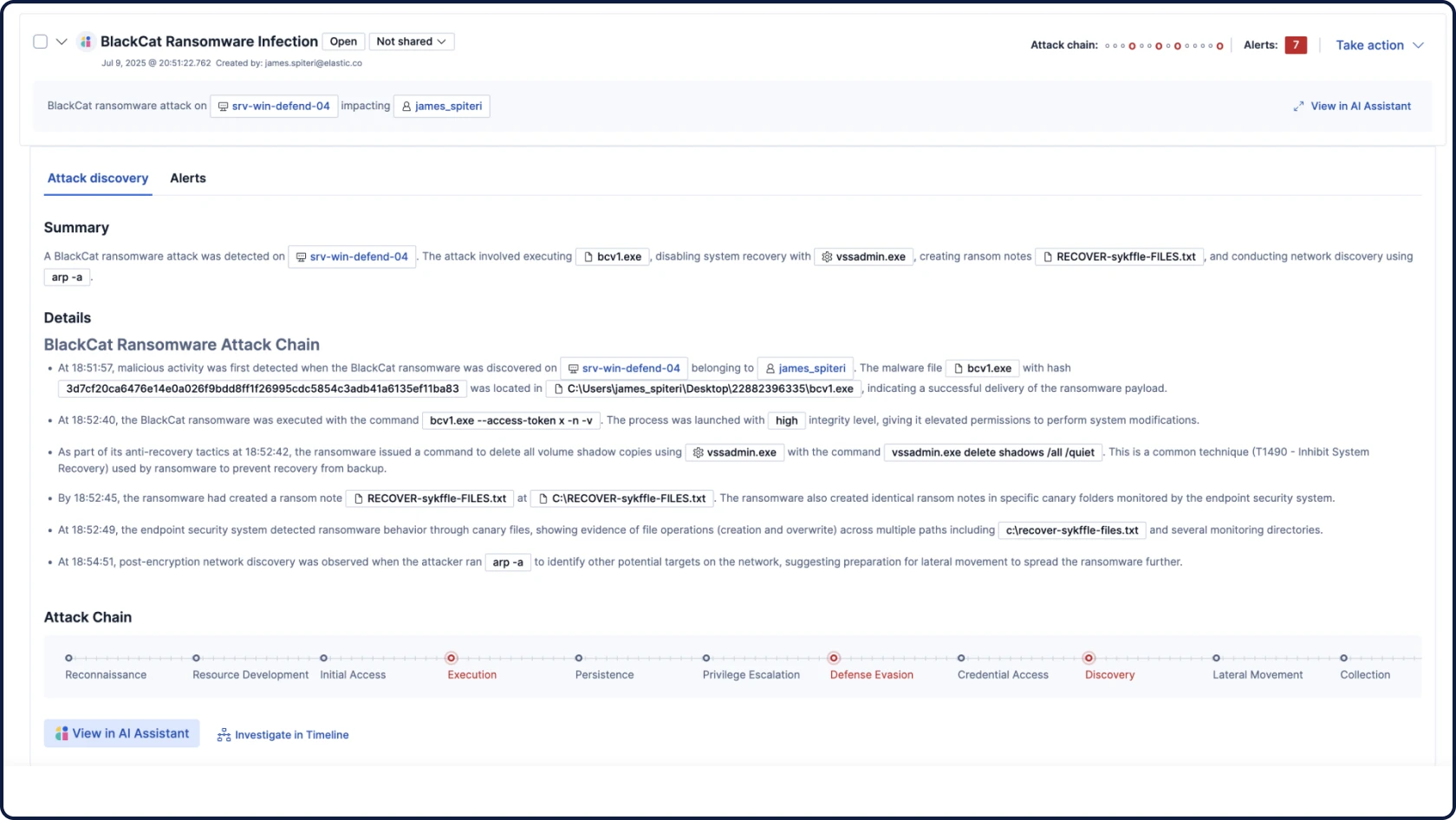

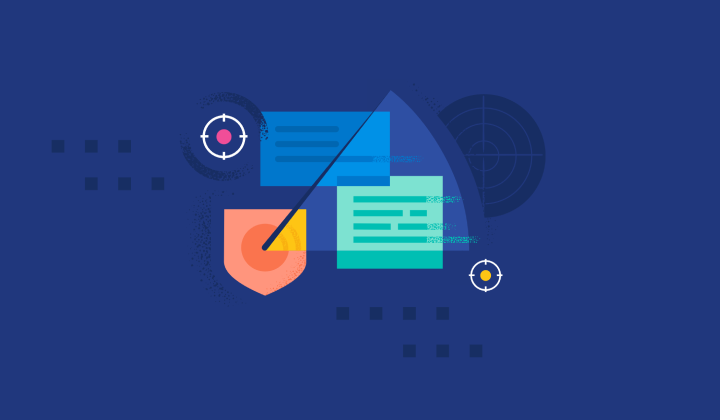

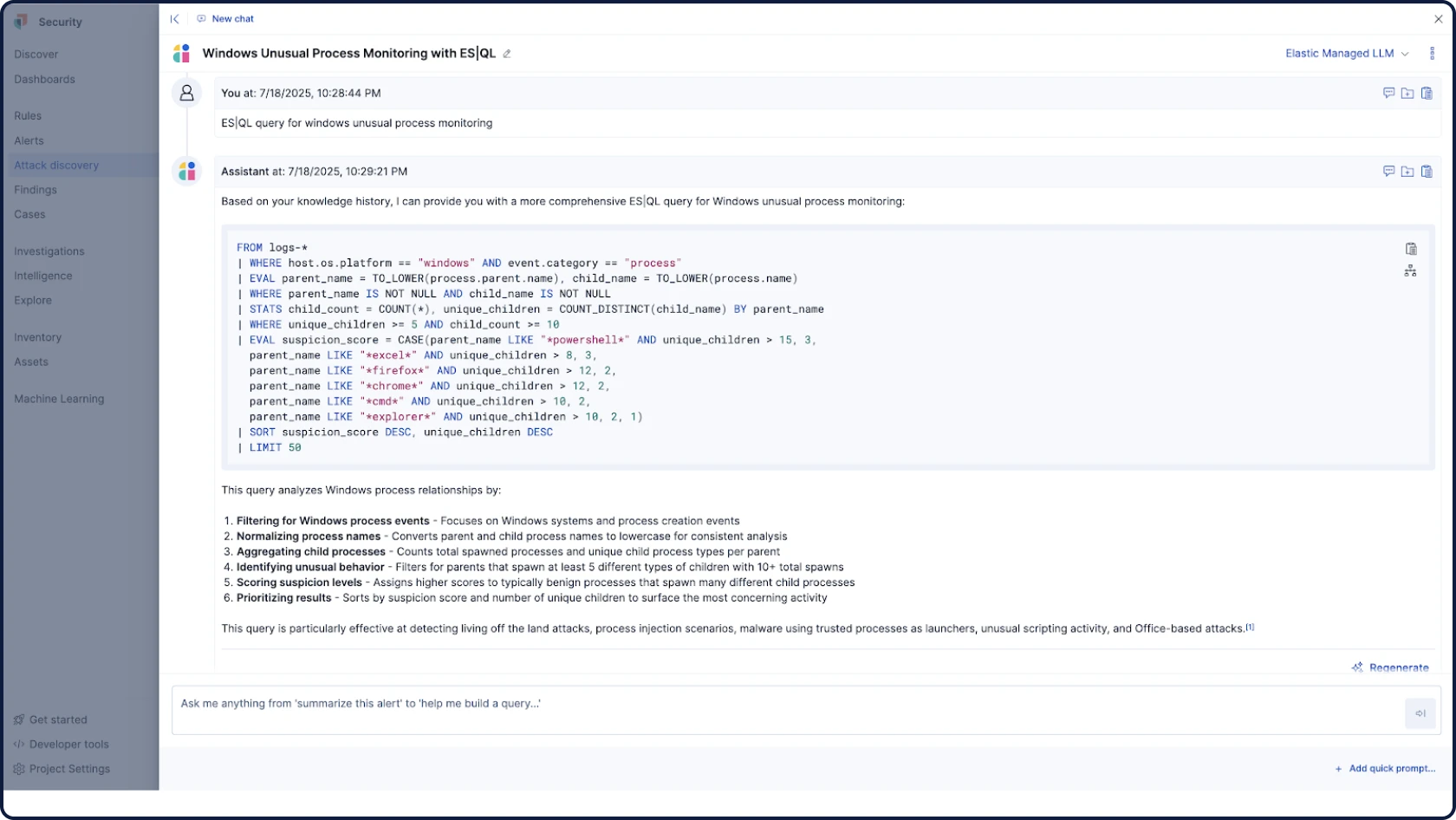

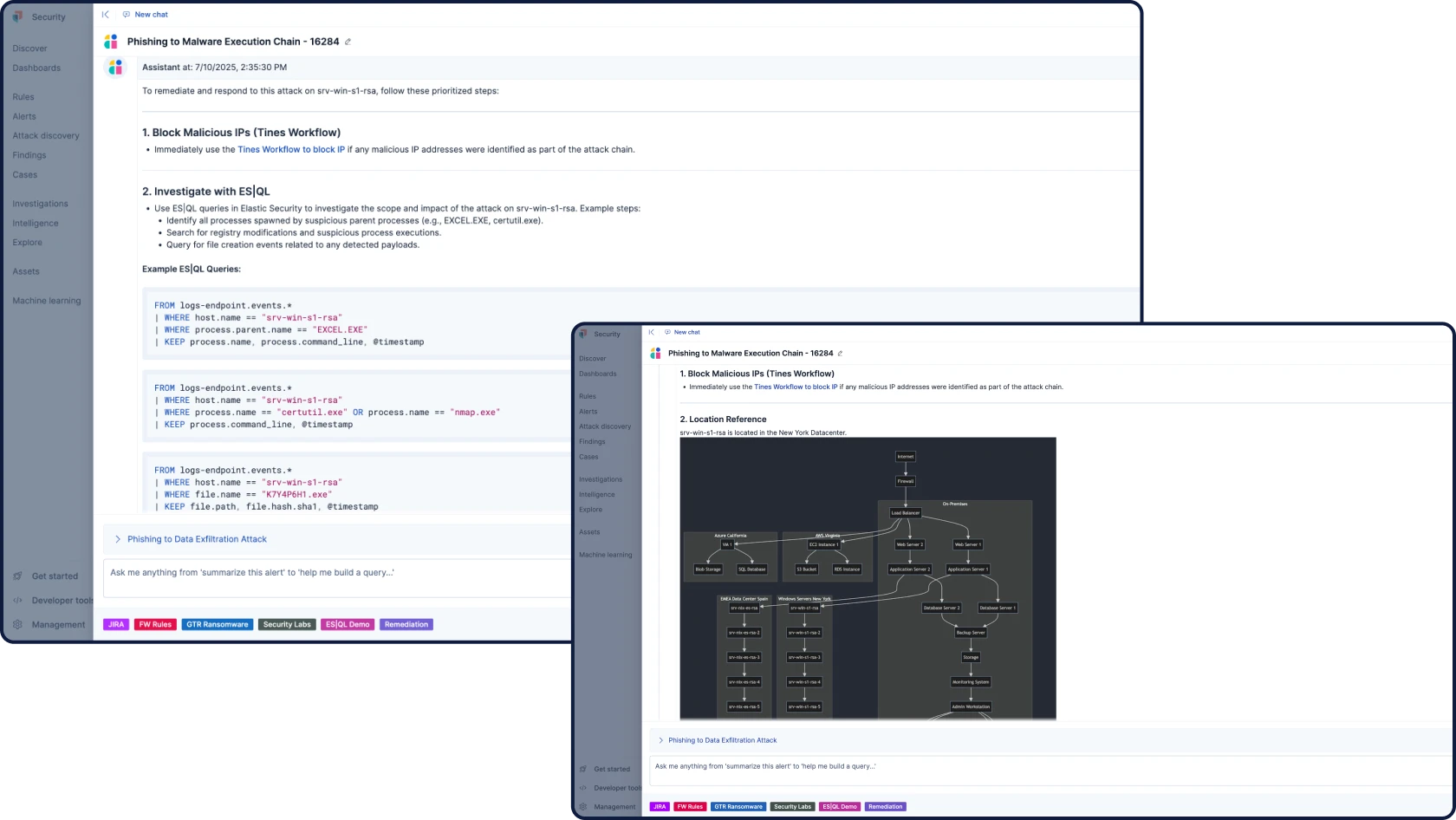

Automate your workflows with grounded, context-aware, and transparent AI for security. Attack Discovery identifies real attack behaviors and paths by correlating alerts, while AI Assistant answers questions and guides the analyst through next steps.

PACKAGING OPTIONS

Adopt everything at once or go at your own pace

Our security platform meets you where you are and takes you where traditional platforms can't go.

Elastic Security

Everything you need (SIEM, XDR, cloud security, and built-in AI) on one unified platform. No extra SKUs, no add-ons, no compromises. A single, seamless experience built specifically for the way analysts think, investigate, and respond.

Elastic AI SOC Engine (EASE)

A package of AI capabilities that lets you adopt Elastic Security on your own schedule, without replacing everything at once. Strengthen your existing SIEM, EDR, and other alerting tools with AI that fits into your data and workflows, then expand to the full platform when you're ready.

You're in good company

Customer spotlight

Airtel improves its cybersecurity posture with Elastic's AI capabilities, increasing SOC efficiency by 40% and speeding up investigations by 30%.

Customer spotlight

Sierra Nevada Corporation protects its own infrastructure and that of other defense contractors with Elastic Security.

Customer spotlight

Mimecast centralizes visibility, accelerates investigations, and reduces critical incidents by 95%, transforming its global SecOps.

Join the conversation

Connect with the global Elastic Security community, from open conversations and collaboration to strengthening our product through our bug bounty program.

Ask questions, get answers, and make your voice heard in our open forum.

Dive into Elastic. Learn, explore, and connect with like-minded peers.
