Tutorial: Getting Started with Elastic Cloud Using Hosted Elasticsearch and Sample Data for Elastic Stack 5.x

| This article contains the latest instructions for working with Elastic Stack 5.x. The earlier version provided sample datasets for Elasticsearch 2.x and Kibana 4.x. |

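Getting a hosted Elasticsearch environment up and running is straightforward. With Elastic Cloud, you can launch a cluster and start ingesting data in literally minutes. This 3-minute video shows how. This article walks through each step so that you can set up an Elastic Cloud account, create and secure an Elasticsearch cluster, import data, and visualize that data in Kibana. Let's get started.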

Log In to Elastic Cloud

- Go to https://www.elastic.co/cloud/elasticsearch-service and sign up for a free trial.

- You will receive a confirmation email. Open it and follow the instructions to start your trial.

- Log in to your Elastic Cloud account.

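Create Your First Hosted Elasticsearch Cluster

- Once you have signed up, go to the [Cluster] tab and create a cluster.

- Select a cluster size. This example uses a cluster with 4 GB of memory and 96 GB of storage.

- Select a region close to your location. This example uses US East.

- Select the replication level. This example uses a single data center.

- Click [Create] to provision the cluster. Provisioning starts, and you are notified when it completes.

- Save the password for the elastic user that appears in the pop-up. You will need it later.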

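Enable Kibana

Next, enable Kibana so that you can configure access and security for the cluster.

- To enable Kibana, click the [Configuration] link and scroll down to the "Kibana" section.

- Wait for the cluster to finish provisioning. An Enable button then appears in the "Kibana" section.

- Click the Enable button.

- Click the link to open Kibana in a new tab. Enabling Kibana can take a little while, and you may see an "unavailable" response in the meantime. If that happens, wait a few seconds and reload the page.

- Log in to Kibana with the username "elastic" and the password you copied during the initial provisioning step.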

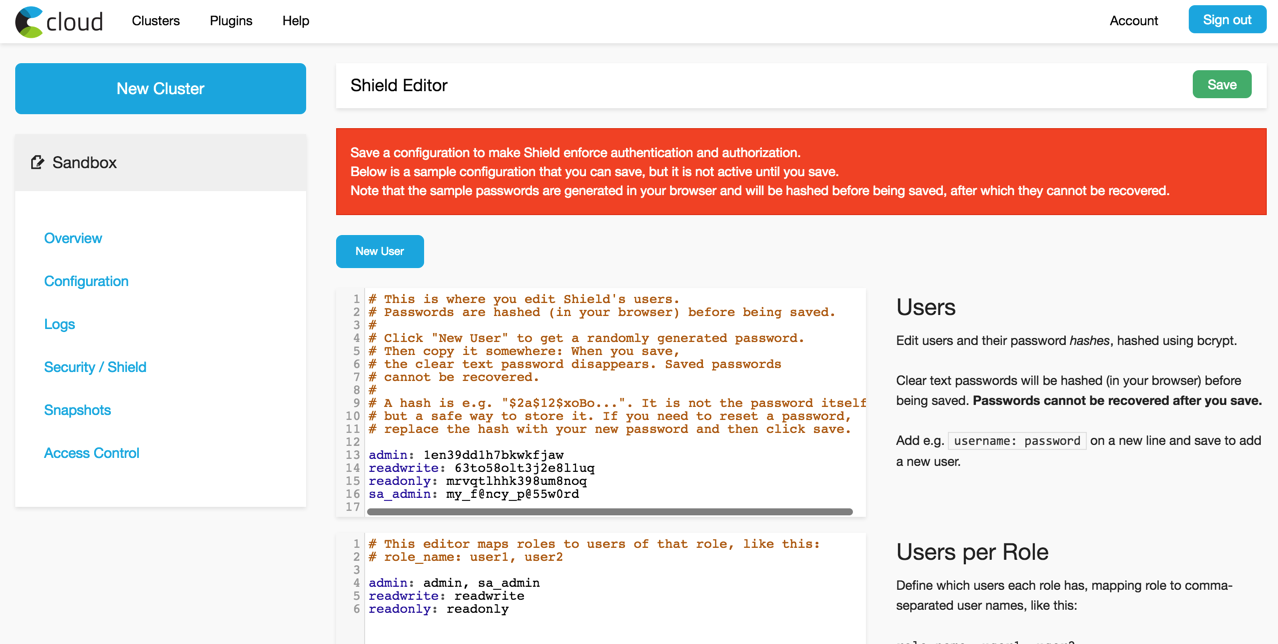

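Secure Your Cluster

Next, configure access and security for the cluster using the X-Pack security module included with your Elastic Cloud account.

- Log in to the Kibana interface with the username "elastic" and the password you copied during the initial provisioning step.

- Create additional users and/or set the usernames and passwords of existing users. This example creates a new user named "sa_admin".

- If you add new users, assign a role to each of them. This example assigns the superuser role to the sa_admin user.

- Specify a password for the user. This example uses a strong password.

- Remember the username and password you added, and store these credentials in a secure place. Alternatively, write them down on a sticky note.

You can follow the same steps to update passwords or add more users. You can also use the new security API introduced in 5.5, following the instructions in our documentation.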

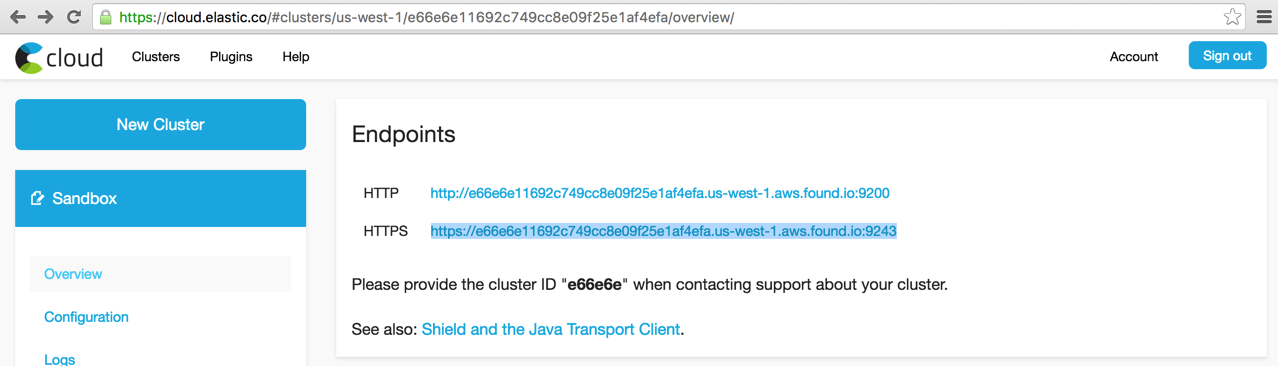

Elasticsearch Endpoint

After you log in to Kibana, the [Discover] tab is shown first. There is no data to visualize yet, however. The next task is to load data into Elasticsearch, so let's get some data ready!

- In the Elastic Cloud console, click the [Overview] link. The endpoints listed there are used for API access to your cluster.

- Click the https link to view the cluster. Copy the https URL; you will use it in the steps below.

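Import Data

Now load some data into the Elasticsearch cluster to see the Elastic Stack in action. If you do not have a sample dataset of your own, use one of the data samples in the GitHub Examples repo. This example uses the Apache logs sample and Logstash (download the version that matches your system). To ingest the logs into your hosted Elasticsearch cluster, you need to modify the Elasticsearch output section of the Logstash config provided with that example.

1. Download the repository and change to the directory that contains the apache_logstash.conf file. In the config, replace the hosts endpoint with the endpoint of your own cluster (copied in the previous step).

2. Change the username and password to those of a user account with write access, as configured when you secured the cluster. This example uses the sa_admin user added earlier.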

    elasticsearch {
      # https endpoint of your cluster, copied from the Elastic Cloud console
      hosts => "https://e66e6e11692c749cc8e09f25e1af4efa.us-west-1.aws.found.io:9243/"
      # Credentials of a user with write access (example values)
      user => "sa_admin"
      password => "my_f@ncy_p@55w0rd"
      index => "apache_elastic_example"
      template => "./apache_template.json"
      template_name => "apache_elastic_example"
      template_overwrite => true
    }

3. Run the following command to index the data into Elasticsearch via Logstash.

    cat ../apache_logs | <Logstash_Install_Dir>/bin/logstash -f apache_logstash.conf

4. It is convenient to store the endpoint URL in an ES_ENDPOINT environment variable. Here is how I do it for some of my tests.

    export ES_ENDPOINT=https://somelongid.us-east-1.aws.found.io:9243
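The endpoint in the Logstash config above ends with a trailing slash, while the exported value does not, so appending an API path by hand can produce a double slash. As a minimal sketch, the helper below joins the endpoint and a path with exactly one slash. The name `es_url` is hypothetical (it is not part of Elasticsearch or curl), and the endpoint is the placeholder from this step with a trailing slash added for illustration.

```shell
# es_url is a hypothetical helper, not part of Elasticsearch or curl.
# It joins $ES_ENDPOINT and an API path with exactly one slash between them.
es_url() {
  # ${ES_ENDPOINT%/} drops one trailing slash; ${1#/} drops one leading slash.
  printf '%s/%s\n' "${ES_ENDPOINT%/}" "${1#/}"
}

# Placeholder endpoint from the step above; substitute your own cluster URL.
export ES_ENDPOINT=https://somelongid.us-east-1.aws.found.io:9243/
es_url /apache_elastic_example/_count
# prints https://somelongid.us-east-1.aws.found.io:9243/apache_elastic_example/_count
```

The result can be passed straight to curl, for example `curl --user sa_admin:s00p3rS3cr3t "$(es_url /_cat/indices)"`.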

5. You can verify that the data is there at ES_ENDPOINT/apache_elastic_example/_count, where ES_ENDPOINT is the Elasticsearch endpoint URL. The count should be 10000. If the environment variable above is set, you can run the check with the following curl command (supply the user parameter for basic authentication against the https endpoint).

    %> curl --user sa_admin:s00p3rS3cr3t ${ES_ENDPOINT}/apache_elastic_example/_count
    {"count":10000,"_shards":{"total":5,"successful":5,"failed":0}}
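The count check in step 5 can also be scripted. The following is a minimal sketch that assumes the compact, single-line JSON shown above. `extract_count` is a hypothetical helper built on sed; a real JSON parser such as jq would be more robust if it is available. The response is hard-coded here so that the snippet runs without a cluster.

```shell
# extract_count is a hypothetical helper: it reads a _count response on stdin
# and prints the numeric value of the "count" field.
extract_count() {
  sed -n 's/.*"count":\([0-9][0-9]*\).*/\1/p'
}

# Sample response copied from the step above; in practice, pipe curl's output in.
response='{"count":10000,"_shards":{"total":5,"successful":5,"failed":0}}'
count=$(printf '%s\n' "$response" | extract_count)

# Treat a missing count as 0 so the numeric comparison never sees an empty string.
if [ "${count:-0}" -eq 10000 ]; then
  echo "OK: $count documents indexed"
else
  echo "Unexpected document count: ${count:-none}" >&2
fi
```

Against a live cluster, the hard-coded response would be replaced by the curl call from step 5 piped into `extract_count`.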

6. You can check the health of the cluster at ES_ENDPOINT/_cat/indices. Look for the apache_elastic_example index, which is listed along with its statistics. The v parameter adds the header row to the output.

    %> curl --user sa_admin:s00p3rS3cr3t "${ES_ENDPOINT}/_cat/indices?v"
    health status index                  pri rep docs.count docs.deleted store.size pri.store.size
    yellow open   .kibana                  1   1          2            0     19.1kb         19.1kb
    yellow open   apache_elastic_example   1   1      10000            0      7.3mb          7.3mb
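Both indices above report yellow health. In Elasticsearch, yellow means that all primary shards are allocated but at least one replica is not. That is expected here if the single-data-center cluster has only one node (an assumption about this setup), because a replica cannot be placed on the same node as its primary. As a small sketch, the hypothetical helper `non_green_indices` below filters the listing with awk. It assumes that the header row is present and that the health and index name are the first and third columns, as in the sample output.

```shell
# non_green_indices is a hypothetical helper: it reads _cat/indices output
# (including the header row) on stdin and prints "index: health" for every
# index whose health column is not green.
non_green_indices() {
  awk 'NR > 1 && $1 != "green" { print $3 ": " $1 }'
}

# Sample listing copied from the step above; in practice, pipe curl's output in.
non_green_indices <<'EOF'
health status index                  pri rep docs.count docs.deleted store.size pri.store.size
yellow open   .kibana                  1   1          2            0     19.1kb         19.1kb
yellow open   apache_elastic_example   1   1      10000            0      7.3mb          7.3mb
EOF
```

An empty result would mean that every listed index is green.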

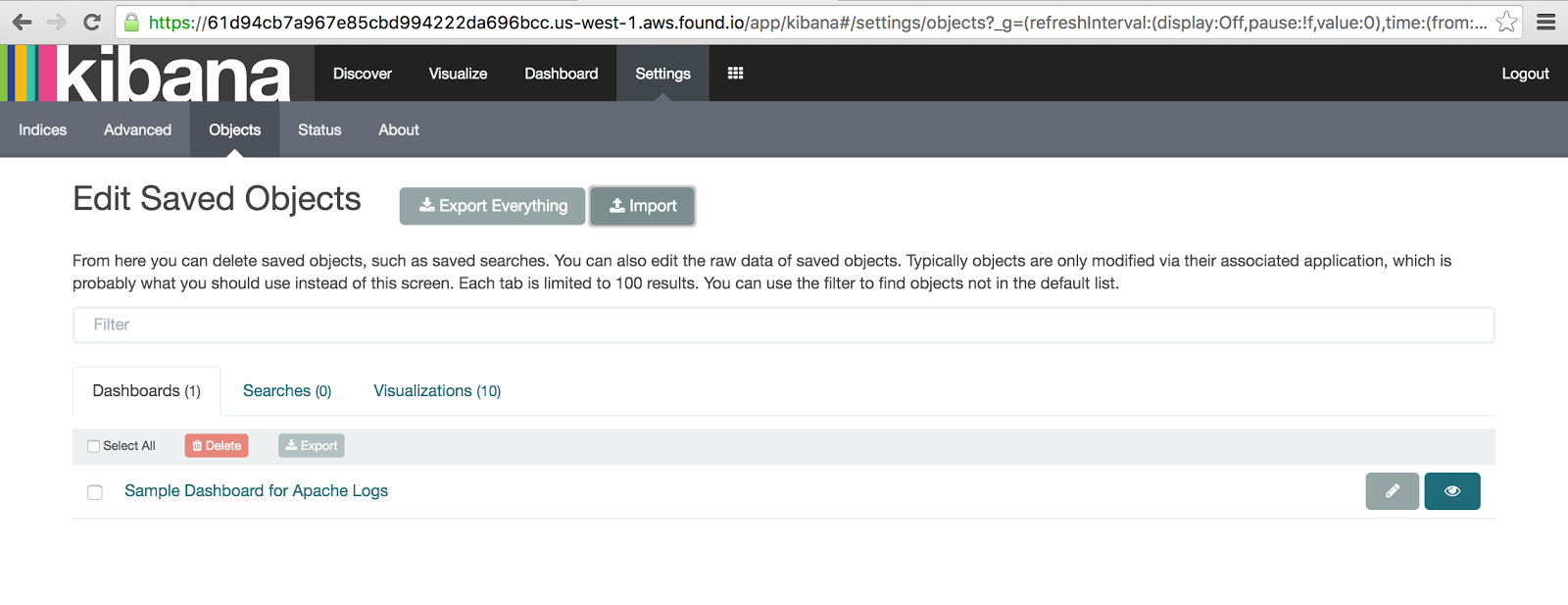

Visualize Data

Now go to your Kibana instance and continue with the example's instructions for visualizing the data.

1. Go to the "Index Patterns" option on the [Management] tab, add the apache_elastic_example index to Kibana, and click [Create].

2. Select the apache_elastic_example index on the [Discover] tab to view your data.

3. Import the sample dashboard by clicking Management > Saved Objects > Import and selecting the apache_kibana.json file.

4. View the newly imported dashboard: go to the [Dashboard] tab on the left and select the sample Apache logs dashboard.

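Summary

You have now set up a cluster, imported sample data, and viewed your first saved dashboard! The sample Apache log data has been ingested into Elasticsearch, and you can now start gaining insight and value from your own logs. From here, you can explore other sample datasets in the Examples repo and the Kibana getting started guide. You can also start sending your own data with Logstash or Beats.

Here are some other useful links to help you work with the Elastic Stack in the cloud.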

- Cloud https://www.elastic.co/guide/en/cloud/current/index.html

- Kibana https://www.elastic.co/guide/en/kibana/4.5/index.html

- Security https://www.elastic.co/guide/en/shield/2.3/index.html

- Elasticsearch https://www.elastic.co/guide/en/elasticsearch/reference/2.4/index.html

- Beats https://www.elastic.co/guide/en/beats/libbeat/1.3/index.html

- Logstash https://www.elastic.co/guide/en/logstash/2.4/index.html

- The Definitive Guide https://www.elastic.co/guide/en/elasticsearch/guide/current/index.html

You can also continue with official training from our world-class education engineers: https://www.elastic.co/training