Upkeep & Maintenance

Mr. Turing migrated to Microsoft Azure to enable the organization to stay on the latest, official version of Elastic.

Full compatibility

Highly customizable

Elastic allows Mr. Turing to be quick, fast, and reliable while enabling the organization to index documents and vectors very quickly.

Meet Alan: He reads. He learns. You ask. He answers.

Mr. Turing is a Brazilian company named after Alan Turing, the famous mathematician and computer scientist. Alan, Mr. Turing's software-as-a-service product, solves a problem faced by people at virtually every company: finding the best answer to their questions within a large set of documents. Alan meets this need by using artificial intelligence to "read" a set of documents, employing computer vision and natural language processing (NLP) to learn and understand the information they contain, and then storing that knowledge as mathematical vectors within a database. People's questions are similarly vectorized, after which the database, which serves as Alan's equivalent of a human memory, is searched for the closest match.

When company founder and CTO Marco Aleixo formed Mr. Turing in 2016, he knew that he needed to go "cloud native" from the start to achieve the scalability needed to run Alan's deep neural networks.

He initially chose a cloud provider that was popular with open-source developers and, after experimenting with a few NoSQL databases, chose Elasticsearch as Alan's main data store. The reasons for this choice included Elasticsearch's superior scalability, its powerful search tools, and its popularity and support within the open source community.

"Elastic enables us to index documents and vectors very quickly," says Juliana Resplande, an AI Engineer at Mr. Turing. "Elastic is great because it's highly customizable. Every startup needs to be quick, fast, and reliable - Elastic allows for that."

Everything worked fairly well until April 2021, when the company's cloud provider announced it would no longer support the official, ongoing versions of Elastic. "I wanted to stay on the 'real' version of Elastic for several reasons, including compatibility, management tools, documentation, access to community support, and planned new features," says Aleixo.

Elastic on Azure – an ideal solution

The answer to Aleixo's dilemma came a month later, in May 2021, when Elastic and Microsoft announced they had partnered to deliver Elastic on Azure. After confirming that Azure offered the other managed services he would need, such as those for storing customer documents, running serverless functions, and managing Kubernetes clusters, Aleixo chose the fully managed Elastic Cloud service over the self-managed option and was quickly up and running. He also joined the Microsoft for Startups program, which gave him access to Azure credits, development tools, technical guidance, and other resources.

"The more I learned about Azure, the more I was convinced that it was a better all-around fit for us," says Aleixo. "Not only did Azure offer all the managed services I needed, including the 'real' version of Elasticsearch, but I was also impressed by the direction Microsoft was headed with OpenAI."

Mr. Turing started its migration to Azure in the summer of 2021. Within a few days, the company had deployed Elastic on Azure and copied over its data. "It was really easy to migrate; the Azure documentation was very helpful," says Resplande. "And because Elastic on Azure is based on the official version of Elasticsearch, full compatibility was assured."

Tooling – Visual Studio Code and GitHub Enterprise

Developers were already using Visual Studio Code to program in JavaScript and Python, so they didn't have to learn a new IDE. Similarly, they were able to keep using all of their existing Python packages, frameworks, and other tools—including GeneXus for low-code software development, Hugging Face for managing NLP models, Django and FastAPI for building and deploying web APIs, and TensorFlow and PyTorch for building, training, and deploying neural networks.

"We love Visual Studio Code because it's so easy to use," says Aleixo. "It's lightweight, it's open-source, and it's the most powerful IDE available for coding in Python. Best of all, it's extensible. We use a lot of Visual Studio code extensions for developing in Python—for example, to detect coding errors and help us work more quickly."

Adds Resplande, "I didn't use Visual Studio Code before I joined Mr. Turing last year. When I joined the company, everybody told me 'Use Visual Studio Code… you're going to love it.' I started using Visual Studio Code a year ago and now I'm addicted. It's the future."

Source code resides in GitHub Enterprise, which also hosts the company's CI/CD pipeline. Within that pipeline, the company is using GitHub Actions for automation—including deployment to Azure. "We need to keep our code safe, and GitHub lets us lock it down against unauthorized access," says Aleixo. "We're using GitHub Actions to create automated pipelines that we can run to test our code quality and perform other tasks that we need to repeat every time a change is made."

Moving forward, Aleixo plans to adopt GitHub Advanced Security to create a modern 'DevSecOps' environment that puts security front and center throughout the software supply chain. Security scans performed by GitHub Advanced Security include:

- Code scanning - searching for potential vulnerabilities and coding errors, such as vulnerability to a SQL injection attack. These scans are powered by CodeQL, an industry-leading semantic code analysis engine developed by GitHub.

- Secret scanning - checking for keys, tokens, and other secrets that may have been checked into a repo.

- Dependency review - showing the full impact of changes to dependencies and the details of any vulnerable versions—including automated fixes to many vulnerable dependencies. (Dependency review also covers open source, as described in this article.)
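To make the first item concrete, here is a minimal sketch of the kind of pattern a code scanner is meant to flag: a SQL query built by string concatenation, next to a parameterized version. The table, column names, and function names are hypothetical and are not taken from Mr. Turing's code base; the example uses Python's built-in `sqlite3` module purely for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("ana", "admin"), ("bruno", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    db = make_db()
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(db, payload)))  # 2: the injected OR matches every row
    print(len(find_user_safe(db, payload)))    # 0: no user has that literal name
```

The injected input turns the unsafe query's `WHERE` clause into a condition that is always true, which is why it returns every row, while the parameterized query treats the same input as an ordinary string.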

"We use a lot of open-source packages, so we need to follow each version of each package to make sure that we don't install any malicious code," says Aleixo. "Having such automated security checks baked-into our workflows will be a huge benefit; we're planning to use all that GitHub Advanced Security has to offer."

Together, the combination of Visual Studio Code and GitHub provides a powerful collaboration toolset for developers at Mr. Turing, including features like integrated pull requests, commenting, and issue tracking from within Visual Studio Code. "I love how it lets me do everything I need to do remotely, which is important because I live 300 miles away from our office," says Resplande. "Half of our developers live in other states within Brazil, and we can still function as a single team, collaboratively edit documents, and more."

Developers' favorite feature, however, is GitHub Copilot, an AI assistant that helps them work faster by providing suggestions for code completion. Powered by Codex, the powerful new GPT-3 based machine learning model from OpenAI, GitHub Copilot is trained on billions of lines of public code and is accessed via an extension for Visual Studio Code. In addition to its autocomplete features, GitHub Copilot helps developers convert comments to code, can produce long repetitive code patterns given a few examples, can suggest tests to match code, and can even provide multiple options for developers to evaluate.

"As a startup, we need to work quickly," says Aleixo. "GitHub Copilot not only helps us write code faster, but it also helps us ensure code quality. It's the best coding assistant I've ever seen."

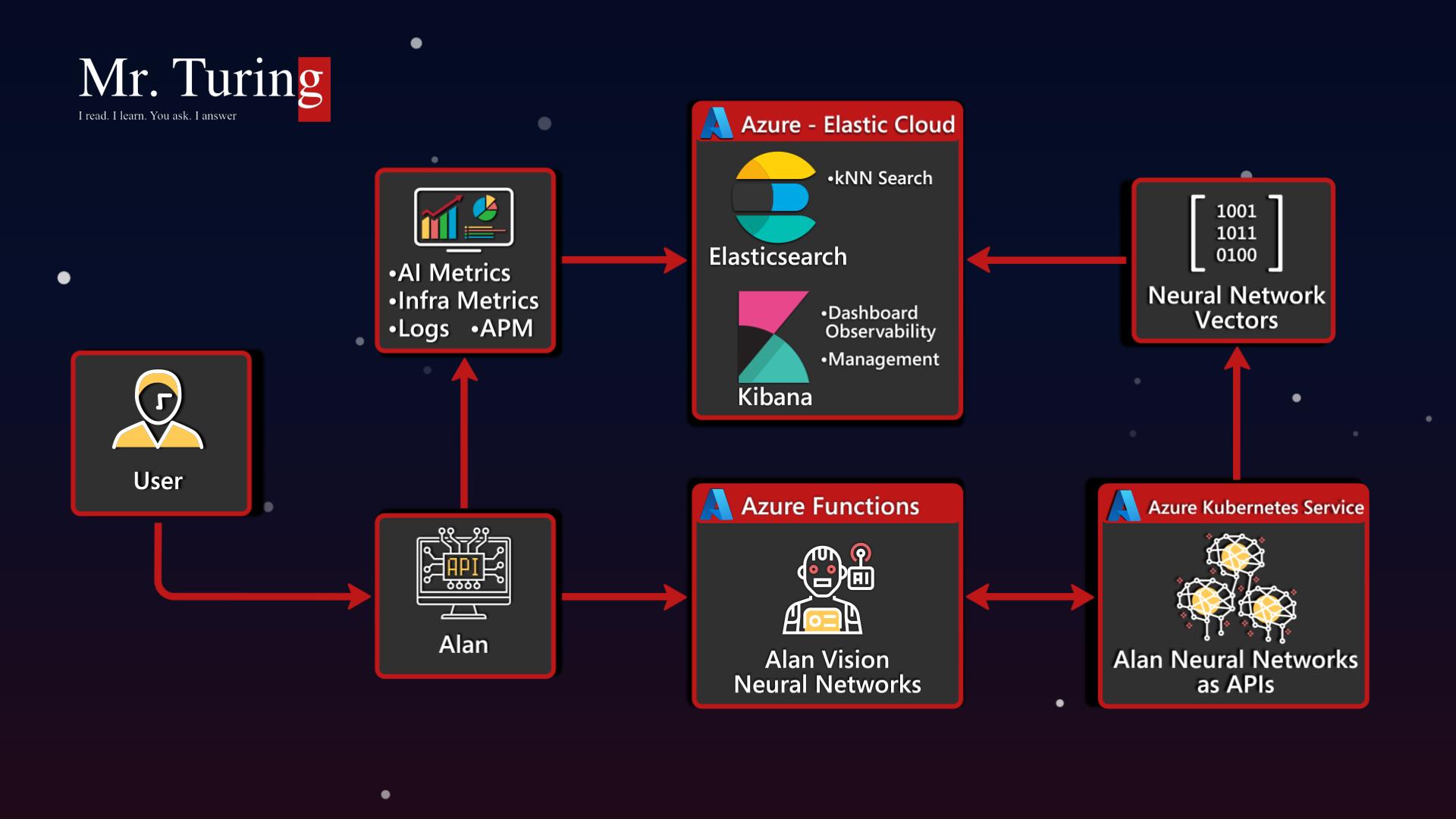

Architecture and data flow

Figure 1 shows the high-level architecture for Alan. Customers upload their documents via a web API or a web page, both of which are hosted within Azure Kubernetes Service. Bridge to Kubernetes, a feature of Visual Studio Code, allows developers to run and debug code on their development computers, while still connected to their Kubernetes cluster—for example, as needed to debug locally in a single pod that's part of an Azure Kubernetes Service (AKS) cluster.

Uploaded documents are then stored in Azure Blob Storage for processing. Currently, Alan can process Microsoft Word documents, PDF files, text files, and Excel spreadsheets. Developers are working on adding support for .srt (subtitle) files for videos.

Figure 1. Processing pipeline for Alan.

The processing pipeline consists of two main steps. First, the document is examined by a deep neural network that uses computer vision to determine its component parts—such as titles, subtitles, headings, logos, tables, and paragraphs. These neural networks are hosted in Azure Functions, enabling Alan to scale out and process thousands of documents at a time.

Second, after Alan develops a "picture" of everything a document contains, the document flows into a second deep neural network that's hosted on Azure Kubernetes Service. This neural network uses NLP to examine each element identified within the document and convert it to a mathematical vector. On average, each page of a document generates 10 to 20 vectors, with each vector containing some 768 scalars, resulting in a vector size of about 3 KB. As the vectors are computed, they're stored in Elasticsearch as JSON documents.
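The vector size quoted above can be checked with a little arithmetic. The sketch below assumes each scalar is stored as a 4-byte (32-bit) float, which the article does not state, and uses hypothetical field names (`text`, `embedding`) for the JSON document.

```python
import json
import struct

DIMS = 768  # scalars per vector, as stated in the text
BYTES_PER_SCALAR = struct.calcsize("<f")  # 4 bytes; float32 storage is an assumption


def vector_bytes(dims: int = DIMS) -> int:
    """Raw size of one vector in bytes."""
    return dims * BYTES_PER_SCALAR


def page_bytes(vectors_per_page: int) -> int:
    """Raw vector payload produced by one page of a document."""
    return vectors_per_page * vector_bytes()


def to_json_document(text: str, vector: list) -> str:
    """Serialize one passage and its vector; the field names are hypothetical."""
    return json.dumps({"text": text, "embedding": vector})


if __name__ == "__main__":
    print(vector_bytes())                   # 3072 bytes, i.e. 3 KiB
    print(page_bytes(10), page_bytes(20))   # 30720 61440
    doc = to_json_document("Sample heading", [0.0] * DIMS)
    print(len(json.loads(doc)["embedding"]))  # 768
```

Under these assumptions, a page that yields 10 to 20 vectors contributes roughly 30 KB to 60 KB of raw vector data. The JSON text form is typically larger than the raw binary size, because each float is written out as decimal digits.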

"When customers send us documents, we need to process them fast," says Vinícius Oliveira, Research and Development Lead at Mr. Turing. "Azure Functions and Azure Kubernetes Service both deliver great scalability, enabling us to process many documents in parallel to keep our customers happy—without paying for spare capacity when we don't need it."

Customers ask their questions through another web interface hosted in Azure Kubernetes Service. Those questions are vectorized in a manner similar to the documents, using NLP, upon which the Elasticsearch k-nearest neighbor (kNN) search API is called to find the best match to the question within the database.
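As a rough illustration of this step, the sketch below builds a request body in the shape used by Elasticsearch's kNN search (`field`, `query_vector`, `k`, `num_candidates`) and ranks a few toy passages by brute force to show what "closest match" means. The field name `embedding`, the sample passages, the 3-dimensional vectors, and the use of cosine similarity are all assumptions for illustration; the article does not say which similarity metric or index mapping Alan uses, and no request is actually sent.

```python
import math


def build_knn_body(query_vector, k=1, num_candidates=50, field="embedding"):
    """Request body for a kNN search; the field name is hypothetical."""
    return {
        "knn": {
            "field": field,
            "query_vector": list(query_vector),
            "k": k,
            "num_candidates": num_candidates,  # must be at least k
        }
    }


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def nearest(query_vector, passages, k=1):
    """Brute-force stand-in for the search: return the k most similar passages."""
    ranked = sorted(
        passages,
        key=lambda p: cosine_similarity(query_vector, p["embedding"]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    # Toy 3-dimensional vectors stand in for the 768-dimensional ones.
    passages = [
        {"text": "Vacation policy", "embedding": [1.0, 0.0, 0.0]},
        {"text": "Expense reports", "embedding": [0.0, 1.0, 0.0]},
    ]
    question_vector = [0.9, 0.1, 0.0]
    print(build_knn_body(question_vector, k=1))
    print(nearest(question_vector, passages)[0]["text"])  # Vacation policy
```

A brute-force scan like `nearest` compares the question against every stored vector, which is fine for a toy list. For a large index, the kNN search uses an approximate method instead, with `num_candidates` controlling how many candidates are considered on each shard.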

Alan uses Elastic APM for application performance monitoring and Elastic Filebeat for log analysis. Data from both sources flows into Kibana, a visualization dashboard that's included with Elastic on Azure, which Mr. Turing uses to monitor activity within Alan's processing pipeline. The company uses Azure Load Balancer for load balancing and uses Log Analytics—a feature of Azure Monitor—to monitor activity within Azure Functions.

Developers use powerful GPU-powered Azure VMs such as the NDv2-series to train their models. Each VM has 8 NVIDIA Tesla V100 NVLINK-connected GPUs, each with 32 GB of GPU memory. Each NDv2 VM also has 40 non-hyperthreaded Intel Xeon Platinum 8168 (Skylake) cores and 672 GiB of system memory. Even with such massive processing power, it can still take 3-4 days to train one of the company's deep neural network models.

2,000 questions and answers per day, and growing

Mr. Turing's customers have collectively fed some 60,000 documents into Alan. Altogether, his Elasticsearch database is about 300 GB in size, representing 1 million pages of knowledge that he uses to answer some 2,000 questions per day.

"Alan can read, organize, assimilate, and determine relations across thousands of pages of information, in just a few seconds," says Marcelo Noronha, CEO at Mr. Turing. "He can also do semantic correlation, registering multiple variants of the same question to help people find what they need—even if they describe what they're looking for using different terminology than what's contained in the documents."

While Alan is busy providing users with fast and accurate answers to their questions, the company's move to Azure has enabled Mr. Turing to better meet its own needs—starting with the company's desire to continue using the official version of Elasticsearch. What's more, with fully managed Azure services, developers at Mr. Turing can stay focused on building the company's leading-edge AI assistant instead of managing IT infrastructure.

The move to Azure has yielded other benefits, as well. "On Azure, Alan is twice as fast and less costly to operate compared to when he was running on our previous cloud provider," says Noronha. "In addition, because of the strong integration between Azure, GitHub, and Visual Studio Code, we can deliver new features faster than we could before."

Adds Aleixo, "The great support provided by Microsoft has increased our confidence when it comes to taking risks and trying new things. Support for open source on Azure has been great, and the many ways Microsoft is continuing to innovate with open source will help us make Alan even smarter and faster. We're excited to start experimenting with the native support for natural language processing in Elastic. We're also excited about the new Hugging Face Endpoints service on Azure, which would give us a way to further streamline model deployment. We're equally eager to try the new Azure OpenAI Service, which will let us run OpenAI GPT-3 models on Azure."

Concludes Noronha, "Our ultimate goal, the vision we all share at Mr. Turing, is to make Alan as intelligent and helpful as Jarvis, Iron Man's virtual assistant in the Avengers movies. With Azure and Elastic, we're confident that we'll get there."