Guided demo

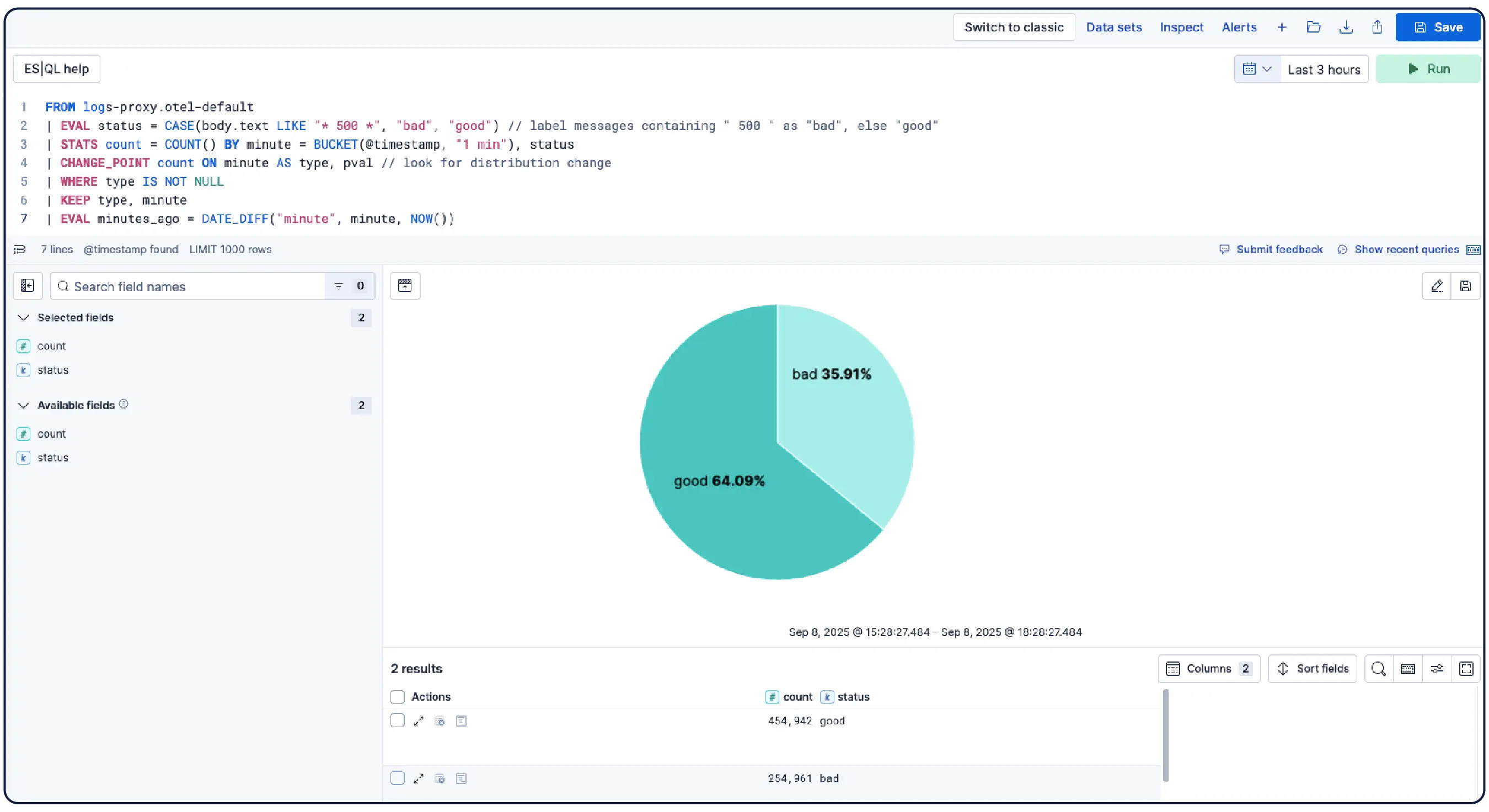

From raw logs to real answers

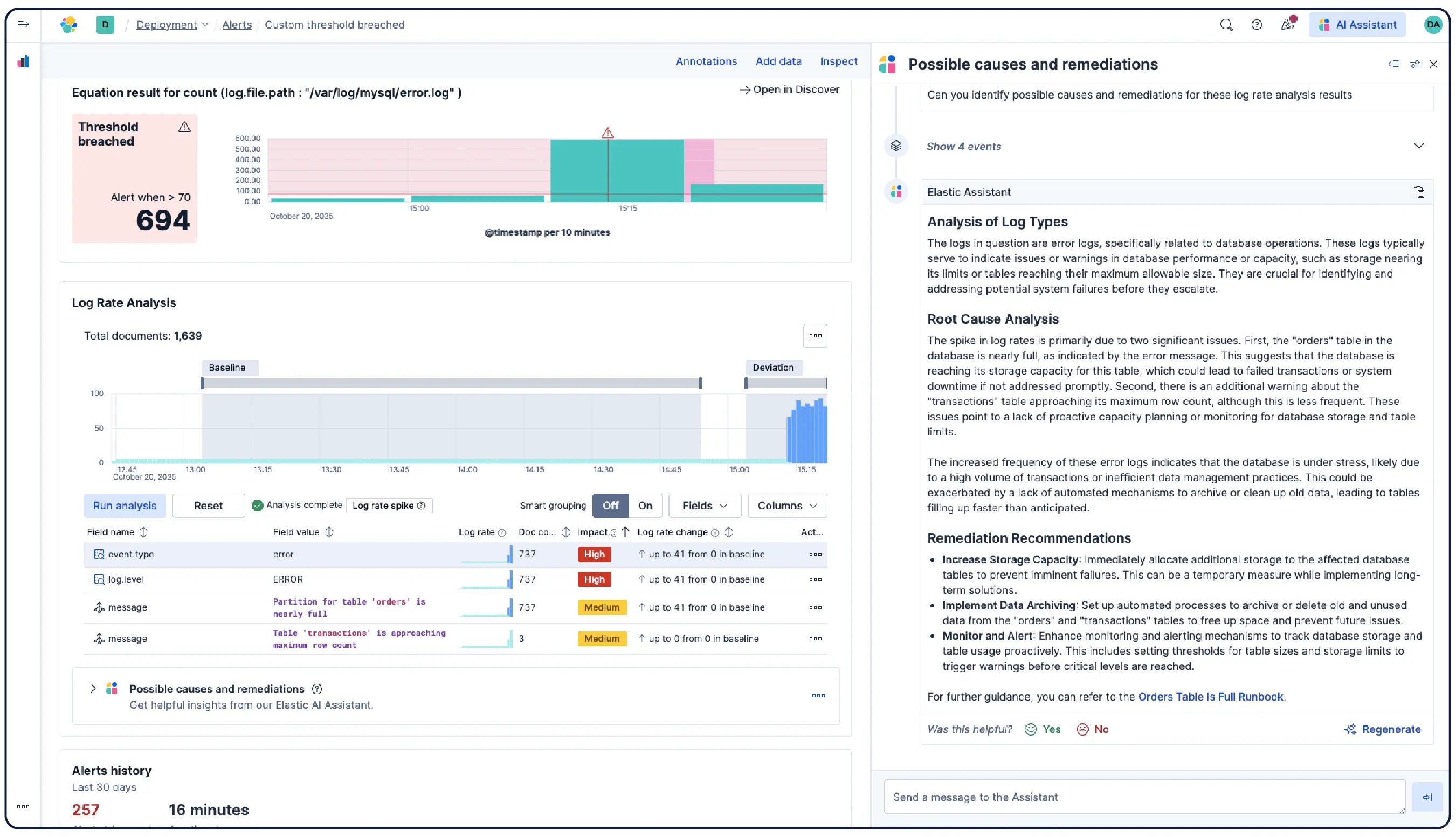

Logs tell you what happened. Elastic helps you understand why.

Features

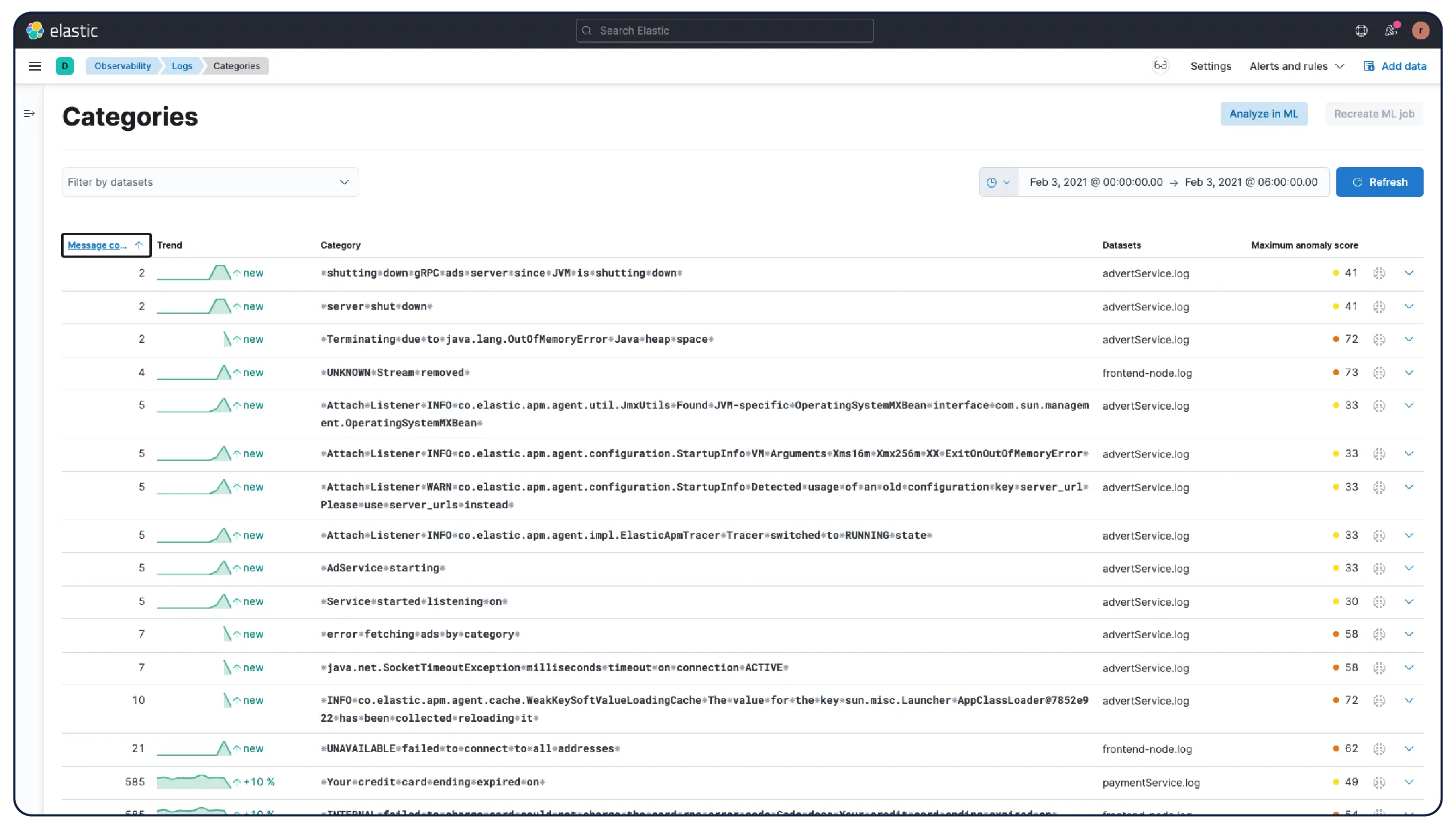

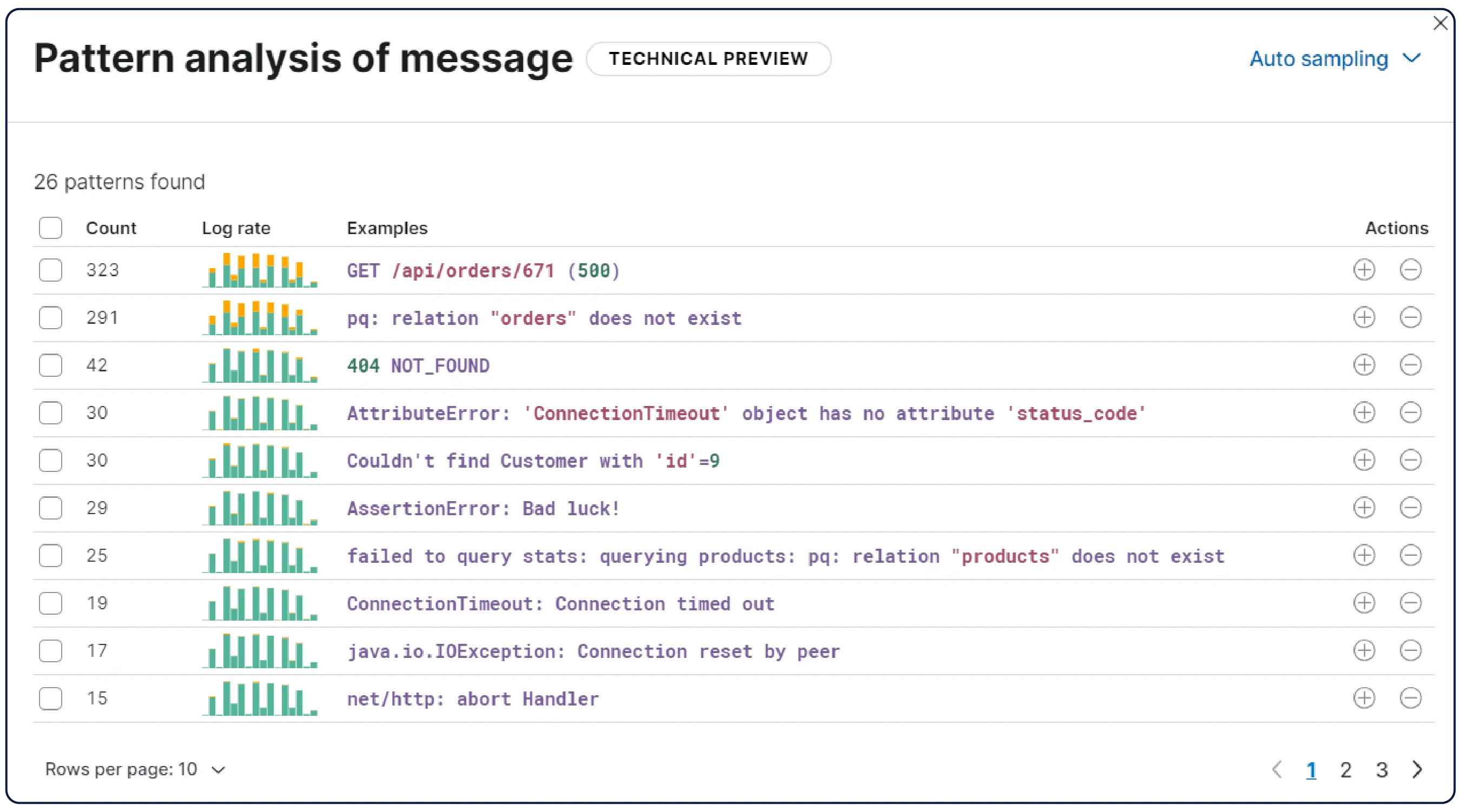

Billions of logs, one clear picture

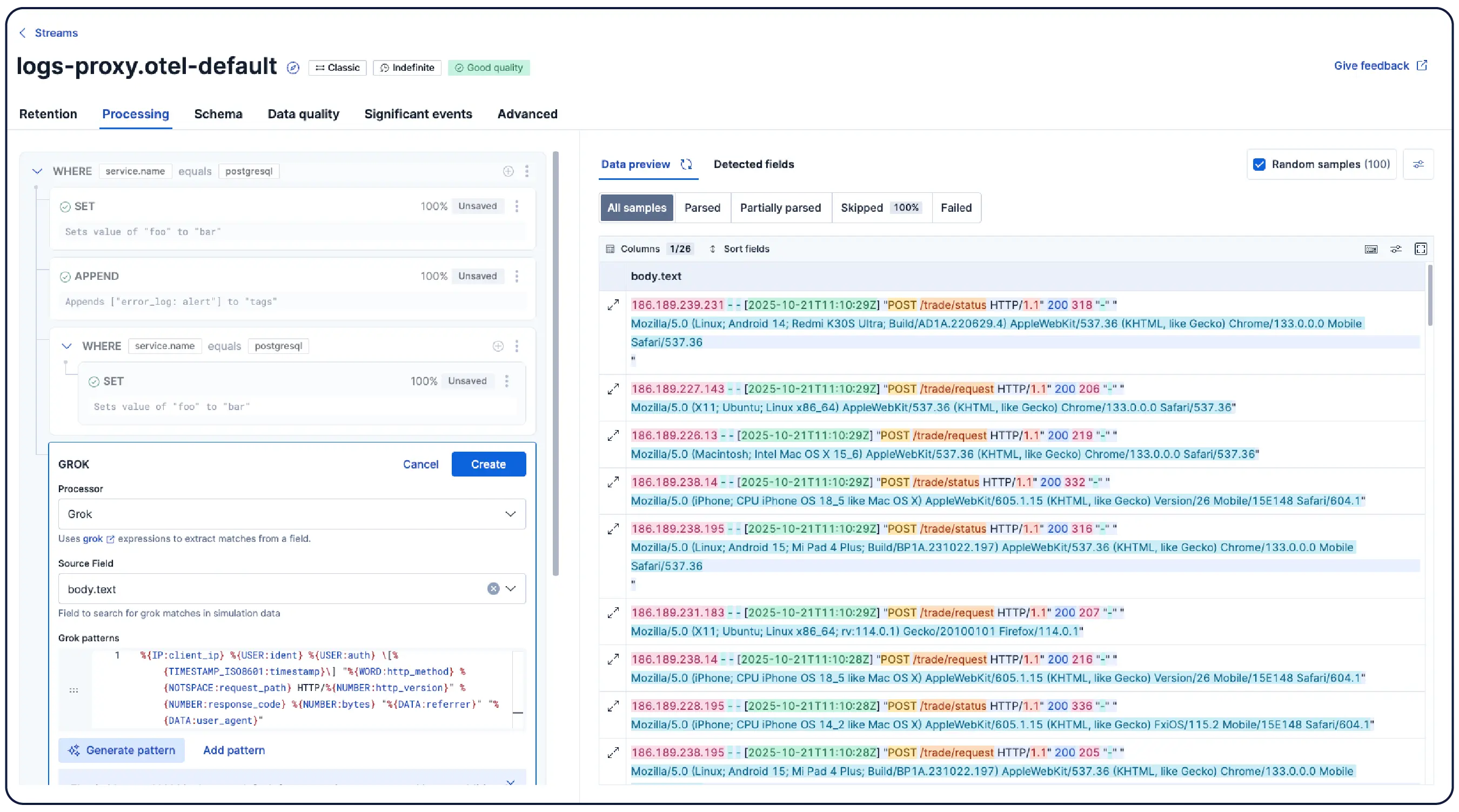

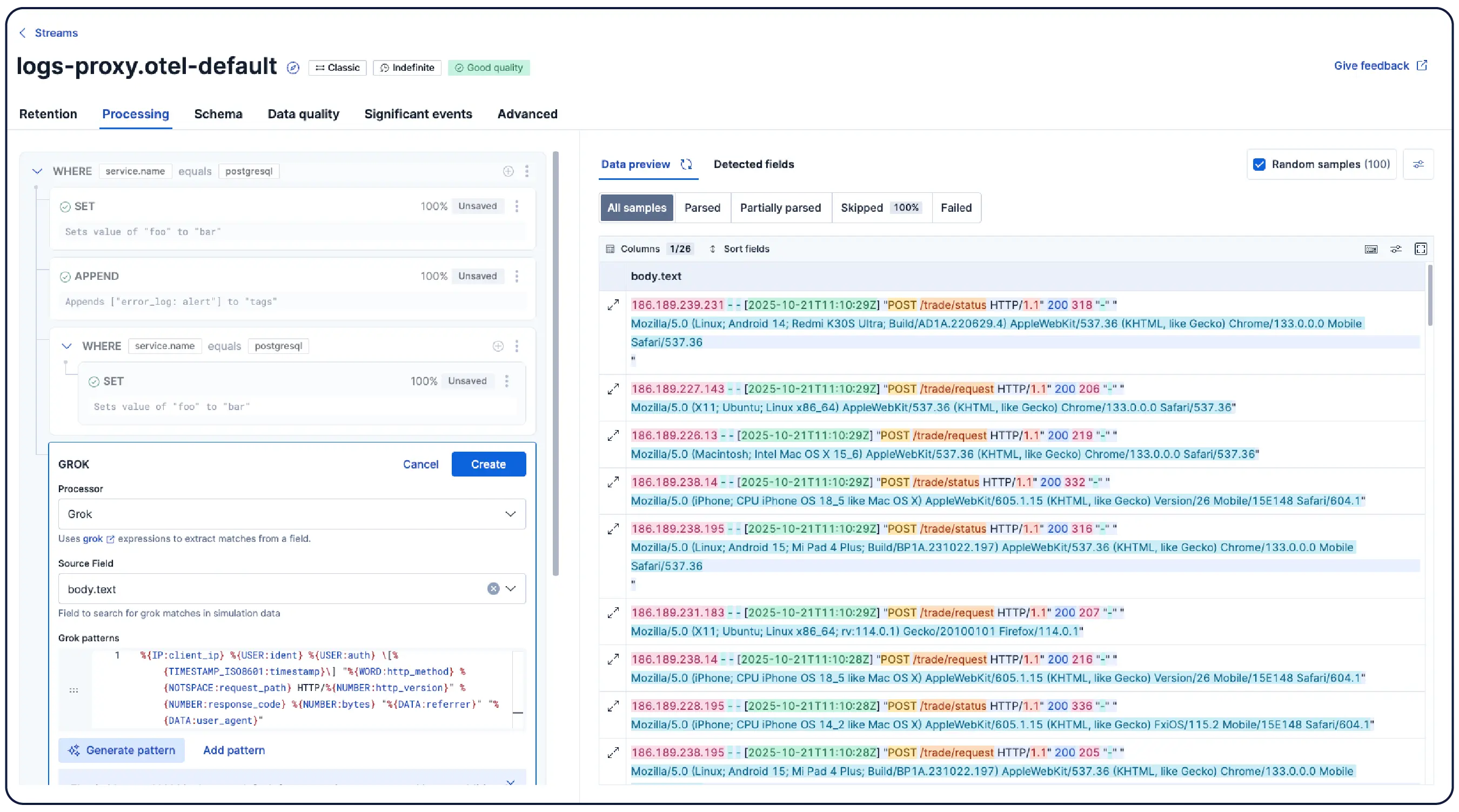

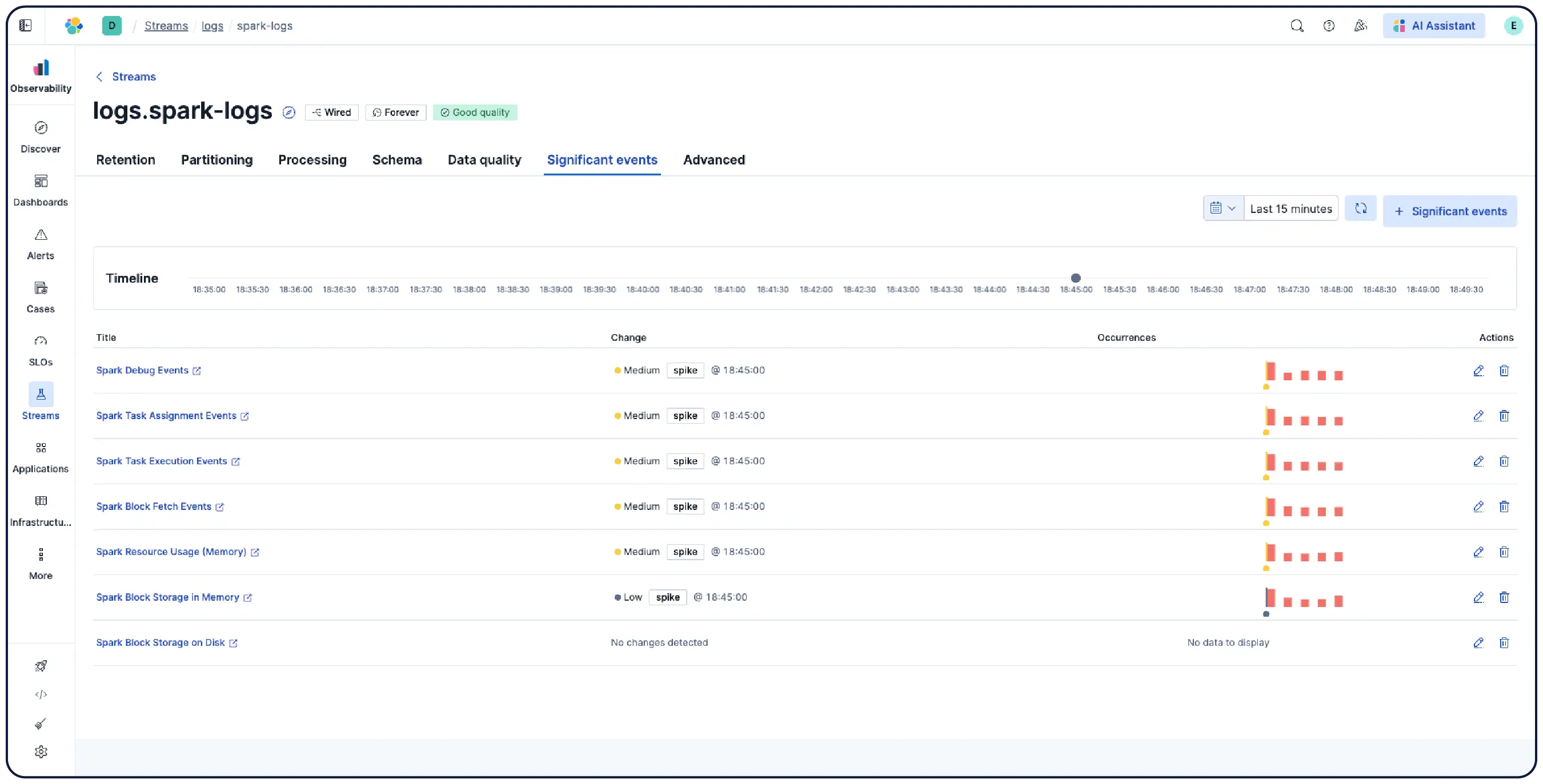

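Elastic ingests logs from any source, automatically categorizes them into patterns, highlights anomalies, and pinpoints sudden spikes, so you get answers instead of drowning in data.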

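Automatically organize data into logical streams and apply parsing, partitioning, field extraction, and lifecycle policies with almost no manual setup.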

See why companies like yours are choosing Elastic Observability

Turn messy data into actionable insights with scalable log analytics.

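Customer spotlight

Comcast ingests 400 TB of data per day with Elastic to monitor services and accelerate root cause analysis, ensuring a first-class customer experience.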

Customer spotlight

By implementing a centralized logging platform with Elastic, Discover cut storage costs by 50% and shortened data retrieval times.

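Customer spotlight

Informatica migrated its entire logging workload to Elastic, spanning more than 100 applications and more than 300 Kubernetes clusters, which lowered costs and reduced MTTR.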
