Implementing Japanese autocomplete suggestions in Elasticsearch

Autocomplete suggestions are an important part of a great search experience. However, autocomplete can be difficult to implement in some languages, which is the case with Japanese. In this blog, we’ll explore the challenges of implementing autocomplete in Japanese and demonstrate some ways you can overcome these challenges in Elasticsearch.

What’s different about autocomplete in Japanese?

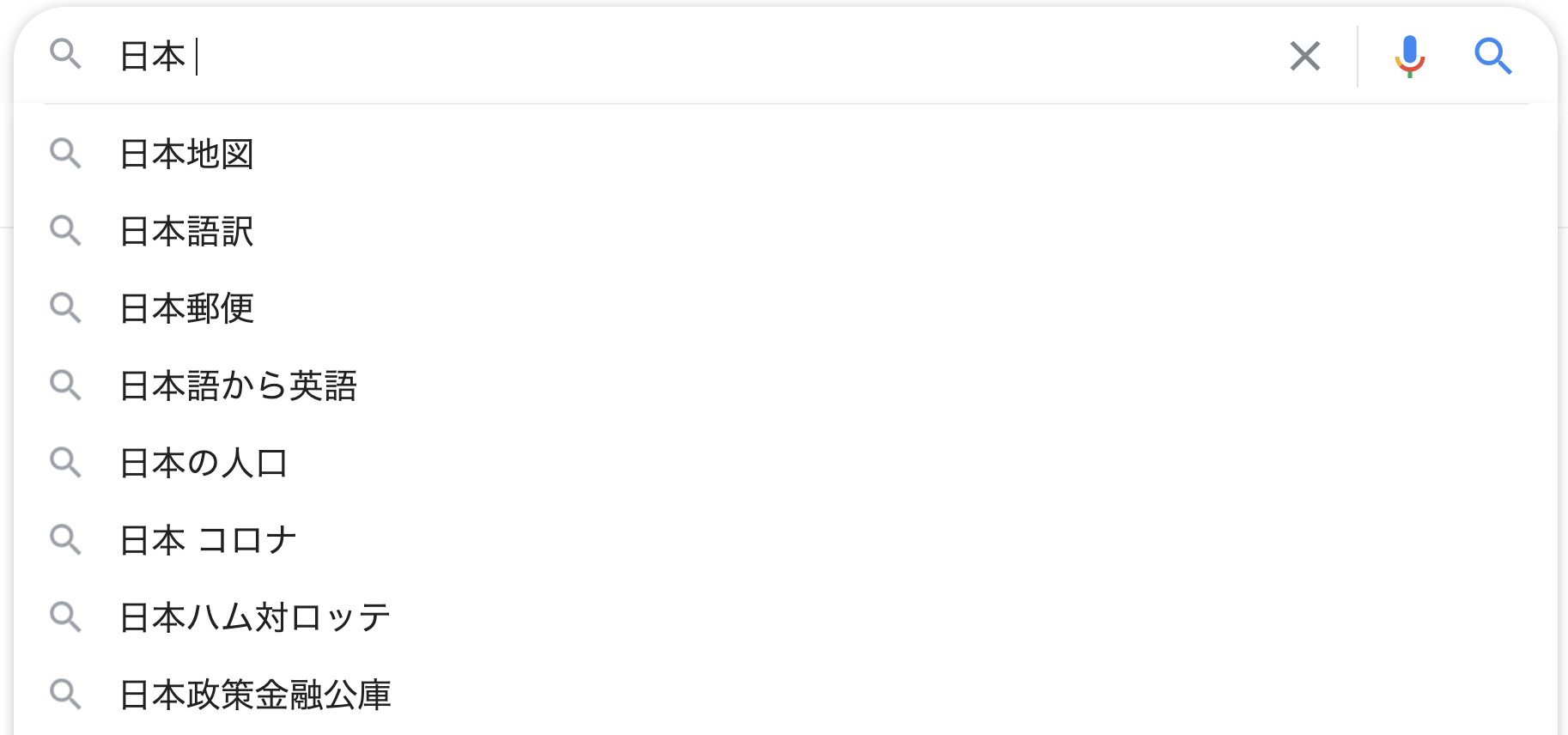

Here is a typical list of autocomplete suggestions from Google. In this example, the keyword is "日本" (Japan).

Several factors make the implementation of autocomplete for Japanese more difficult than English.

Word breaks don’t depend on whitespace

A word break analyzer is required to implement autocomplete suggestions. In most European languages, including English, words are separated with whitespace, which makes it easy to divide a sentence into words. However, in Japanese, individual words are not separated with whitespace. This means that, to split a Japanese sentence into individual words, morphological analysis is required.

Need to consider both kanji and reading

The Japanese writing system is made up of two syllabaries, hiragana and katakana (known collectively as kana), as well as logographic Chinese characters called kanji. A single kanji can have several different readings, or pronunciations. Therefore, when implementing autocomplete, it is necessary to consider the reading of a kanji as well as the kanji character itself.

Typically, when typing in Japanese, the user will input letters of the alphabet (romaji), which convert to kana and then to kanji as the user continues to type — for example, typing k and then e will result in the kana character け, which is pronounced “ke.” When implementing autocomplete, it is necessary to consider incomplete input that has not successfully converted to kana during typing. For example, for the query "けんs" (kens-), autocomplete suggestions should include words such as "検索" (kensaku) and "検査" (kensa). In this case, converting the input "けんs" to romaji (kens-) provides the stem necessary to offer these autocomplete suggestions.

One more consideration is that the reading of a kanji depends on whether it appears alone or with other characters. For example, the character "東" by itself is pronounced "higashi," but in the word "東京" (meaning “Tokyo”), the same kanji is pronounced "to." In general, when a user searches for "東," the autocomplete suggestion "東京" should be offered, since this is a very common word. However, this can't be achieved based on the reading of "東" alone — in this case, to provide the required autocomplete suggestions, it is necessary both to convert the text to romaji and to perform a search of the kanji character itself without converting to romaji.

Why not use an Elasticsearch suggester?

When designing an analyzer that meets these requirements, it is often necessary to prepare multiple analyzers. Therefore, using multiple fields is also required. However, Elasticsearch suggesters are only compatible with one field, which does not work well under the specific requirements for Japanese. To address this, it is necessary to use either multi fields or the mapping parameter copy_to.

Generally, the more complex the requirements — such as handling suggestions in the middle of the typing — the more difficult autocomplete will be to implement. Therefore, the use of Elasticsearch suggesters is generally not recommended for autocomplete suggestions in CJK languages (Chinese, Japanese, and Korean).

Instead, in most cases, it is better to use a regular search query.

Let's look at an example of Japanese autocomplete

Before going into the detailed explanation, let's first look at an example of implementing autocomplete suggestions in Japanese.

Main requirements

- When a user enters a search keyword, related suggestions will be displayed. Example: If you enter "日本," then "日本," "日本 地図," "日本 人口," etc. will be suggested.

- If you enter an incomplete search keyword, related suggestions will still be displayed. Example: If you enter "にほn," then "日本," "日本 地図," "日本の人口," etc. will be suggested.

- Even if you mistype a word, meaningful suggestions will still be provided. Example: If you enter "にhん," "にっほん," or "日本ん," then "日本," "日本 地図," "日本の人口," etc. will be suggested.

- The candidates are listed and displayed in descending order of the number of times the keywords have been searched.

Preparation for implementation

In general, it is better to create a dedicated index for suggesting purposes, separated from the regular index. In this example, we’ll create a suggestion index named my_suggest. Also, we’ll use my_field as the suggestion field.

Also, use the keyword search history as the index to populate the suggestions.

Example: Configuration of index settings and mappings

We will describe the index settings and mapping in detail later. For now, here’s the PUT command to create them.

PUT my_suggest

{

"settings": {

"analysis": {

"char_filter": {

"normalize": {

"type": "icu_normalizer",

"name": "nfkc",

"mode": "compose"

},

"kana_to_romaji": {

"type": "mapping",

"mappings": [

"あ=>a",

"い=>i",

"う=>u",

"え=>e",

"お=>o",

"か=>ka",

"き=>ki",

"く=>ku",

"け=>ke",

"こ=>ko",

"さ=>sa",

"し=>shi",

"す=>su",

"せ=>se",

"そ=>so",

"た=>ta",

"ち=>chi",

"つ=>tsu",

"て=>te",

"と=>to",

"な=>na",

"に=>ni",

"ぬ=>nu",

"ね=>ne",

"の=>no",

"は=>ha",

"ひ=>hi",

"ふ=>fu",

"へ=>he",

"ほ=>ho",

"ま=>ma",

"み=>mi",

"む=>mu",

"め=>me",

"も=>mo",

"や=>ya",

"ゆ=>yu",

"よ=>yo",

"ら=>ra",

"り=>ri",

"る=>ru",

"れ=>re",

"ろ=>ro",

"わ=>wa",

"を=>o",

"ん=>n",

"が=>ga",

"ぎ=>gi",

"ぐ=>gu",

"げ=>ge",

"ご=>go",

"ざ=>za",

"じ=>ji",

"ず=>zu",

"ぜ=>ze",

"ぞ=>zo",

"だ=>da",

"ぢ=>ji",

"づ=>zu",

"で=>de",

"ど=>do",

"ば=>ba",

"び=>bi",

"ぶ=>bu",

"べ=>be",

"ぼ=>bo",

"ぱ=>pa",

"ぴ=>pi",

"ぷ=>pu",

"ぺ=>pe",

"ぽ=>po",

"きゃ=>kya",

"きゅ=>kyu",

"きょ=>kyo",

"しゃ=>sha",

"しゅ=>shu",

"しょ=>sho",

"ちゃ=>cha",

"ちゅ=>chu",

"ちょ=>cho",

"にゃ=>nya",

"にゅ=>nyu",

"にょ=>nyo",

"ひゃ=>hya",

"ひゅ=>hyu",

"ひょ=>hyo",

"みゃ=>mya",

"みゅ=>myu",

"みょ=>myo",

"りゃ=>rya",

"りゅ=>ryu",

"りょ=>ryo",

"ぎゃ=>gya",

"ぎゅ=>gyu",

"ぎょ=>gyo",

"じゃ=>ja",

"じゅ=>ju",

"じょ=>jo",

"びゃ=>bya",

"びゅ=>byu",

"びょ=>byo",

"ぴゃ=>pya",

"ぴゅ=>pyu",

"ぴょ=>pyo",

"ふぁ=>fa",

"ふぃ=>fi",

"ふぇ=>fe",

"ふぉ=>fo",

"ふゅ=>fyu",

"うぃ=>wi",

"うぇ=>we",

"うぉ=>wo",

"つぁ=>tsa",

"つぃ=>tsi",

"つぇ=>tse",

"つぉ=>tso",

"ちぇ=>che",

"しぇ=>she",

"じぇ=>je",

"てぃ=>ti",

"でぃ=>di",

"でゅ=>du",

"とぅ=>tu",

"ぢゃ=>ja",

"ぢゅ=>ju",

"ぢょ=>jo",

"ぁ=>a",

"ぃ=>i",

"ぅ=>u",

"ぇ=>e",

"ぉ=>o",

"っ=>t",

"ゃ=>ya",

"ゅ=>yu",

"ょ=>yo",

"ア=>a",

"イ=>i",

"ウ=>u",

"エ=>e",

"オ=>o",

"カ=>ka",

"キ=>ki",

"ク=>ku",

"ケ=>ke",

"コ=>ko",

"サ=>sa",

"シ=>shi",

"ス=>su",

"セ=>se",

"ソ=>so",

"タ=>ta",

"チ=>chi",

"ツ=>tsu",

"テ=>te",

"ト=>to",

"ナ=>na",

"ニ=>ni",

"ヌ=>nu",

"ネ=>ne",

"ノ=>no",

"ハ=>ha",

"ヒ=>hi",

"フ=>fu",

"ヘ=>he",

"ホ=>ho",

"マ=>ma",

"ミ=>mi",

"ム=>mu",

"メ=>me",

"モ=>mo",

"ヤ=>ya",

"ユ=>yu",

"ヨ=>yo",

"ラ=>ra",

"リ=>ri",

"ル=>ru",

"レ=>re",

"ロ=>ro",

"ワ=>wa",

"ヲ=>o",

"ン=>n",

"ガ=>ga",

"ギ=>gi",

"グ=>gu",

"ゲ=>ge",

"ゴ=>go",

"ザ=>za",

"ジ=>ji",

"ズ=>zu",

"ゼ=>ze",

"ゾ=>zo",

"ダ=>da",

"ヂ=>ji",

"ヅ=>zu",

"デ=>de",

"ド=>do",

"バ=>ba",

"ビ=>bi",

"ブ=>bu",

"ベ=>be",

"ボ=>bo",

"パ=>pa",

"ピ=>pi",

"プ=>pu",

"ペ=>pe",

"ポ=>po",

"キャ=>kya",

"キュ=>kyu",

"キョ=>kyo",

"シャ=>sha",

"シュ=>shu",

"ショ=>sho",

"チャ=>cha",

"チュ=>chu",

"チョ=>cho",

"ニャ=>nya",

"ニュ=>nyu",

"ニョ=>nyo",

"ヒャ=>hya",

"ヒュ=>hyu",

"ヒョ=>hyo",

"ミャ=>mya",

"ミュ=>myu",

"ミョ=>myo",

"リャ=>rya",

"リュ=>ryu",

"リョ=>ryo",

"ギャ=>gya",

"ギュ=>gyu",

"ギョ=>gyo",

"ジャ=>ja",

"ジュ=>ju",

"ジョ=>jo",

"ビャ=>bya",

"ビュ=>byu",

"ビョ=>byo",

"ピャ=>pya",

"ピュ=>pyu",

"ピョ=>pyo",

"ファ=>fa",

"フィ=>fi",

"フェ=>fe",

"フォ=>fo",

"フュ=>fyu",

"ウィ=>wi",

"ウェ=>we",

"ウォ=>wo",

"ヴァ=>va",

"ヴィ=>vi",

"ヴ=>v",

"ヴェ=>ve",

"ヴォ=>vo",

"ツァ=>tsa",

"ツィ=>tsi",

"ツェ=>tse",

"ツォ=>tso",

"チェ=>che",

"シェ=>she",

"ジェ=>je",

"ティ=>ti",

"ディ=>di",

"デュ=>du",

"トゥ=>tu",

"ヂャ=>ja",

"ヂュ=>ju",

"ヂョ=>jo",

"ァ=>a",

"ィ=>i",

"ゥ=>u",

"ェ=>e",

"ォ=>o",

"ッ=>t",

"ャ=>ya",

"ュ=>yu",

"ョ=>yo"

]

}

},

"tokenizer": {

"kuromoji_normal": {

"mode": "normal",

"type": "kuromoji_tokenizer"

}

},

"filter": {

"readingform": {

"type": "kuromoji_readingform",

"use_romaji": true

},

"edge_ngram": {

"type": "edge_ngram",

"min_gram": 1,

"max_gram": 10

},

"synonym": {

"type": "synonym",

"lenient": true,

"synonyms": [

"nippon, nihon"

]

}

},

"analyzer": {

"suggest_index_analyzer": {

"type": "custom",

"char_filter": [

"normalize"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase",

"edge_ngram"

]

},

"suggest_search_analyzer": {

"type": "custom",

"char_filter": [

"normalize"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase"

]

},

"readingform_index_analyzer": {

"type": "custom",

"char_filter": [

"normalize",

"kana_to_romaji"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase",

"readingform",

"asciifolding",

"synonym",

"edge_ngram"

]

},

"readingform_search_analyzer": {

"type": "custom",

"char_filter": [

"normalize",

"kana_to_romaji"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase",

"readingform",

"asciifolding",

"synonym"

]

}

}

}

},

"mappings": {

"properties": {

"my_field": {

"type": "keyword",

"fields": {

"suggest": {

"type": "text",

"search_analyzer": "suggest_search_analyzer",

"analyzer": "suggest_index_analyzer"

},

"readingform": {

"type": "text",

"search_analyzer": "readingform_search_analyzer",

"analyzer": "readingform_index_analyzer"

}

}

}

}

}

} Example: Data preparation

Now we’ll POST the search history and the date of the searches as input data and index the data into Elasticsearch. This data assumes the following numbers of searches.

- 「日本」 searched for 6 times

- 「日本 地図」 searched for 5 times

- 「日本 郵便」 searched for 3 times

- 「日本の人口」 searched for 2 times

- 「日本 代表」 searched for 1 time

POST _bulk

{"index": {"_index": "my_suggest"}}

{"my_field": "日本", "created": "2016-11-11T11:11:11"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本", "created": "2017-11-11T11:11:11"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本", "created": "2018-11-11T11:11:11"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本", "created": "2019-11-11T11:11:11"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本", "created": "2020-11-11T11:11:11"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本", "created": "2020-11-11T11:11:11"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 地図", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 地図", "created": "2020-03-11T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 地図", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 地図", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 地図", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 郵便", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 郵便", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 郵便", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本の人口", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本の人口", "created": "2020-04-07T12:00:00"}

{"index": {"_index": "my_suggest"}}

{"my_field": "日本 代表", "created": "2020-04-07T12:00:00"} Example: Search query preparation

Below are the queries when the search keywords are "日本," "にほn," "にhん," "にっほん," and "日本ん." The difference between queries is only the query description in my_field.suggest and my_field.readingform.

Search keyword is "日本"

GET my_suggest/_search

{

"size": 0,

"query": {

"bool": {

"should": [

{

"match": {

"my_field.suggest": {

"query": "日本"

}

}

},

{

"match": {

"my_field.readingform": {

"query": "日本",

"fuzziness": "AUTO",

"operator": "and"

}

}

}

]

}

},

"aggs": {

"keywords": {

"terms": {

"field": "my_field",

"order": {

"_count": "desc"

},

"size": "10"

}

}

}

} Search keyword is "にほn"

GET my_suggest/_search

{

"size": 0,

"query": {

"bool": {

"should": [

{

"match": {

"my_field.suggest": {

"query": "にほn"

}

}

},

{

"match": {

"my_field.readingform": {

"query": "にほn",

"fuzziness": "AUTO",

"operator": "and"

}

}

}

]

}

},

"aggs": {

"keywords": {

"terms": {

"field": "my_field",

"order": {

"_count": "desc"

},

"size": "10"

}

}

}

} Search keyword is "にhん"

GET my_suggest/_search

{

"size": 0,

"query": {

"bool": {

"should": [

{

"match": {

"my_field.suggest": {

"query": "にhん"

}

}

},

{

"match": {

"my_field.readingform": {

"query": "にhん",

"fuzziness": "AUTO",

"operator": "and"

}

}

}

]

}

},

"aggs": {

"keywords": {

"terms": {

"field": "my_field",

"order": {

"_count": "desc"

},

"size": "10"

}

}

}

} Search keyword is "にっほん"

GET my_suggest/_search

{

"size": 0,

"query": {

"bool": {

"should": [

{

"match": {

"my_field.suggest": {

"query": "にっほん"

}

}

},

{

"match": {

"my_field.readingform": {

"query": "にっほん",

"fuzziness": "AUTO",

"operator": "and"

}

}

}

]

}

},

"aggs": {

"keywords": {

"terms": {

"field": "my_field",

"order": {

"_count": "desc"

},

"size": "10"

}

}

}

} Search keyword is "日本ん"

GET my_suggest/_search

{

"size": 0,

"query": {

"bool": {

"should": [

{

"match": {

"my_field.suggest": {

"query": "日本ん"

}

}

},

{

"match": {

"my_field.readingform": {

"query": "日本ん",

"fuzziness": "AUTO",

"operator": "and"

}

}

}

]

}

},

"aggs": {

"keywords": {

"terms": {

"field": "my_field",

"order": {

"_count": "desc"

},

"size": "10"

}

}

}

} Example: Search (Suggest) result

The following is the search (suggest) result when the search keyword is "日本." We’ll skip the search results for search keywords "にほn," "にhん," "にっほん," and "日本ん" because they are the same as "日本."

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 17,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"keywords" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "日本",

"doc_count" : 6

},

{

"key" : "日本 地図",

"doc_count" : 5

},

{

"key" : "日本 郵便",

"doc_count" : 3

},

{

"key" : "日本の人口",

"doc_count" : 2

},

{

"key" : "日本 代表",

"doc_count" : 1

}

]

}

}

} Let’s take a deeper look at the mappings and analyzers we used.

Mapping configuration

Here are the main decisions we made with our mappings:

- As Elasticsearch supports multi-fields, we will use them for our implementation.

- We used a terms aggregation to show the suggestion candidate list, we set the field type to

keyword. Also, please note that this operation can be costly when the dataset becomes large, so it’s better to make a dedicated index or, even better, to have a dedicated cluster for suggesting purposes only. - We prepared a multi-field called

suggestwith analyzers to absorb the variations in kanji readings. Example: When "東" is entered, display "東京" as a suggestion, or when "日" is entered, display "日本" as a suggestion, etc. - We prepare one more multi-field called

readingformwith analyzers to handle undetermined kana. Example: When "にほn" is entered, display "日本," "日本 地図," and "日本の人口," etc. as suggestions.

"mappings": {

"properties": {

"my_field": {

"type": "keyword",

"fields": {

"suggest": {

"type": "text",

"search_analyzer": "suggest_search_analyzer",

"analyzer": "suggest_index_analyzer"

},

"readingform": {

"type": "text",

"search_analyzer": "readingform_search_analyzer",

"analyzer": "readingform_index_analyzer"

}

}

}

} Analyzer configuration for suggest field

We prepared two analyzers separately for search and index use:

Search analyzer

- We used ICU normalizer (nfkc) in

char_filterto absorb the variation of full-width and half-width notation for alphanumeric and katakana characters in the search target. Example: Full-width "1" will be converted to half-width "1." - We used kuromoji tokenizer for morphological analysis. Also, set mode to

normalso that it will not divide the word too trivially. Example: "東京大学" (University of Tokyo) will remain as "東京大学." - We used lowercase token filter to normalize the alphabet to lowercase. Example: "TOKYO" will be converted to "tokyo."

"suggest_search_analyzer": {

"type": "custom",

"char_filter": [

"normalize"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase"

]

} Index analyzer

In addition to the configuration described above, we also used an edge ngram token filter to perform prefix search when the user's input contents are divided into multiple parts.

"suggest_index_analyzer": {

"type": "custom",

"char_filter": [

"normalize"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase",

"edge_ngram"

]

} Examples of analyzer results

Example of suggest_search_analyzer analyze result

# request

GET my_suggest/_analyze

{

"analyzer": "suggest_search_analyzer",

"text": ["日本 地図"]

}

# response

{

"tokens" : [

{

"token" : "日本",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "地図",

"start_offset" : 3,

"end_offset" : 5,

"type" : "word",

"position" : 1

}

]

} Example of suggest_index_analyzer analyze results

# request

GET my_suggest/_analyze

{

"analyzer": "suggest_index_analyzer",

"text": ["日本 地図"]

}

# response

{

"tokens" : [

{

"token" : "日",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "日本",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "地",

"start_offset" : 3,

"end_offset" : 5,

"type" : "word",

"position" : 1

},

{

"token" : "地図",

"start_offset" : 3,

"end_offset" : 5,

"type" : "word",

"position" : 1

}

]

} Analyzer configuration for the readingform field

We prepared two analyzers separately for search and index use:

Search analyzer

- We used ICU normalizer (nfkc) in

char_filterto absorb the variation of full-width and half-width notation for alphanumeric and katakana characters in the search target. Example: Full-width "1" will be converted to half-width "1." - In readingform token filter, some kana conversions are not correctly performed (Example: "きゃぷてん" is converted to "ki" and "ゃぷてん"). Therefore, we defined a separate kana to romaji

char_filter(for this blog, we’ll name it “kana_to_romaji”). - We used kuromoji tokenizer for morphological analysis. Also, set mode to

normalso that it will not divide the word too trivially. Example: "東京大学" will remain as "東京大学." - We used lowercase token filter to normalize the alphabet to lowercase. Example: "TOKYO" will be converted to "tokyo."

- We then used a

readingformtoken filter. Also to convert kanji to romaji, setuse_romajito true. Example: "寿司" will be converted to "sushi." - In the

readingformtoken filter, some conversions can result in macron letters (e.g. "とうきょう" will be converted to "tōkyō"). To normalize the macron letters (e.g., convert "tōkyō" to "tokyo"), useasciifoldingtoken filter. - When there are two or more ways of reading (e.g., "日本" can be "nippon" or "nihon"), use synonym token filter to absorb the reading difference.

"readingform_search_analyzer": {

"type": "custom",

"char_filter": [

"normalize", <= icu_normalizer nfkc compose mode

"kana_to_romaji" <= convert Kana to Romaji

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase",

"readingform",

"asciifolding",

"synonym"

]

} Index analyzer

In addition to the configuration described above, we also used an edge ngram token filter to perform prefix search when the user's input contents are divided into multiple parts.

"readingform_index_analyzer": {

"type": "custom",

"char_filter": [

"normalize",

"kana_to_romaji"

],

"tokenizer": "kuromoji_normal",

"filter": [

"lowercase",

"readingform",

"asciifolding",

"synonym",

"edge_ngram"

]

}, Examples of analyzer results

Example of readingform_search_analyzer analyze results

# request

GET my_suggest/_analyze

{

"analyzer": "readingform_search_analyzer",

"text": ["にほn"]

}

# response

{

"tokens" : [

{

"token" : "nihon",

"start_offset" : 0,

"end_offset" : 3,

"type" : "word",

"position" : 0

},

{

"token" : "nippon",

"start_offset" : 0,

"end_offset" : 3,

"type" : "SYNONYM",

"position" : 0

}

]

} Example of readingform_index_analyzer analyze results

# request

GET my_suggest/_analyze

{

"analyzer": "readingform_index_analyzer",

"text": ["日本"]

}

# response

{

"tokens" : [

{

"token" : "n",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "ni",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "nip",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "nipp",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "nippo",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "nippon",

"start_offset" : 0,

"end_offset" : 2,

"type" : "word",

"position" : 0

},

{

"token" : "n",

"start_offset" : 0,

"end_offset" : 2,

"type" : "SYNONYM",

"position" : 0

},

{

"token" : "ni",

"start_offset" : 0,

"end_offset" : 2,

"type" : "SYNONYM",

"position" : 0

},

{

"token" : "nih",

"start_offset" : 0,

"end_offset" : 2,

"type" : "SYNONYM",

"position" : 0

},

{

"token" : "niho",

"start_offset" : 0,

"end_offset" : 2,

"type" : "SYNONYM",

"position" : 0

},

{

"token" : "nihon",

"start_offset" : 0,

"end_offset" : 2,

"type" : "SYNONYM",

"position" : 0

}

]

} Configuration of query for suggestion

We used a normal search query to generate the suggested candidate list. There are two ways to do this.

- Use a match query + terms aggregation to display the suggestion candidate list sorted by the most-searched words

- Use a match query with field duplicated handling (e.g., collapse) + sort to display the suggestion candidate list

In this blog, we used a terms aggregation to describe the query implementation.

When implementing suggestions based on the index of search history, using a terms aggregation (option 1) is a quick way. However, it might not be performant, so rather than giving a suggested sentence, users can also see it as a document suggester (returning documents directly instead of suggestions).

Moreover, for large-scale suggestions, you can also consider preparing data for suggestions and perform the sorting (option 2) based on the requirements.

Query design

GET my_suggest/_search

{

"size": 0,

"query": {

"bool": {

"should": [

{

"match": {

"my_field.suggest": {

"query": "にほn"

}

}

},

{

"match": {

"my_field.readingform": {

"query": "にほn",

"fuzziness": "AUTO",

"operator": "and"

}

}

}

]

}

},

"aggs": {

"keywords": {

"terms": {

"field": "my_field",

"order": {

"_count": "desc"

},

"size": "10"

}

}

}

} Notes on tuning

As already described, we performed some tunings in our example above. Other parts can also be tuned. Please feel free to do the tuning according to your actual requirements.

Part 1: Use synonyms to handle words not in the dictionary

Example: Suggest "日本" when typing にほn as search keyword

Based on the result of readingform_index_analyzer, without using a synonym, "日本" will be divided to "n, ni, nip, nipp, nippo, nippon." However, the result of readingform_search_analyzer without synonyms will convert "にほn" to "nihon," which will not match the above tokens.

This is a limitation of kuromoji, because the reading of "日本" is only described as "nippon," not "nihon." To handle this, use synonyms and define "nihon" as a synonym of "nippon."

Part 2: Absorb variance during typing

Example: Suggest "日本" when typing にhん as search keyword

As the result of readingform_index_analyzer (with above synonym definition), "日本" will be divided to "n, ni, nip, nipp, nippo, nippon, nih, niho, nihon." In readingform_search_analyzer, icu_normalizer char_filter will convert the full-width "h" to half-width "h" in "にhん." Then, in kana_to_romaji char_filter, "にhん" will be converted to "nihn." Moreover, in match query, using fuzziness: auto, "nihn" will match "niho" and thus will give the expected suggestion.

Others like "にっほん" or "日本ん" are similar to this. We designed the analyzer to absorb variance during typing as much as possible.

Part 3: Display popular search keywords at the top

In this blog, we showed an example of displaying suggestion candidates at the top simply based on the highest number of searches. In practice, it is also good to prepare a dedicated field for recording the popularity of the search keyword and periodically update the popularity based on search trends. Suggestions that are sorted based on the popularity field may result in a better user experience.

Conclusion

In this article, we saw why autocomplete suggestions are much more difficult to implement in Japanese than in English. We then explored some considerations for implementing Japanese autocomplete suggestions, went through a detailed example of implementation, and wrapped up with some fine tuning options.

If you’d like to try this for yourself but don’t have an Elasticsearch cluster running, you can spin up a free trial of Elastic Cloud, add the analysis-icu and analysis-kuromoji plugin, and use the example configurations and data from this blog. When you do, please feel free to give us your feedback on our forums. Enjoy your autocomplete journey!