
Uncover the threats hiding in your data

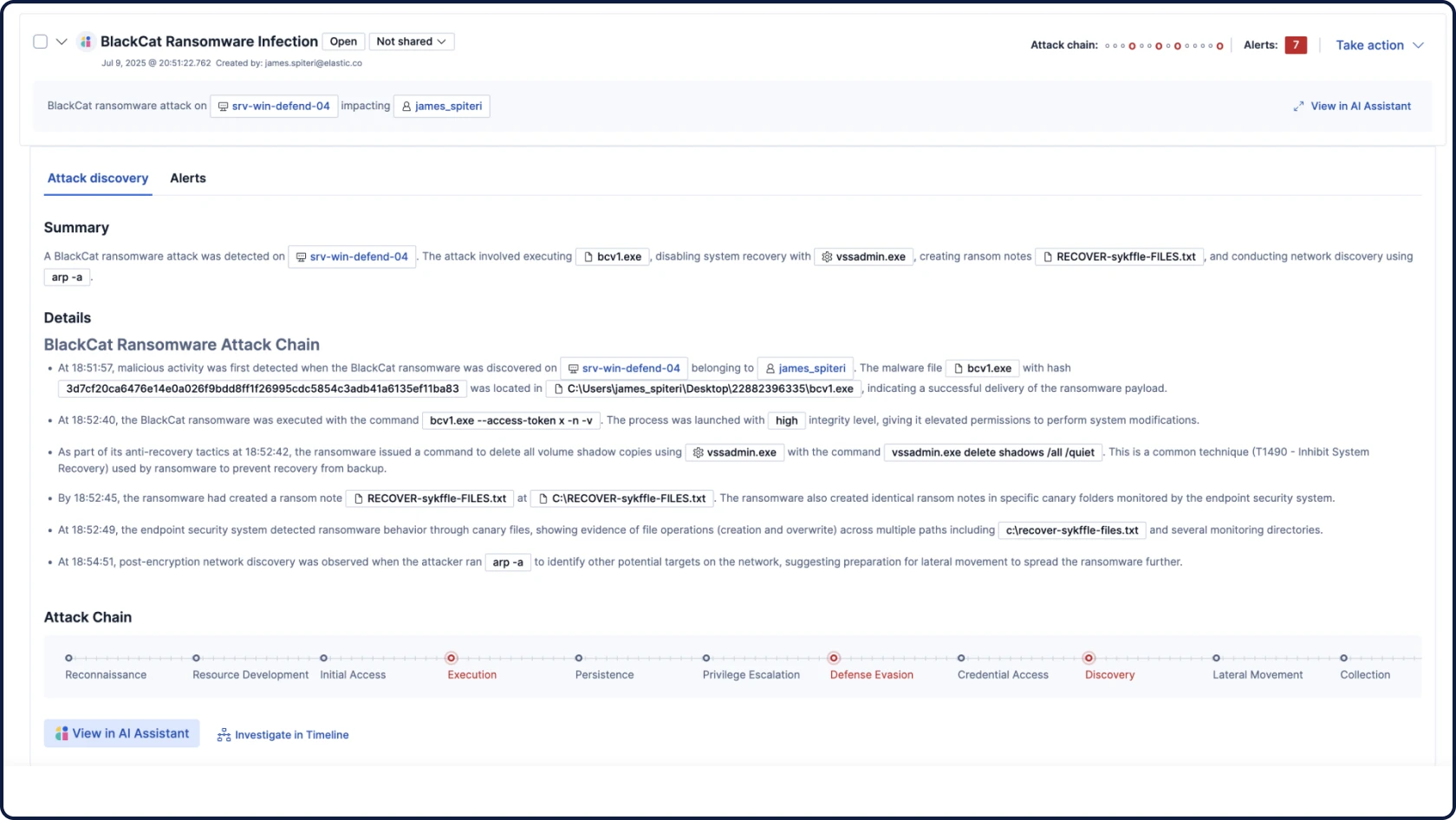

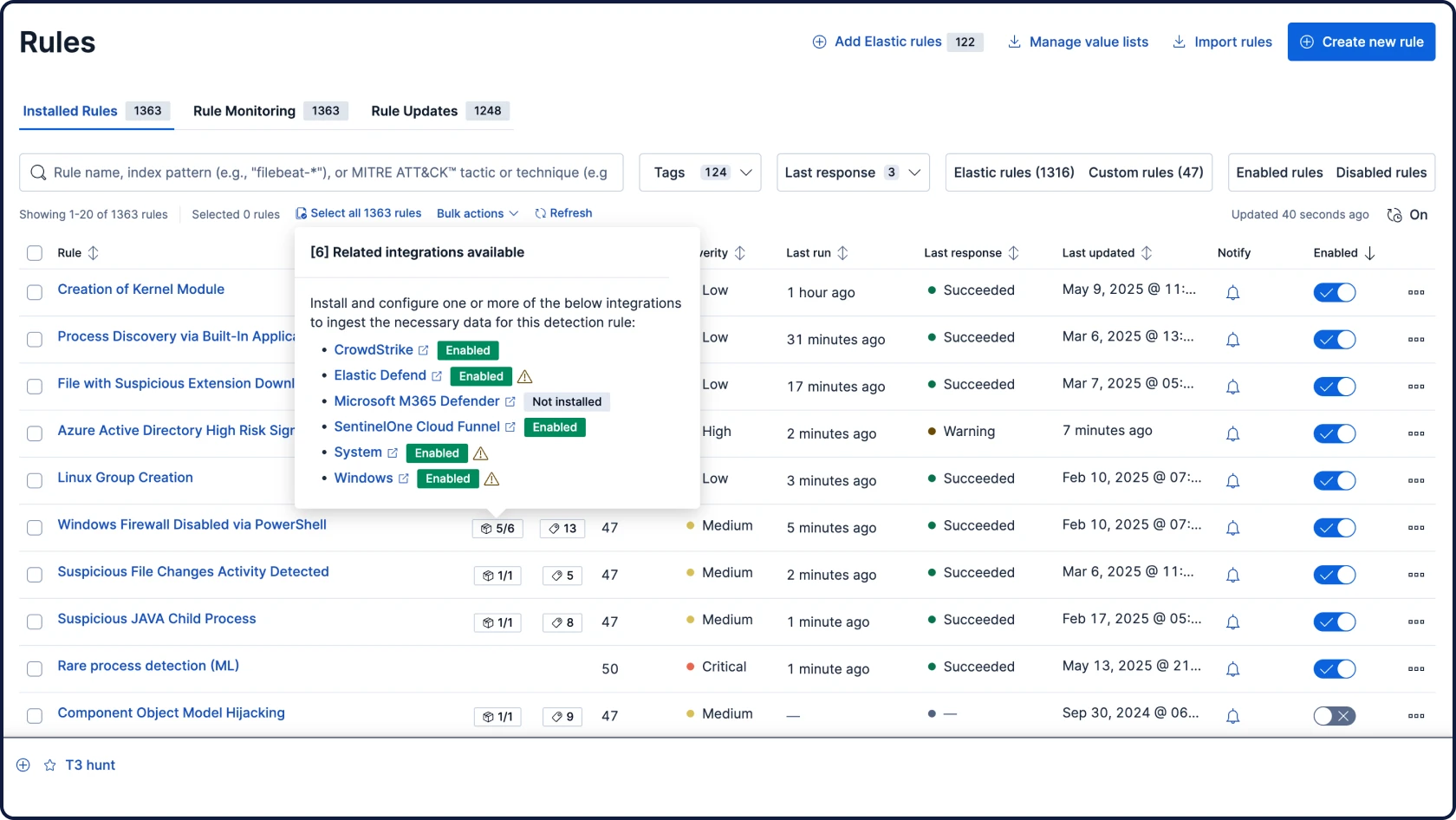

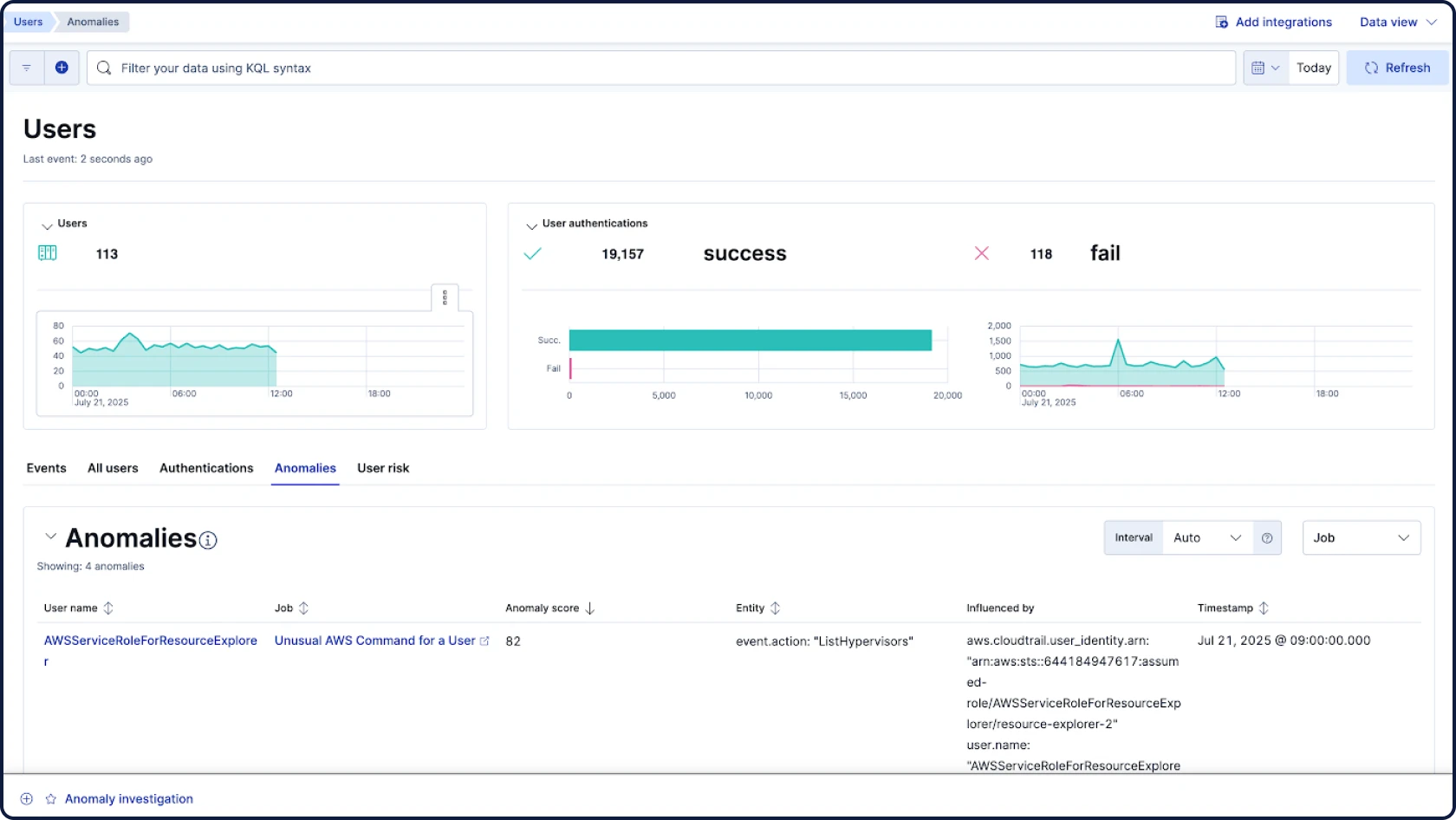

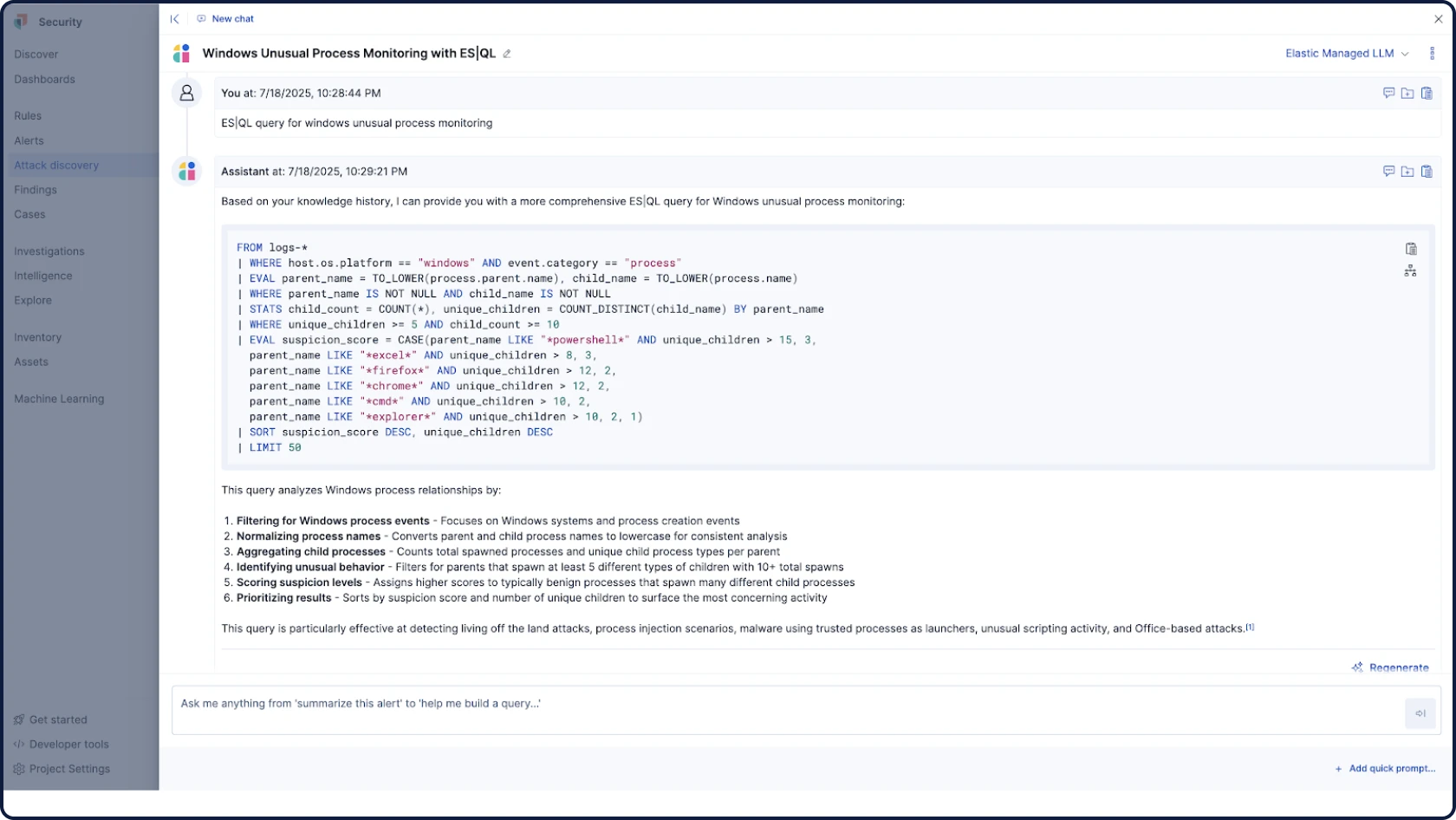

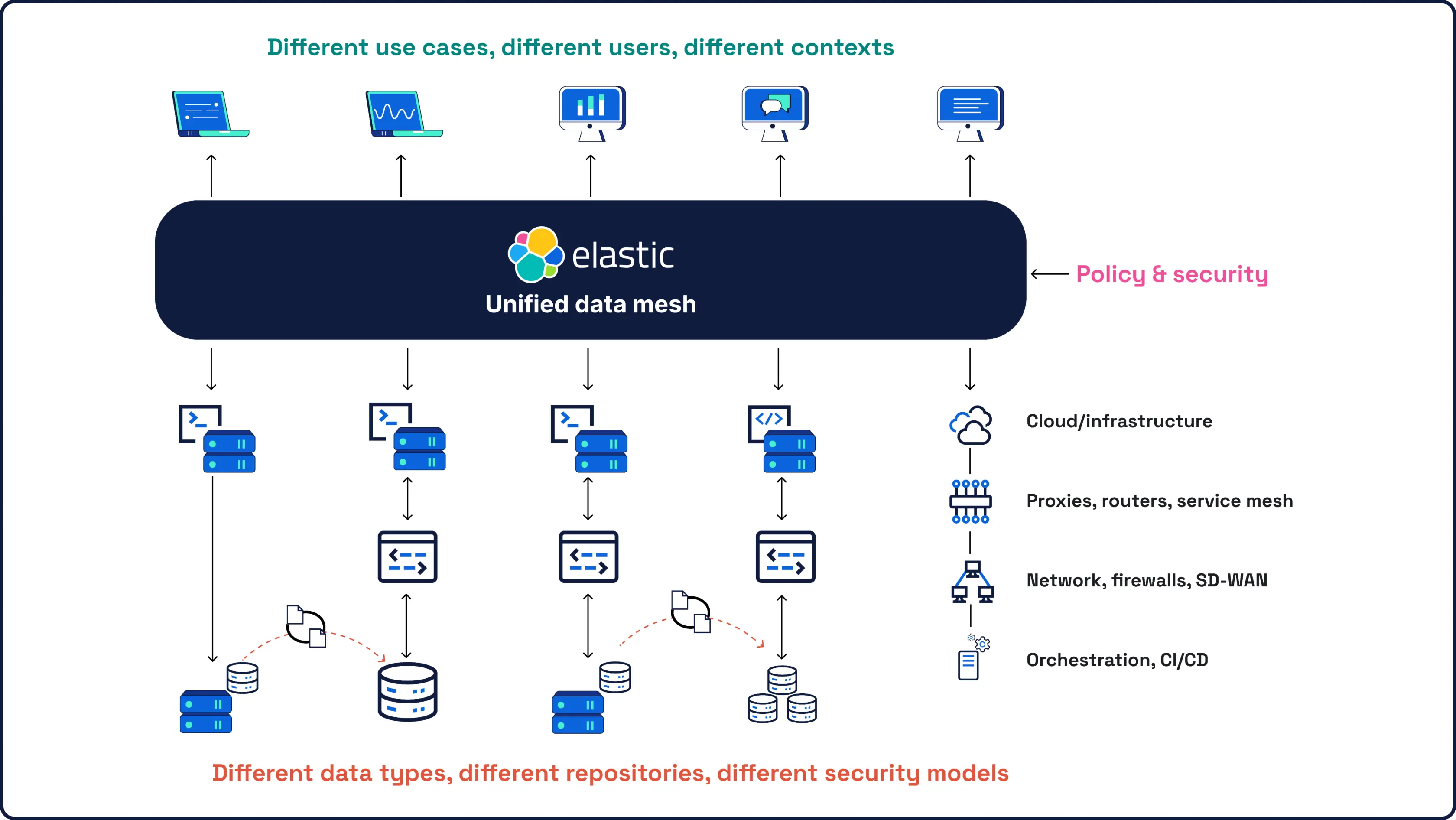

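Security starts with data, and data is what we know best. As the company behind Elasticsearch, the world's leading open source search and analytics engine, we built a SIEM that delivers powerful, AI-driven detection and investigation across your security data at any scale.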

Packaging options

Go all in, or adopt at your own pace

Our security platform meets you where you are and takes you where legacy platforms can't.

Elastic Security

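Everything you need (SIEM, XDR, cloud security, and built-in AI) on a single, unified platform. No extra SKUs, no add-ons, no compromises. A seamless experience built around the way analysts think, hunt, and respond.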

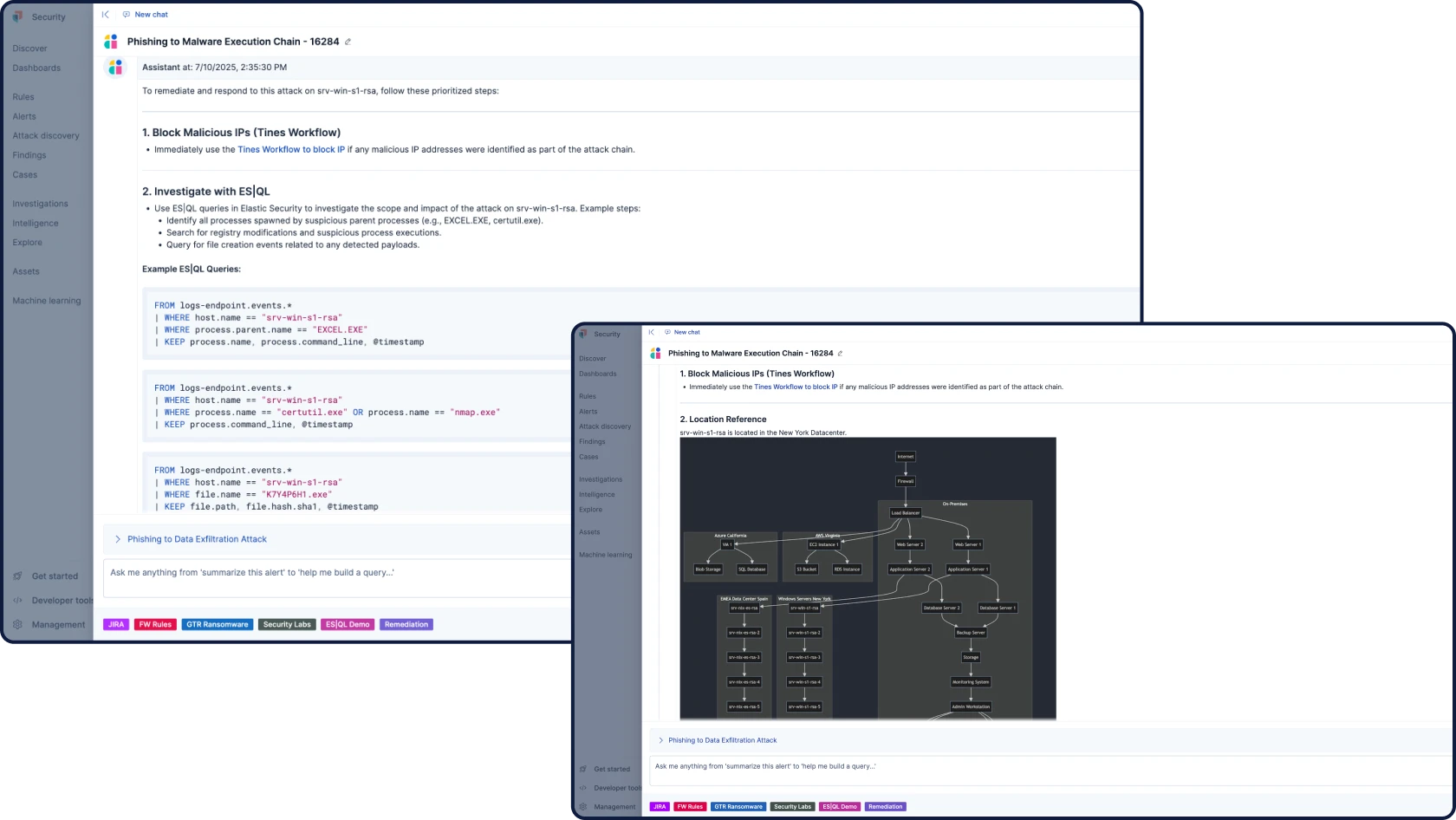

Elastic AI SOC Engine (EASE)

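A package of AI capabilities that lets you adopt Elastic Security on your own schedule, without a full rip-and-replace. Augment your existing SIEM, EDR, and other alerting tools with AI that integrates with your data and workflows, then expand to the full platform when you're ready.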

Many companies face the same challenges

Customer spotlight

Airtel used Elastic's AI capabilities to strengthen its cyber posture, improving SOC efficiency by 40% and speeding up investigations by 30%.


Sierra Nevada Corporation uses Elastic Security to protect its own infrastructure as well as that of other defense contractors.


Mimecast unified visibility, accelerated investigations, and reduced critical incidents by 95%, transforming its global security operations (SecOps).
