
Elasticsearch Serverless pricing is simple: you pay for the resources you use. Understanding VCUs, ECUs, and the factors that drive your consumption is key to making informed decisions about your usage. In this blog, we'll break down how Elasticsearch Serverless pricing works so you can plan, monitor, and optimize your spend.

When we built Elasticsearch Serverless, we had to decide how to bill our users. A charge per query might have been easier to reason about from a consumption perspective, but it would have been much harder to reason about from a resource perspective. Instead, we implemented a simple pricing scheme with three compute dimensions: Search, Ingest, and Machine Learning VCUs. This means we charge you for the actual resources we allocate to fulfill your workloads.

VCU, ECU, and other terms

Let's start by defining a few terms that will keep coming back throughout this post.

VCU

A VCU is a Virtual Compute Unit, representing a fraction of RAM, CPU, and local disk for caching. We separate compute by the workload it supports, so there are three flavors of VCU:

- Search VCU

- Ingest VCU

- Machine Learning (ML) VCU

VCUs are charged by the hour.

Regional pricing

Prices differ by region and by cloud provider. You can find the full list of prices on our pricing page.

ECU

An ECU is an Elastic Consumption Unit, the unit we bill you in. The nominal value of an ECU is $1.00 USD. Each component of consumption is charged at a specific rate of ECUs per unit of usage over time. For example, if storage costs 0.047 ECU per GB per month, 100 GB of storage will cost you 4.7 ECU ($4.70) for one month. Similarly, if your search workload consumed 10 VCU-hours in a day and the Search VCU rate in your region is 0.09 ECU per VCU-hour, your cost for that day would be 0.9 ECU ($0.90).
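The arithmetic above can be checked with a few lines of Python. This is a minimal sketch using the illustrative rates from the example (0.047 ECU per GB-month and 0.09 ECU per Search VCU-hour); they are not real regional prices, so substitute the rates for your region.

```python
# Illustrative rates from the example above, not real regional prices.
ECU_TO_USD = 1.00                   # nominal value of one ECU
STORAGE_ECU_PER_GB_MONTH = 0.047
SEARCH_ECU_PER_VCU_HOUR = 0.09


def cost_ecu(quantity: float, rate_ecu_per_unit: float) -> float:
    """ECUs owed for `quantity` billable units at `rate_ecu_per_unit` each."""
    return quantity * rate_ecu_per_unit


storage_ecu = cost_ecu(100, STORAGE_ECU_PER_GB_MONTH)  # 100 GB for one month
search_ecu = cost_ecu(10, SEARCH_ECU_PER_VCU_HOUR)     # 10 Search VCU-hours in one day

print(f"storage: {storage_ecu:.2f} ECU = ${storage_ecu * ECU_TO_USD:.2f}")  # storage: 4.70 ECU = $4.70
print(f"search:  {search_ecu:.2f} ECU = ${search_ecu * ECU_TO_USD:.2f}")    # search:  0.90 ECU = $0.90
```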

Interactive Dataset Size

The amount of data in your project directly influences your costs. We distinguish the “interactive dataset” primarily for time series data, where it is the amount of data inside the boost window. For non-time-series data, it is simply the amount of data in the project.

Project settings

We have three project settings that allow you to control your project's usage.

Search power

Search Power controls the speed of searches against your data. With Search Power, you can improve search performance by adding more resources for querying, or you can reduce provisioned resources to cut costs. Choose from three Search Power settings:

- On-demand: Autoscales based on data and search load, with a lower minimum baseline for resource use. This flexibility results in more variable query latency and reduced maximum throughput.

- Performant: Delivers consistently low latency and autoscales to accommodate moderately high query throughput.

- High-availability: Optimized for high-throughput scenarios, autoscaling to maintain query latency even at very high query volumes.

Boost window

For time series use cases, the boost window is the number of days of data that make up your interactive dataset. The interactive dataset is the portion of your data that we keep cached and use to determine how to scale the Search tier for your project. By default, the boost window is seven days.

Data retention

You can set the number of days of data retained in your project, which affects the amount of storage we need. Retention is configured per data stream.
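To see how the boost window and retention interact, here is a minimal sketch for a single time series data stream. It assumes a hypothetical constant daily volume, treats ingested volume as stored volume (ignoring compression and index overhead), describes the steady state once the retention period has filled up, and reuses the illustrative 0.047 ECU per GB-month storage rate from the ECU example.

```python
# Hypothetical workload and illustrative rate; real volumes and prices vary.
STORAGE_ECU_PER_GB_MONTH = 0.047


def dataset_sizes(daily_gb: float, retention_days: int,
                  boost_window_days: int = 7) -> tuple[float, float]:
    """Return (interactive_gb, stored_gb) for one time series data stream.

    The interactive dataset is the data inside the boost window, capped by
    how much data is retained at all.
    """
    interactive_gb = daily_gb * min(boost_window_days, retention_days)
    stored_gb = daily_gb * retention_days
    return interactive_gb, stored_gb


interactive, stored = dataset_sizes(daily_gb=20, retention_days=90)
monthly_storage_ecu = stored * STORAGE_ECU_PER_GB_MONTH
print(f"interactive: {interactive} GB, stored: {stored} GB, "
      f"storage: {monthly_storage_ecu:.2f} ECU/month")
```

In this model, lengthening the boost window grows the interactive dataset (and therefore Search VCUs) without changing storage, while lengthening retention grows storage without changing the interactive dataset once retention exceeds the boost window.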

Price components

Serverless Elasticsearch has several pricing components. For most use cases, the ones you will care about most are Search, Ingest, and ML VCUs, as well as token consumption in the Elastic Inference Service (EIS).

Search VCUs

Search VCU consumption is the most complex part of pricing. We simplify it for you by automatically determining the number of VCUs needed to fulfill your workloads. For more details on how our autoscaling logic works, see our earlier blog on the topic.

Search VCU inputs

Several factors determine Search VCU allocation, but they boil down to three main inputs: the interactive dataset size, the search load on the system, and Search Power.

For traditional search use cases, the interactive dataset size will generally be your entire dataset. For time series use cases, it will be the portion of your dataset that falls inside the boost window.

Search load measures the load that currently active searches place on the system. The main contributing factors are the number of searches per second, the complexity of the searches (the more that needs to be computed, the higher the load), and the amount of data that must be searched to produce the results. If we can return the right results by scanning 10% of the dataset, the load will be much lower than if we need to scan the full dataset.

Finally, Search Power influences the number of VCUs we allocate. Each Search Power setting defines the baseline capacity of the search tier.

In short: the larger the dataset and the higher the search load, the more VCUs we need to fulfill your search requests. Search Power lets you tune how far we scale up and down.

Minimum VCUs

Elasticsearch Serverless is designed to align infrastructure costs directly with your application's demand.

For smaller workloads, the search infrastructure can scale down to zero VCUs during periods of inactivity. If the system detects fifteen minutes of total inactivity, the associated hardware resources are deprovisioned. This makes the platform highly cost-effective for development environments, bursty workloads, or applications with intermittent usage. Note that inactivity means actual inactivity: no user-initiated searches whatsoever. As soon as we need to serve a search of any kind, we need to allocate hardware resources to execute that search.
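For small workloads, the scale-to-zero rule means search hardware is only provisioned around periods of activity. The following minimal sketch models that rule under simplifying assumptions: each search keeps resources allocated until fifteen minutes pass without another search, and scale-up time and any minimum VCU allocation for larger datasets are ignored. It illustrates the idea, not Elastic's actual autoscaler.

```python
from datetime import datetime, timedelta

IDLE_TIMEOUT = timedelta(minutes=15)  # inactivity period described above


def provisioned_intervals(search_times: list[datetime]) -> list[tuple[datetime, datetime]]:
    """Merge search timestamps into the intervals during which search hardware stays up.

    Simplified model: each search keeps resources allocated until IDLE_TIMEOUT
    has passed without another search.
    """
    intervals: list[tuple[datetime, datetime]] = []
    for t in sorted(search_times):
        idle_deadline = t + IDLE_TIMEOUT
        if intervals and t <= intervals[-1][1]:
            # A search arrived before the idle timeout expired: stay provisioned.
            intervals[-1] = (intervals[-1][0], idle_deadline)
        else:
            # Resources had been deprovisioned (or never started): a new interval begins.
            intervals.append((t, idle_deadline))
    return intervals


base = datetime(2025, 1, 1, 9, 0)
searches = [base, base + timedelta(minutes=5), base + timedelta(hours=2)]
intervals = provisioned_intervals(searches)
total = sum((end - start for start, end in intervals), timedelta())
for start, end in intervals:
    print(start.time(), "->", end.time())
print("provisioned for", total)
```

Here two searches five minutes apart share one 20-minute interval, and a search two hours later starts a fresh 15-minute interval, for 35 minutes of provisioned time in total.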

As your interactive dataset grows, the system eventually reaches a storage threshold where a baseline level of resources is required to maintain data availability and indexing readiness. A minimum VCU allocation is maintained to ensure your data remains "warm" and queryable, even if no active searches are occurring.

VCU consumption is not linear

Because our hardware is allocated in steps, consumption of VCUs does not necessarily scale linearly with workload size. Each scaling step can contain a wide range of workloads, and if your workload is at the bottom of that range, it may have a lot of room to grow before we need to jump to the next scaling step.

This makes it hard to estimate costs from a non-representative workload. For example, you may be consuming 2 VCUs per hour on a small workload. It's entirely possible that you could increase your workload size by a factor of 100 and still fit within those 2 VCUs per hour before we need to start increasing the number of VCUs we allocate to serve your workload.
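The effect of step-based allocation is easier to see with numbers. This minimal sketch uses a hypothetical step size and VCU count (`STEP_CAPACITY` and `VCUS_PER_STEP` are made up for illustration, and "workload units" are an abstract stand-in for dataset size and load); real scaling steps are determined by Elastic's autoscaler. The same reasoning applies to Ingest VCUs, which are also allocated in steps.

```python
import math

# Hypothetical values chosen for illustration only.
STEP_CAPACITY = 100.0  # abstract workload units one scaling step can absorb
VCUS_PER_STEP = 2      # VCUs per hour allocated for each step


def allocated_vcus(workload: float) -> int:
    """VCUs per hour allocated for `workload` under a simple step function."""
    if workload <= 0:
        return 0  # no load, nothing allocated (ignores any minimum VCU floor)
    steps = math.ceil(workload / STEP_CAPACITY)
    return steps * VCUS_PER_STEP


for workload in (1, 50, 100, 101, 250):
    print(f"workload {workload:>3} -> {allocated_vcus(workload)} VCUs/hour")
```

Going from 1 to 100 units multiplies the workload by 100 without changing the allocation, while going from 100 to 101 doubles it. That is why extrapolating linearly from a small test workload can mislead in either direction.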

We know this makes estimating your cost a little harder, and we are working on ways to make that easier for you. If you need more help estimating your likely price, you can always talk to our customer team and get more personalized assistance.

Ingest VCUs

Ingest VCUs are much simpler than Search VCUs.

Ingest VCU inputs

Ingest VCUs have essentially three inputs: the number of indices, the ingest rate, and the ingest complexity. We need to allocate a little memory for every index in your project, which is why the number of indices matters. Read-only backing indices in data streams do not count toward this calculation.

The faster you ingest, the more CPU we need to process that ingestion. And the more complex your ingest requests, the more CPU we need. Factors that make ingest requests more expensive to execute include complex field mappings and heavy post-processing.

Minimum Ingest VCUs

There is no minimum Ingest VCU allocation. If you do not ingest data, we do not need to allocate any VCUs to processing ingestion. The exception is a large number of indices (think: thousands of indices), where we do need to keep some resources allocated to be responsive when indexing requests come in.

VCU consumption is not linear

As with Search VCUs, we allocate Ingest VCUs based on step functions. Each step can contain a wide range of workloads: it's entirely possible that if you have a minimal amount of ingest, you could increase your ingest rate by a factor of 100 and still fit in the same step, thus not actually increasing your cost.

AI workloads

When running machine learning tasks in Serverless, we give you three options:

- You use our Elastic Inference Service (EIS) to run your inference and completion workloads. We take care of everything, and you are charged per token.

- You use traditional Elasticsearch Machine Learning capabilities to run your workloads. These use our Trained Models capabilities. We will scale up and down based on your machine learning workload requirements.

- You do it yourself, outside of our systems, and just bring your vectors or other inference results to store and search in Elasticsearch.

EIS

The pricing for EIS is straightforward: you are charged a rate per million tokens consumed. Token consumption is generally easy to predict for inference workloads. For LLM-based tasks, particularly agentic ones, it can be more complex, and some experimentation and trial runs may be useful to determine how many tokens your workloads typically consume.
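Because EIS is billed per million tokens, a trial run gives you most of what you need for an estimate. This minimal sketch extrapolates monthly cost from per-request token counts measured in a trial. The token counts, request volume, and `RATE_ECU_PER_MILLION_TOKENS` are hypothetical, and it assumes a single rate per model, so check the actual EIS rates for the models you use.

```python
# Hypothetical rate: check the real EIS rate for your model and region.
RATE_ECU_PER_MILLION_TOKENS = 0.5


def eis_cost_ecu(tokens: int, rate_per_million: float = RATE_ECU_PER_MILLION_TOKENS) -> float:
    """ECU cost for `tokens` consumed at a rate expressed per one million tokens."""
    return tokens / 1_000_000 * rate_per_million


# Token counts observed for a handful of requests during a trial run (hypothetical).
trial_tokens = [1_200, 950, 1_430, 1_020]
avg_tokens = sum(trial_tokens) / len(trial_tokens)

monthly_requests = 2_000_000
monthly_tokens = round(avg_tokens * monthly_requests)
print(f"~{monthly_tokens:,} tokens/month -> {eis_cost_ecu(monthly_tokens):.2f} ECU")
```

For agentic LLM tasks, where token counts vary widely between requests, measure a larger and more representative sample before relying on the average.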

ML VCUs

Machine Learning VCUs depend on one simple input: your machine learning workload. The more inference you require, the more VCUs we allocate. Once you stop performing inference, we scale down. We keep a trained model in memory for about 24 hours after you last used it so that we can stay responsive, which means the minimal number of VCUs required to keep that model available remains allocated for 24 hours before scaling down entirely.

We generally recommend using EIS instead of our Machine Learning nodes for inference, particularly if your usage is periodic. With EIS, you do not have to wait for machine learning nodes to spin up, and you are not charged for idle ML node time before scale-down, because EIS charges per token.
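The warm period is what makes periodic inference on ML nodes expensive relative to EIS. This minimal sketch separates active inference time from the roughly 24-hour warm period after each burst. The rate, `WARM_MODEL_VCUS`, and the workload numbers are hypothetical, and it assumes bursts are more than 24 hours apart so that each one pays a full warm period.

```python
# Hypothetical values for illustration; real rates and allocations differ.
ML_ECU_PER_VCU_HOUR = 0.1  # ECU per ML VCU-hour
WARM_MODEL_VCUS = 1        # minimal VCUs kept allocated while the model stays loaded
WARM_HOURS = 24            # model stays in memory ~24 hours after last use


def ml_node_cost_ecu(bursts: int, hours_per_burst: float,
                     vcus_per_burst: float) -> tuple[float, float]:
    """Return (active_ecu, warm_ecu) for periodic inference bursts on ML nodes.

    Assumes bursts are more than WARM_HOURS apart, so each one is followed by
    a full warm period at WARM_MODEL_VCUS before scaling down to zero.
    """
    active = bursts * hours_per_burst * vcus_per_burst * ML_ECU_PER_VCU_HOUR
    warm = bursts * WARM_HOURS * WARM_MODEL_VCUS * ML_ECU_PER_VCU_HOUR
    return active, warm


# Four one-hour inference jobs per month, each using 8 VCUs while running.
active, warm = ml_node_cost_ecu(bursts=4, hours_per_burst=1, vcus_per_burst=8)
print(f"active: {active:.2f} ECU, warm but idle: {warm:.2f} ECU")
```

In this hypothetical, most of the ML VCU spend goes to warm but idle time, which is the cost EIS avoids by charging only for tokens consumed.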

Storage

We charge storage per gigabyte per month. Storage does serve as an input into other parts of our system, particularly Search VCUs (see Search VCUs above), but the pricing for storage itself is straightforward.

Data Out (egress)

We charge you for the data you take out of the system.

To minimize your egress costs, we recommend a few optimizations on your queries:

- Do not return vectors in your query responses. For indices created after October 2025, vectors are excluded from responses by default. You can still return vectors explicitly if necessary.

- Return only the fields your application needs. You can do this with the `fields` and `_source` parameters.
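As a sketch of the second tip, the request bodies below use the Search API's `_source` and `fields` parameters to limit what comes back. The field names (`title`, `url`, `embedding`) are hypothetical placeholders for your own mappings; you would send either body to `POST /<index>/_search`.

```python
import json


def fields_only_body(query_text: str, wanted_fields: list[str]) -> dict:
    """Search body that skips the original document and returns only `wanted_fields`."""
    return {
        "query": {"match": {"title": query_text}},  # "title" is a hypothetical field
        "_source": False,                           # do not return the full _source
        "fields": wanted_fields,                    # return just these fields
    }


def without_vectors_body(query_text: str, vector_fields: list[str]) -> dict:
    """Search body that returns _source but filters out large vector fields."""
    return {
        "query": {"match": {"title": query_text}},
        "_source": {"excludes": vector_fields},
    }


print(json.dumps(fields_only_body("serverless pricing", ["title", "url"]), indent=2))
print(json.dumps(without_vectors_body("serverless pricing", ["embedding"]), indent=2))
```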

Support

We charge support as a percentage of your total ECU usage. We currently have four levels of support:

- Limited support

- Base support

- Enhanced support

- Premium support
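Because support is a percentage of total ECU usage, it scales with everything else on your bill. This minimal sketch applies a support percentage on top of per-component usage; the usage figures and the 10% figure are hypothetical, so look up the percentage for your support level.

```python
# Hypothetical monthly usage per price component, in ECU.
usage_ecu = {
    "search_vcu": 120.0,
    "ingest_vcu": 45.0,
    "storage": 30.0,
    "egress": 5.0,
}


def total_with_support(usage: dict[str, float], support_pct: float) -> tuple[float, float]:
    """Return (support_ecu, total_ecu), with support charged as a percentage of usage."""
    base = sum(usage.values())
    support = base * support_pct / 100
    return support, base + support


support, total = total_with_support(usage_ecu, support_pct=10)  # hypothetical 10%
print(f"support: {support:.2f} ECU, total: {total:.2f} ECU")
```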

Project subtype profiles

We currently offer two project subtypes for Serverless Elasticsearch, referred to as “General Purpose” and “Vector Optimized”. All Serverless Elasticsearch projects created through the cloud console UI use the “General Purpose” option. You can create a “Vector Optimized” project by calling the API directly with the `optimized_for` parameter (see the documentation for all options).
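A minimal sketch of such an API call in Python, using only the standard library. The endpoint path, the `name` and `region_id` body fields, the `ApiKey` authorization scheme, and the `vector` value for `optimized_for` are assumptions based on the Elastic Cloud Serverless API and should be checked against its documentation; the project name and region ID are hypothetical. The request is built but not sent.

```python
import json
import urllib.request

# Assumed endpoint; verify against the Elastic Cloud Serverless API reference.
API_URL = "https://api.elastic-cloud.com/api/v1/serverless/projects/elasticsearch"


def build_create_project_request(api_key: str, name: str, region_id: str,
                                 optimized_for: str = "general_purpose") -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a Serverless Elasticsearch project."""
    body = {"name": name, "region_id": region_id, "optimized_for": optimized_for}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"ApiKey {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_create_project_request("<your-cloud-api-key>", "vector-trial",
                                   "aws-us-east-1", optimized_for="vector")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# urllib.request.urlopen(req) would send it; not called here so nothing is created.
```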

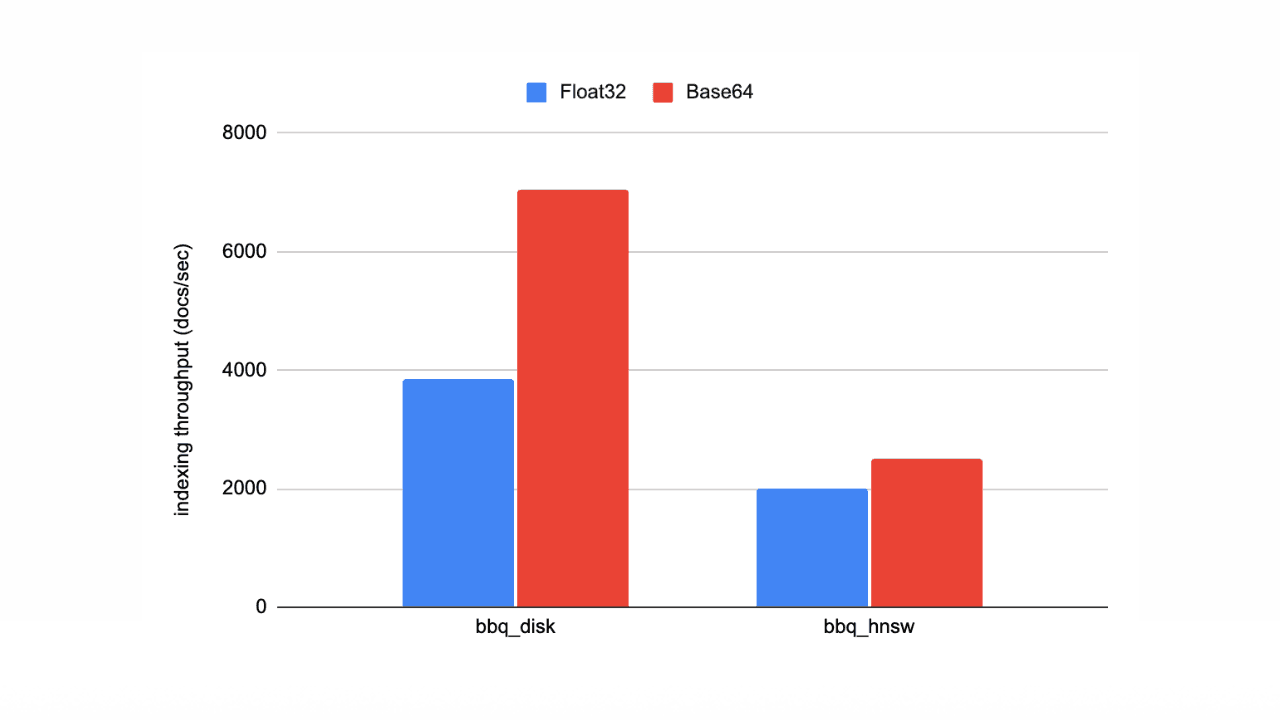

The difference between the two options is the allocation of resources. We allocate approximately four times as many resources (VCUs) to the “Vector Optimized” profile, which can make your costs up to four times higher. This is why we recommend starting with the “General Purpose” profile and switching to “Vector Optimized” only when your use case requires uncompressed, high-dimensional dense vectors and neither quantization nor DiskBBQ meets your needs.

When Serverless Elasticsearch was envisioned years ago, we thought that vector workloads would require far more resources to remain performant. However, with innovations like semantic_text, sparse_vector models, and Better Binary Quantization (BBQ), we’ve found that many vector workloads perform well on the “General Purpose” profile at a fraction of the cost. So don’t let the “Vector Optimized” label fool you: you can get excellent price-performance for vector workloads on the “General Purpose” profile.

Monitoring costs

We recognize that keeping track of your costs, especially when you are new to Elasticsearch Serverless, is important to you. We built a few tools just for this purpose, and continue to improve them for even greater visibility.

Cloud console billing usage

The Elastic Cloud Console provides billing details for your cloud account, across all cloud-based resources, including Elasticsearch Serverless. There, you can find a breakdown of all the price components described in this article. Filters allow you to zoom in on specific time periods and resources.

To further monitor your costs, you can also configure custom budget alerts from the Budgets and notifications tab under the Billing and subscriptions page.

AutoOps monitoring

We’re bringing AutoOps to Serverless! One of the key value propositions of Elasticsearch Serverless is that we ensure everything runs smoothly, but that also means you have limited observability into the infrastructure. AutoOps for Serverless gives users visibility into what is driving usage, and, therefore, costs.

AutoOps is rolled out in new Serverless regions regularly, and we're always working to add new monitoring tools. Make sure to check out the region coverage and future planned monitoring tools.