What is prompt engineering?

Prompt engineering process definition

Prompt engineering is an engineering technique used to design inputs for generative AI tools to tune large language models and refine outputs.

Prompts are referred to as inputs, while the answers generated by the generative AI tool are the outputs. Accuracy and specificity at the input level—this is where prompt engineering comes in—is how the generative AI tool produces more accurate and specific answers in turn. With effective prompt engineering, a generative AI tool better performs its generative tasks, such as producing code, writing marketing copy, creating images, analyzing and synthesizing text, and more.

Prompt engineering combines the principles of logic and coding. It also requires some human creativity. Prompt engineering requirements can vary from one technology to the next, though most generative AI tools can process natural language prompts or queries. In other words, prompt engineering is like asking a question with specific instructions that help guide how the answer is prepared.

The basics of prompt engineering

To understand the basics of prompt engineering, it is important to review generative AI and large language models (LLMs).

Generative AI, or gen AI, refers to the type of artificial intelligence that can generate content, be it text, code, images, music, or video. Large language models are part of the underlying technology that enables gen AI to produce outputs.

LLMs are the backbone of natural language in AI. They enable any text-related applications: analysis, synthesis, translation, recognition, and generation. Large language models are trained on large bodies of information, often text, and learn patterns from this text, which enables them to generate predictions or outputs when queried.

Understanding the predictive aspect of LLMs is key to understanding how prompt engineering works:

- You enter an input—the prompt. It may include an output indicator, letting the model know what format the response generated should take.

- The model 'thinks' by using the deductions and patterns (in the form of numbers) learned from the training data. During this process, it attempts to recognize patterns, which is why the results generated are referred to as predictions.

- It then generates an output—a response.

At a basic level, an effective prompt therefore might contain an instruction or a question and be bolstered by context, inputs, or examples.

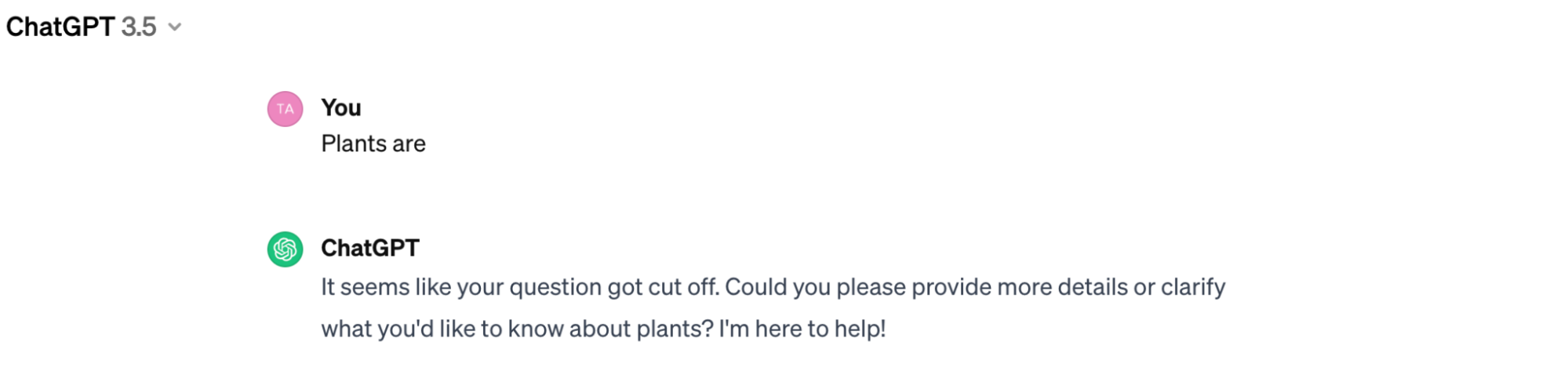

Here's a basic example of prompt engineering:

Input:

Plants are

With this prompt, we'd like the AI to complete the sentence. However, it lacks specificity. When entered into OpenAI's ChatGPT 3.5 model, it generates the following output:

So we attempt to clarify by adding an ellipsis.

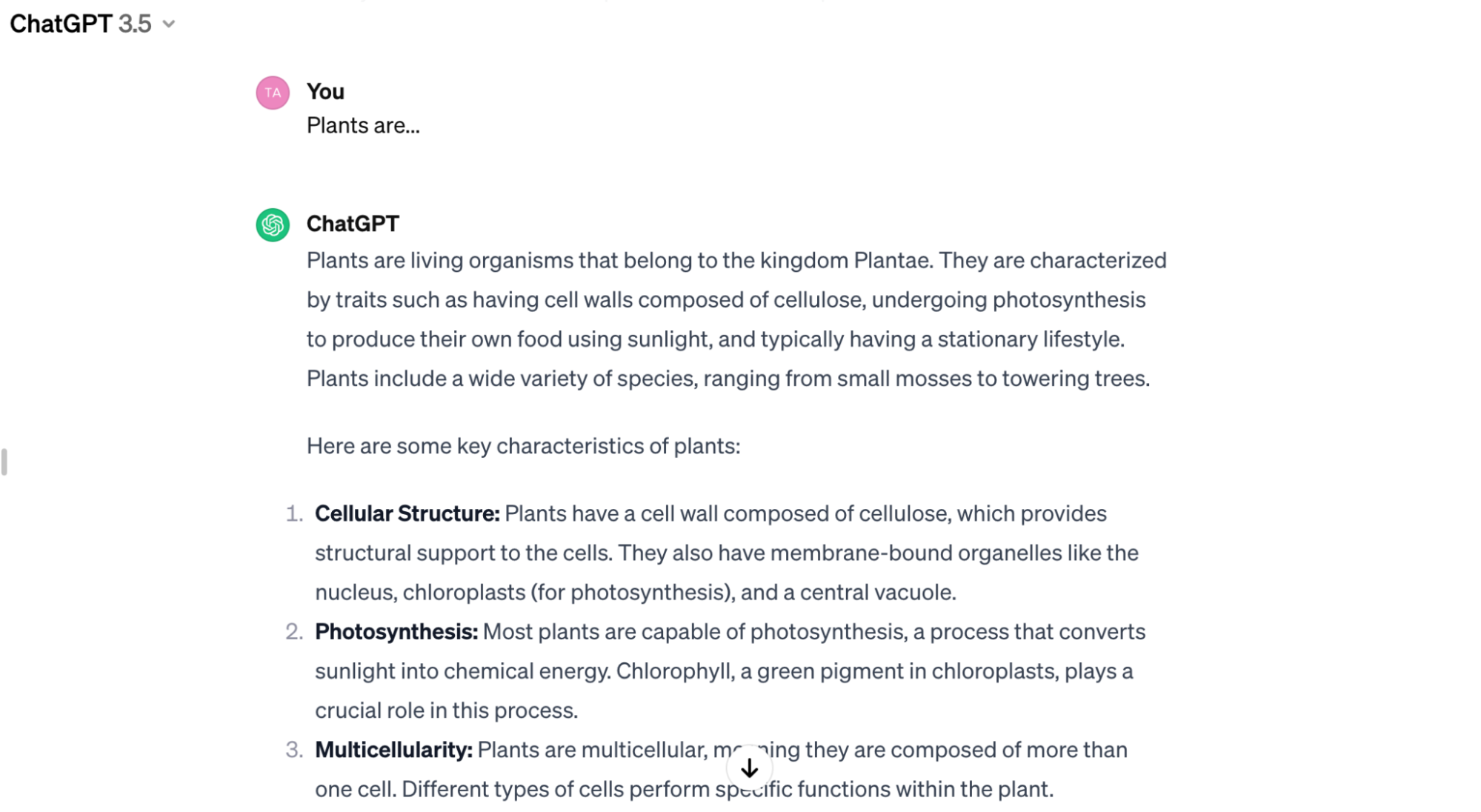

Input:

Plants are…

The ellipsis is enough to generate an output that completes the sentence. However, the model over-delivers:

Since we're not looking for the entire encyclopedic entry on plants, we specify our instructions.

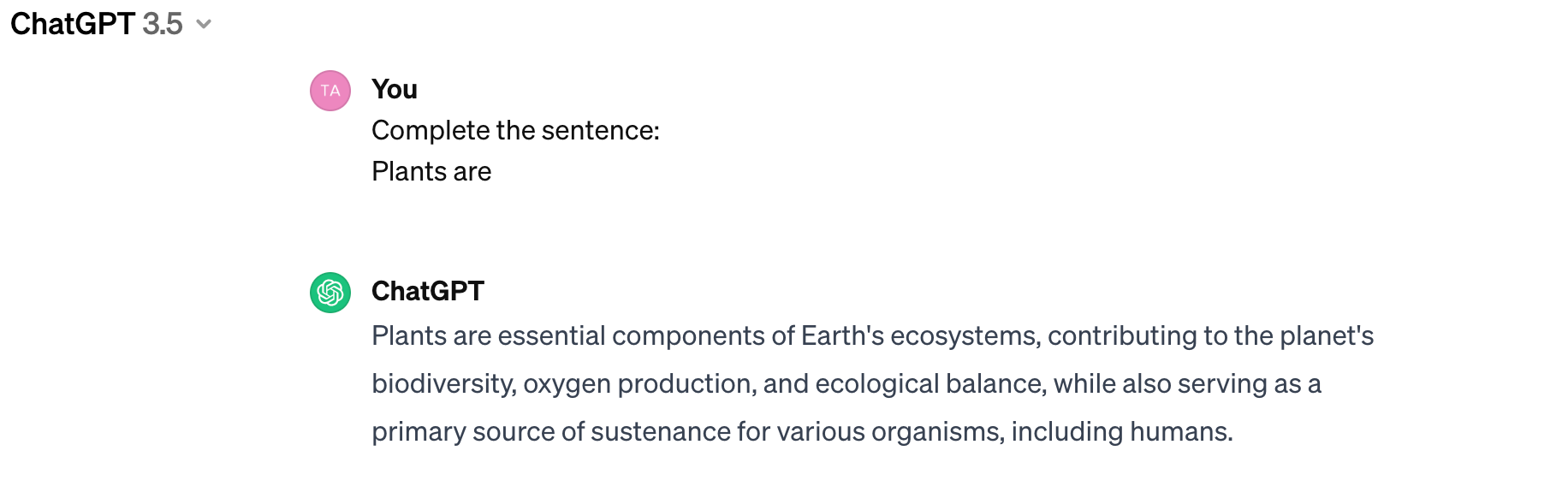

Input:

Complete the sentence:

Plants are

Now the model produces this output:

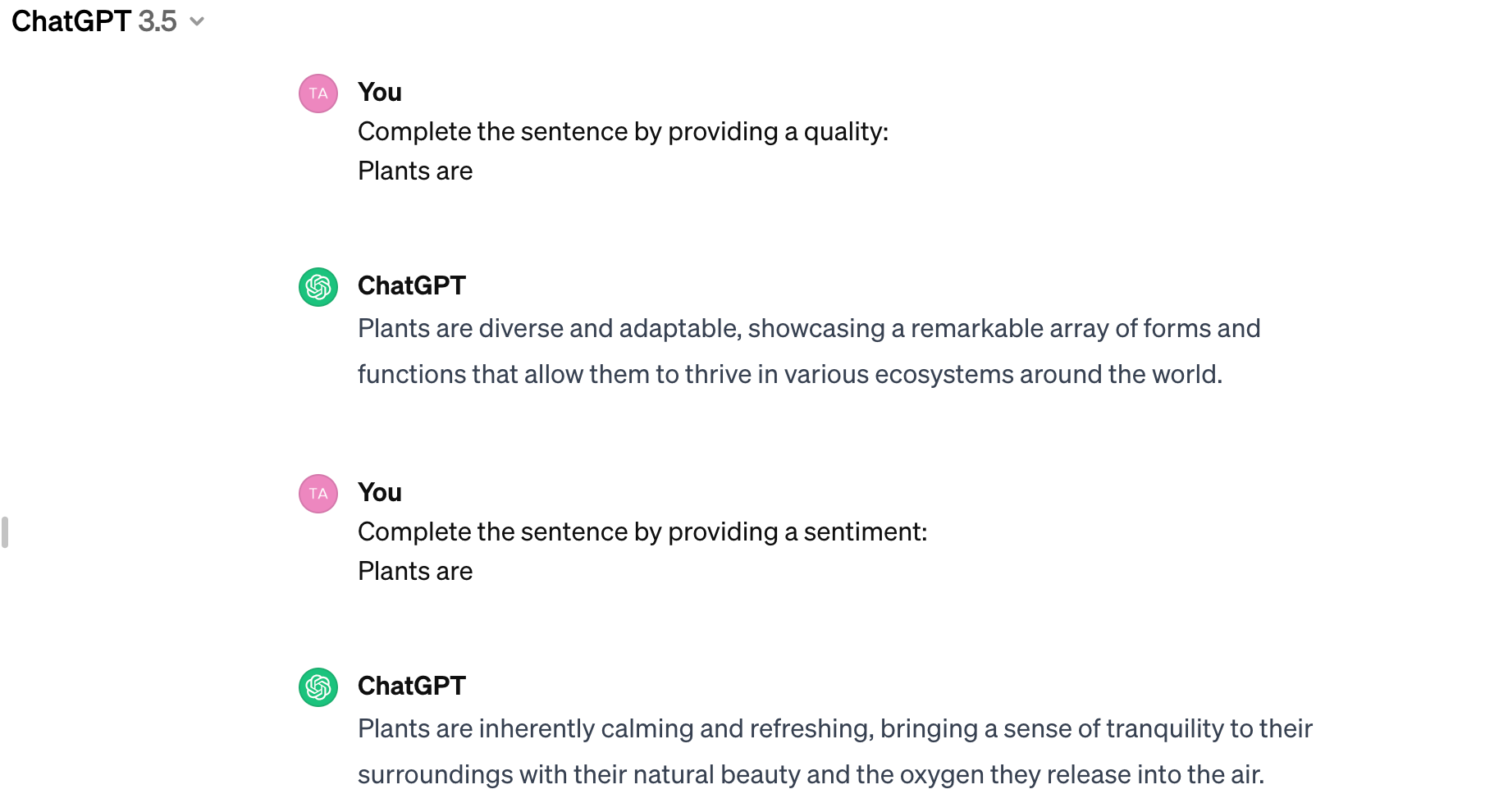

We can continue to specify our instructions:

This iterative instruction tuning is the basic principle of prompt engineering. It will vary from model to model and can take on various formats depending on the task required.

Why is prompt engineering important?

Prompt engineering is an important practice in the field of generative AI because it improves AI-powered tools and consequently betters the user experience and the results users obtain from the models.

Zero-shot training is one such engineering technique that consists of feeding a prompt that is not part of the model's training data to generate a specific result.

Developers might experiment with this type of prompt engineering to tune a large language model, like GPT-3, to improve its application as a customer-service chatbot, or its industry-specific content-generating ability—think contracts, marketing emails, and so on.

Let's look at the importance of prompt engineering in a real-life example:

An e-commerce company chooses to build an application that incorporates a generative AI tool to improve its customer service. They require the AI assistant to have the ability to assess customer sentiment, review customer files and customer transcripts, and provide the customer with options relevant to their queries. The company will need to fine-tune the tool with the help of prompt engineering to perform these specific tasks specifically and in the context of the products or services the company sells. In this scenario, prompt engineering ensures that the AI assistant in the application is useful to both the company and the user. The company may also want to include prompts before a customer's input – to ensure the safety of the customer's experience and to not open up the tool to misuse. For example, a prompt designed to ensure a good user experience may look like this: "Respond only to questions that are relevant to e-commerce or shopping experiences." For questions that are not related to e-commerce or shopping, reply with "I cannot respond to that question, consider contacting customer support instead."

Types of prompt engineering

For every type of task or desired output, there is an associated type of prompt engineering. Here are several types of prompt engineering techniques:

- Instructional prompting: Like the example above, this type of prompt engineering requires the designer to craft a prompt with explicit instructions for the desired output.

- Few-shot prompting: While zero-shot prompting is a type of prompt that offers the model no examples of sought answers, few-shot prompting consists of inputting some examples to demonstrate how you would like the model to respond.

- Chain-of-thought prompting: Useful for mathematical problems, this technique consists of laying out reasoning steps in the prompt, so the model provides its reasoning in the output.

- Generated knowledge prompting: This technique helps fine-tune the large language model by feeding it a fact, coupled with information relevant to the fact so it may first generate a knowledge-based output. Then, a secondary input will prompt the model on the accuracy of a fact, coupled with the knowledge it produced in the first output. In response, the model should output an accurate response to the query. This is a technique that is used to improve the accuracy of large language models.

- Tree-of-thought prompting: This technique enables more complex problem-solving by generalizing chain-of-thought prompting and inputting natural language intermediate steps, or thoughts, into the model. Tree-of-thought prompting gives a language model the ability to self-evaluate the effect of intermediate thoughts on problem-solving. In other words, it is a prompt engineering technique that encourages the model to go through a deliberate reasoning process.

This is a non-exhaustive list of several prompt engineering techniques. As research into generative AI and large language models continues, various new prompt engineering techniques will emerge.

Challenges and limitations of prompt engineering

Although prompt engineering helps get the most out of generative AI technology, it also presents some challenges and limitations related to adversarial prompting, factuality, and biases.

Adversarial prompting is a type of prompt injection that solicits 'adversarial behavior' from the large language model. It presents important safety issues to users, and these types of prompts can hijack the output and affect a model's accuracy.

There are various documented types of adversarial prompting, including prompt leaking and jailbreaking—techniques that aim to get the large language model to do what it was never intended to do. Such behavior is often harmful. So while prompt engineering can help improve the large language model, malicious prompt engineering can have the opposite effect.

The factuality and biases of outputs generated by large language models also point to the limitations of prompt engineering. A large language model is only as good as the data it was trained on. That data inherently contains biases that the model learns. As a result, even when effectively prompted, the model will generate biased responses or responses that read as very convincing but may not be accurate or factual at all. These errors are also referred to as hallucinations. Some additional prompt engineering—adding context and knowledge in the input string—might help improve the output, but the user would have to know first that the original output the model generated was incorrect.

To benefit from the full potential of prompt engineering, users must use discernment and apply verification techniques or processes.

At a human level, prompt engineering will disrupt the job market. According to McKinsey, generative AI has the potential to automate work tasks that take up to 70% of employees' time1. Prompt engineering, already a sought-after specialty skill in some fields, will likely become a basic requirement for most employees. Industries and organizations will face the challenge of upskilling their workers.

Strategies for effective prompt engineering

Prompt engineering combines coding, logic, and some art. Strategies for effective prompt engineering should rely on all of the above. Consider these strategies:

Keep it simple… at first

Keep it simple—when you start. Designing prompts is an iterative process, so starting small helps you understand how the model responds and how to shape your prompt for optimal results.

Be specific

Specificity at the input level ensures accuracy at the output level. Use active commands in your instructions such as 'Complete, 'Summarize,' 'Translate,' and so on, and add modifiers that set clear interpretation boundaries for the model such as 'Translate this text into French-Canadian,' or 'Summarize Mary Shelley's Frankenstein in 100 words or fewer.'

Consider our example on the basics of prompt engineering. As we refine the prompt, we specify what we want the model to tell us about plants as it completes the sentence.

Tell it what to do (not what not to do)

Phrase what you want the model to do in the positive, rather than the negative. Meaning, don't tell it what not to do. Again, use active voice prompts and be specific to the desired outcome.

Be concise

While being specific is key, it is important to balance that specificity with concision and precision. Long prompts, even if specific, often muddle the models.

When prompting a large language model, consider how you would respond to a set of instructions to perform a task. Do you know clearly what is being asked of you? Have you lost your way in the brief because it is too long, or too wordy? This thinking, coupled with your understanding of the way large language models work, will guide your prompt engineering for optimal results.

Prompt engineering with Elastic

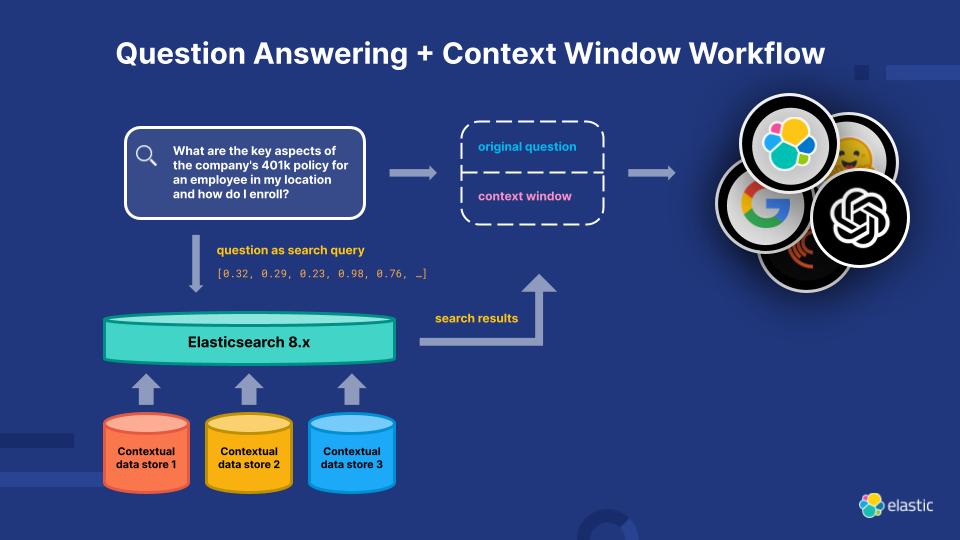

The Elasticsearch Relevance Engine (ESRE) is built for artificial intelligence-powered search applications, combining natural language processing (NLP) and generative AI to enhance customers' search experiences.

Powered by the Elastic Search Relevance Engine, Elastic's AI Assistants for Security and Observability can help your team with alert investigation, incident response, and query generation or conversion. The Elastic AI Assistants offer recommendations for prompts that empower your teams' cybersecurity or observability operations.

What you should do next

What you should do next Whenever you're ready... here are 4 ways we can help you bring data to your business:

- Start a free trial and see how Elastic can help your business.

- Tour our solutions, see how Elastic's Search AI Platform works, and how our solutions will fit your needs.

- Learn how to set up your Elasticsearch Cluster and get started on data collection and ingestion with our 45-minute webinar.

- Share this article with someone you know who'd enjoy reading it. Share it with them via email, LinkedIn, Twitter, or Facebook.

Footnotes

Chui, Michael. “Economic potential of generative AI: The next productivity frontier,” McKinsey, 14 June, 2023, https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#/