February 6, 2026

Using Groq with Elasticsearch for intelligent queries

Learn how to use Groq with Elasticsearch to run LLM queries and natural language searches in milliseconds.

February 5, 2026

ES|QL dense vector search support

Using ES|QL for vector search on your dense_vector data.

February 4, 2026

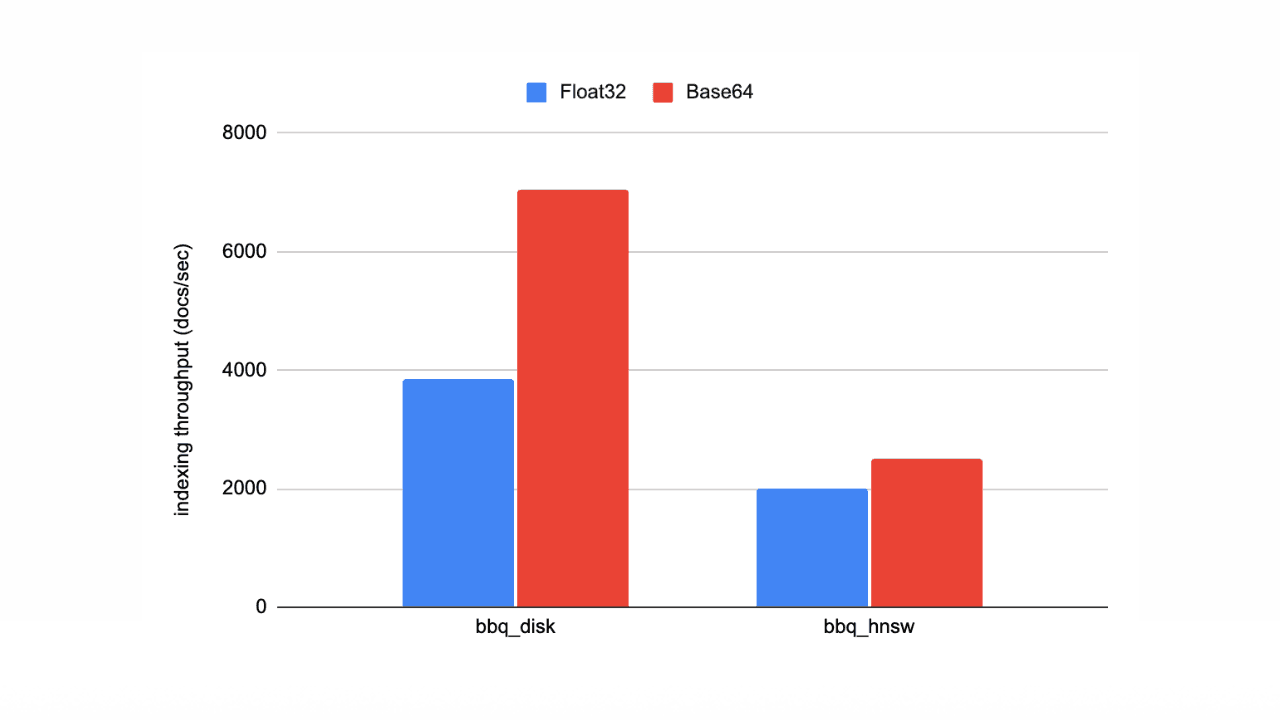

Speed up vector ingestion using Base64-encoded strings

Introducing Base64-encoded strings to speed up vector ingestion in Elasticsearch.

February 3, 2026

Jina Rerankers bring fast, multilingual reranking to Elastic Inference Service (EIS)

Elastic now offers jina-reranker-v2-base-multilingual and jina-reranker-v3 on EIS, enabling fast multilingual reranking directly in Elasticsearch for higher-precision retrieval, RAG, and agentic workflows without added infrastructure.

February 3, 2026

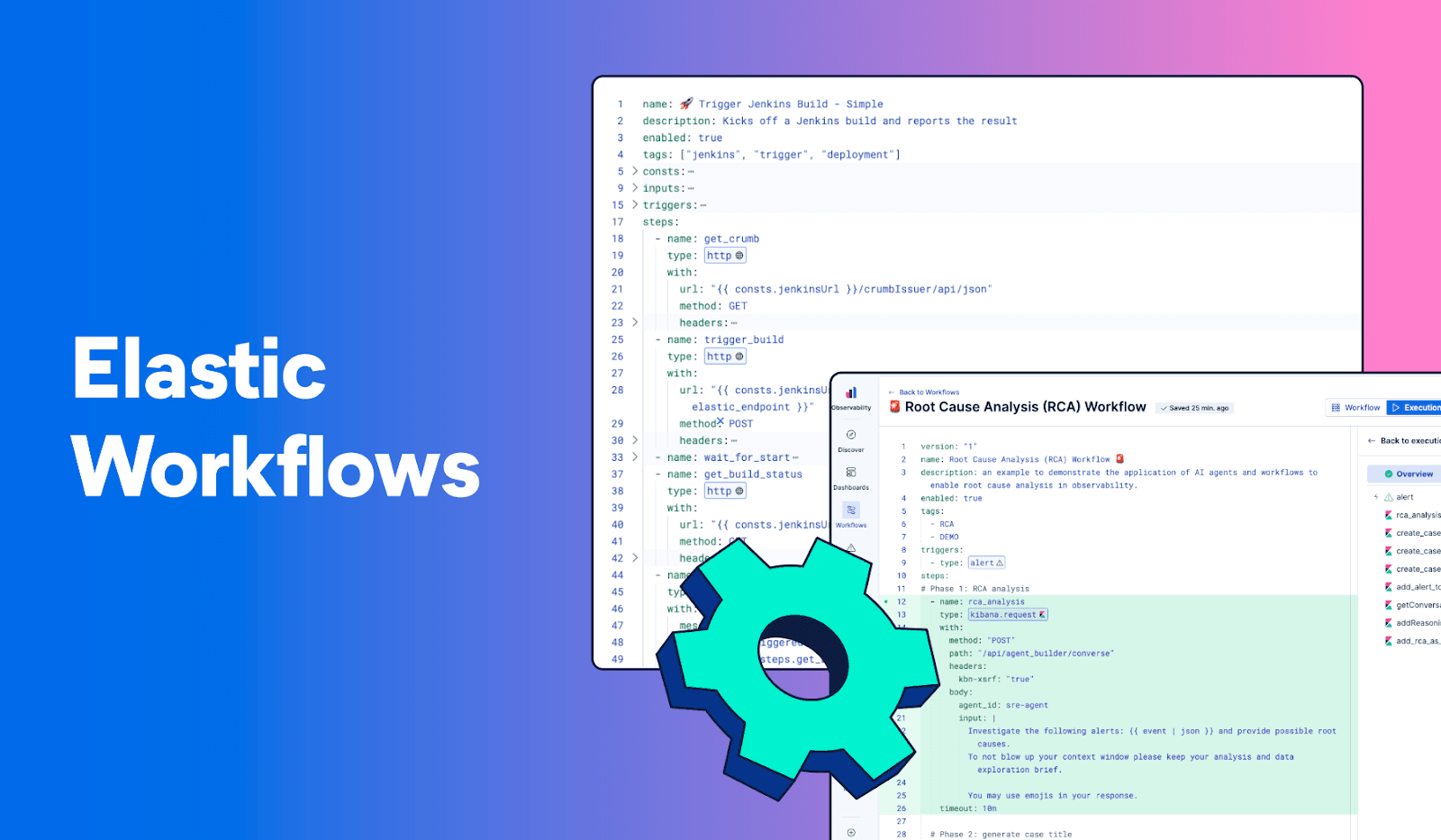

Building automation with Elastic Workflows

A practical introduction to workflow automation in Elastic. Learn what workflows look like, how they work, and how to build one.

February 3, 2026

Skip MLOps: Managed cloud inference for self-managed Elasticsearch with EIS via Cloud Connect

Introducing Elastic Inference Service (EIS) via Cloud Connect, which provides a hybrid architecture for self-managed Elasticsearch users and removes MLOps and CPU hardware barriers for semantic search and RAG.

February 2, 2026

Cookbook for a production-grade generative AI sandbox

Exploring the recipe for a generative AI sandbox, giving developers a secure environment to deploy application prototypes while enabling privacy and innovation.

January 30, 2026

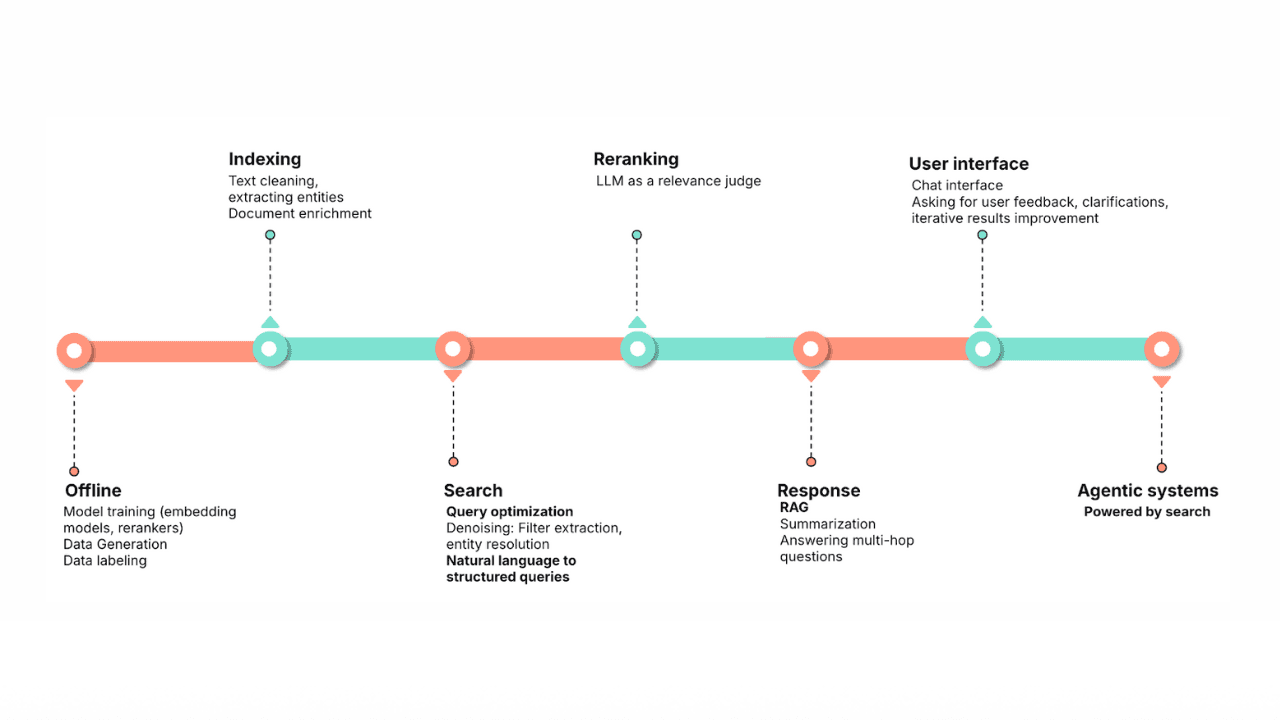

Query rewriting strategies for LLMs and search engines to improve results

Exploring query rewriting strategies and explaining how to use the LLM's output to boost the original query's results and maximize search relevance and recall.

January 29, 2026

Building human-in-the-loop (HITL) AI agents with LangGraph and Elasticsearch

Learn what human-in-the-loop (HITL) is and how to build an HITL agent for a flight system with LangGraph and Elasticsearch.