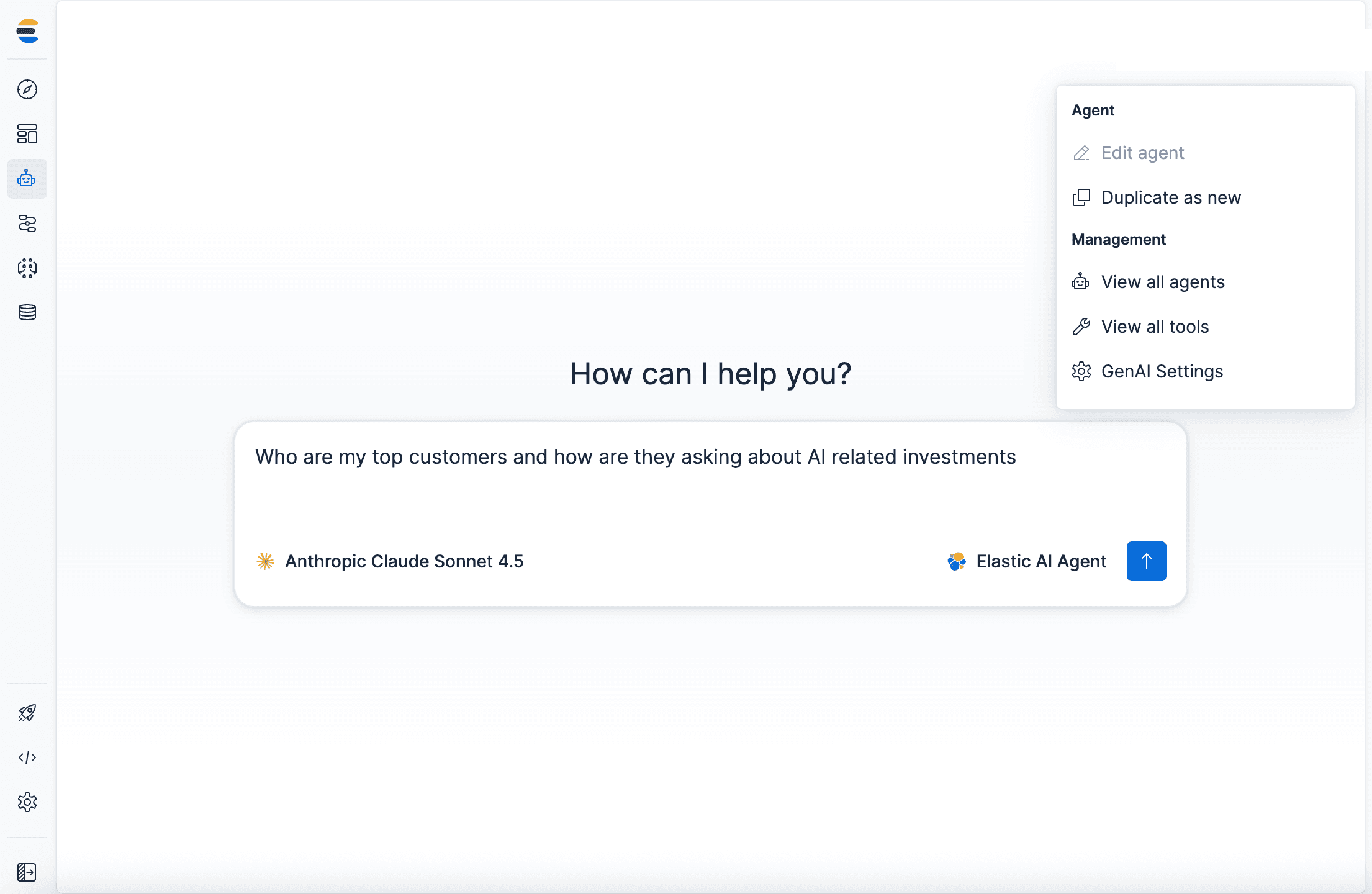

Agent Builder is now generally available (GA). Get started with an Elastic Cloud Trial, and check out the documentation for Agent Builder here.

Introduction

Current LLM-backed systems are quickly evolving beyond single-model applications into complex networks where specialized agents work together to accomplish tasks never before thought possible by modern computing. As these systems grow in complexity, the infrastructure enabling agent communication and tool access becomes the main focus of development. Two complementary approaches have emerged to address these needs: Agent2Agent (A2A) protocols for multi-agent coordination, and the Model Context Protocol (MCP) for standardized tool and resource access.

Understanding when to use each protocol, alone or together, can significantly impact the scalability, maintainability, and effectiveness of your applications. This article explores the concepts and implementations of A2A within the practical example of a digital newsroom, where specialized LLM agents collaborate to research, write, edit, and publish news articles.

An accompanying repository may be found here, and we will examine concrete examples of A2A in action near the end of the article in Section 5.

Prerequisites

The repository consists of Python-based implementations of the A2A agents. An API server is provided in Flask, along with a custom Python messaging service named Event Hub, which routes messages for logging and UI updates. Lastly, a React UI is provided for standalone use of the newsroom features. Everything is contained within a Docker image for easier setup. If you’d like to run the services directly on your machine, you’ll want to ensure you have these technologies installed:

Languages and runtimes

- Python 3.12 - Core backend language

- Node.js 18+ - Optional React UI

Core frameworks and SDKs

- A2A SDK 0.3.8 - Agent coordination and communication

- Anthropic SDK - Claude integration for AI generation

- Uvicorn - ASGI server for running agents

- FastMCP 2.12.5+ - MCP Server implementation

- React 18.2 - Frontend UI framework

Data & search

- Elasticsearch 9.1.1+ - Article indexing and search

Docker deployment (optional, but recommended)

- Docker 28.5.1+

Section 1: What is Agent2Agent (A2A)?

Definition and core concepts

Agent2Agent (A2A) is a standardized protocol for interaction between independent LLM agents. Rather than a single monolithic system handling all tasks, A2A enables multiple specialized agents to communicate, coordinate, and collaborate to accomplish complex workflows that would be difficult, slow, or downright impossible for any single agent to handle efficiently.

Official specification: https://a2a-protocol.org/latest/specification/

Origins and evolution

The concept of Agent2Agent communication, or multi-agent systems, has roots in distributed systems, microservices, and multi-agent research dating back decades. Early work in distributed artificial intelligence laid the groundwork for agents that could negotiate, coordinate, and collaborate. These early systems were dedicated to large-scale social simulations, academic research, and power-grid management.

As LLMs became widely available and cheaper to operate, multi-agent systems became accessible to “prosumer” markets, with backing from Google and the broader AI research community. These systems are now commonly called Agent2Agent systems, and the A2A protocol has emerged as a modern standard designed specifically for an era in which multiple large language models coordinate efforts and tasks.

The A2A protocol ensures seamless communication and coordination between agents by applying consistent standards and principles to interaction points where LLMs connect and communicate. This standardization allows agents from different developers - using different underlying models - to work together effectively.

Communication protocols are not new; they underpin almost every digital transaction on the internet. If you typed https://www.elastic.co/search-labs into a browser to reach this article, chances are high that the TCP/IP, HTTP, and DNS protocols all executed along the way, ensuring a consistent browsing experience.

Key characteristics

A2A systems are built on several foundational principles to ensure smooth communication. Building upon these principles ensures that different agents, based on differing LLMs, frameworks, and programming languages, all interact seamlessly.

Here are the four main principles:

- Message passing: Agents communicate through structured messages with well-defined properties and formats

- Coordination: Agents orchestrate complex workflows by delegating tasks to one another and managing dependencies without blocking other agents

- Specialization: Each agent focuses on a specific domain or capability, becoming an expert in its area and offering task completion based around that skillset

- Distributed state: State and knowledge are distributed across agents rather than centralized, with agents having the ability to update each other on progress with task state and partial returns (artifacts)

The newsroom: A running example

Imagine a digital newsroom powered by AI agents, each specializing in a different aspect of journalism:

- News Chief (coordinator/client): Assigns stories and oversees the workflow

- Reporter Agent: Writes articles based on research and interviews

- Researcher Agent: Gathers facts, statistics, and background information

- Archive Agent: Searches historical articles and identifies trends using Elasticsearch

- Editor Agent: Reviews articles for quality, style, and SEO optimization

- Publisher Agent: Publishes approved articles to the blog platform via CI/CD

These agents don't work in isolation; when the News Chief assigns a story about renewable energy adoption, the Reporter needs the Researcher to gather statistics, the Editor to review the draft, and the Publisher to publish the final piece. This coordination happens through A2A protocols.

Section 2: Understanding A2A architecture

Client agent and remote agent roles

In A2A architecture, agents take on two primary roles. The Client Agent is responsible for formulating and communicating tasks to other agents in the system. It identifies remote agents and their capabilities, using this information to make informed decisions about task delegation. The client agent coordinates the overall workflow, ensuring that tasks are properly distributed and that the system progresses toward its goals.

The Remote Agent, in contrast, acts on tasks delegated by clients. It provides information or takes specific actions in response to requests, but does not initiate actions independently. Remote agents may also communicate with other remote agents as needed to fulfill their assigned responsibilities, creating a collaborative network of specialized capabilities.

In our newsroom, the News Chief acts as the client agent, while the Reporter, Researcher, Editor, and Publisher are remote agents that respond to requests and coordinate with each other.

Core A2A capabilities

A2A protocols define several capabilities that enable multi-agent collaboration:

1. Discovery

A2A servers must announce their capabilities so clients know when and how to utilize them for specific tasks. This is accomplished through Agent Cards—JSON documents that describe an agent's abilities, inputs, and outputs. Agent Cards are made available at consistent, well-known endpoints (such as the recommended /.well-known/agent-card.json endpoint), allowing clients to discover and query an agent's capabilities before initiating collaboration.

Below is an example Agent Card for Elastic's custom Archive Agent "Archie Archivist". Note that software providers such as Elastic host their A2A agents and supply a URL for access:

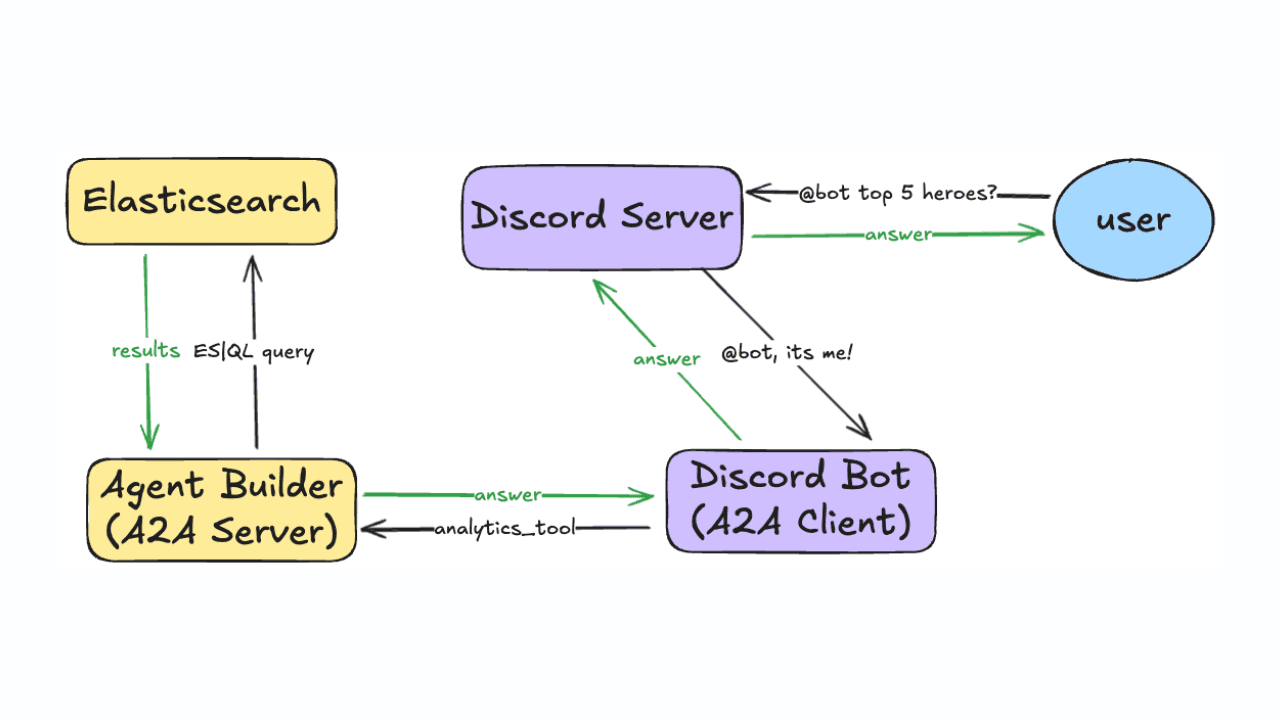

This Agent Card reveals several important aspects of Elastic's Archive Agent. The agent identifies itself as "Archie Archivist" and clearly states its purpose: helping find historical news documents in an Elasticsearch index. The card specifies the provider (Elastic) and the protocol version (0.3.0), ensuring compatibility with other A2A-compliant agents. Most importantly, the skills array enumerates the specific capabilities this agent offers, including powerful search functionality and intelligent index exploration. Each skill defines what input and output modes it supports, allowing clients to understand exactly how to communicate with this agent. This agent is derived from Elastic’s Agent Builder service, which provides a suite of native LLM-backed tools and API endpoints to have a conversation with your data store, not just retrieve from it. Access to A2A Agents in Elasticsearch may be found here.
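To make the discovery step more concrete, here is a minimal sketch of how a client might build the well-known URL and inspect a card's skills. The top-level field names follow the Agent Card layout described above, but the host name, skill ids, and tags are hypothetical placeholders and not the actual contents of Archie Archivist's card.

```python
from urllib.parse import urljoin

# Recommended well-known path for Agent Cards.
AGENT_CARD_PATH = "/.well-known/agent-card.json"


def agent_card_url(base_url: str) -> str:
    """Build the discovery URL, assuming the agent is served from the host root."""
    return urljoin(base_url, AGENT_CARD_PATH)


# Hypothetical, trimmed-down card; every value here is an illustrative assumption.
EXAMPLE_CARD = {
    "name": "Archie Archivist",
    "description": "Helps find historical news documents in an Elasticsearch index.",
    "provider": {"organization": "Elastic"},
    "protocolVersion": "0.3.0",
    "defaultInputModes": ["text/plain"],
    "defaultOutputModes": ["text/plain"],
    "skills": [
        {"id": "search_archive", "name": "Search archive", "tags": ["search"]},
        {"id": "explore_index", "name": "Explore index", "tags": ["index"]},
    ],
}


def skills_with_tag(card: dict, tag: str) -> list[str]:
    """Return the ids of the skills whose tags include the given tag."""
    return [s["id"] for s in card.get("skills", []) if tag in s.get("tags", [])]


print(agent_card_url("https://agents.example.com"))
print(skills_with_tag(EXAMPLE_CARD, "search"))
```

In a real client the card would be fetched over HTTP from that URL and parsed as JSON before any task is delegated; the dictionary above only stands in for that response.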

2. Negotiation

Clients and agents need to agree on communication methods—whether interactions happen via text, forms, iframes, or even audio/video—to ensure proper user interaction and data exchange. This negotiation happens at the beginning of agent collaboration and establishes the protocols that will govern their interaction throughout the workflow. For example, a voice-based customer service agent might negotiate to communicate via audio streams, while a data analysis agent might prefer structured JSON. The negotiation process ensures that both parties can effectively exchange information in a format that suits their capabilities and the requirements of the task at hand.

The capabilities listed in the above JSON snippet all have input and output schemas; these set an expectation of how to interact with this agent from other agents.

3. Task and state management

Clients and agents need mechanisms to communicate task status, changes, and dependencies throughout task execution. This includes managing the entire lifecycle of a task from creation and assignment through progress updates and status changes. Typical statuses include pending, in progress, completed, or failed states. The system must also track dependencies between tasks to ensure that prerequisite work is completed before dependent tasks begin. Error handling and retry logic are also essential components, allowing the system to recover gracefully from failures and continue making progress toward the main goal.
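The lifecycle described above can be sketched as a small state machine. The status names mirror the ones in the previous paragraph rather than the exact state names in the A2A specification, and the `Task` class, its retry counter, and `ready_to_start` are hypothetical helpers written for illustration.

```python
from enum import Enum


class TaskStatus(Enum):
    PENDING = "pending"
    IN_PROGRESS = "in_progress"
    COMPLETED = "completed"
    FAILED = "failed"


# Allowed transitions; FAILED -> PENDING models a retry.
TRANSITIONS = {
    TaskStatus.PENDING: {TaskStatus.IN_PROGRESS},
    TaskStatus.IN_PROGRESS: {TaskStatus.COMPLETED, TaskStatus.FAILED},
    TaskStatus.FAILED: {TaskStatus.PENDING},
    TaskStatus.COMPLETED: set(),
}


class Task:
    def __init__(self, task_id, depends_on=(), max_retries=2):
        self.task_id = task_id
        self.depends_on = tuple(depends_on)
        self.status = TaskStatus.PENDING
        self.retries_left = max_retries

    def move_to(self, new_status):
        """Apply a status change, rejecting transitions the lifecycle does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} not allowed")
        if self.status is TaskStatus.FAILED:  # a retry consumes one attempt
            if self.retries_left == 0:
                raise ValueError("no retries left")
            self.retries_left -= 1
        self.status = new_status


def ready_to_start(task, tasks_by_id):
    """A pending task may start only once all of its prerequisites are completed."""
    return task.status is TaskStatus.PENDING and all(
        tasks_by_id[dep].status is TaskStatus.COMPLETED for dep in task.depends_on
    )


research = Task("research")
draft = Task("draft", depends_on=["research"])
tasks = {t.task_id: t for t in (research, draft)}
print(ready_to_start(draft, tasks))  # the research task has not finished yet
```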

Example task message:

This example task message demonstrates several key aspects of A2A communication.

- The message structure includes metadata such as a unique message identifier, the type of message being sent, sender and receiver identification, and a timestamp for tracking and debugging.

- The payload contains the actual task information, specifying which capability is being invoked on the remote agent, and providing the necessary parameters to execute that capability.

- The context section provides additional information that helps the receiving agent understand the broader workflow, including deadlines and priority levels that inform how the agent should allocate its resources and schedule its work.
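A message with the three parts described above (metadata, payload, and context) might look like the following sketch. Every field name and value here is an assumption chosen for readability; it illustrates the shape of the information and is not the A2A wire format.

```python
import json
import uuid
from datetime import datetime, timezone


def build_task_message(sender, receiver, capability, parameters, deadline, priority):
    """Assemble a task message with metadata, payload, and context sections."""
    return {
        # Metadata: identifies and timestamps the message for tracking and debugging.
        "message_id": str(uuid.uuid4()),
        "message_type": "task_request",
        "sender": sender,
        "receiver": receiver,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Payload: which capability to invoke on the remote agent, and with what.
        "payload": {"capability": capability, "parameters": parameters},
        # Context: workflow hints that help the receiver schedule its work.
        "context": {"deadline": deadline, "priority": priority},
    }


message = build_task_message(
    sender="news_chief",
    receiver="researcher_agent",
    capability="research_topic",
    parameters={"topic": "renewable energy adoption"},
    deadline="2025-01-01T18:00:00Z",
    priority="high",
)
print(json.dumps(message, indent=2))
```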

4. Collaboration

Clients and agents must support dynamic yet structured interaction, enabling agents to request clarifications, information, or sub-actions from the client, other agents, or users. This creates a collaborative environment where agents can ask follow-up questions when initial instructions are ambiguous, request additional context to make better decisions, delegate subtasks to other agents with more appropriate expertise, and provide intermediate results for feedback before proceeding with the full task. This multidirectional communication ensures that agents aren't working in isolation but are instead engaged in an ongoing dialogue that leads to better outcomes.
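As a rough illustration of this back-and-forth, the sketch below shows a remote agent that asks a follow-up question when a request is ambiguous, and a client loop that answers it. The `input_required` status string, the handler, and the request fields are hypothetical stand-ins for real agents and messages.

```python
def researcher_handle(request: dict) -> dict:
    """Hypothetical remote agent: asks for clarification when the region is missing."""
    if "region" not in request:
        return {
            "status": "input_required",
            "question": "Which region should the statistics cover?",
        }
    summary = f"Statistics on {request['topic']} in {request['region']}"
    return {"status": "completed", "artifact": summary}


def run_with_clarification(request: dict, answer_question, max_rounds: int = 3) -> dict:
    """Send a request and answer follow-up questions until the agent finishes."""
    response = researcher_handle(request)
    for _ in range(max_rounds):  # bounded, so an unanswerable question cannot loop forever
        if response["status"] != "input_required":
            break
        request = {**request, **answer_question(response["question"])}
        response = researcher_handle(request)
    return response


result = run_with_clarification(
    {"topic": "solar adoption"},
    answer_question=lambda question: {"region": "EU"},
)
print(result)
```

The `answer_question` callback could be backed by the client agent's own reasoning, another agent, or a human user, which is the multidirectional flow the paragraph above describes.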

Distributed, peer-to-peer communication

A2A enables distributed communication where agents may be hosted by different organizations, with some agents maintained in-house while others are provided by third-party services. These agents can run on different infrastructures - potentially spanning multiple cloud providers or on-premises data centers. They may use different underlying LLMs, with some agents powered by GPT models, others by Claude, and still others by open-source alternatives. Agents might even operate across different geographic regions to comply with data sovereignty requirements or reduce latency. Despite this diversity, all agents agree upon a common communication protocol for exchanging information, ensuring interoperability regardless of implementation details. This distributed architecture provides flexibility in how systems are built and deployed, allowing organizations to mix and match the best agents and infrastructure for their specific needs.

This is the final architecture of the newsroom application:

Section 3: Model Context Protocol (MCP)

Definition and purpose

The Model Context Protocol (MCP) is a standardized protocol developed by Anthropic to enhance and empower an individual LLM with user-defined tools, resources, and prompts, among other supplemental codebase additions. MCP provides a universal interface between language models and the external resources they need to complete tasks effectively. This article outlines the current state of MCP with examples of use cases, emerging trends, and Elastic’s own implementation.

Core MCP concepts

MCP operates on a client-server architecture. Clients and servers are its two roles, and servers expose three kinds of primitives (tools, resources, and prompts):

- Clients: applications (like Claude Desktop or custom AI applications) that connect to MCP servers to access their capabilities.

- Servers: applications that expose resources, tools, and prompts to language models. Each server specializes in providing access to specific capabilities or data sources.

- Tools: user-defined functions that models can invoke to take actions, such as searching databases, calling external APIs, or executing transformations on data.

- Resources: data sources that models can read from, served with dynamic or static data, and accessed via URI patterns (similar to REST routes)

- Prompts: reusable prompt templates with variables that guide the model in accomplishing specific tasks.

Request-response pattern

MCP follows a familiar request-response interaction pattern similar to REST APIs. The client (LLM) requests a resource or invokes a tool, then the MCP server processes the request and returns the result, which the LLM uses to continue its task. This centralized model with peripheral servers provides a simpler integration pattern compared to peer-to-peer agent communication.
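The request-response cycle can be sketched with a toy, in-process stand-in for a server. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method; the `search_archive` tool and its canned reply are hypothetical, and a real server would normally be built with an SDK such as FastMCP rather than by hand.

```python
import json


def search_archive(query: str) -> str:
    """Hypothetical tool: a real one would query a data store."""
    return f"3 archived articles match '{query}'"


TOOLS = {"search_archive": search_archive}


def handle_request(raw: str) -> str:
    """Process one JSON-RPC 2.0 request and return the JSON response."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req.get("id")}
    if req.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    params = req["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return json.dumps(reply)
    text = tool(**params.get("arguments", {}))
    reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "search_archive", "arguments": {"query": "solar"}},
}
response = json.loads(handle_request(json.dumps(request)))
print(response["result"]["content"][0]["text"])
```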

MCP in the newsroom

In our newsroom example, individual agents use MCP servers to access the tools and data they need:

- Researcher Agent uses:

  - News API MCP Server (access to news databases)

  - Fact-Checking MCP Server (verify claims against trusted sources)

  - Academic Database MCP Server (scholarly articles and research)

- Reporter Agent uses:

  - Style Guide MCP Server (newsroom writing standards)

  - Template MCP Server (article templates and formats)

  - Image Library MCP Server (stock photos and graphics)

- Editor Agent uses:

  - Grammar Checker MCP Server (language quality tools)

  - Plagiarism Detection MCP Server (originality verification)

  - SEO Analysis MCP Server (headline and keyword optimization)

- Publisher Agent uses:

  - CMS MCP Server (content management system API)

  - CI/CD MCP Server (deployment pipeline)

  - Analytics MCP Server (tracking and monitoring)

Section 4: Architecture comparison

When to use A2A

A2A architecture excels in scenarios requiring genuine multi-agent collaboration. Multi-step workflows requiring coordination benefit greatly from A2A, particularly when tasks involve multiple sequential or parallel steps, workflows requiring iteration and refinement, and processes with checkpoints and validation needs. In our newsroom example, the story workflow requires the Reporter to write, but may need to iterate back to the Researcher if confidence in certain facts is low, then proceed to the Editor, and finally to the Publisher.
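The iterate-then-proceed flow in the newsroom example can be sketched as a small coordination loop. The agent callables, the `confidence` field, and the 0.8 threshold are assumptions made for illustration; in the real system each call would be an A2A request to a remote agent.

```python
def run_story_workflow(topic, research, write, edit, publish,
                       min_confidence=0.8, max_rounds=3):
    """Write a story, looping back to research while confidence stays low."""
    notes = research(topic, previous=None)
    draft = None
    for _ in range(max_rounds):
        draft = write(topic, notes)
        if draft["confidence"] >= min_confidence:
            break  # checkpoint passed, move on to editing
        notes = research(topic, previous=notes)  # ask the Researcher for more
    return publish(edit(draft))


# Hypothetical stand-ins for the remote agents.
def fake_research(topic, previous):
    return (previous or []) + [f"fact {len(previous or []) + 1} about {topic}"]


def fake_write(topic, notes):
    return {"text": "; ".join(notes), "confidence": 0.5 + 0.2 * len(notes)}


def fake_edit(draft):
    return {**draft, "edited": True}


def fake_publish(article):
    return {"published": True, "article": article}


outcome = run_story_workflow("renewable energy", fake_research, fake_write,
                             fake_edit, fake_publish)
print(outcome["article"]["text"])
```

The `max_rounds` bound is a design choice: without it, a topic that never reaches the confidence threshold would keep the Reporter and Researcher looping indefinitely.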

Domain-specific specialization across multiple areas is another strong use case for A2A. When multiple experts in various fields are needed to accomplish a greater task, with each agent bringing deep domain knowledge and specialized reasoning capabilities for different aspects, A2A provides the coordination framework needed to make those connections. The newsroom exemplifies this perfectly: the Researcher specializes in information gathering, the Reporter in writing, and the Editor in quality control—each with distinct expertise.

The need for autonomous agent behavior makes A2A particularly valuable. Agents that can make independent decisions, exhibit proactive behavior based on changing conditions, and dynamically adapt to workflow requirements thrive in an A2A architecture. Horizontal scaling of specialized functions is another key advantage—rather than having a single master-of-all-trades, multiple specialized agents work in coordination, and multiple instances of the same agent may handle subtasks asynchronously. During breaking news in our newsroom, for instance, multiple Reporter agents might work on different angles of the same story simultaneously.
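The breaking-news scenario maps naturally onto concurrent tasks. This sketch uses `asyncio.gather` to run several Reporter instances at once; the `reporter` coroutine is a hypothetical placeholder for an LLM call or an A2A request to a Reporter agent.

```python
import asyncio


async def reporter(angle: str) -> str:
    """Hypothetical Reporter instance covering one angle of the story."""
    await asyncio.sleep(0)  # stands in for an LLM call or A2A request
    return f"Draft covering the {angle} angle"


async def cover_breaking_news(angles: list[str]) -> list[str]:
    """Run one Reporter per angle concurrently and collect drafts in order."""
    return await asyncio.gather(*(reporter(angle) for angle in angles))


drafts = asyncio.run(cover_breaking_news(["economic", "political", "local"]))
for draft in drafts:
    print(draft)
```

`asyncio.gather` returns results in the order the coroutines were passed in, so the drafts line up with the list of angles even if the underlying calls finish at different times.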

Finally, tasks requiring genuine multi-agent collaboration are ideal for A2A. This includes LLM-as-jury evaluation mechanisms, consensus-building and voting systems, and collaborative problem-solving where multiple perspectives are needed to reach the best outcome.

When to use MCP

Model Context Protocol is ideal when extending a single AI model's capabilities. When a single AI model needs access to multiple tools and data sources, MCP provides the perfect solution with centralized reasoning paired with distributed tools and straightforward tool integration. In our newsroom example, the Researcher Agent (one model) needs access to multiple data sources, including News API, fact-checking services, and academic databases—all accessed through standardized MCP servers.

Standardized tool integration becomes a priority when the wide sharing and reusability of tool integrations matter. MCP shines here with its ecosystem of pre-built MCP servers that significantly reduce development time for common integrations. When simplicity and maintainability are required, MCP's request-response patterns are familiar to developers, easier to understand and debug than distributed systems, and carry lower operational complexity.

Lastly, MCP is often offered by software providers to ease remote communication with their systems. These provider-offered MCP servers significantly reduce onboarding and development time while offering a standardized interface to proprietary systems, making integration much more straightforward than custom API development.

When to use both (A2A ❤️’s MCP)

Many sophisticated systems benefit from combining A2A and MCP, as noted in the A2A documentation on MCP integration. Systems requiring both coordination and standardization are ideal candidates for a hybrid approach. A2A handles agent coordination and workflow orchestration while MCP provides individual agents with tool access. In our newsroom example, agents coordinate via A2A, with the workflow moving from Reporter to Researcher to Editor to Publisher. However, each agent uses MCP servers for its specialized tools, creating a clean architectural separation.

Multiple specialized agents, each using MCP for tool access, represent a common pattern: an agent coordination layer handled by A2A and a tool access layer managed by MCP. This clear separation of concerns makes systems easier to understand and maintain.

The benefits of combining both approaches are substantial. You gain the organizational benefits of multi-agent systems including specialization, autonomy, and parallel processing, while also enjoying the standardization and ecosystem benefits of MCP such as tool integration and resource access. There's a clear separation between agent coordination (A2A) and resource access (MCP), and importantly, A2A isn't needed for smaller tasks like API access alone—MCP handles those efficiently without the overhead of multi-agent orchestration.
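The two layers can be sketched side by side in a few lines. Here `ToolClient` is a hypothetical stand-in for an MCP client session, and calling `handle_task` on another agent stands in for an A2A request; none of these classes come from the A2A or MCP SDKs.

```python
class ToolClient:
    """Stand-in for an MCP client; call_tool mimics a tool invocation round trip."""

    def __init__(self, tools):
        self._tools = tools

    def call_tool(self, name, arguments):
        return self._tools[name](**arguments)


class EditorAgent:
    """Remote agent that reviews drafts handed over by the Reporter."""

    def handle_task(self, task):
        return {"status": "approved", "article": task["draft"]}


class ReporterAgent:
    def __init__(self, tools, editor):
        self.tools = tools    # tool access layer (MCP)
        self.editor = editor  # coordination layer (A2A)

    def handle_task(self, task):
        # MCP: fetch a rule from the style guide server.
        rule = self.tools.call_tool("get_style_rule", {"section": "headlines"})
        draft = {"headline": task["topic"].capitalize(), "style_rule": rule}
        # A2A: delegate the review to the Editor agent.
        return self.editor.handle_task({"draft": draft})


style_guide = ToolClient({"get_style_rule": lambda section: f"{section}: sentence case"})
reporter = ReporterAgent(style_guide, EditorAgent())
result = reporter.handle_task({"topic": "renewable energy adoption"})
print(result)
```

Keeping the two dependencies as separate constructor arguments mirrors the separation of concerns: swapping the style guide server does not touch the coordination logic, and rerouting the review to a different Editor does not touch tool access.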

FAQ: A2A vs. MCP use cases

| Feature | Agent2Agent (A2A) | Model Context Protocol (MCP) | Hybrid (A2A + MCP) |
|---|---|---|---|
| Primary goal | Multi-Agent coordination: Enables a team of specialized agents to work together on complex, multi-step workflows. | Single agent enhancement: Extends the capability of a single LLM/Agent with external tools, resources, and data. | Combined strength: A2A handles the team's workflow, while MCP provides tools to each team member. |
| Newsroom team example | The workflow chain: News Chief → Reporter → Researcher → Editor → Publisher. This is the coordination layer. | Individual agent's tools: The Reporter Agent accessing the style guide server and template server (via MCP). This is the tool access layer. | The full system: Reporter coordinates with the Editor (A2A), and the Reporter uses the Image Library MCP Server to find a graphic for the story. |
| When to use which | When you need genuine collaboration, iteration, and refinement, or specialized expertise split across multiple agents. | When one single agent needs access to multiple tools and data sources or requires standardized integration with proprietary systems. | When you need the organizational benefits of multi-agent systems and the standardization and ecosystem benefits of MCP. |
| Core benefit | Autonomy and scaling: Agents can make independent decisions, and the system allows for horizontal scaling of specialized functions. | Simplicity and standardization: Easier to debug and maintain due to the centralized reasoning, and provides a universal interface for resources. | Clear separation of concerns: Makes the system easier to understand: A2A = teamwork, MCP = tool access. |

Conclusion

This is the first of two pieces covering the implementation of A2A-based agents bolstered with MCP servers to provide support and external access to data and tools. The next piece will explore actual code to demonstrate them working together to emulate the activities in an online newsroom. While both frameworks are extremely capable and flexible in their own right, you will see just how much they complement each other when working in tandem.