Elasticsearch 9.3 brings with it several important improvements to vector data, including a new element_type: bfloat16. This has the potential to halve your vector data storage, with minimal reductions in recall and runtime performance for most use cases.

Storage formats in dense_vector fields

Prior to 9.3, dense_vector fields supported vectors of single bits, 1-byte integers, and 4-byte floats. We store the original vectors in addition to any quantized representation and/or hierarchical navigable small world (HNSW) graph used for indexing, and these original vectors make up the vast majority of the disk space a vector index requires. If your vectors are floating point, the only option Elasticsearch offered before 9.3 was to store 4 bytes per vector value: that's 4kB for a single 1024-dimensional vector.
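As a back-of-the-envelope check on those numbers, here's a small Python sketch of the raw storage needed for one original vector with each pre-9.3 element type. It deliberately ignores quantized copies, the HNSW graph, and any per-document overhead, and the helper name `raw_vector_bytes` is hypothetical.

```python
def raw_vector_bytes(dims: int, element_type: str) -> int:
    """Bytes for one original (raw) vector, ignoring quantization and HNSW overhead."""
    if element_type == "bit":
        # 8 dimensions are packed into each byte (assumed: dims is a multiple of 8)
        if dims % 8:
            raise ValueError("bit vectors need dims to be a multiple of 8")
        return dims // 8
    if element_type == "byte":
        return dims  # one 1-byte integer per dimension
    if element_type == "float":
        return dims * 4  # one 4-byte float32 per dimension
    raise ValueError(f"unknown element_type: {element_type}")


print(raw_vector_bytes(1024, "float"))  # 4096
```

For a million 1024-dimensional float vectors, that is 4,096,000,000 bytes (about 4.1 GB) of raw vector data before any index structures.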

There are other floating-point sizes available, of course: IEEE-754 defines floating-point formats of several widths, including the 4-byte float32 and 8-byte float64 used by Java's float and double types. It also defines a float16 format, which uses only 2 bytes per value. However, float16 has a maximum value of only 65,504, compared to about 3.4 × 10^38 for float32. And because the two formats have different exponent widths (5 bits versus 8), converting between them means re-biasing the exponent and handling overflow and subnormal values, which takes several arithmetic operations rather than just dropping bits.
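The range gap is easy to see with Python's standard `struct` module, whose `'e'` format is IEEE-754 half precision (float16) and whose `'f'` format is float32. This is only an illustration; `fits_in` is a hypothetical helper, not anything from Elasticsearch.

```python
import struct


def fits_in(fmt: str, x: float) -> bool:
    """True if x can be packed into the given struct float format without overflowing."""
    try:
        struct.pack(fmt, x)
        return True
    except OverflowError:
        return False


print(fits_in("<e", 65504.0))  # True: the largest finite float16
print(fits_in("<e", 70000.0))  # False: already beyond float16's range
print(fits_in("<f", 3.4e38))   # True: float32 reaches about 3.4 x 10^38
```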

As an alternative, many machine learning (ML) applications now use bfloat16, a modification of IEEE-754 float32 that uses only 2 bytes. It does this by discarding the lowest 2 bytes (16 bits) of the value, all of which belong to the fractional part, leaving the sign and the 8-bit exponent unchanged. This reduces the precision of the floating-point value (from 23 stored fraction bits to 7) without any reduction in range. The conversion from float32 to bfloat16 is a simple bitwise truncation of the float32 value, with a bit of jiggling to account for rounding.
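To make the truncate-and-round step concrete, here's a minimal Python sketch of the bit manipulation. It assumes round-to-nearest-even on the 16 discarded bits, which is the common convention for float32 to bfloat16 conversion; Elasticsearch's exact rounding code isn't shown here, and the function names are hypothetical.

```python
import math
import struct


def float32_to_bits(x: float) -> int:
    """Round x to float32 and return its 32-bit IEEE-754 bit pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float32(bits: int) -> float:
    """Interpret a 32-bit pattern as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def float32_to_bfloat16(x: float) -> int:
    """Keep the top 16 bits (sign, 8-bit exponent, 7 fraction bits), rounding to nearest even."""
    bits = float32_to_bits(x)
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        # NaN: keep it a NaN by setting a fraction bit that survives truncation
        return (bits >> 16) | 0x0040
    # Adding 0x7FFF plus the lowest kept bit rounds ties to an even result
    lsb = (bits >> 16) & 1
    return ((bits + 0x7FFF + lsb) >> 16) & 0xFFFF


def bfloat16_to_float32(b: int) -> float:
    """Expand back to float32 by zero-filling the 16 low-order bits."""
    return bits_to_float32(b << 16)


pi_bf16 = float32_to_bfloat16(math.pi)
print(hex(pi_bf16), bfloat16_to_float32(pi_bf16))  # 0x4049 3.140625
```

The exponent bits pass through untouched, which is why a value like 3.0 × 10^38 stays finite after conversion, whereas it would overflow float16.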

bfloat16 in Elasticsearch 9.3

Elasticsearch 9.3 now supports storing vector elements as bfloat16. In memory, Elasticsearch still processes every vector value as a 4-byte float32, as Java does not have built-in support for bfloat16. When it writes vector data to disk, it simply truncates and rounds each float32 value to a 2-byte bfloat16, and when it reads a value back into memory, it expands each bfloat16 to a float32 by zero-filling the 16 low-order bits.
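Here's a rough Python model of that write and read path for a whole vector, just to show where the 2-bytes-per-value saving comes from. The `encode_bfloat16` and `decode_bfloat16` names, the little-endian byte layout, and the round-to-nearest-even rule are all assumptions for illustration; this is not Elasticsearch's on-disk format.

```python
import struct


def encode_bfloat16(vector: list[float]) -> bytes:
    """Hypothetical write path: float32 values -> 2 bytes each (little-endian)."""
    n = len(vector)
    bits = struct.unpack(f"<{n}I", struct.pack(f"<{n}f", *vector))
    # Round to nearest even on the 16 bits being dropped, then keep the top half.
    # (NaN handling omitted for brevity.)
    halves = [((b + 0x7FFF + ((b >> 16) & 1)) >> 16) & 0xFFFF for b in bits]
    return struct.pack(f"<{n}H", *halves)


def decode_bfloat16(data: bytes) -> list[float]:
    """Hypothetical read path: 2-byte values -> float32 by zero-filling the low 16 bits."""
    n = len(data) // 2
    halves = struct.unpack(f"<{n}H", data)
    return list(struct.unpack(f"<{n}f", struct.pack(f"<{n}I", *(h << 16 for h in halves))))


vector = [0.1] * 1024  # hypothetical 1024-dimensional vector
print(len(struct.pack("<1024f", *vector)), len(encode_bfloat16(vector)))  # 4096 2048
```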

This effectively halves your vector index sizes, as it uses 2 bytes per value rather than 4 bytes. There may be a small performance cost during reading and writing data as Elasticsearch performs the necessary conversions, but this is often counterbalanced by a significant reduction in the I/O required, as the OS now has to read half as much data. And, for most datasets, there is a minimal effect on search recall.
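To get a feel for why recall usually holds up, here's a rough sketch that compares brute-force top-k results on full-precision vectors against the same vectors rounded to bfloat16 precision. Everything in it is an assumption for illustration: random Gaussian data rather than a real embedding dataset, exact dot-product search rather than HNSW, a query kept at full precision, and round-to-nearest-even conversion. It does not reproduce any Elasticsearch benchmark, and the recall it prints depends on the data.

```python
import random
import struct


def to_bfloat16_precision(x: float) -> float:
    """Round a value to bfloat16 precision (nearest even) and return it as a float."""
    b = struct.unpack("<I", struct.pack("<f", x))[0]
    b = ((b + 0x7FFF + ((b >> 16) & 1)) >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", b & 0xFFFFFFFF))[0]


def top_k(query, docs, k):
    """Brute-force top-k by dot product; returns document indices."""
    scores = [sum(q * d for q, d in zip(query, doc)) for doc in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)[:k]


random.seed(42)
dims, n_docs, k = 64, 500, 10
docs = [[random.gauss(0, 1) for _ in range(dims)] for _ in range(n_docs)]
query = [random.gauss(0, 1) for _ in range(dims)]

exact = set(top_k(query, docs, k))
approx_docs = [[to_bfloat16_precision(v) for v in doc] for doc in docs]
approx = set(top_k(query, approx_docs, k))
recall = len(exact & approx) / k
print(f"recall@{k} with bfloat16-rounded document vectors: {recall:.2f}")
```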

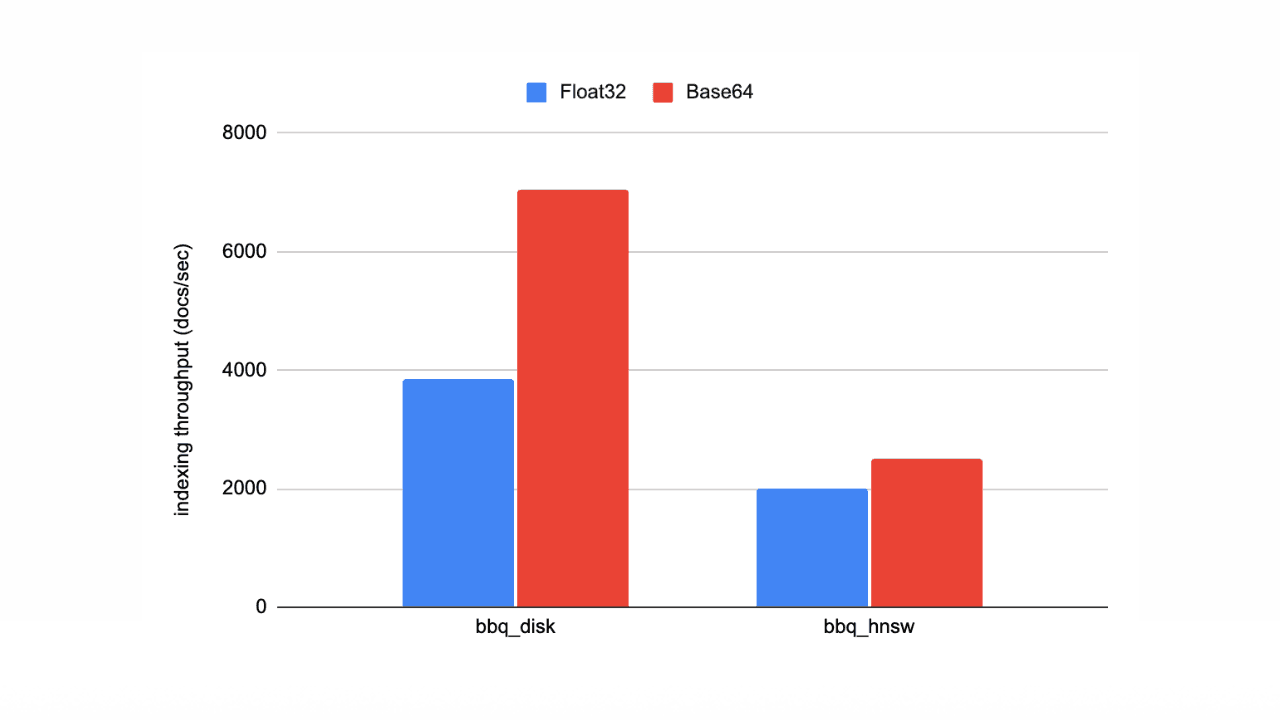

As an example, this is the difference in sizes for bfloat16 on our dense_vector dataset:

So, if your input vectors are already at bfloat16 precision, then happy days! Elasticsearch accepts raw bfloat16 vectors as float values, and as Base64-encoded vectors. The vectors are persisted to disk with the same precision as your original source data, immediately halving your data storage requirements.
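If you're not sure whether your vectors are already at bfloat16 precision, one way to check is to look at the low 16 bits of each value as a float32: if they are all zero, bfloat16 stores the value losslessly. A minimal sketch, with hypothetical helper names:

```python
import math
import struct


def is_bfloat16_exact(x: float) -> bool:
    """True if x, as float32, has all-zero low 16 bits, so bfloat16 keeps it exactly."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return (bits & 0xFFFF) == 0


def vector_is_bfloat16_exact(vector) -> bool:
    """True if every component survives the float32 -> bfloat16 -> float32 round trip."""
    return all(is_bfloat16_exact(v) for v in vector)


print(is_bfloat16_exact(3.140625), is_bfloat16_exact(math.pi))  # True False
```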

If your input vectors are at 4-byte precision, then you can also use bfloat16 format to halve your index data sizes. Elasticsearch will truncate and round each value to 2-byte precision, throwing away the least significant bits of the fraction. This means that the vector values you get back from Elasticsearch won’t be exactly the same as what you originally indexed, so don’t use bfloat16 if you need to maintain the full 4-byte precision of float32.
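One way to put a number on "won't be exactly the same": bfloat16 keeps 8 significant bits (7 stored fraction bits plus the implicit leading 1), versus 24 for float32. Assuming round-to-nearest conversion and values in the normal range (not subnormal and not close to overflow), each stored component satisfies the standard relative-error bound:

```latex
\mathrm{bf16}(x) = x\,(1 + \delta), \qquad |\delta| \le 2^{-8} \approx 3.9 \times 10^{-3}
\qquad \text{(versus } |\delta| \le 2^{-24} \approx 6.0 \times 10^{-8} \text{ for float32)}
```

For example, π is stored as 3.140625, a relative error of about 3.1 × 10^-4, comfortably inside the bound but far larger than float32's rounding error.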

Starting in Elasticsearch 9.3, and on Elasticsearch Serverless, you can specify element_type: bfloat16 with all dense_vector index types on any newly created indices. If you wish to use bfloat16 with existing indices, you can reindex into an index with element_type: bfloat16 and Elasticsearch will automatically convert your existing float vectors to bfloat16.
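As a sketch of what that looks like, here are the two request bodies built as Python dictionaries: a mapping for a new index with `element_type: bfloat16`, and a `_reindex` request that copies an existing float index into it. The index names `my-float-index` and `my-bfloat16-index`, the field name `embedding`, and `dims: 1024` are hypothetical placeholders, and other dense_vector options such as similarity and index_options are left out for brevity. Send the bodies with whatever client you use (for example as `PUT /my-bfloat16-index` and `POST /_reindex`).

```python
import json

# Hypothetical index names for illustration only.
SOURCE_INDEX = "my-float-index"
DEST_INDEX = "my-bfloat16-index"

# Body for: PUT /my-bfloat16-index
create_index_body = {
    "mappings": {
        "properties": {
            "embedding": {  # hypothetical field name
                "type": "dense_vector",
                "dims": 1024,
                "element_type": "bfloat16",  # 2 bytes per stored value
            }
        }
    }
}

# Body for: POST /_reindex (float vectors are converted to bfloat16 as they are indexed)
reindex_body = {
    "source": {"index": SOURCE_INDEX},
    "dest": {"index": DEST_INDEX},
}

print(json.dumps(create_index_body, indent=2))
print(json.dumps(reindex_body, indent=2))
```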