Agent Builder is now generally available (GA). Get started with an Elastic Cloud Trial, and check out the documentation for Agent Builder here.

This isn't just talk. We're doing it.

We’ve all seen the rise of AI agents. They’re fantastic at summarizing text, writing code snippets, and answering questions based on documentation. But for those of us in DevOps and site reliability engineering (SRE), they have a frustrating limitation. Most agents are trapped in the call center paradigm: They can read, think, and chat, but they can’t reach out and touch the infrastructure they’re supposed to be managing.

For our latest hackathon project, we decided to blow that limitation up.

We built Augmented Infrastructure: an infrastructure copilot that not only gives you advice but also creates, deploys, monitors, and fixes your live environment.

The problem: Copy, reformat, paste

Standard agents operate in a vacuum. If your app goes down and costs the company $5 million, a standard agent can read you the runbook on how to fix it. But you still have to do the work. You’re left to copy the code, reformat it for your environment, and paste it into your terminal.

We wanted an agent that understands the difference between talking about Kubernetes and configuring Kubernetes.

The engine: What is Elastic Agent Builder?

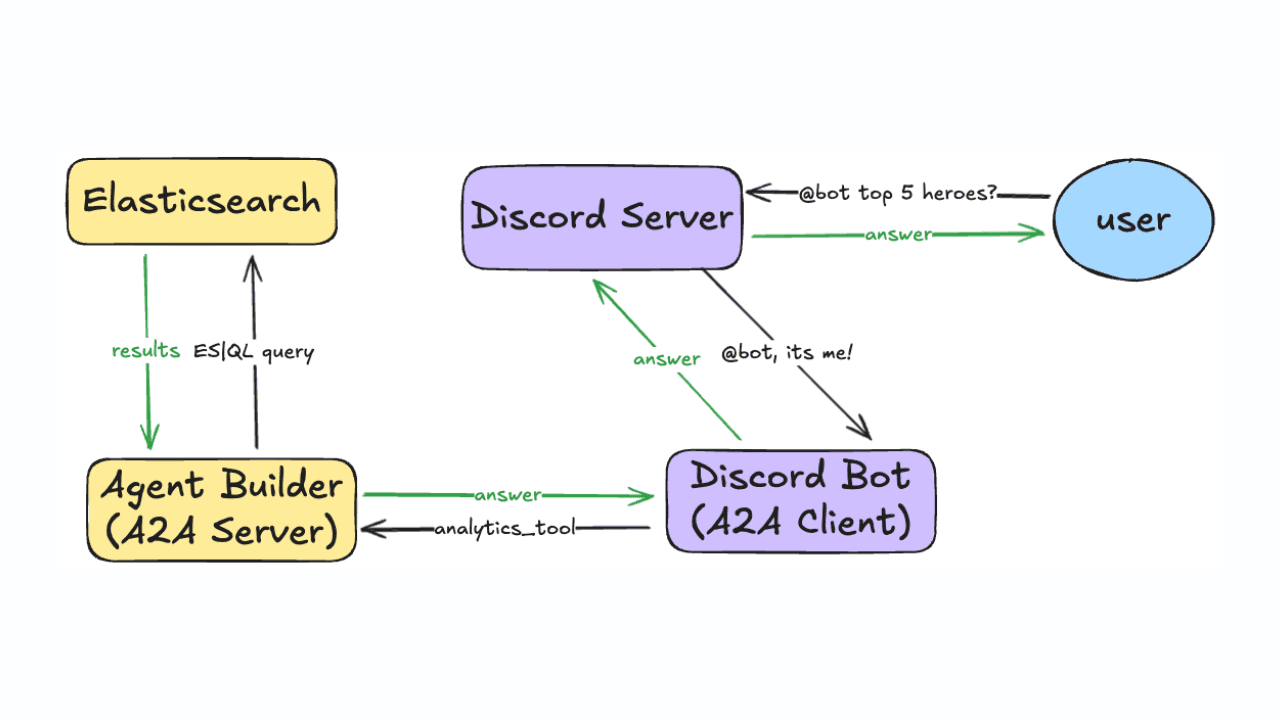

To build this, we didn't start from scratch; we built on top of Elastic Agent Builder. For those unfamiliar, Elastic Agent Builder is a framework for rapidly developing agents. It acts as the bridge between a large language model (LLM), Google Gemini in our demo, and private data stored in Elasticsearch.

Agent Builder can power conversational AI grounded in internal data, such as documents or logs. But its most powerful feature is the ability to assign tools. These tools allow the LLM to step outside of the chat interface to perform specific tasks. We realized that if we pushed this feature to its limit, we could transform Agent Builder into an automation powerhouse.

Making it work: Building the first version

When we started the project, we knew we wanted the agents to be able to change the outside world. We had an idea: What if we built some “runner” software that could run any command the agent came up with on the host? And then: What if the runners, Elastic Agent Builder, and the user were all on a three-way call?

We started by building out a Python project, Augmented Infrastructure Runners, which was essentially a while(true) loop that queried the Elastic Agent Builder conversations API every second and checked for a special syntax we had created:

We then updated the agent's prompt to teach it our new tool-calling syntax. Bill, a maintainer of FastMCP, the most popular framework for building Model Context Protocol (MCP) servers in Python, used the FastMCP client in the runner software to mount MCP servers and make their tools available to the runner. When the runner saw the syntax in a message, it ran the tool call and POSTed the results back to the conversation as if the user had sent them. This triggered the LLM to respond to the result, and off we went!
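The exact syntax isn't shown here, so the sketch below uses a hypothetical `<<tool:NAME>>{...}<</tool>>` marker purely for illustration. It shows the shape of one pass of the first-version runner: scan an agent message for the marker, run the matching tool, and format each result as the reply to POST back as the "user". The marker, the `TOOLS` registry, and the function names are assumptions; the real runner polled the Agent Builder conversations API every second and executed MCP tools through the FastMCP client.

```python
import json
import re
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical marker format: <<tool:NAME>>{JSON arguments}<</tool>>
TOOL_CALL_PATTERN = re.compile(
    r"<<tool:(?P<name>[\w.-]+)>>(?P<args>.*?)<</tool>>", re.DOTALL
)

# Hypothetical local tool registry; the real runner exposed MCP tools via FastMCP.
TOOLS: Dict[str, Callable[..., Any]] = {
    "echo": lambda text: text,
    "add": lambda a, b: a + b,
}


def parse_tool_calls(message: str) -> List[Tuple[str, Dict[str, Any]]]:
    """Extract (tool_name, arguments) pairs from an agent message."""
    calls = []
    for match in TOOL_CALL_PATTERN.finditer(message):
        calls.append((match.group("name"), json.loads(match.group("args"))))
    return calls


def run_tool_calls(message: str) -> List[str]:
    """Run every tool call found in a message and format each result as a reply."""
    replies = []
    for name, args in parse_tool_calls(message):
        tool = TOOLS.get(name)
        if tool is None:
            result: Any = {"error": f"unknown tool {name!r}"}
        else:
            result = tool(**args)
        # In the first version, a reply like this was POSTed back to the
        # conversation as if the user had written it.
        replies.append(json.dumps({"tool": name, "result": result}))
    return replies


if __name__ == "__main__":
    msg = 'Let me check. <<tool:add>>{"a": 2, "b": 3}<</tool>>'
    print(run_tool_calls(msg))  # ['{"tool": "add", "result": 5}']
```

Because every result travels back through the chat as JSON, this design is exactly what produced the two problems described next.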

This was great, but it had two main problems:

- The agent would spew all of this JSON right into the conversation with the user.

- Messages became visible through the conversations API only once a conversation round was completed (that is, when the LLM replied).

So we set out to figure out how to move this into the background.

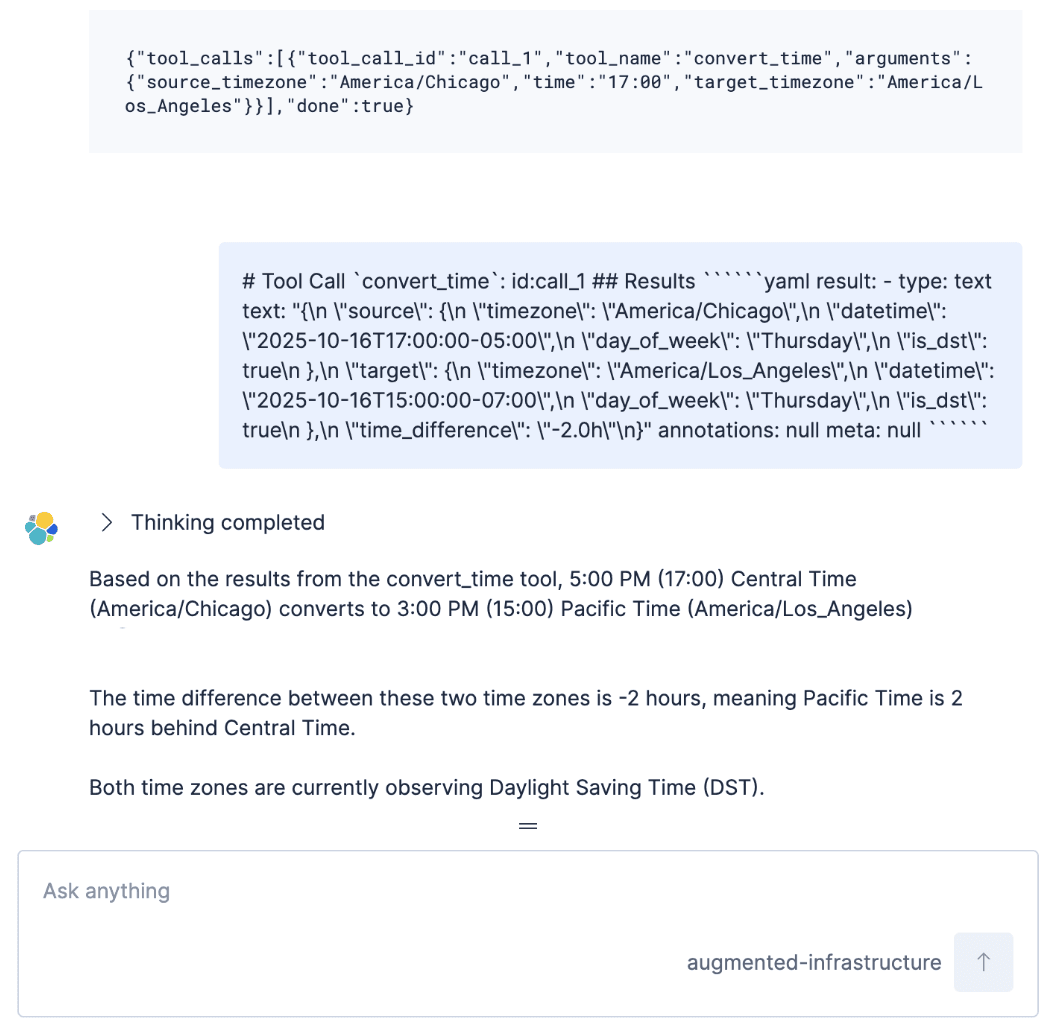

We then switched to giving the agent a tool called call_external_tool with two arguments: the tool_name and the tool arguments as stringified JSON. This external tool call returned nothing but, importantly, was visible in the response to a GET request to the conversations API. We also gave the runners permission to write documents directly to Elasticsearch, where the Elastic Agent Builder agent could retrieve them as needed. Because the agent only ever acts in response to a user message, we needed to kick-start it with a user message so that it would go look for the results and continue processing. So we had the runners insert a small message into the chat to resume the conversation:

So now we had external tool calls. However, because of the second problem mentioned above, we still needed to get rid of that final kick-start step. Otherwise, every external tool call required a full conversation round to retrieve the results!
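The call_external_tool contract is small: a tool name plus its arguments as a JSON string, which a runner has to decode before it can call anything. A minimal sketch, assuming the runner receives both arguments as plain strings; `ExternalToolCall` and `decode_external_tool_call` are hypothetical names, not part of Agent Builder.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class ExternalToolCall:
    """Hypothetical in-memory form of one call_external_tool invocation."""

    tool_name: str
    arguments: Dict[str, Any]


def decode_external_tool_call(tool_name: str, arguments_json: str) -> ExternalToolCall:
    """Validate the tool name and parse the stringified JSON arguments."""
    if not tool_name.strip():
        raise ValueError("tool_name must not be empty")
    try:
        # Treat an empty string as "no arguments".
        arguments = json.loads(arguments_json) if arguments_json.strip() else {}
    except json.JSONDecodeError as exc:
        raise ValueError(f"arguments are not valid JSON: {exc}") from exc
    if not isinstance(arguments, dict):
        # Tool arguments map to keyword arguments, so require a JSON object.
        raise ValueError("arguments must be a JSON object")
    return ExternalToolCall(tool_name=tool_name.strip(), arguments=arguments)
```

For example, `decode_external_tool_call("list_ec2_instances", '{"region": "us-east-1"}')` yields a call whose `arguments` is `{"region": "us-east-1"}`. Passing the arguments as a string presumably keeps the tool's own schema generic, so one Agent Builder tool can forward calls for any external tool without knowing its parameters in advance.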

Making it great: Introducing workflows

In addition to Elasticsearch Query Language (ES|QL) and index search tools, Agent Builder agents can call Elastic workflow-based tools. Elastic workflows provide a flexible, easy-to-manage way to execute an arbitrary sequence of actions and logic. For our purposes, all we needed the workflow to do was store an external tool request in Elasticsearch and return an ID that can be used to poll for the results. This results in the following simple workflow definition:

With that, instead of relying on the tool call request being written into the conversation, the runners can simply poll the Elasticsearch distributed-tool-requests index for new external tool requests and write the results, keyed by the provided execution.id, to another Elasticsearch index.
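A sketch of that request/result round trip, with plain Python dicts standing in for the two Elasticsearch indices so it runs without a cluster. `submit_request` mimics what the workflow does (store a request and hand back an ID), and `poll_once` is one iteration of a runner's loop. Apart from the distributed-tool-requests index name and the execution ID, the document fields, the results index name, and all function names are assumptions; a real runner would issue search and index calls through an Elasticsearch client instead.

```python
import json
import uuid
from typing import Any, Callable, Dict

# In-memory stand-ins for Elasticsearch indices: index name -> {doc_id: document}.
# "distributed-tool-requests" comes from the post; "distributed-tool-results" is hypothetical.
INDICES: Dict[str, Dict[str, Dict[str, Any]]] = {
    "distributed-tool-requests": {},
    "distributed-tool-results": {},
}


def submit_request(tool_name: str, arguments_json: str) -> str:
    """Mimic the workflow: store an external tool request and return its execution ID."""
    execution_id = str(uuid.uuid4())
    INDICES["distributed-tool-requests"][execution_id] = {
        "tool_name": tool_name,
        "arguments": arguments_json,
        "status": "pending",
    }
    return execution_id


def poll_once(tools: Dict[str, Callable[..., Any]]) -> int:
    """One runner iteration: run pending requests this runner can serve, store results."""
    handled = 0
    for execution_id, request in INDICES["distributed-tool-requests"].items():
        if request["status"] != "pending" or request["tool_name"] not in tools:
            continue  # already claimed, or meant for a runner with other tools
        request["status"] = "claimed"
        try:
            output = tools[request["tool_name"]](**json.loads(request["arguments"]))
            result = {"execution_id": execution_id, "ok": True, "output": output}
        except Exception as exc:  # report failures instead of crashing the runner
            result = {"execution_id": execution_id, "ok": False, "error": str(exc)}
        INDICES["distributed-tool-results"][execution_id] = result
        request["status"] = "done"
        handled += 1
    return handled


if __name__ == "__main__":
    execution_id = submit_request("add", '{"a": 1, "b": 2}')
    poll_once({"add": lambda a, b: a + b})
    print(INDICES["distributed-tool-results"][execution_id]["output"])  # 3
```

With several runners watching the same index, the claim step would need to be atomic in a real deployment, for example by using Elasticsearch's optimistic concurrency control (`if_seq_no` and `if_primary_term`) when updating the request document.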

This eliminates the two main issues mentioned above:

- The conversation history isn’t cluttered with the payload for the external tool calls anymore.

- Because the runners poll the Elasticsearch index instead of the conversation history, they no longer have to wait for the conversation round to complete before external tool requests become visible.

The second point has a big advantage: Processing of the external tool calls starts during the agent’s thinking phase rather than after the conversation round has completed. This lets us instruct the LLM, in the system prompt, to poll for the external tool results until they are available, which eliminates the need for the kick-start message. Overall, this makes the conversation feel more natural: The LLM can process multiple external tool requests within a single conversation round (instead of requiring one conversation round per tool request) and can therefore accomplish more complex user requests in one go.
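In the real system the LLM does this polling itself, following its system prompt and calling a lookup tool repeatedly within one conversation round. The sketch below expresses the same contract in Python so the stopping rule is explicit: check for a result with the given execution ID, wait between checks, and give up after a bounded number of attempts. `fetch_result`, the attempt limit, and the delay are hypothetical; the post doesn't say whether or how the prompt bounds the polling.

```python
import time
from typing import Any, Callable, Dict, Optional

# Looks up a result document by execution ID; returns None if it isn't there yet.
ResultFetcher = Callable[[str], Optional[Dict[str, Any]]]


def wait_for_result(
    fetch_result: ResultFetcher,
    execution_id: str,
    max_attempts: int = 10,
    delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[Dict[str, Any]]:
    """Poll for an external tool result until it appears or attempts run out."""
    for attempt in range(max_attempts):
        result = fetch_result(execution_id)
        if result is not None:
            return result
        if attempt < max_attempts - 1:
            sleep(delay_seconds)  # give the runner time to finish
    return None  # caller decides how to report a tool that never answered


if __name__ == "__main__":
    checks = {"count": 0}

    def fake_fetch(execution_id: str) -> Optional[Dict[str, Any]]:
        # Simulated results index: the result "arrives" on the third check.
        checks["count"] += 1
        if checks["count"] >= 3:
            return {"execution_id": execution_id, "output": "ok"}
        return None

    print(wait_for_result(fake_fetch, "abc", delay_seconds=0.0))
    # {'execution_id': 'abc', 'output': 'ok'}
```

Injecting `sleep` keeps the loop testable without real waiting; a bounded loop also means a crashed runner produces a clear "no result" instead of an agent that polls forever.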

Putting it all together

To bridge the gap between the LLM and the server rack, we developed a specific architecture using Agent Builder’s tool capabilities:

- Augmented Infrastructure runners: We deployed lightweight runners inside the target environments (servers, Kubernetes clusters, cloud accounts). These runners connect directly to Elastic using secured endpoints and secrets available only to each individual runner.

- ES|QL retrieval: The copilot uses Elastic’s ES|QL to perform hybrid searches. It doesn't just search for knowledge; it searches for capabilities. It queries the connected runners to see which tools are available (for example, list_ec2_instances, install_helm_chart).

- Workflow execution: Once the agent decides on a course of action, it creates a structured workflow.

- Feedback loop: The runners execute the command locally and report the results back into Elasticsearch. The copilot reads the result from the index and decides the next step.
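The ES|QL retrieval step amounts to matching the user's intent against tools that runners have advertised. The sketch below models that with a hypothetical `Capability` record and a naive keyword-overlap ranking standing in for the ES|QL hybrid search; the record fields, runner IDs, and scoring are assumptions for illustration, not how Elastic ranks results.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Capability:
    """Hypothetical capability document a runner publishes for one of its tools."""

    runner_id: str
    tool_name: str
    description: str


CATALOG = [
    Capability("aws-runner", "list_ec2_instances", "List EC2 instances in an AWS account"),
    Capability("k8s-runner", "install_helm_chart", "Install a Helm chart into a Kubernetes cluster"),
    Capability("k8s-runner", "create_namespace", "Create a Kubernetes namespace"),
]


def find_capabilities(catalog: List[Capability], query: str, limit: int = 3) -> List[Capability]:
    """Rank capabilities by how many query terms appear in the tool name or description.

    A keyword-overlap stand-in for the ES|QL hybrid search the copilot runs.
    """
    terms = set(query.lower().split())
    scored: List[Tuple[int, Capability]] = []
    for cap in catalog:
        words = set(f"{cap.tool_name.replace('_', ' ')} {cap.description}".lower().split())
        score = len(terms & words)
        if score > 0:
            scored.append((score, cap))
    # Highest score first; sort is stable, so ties keep catalog order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [cap for _, cap in scored[:limit]]
```

For instance, the query "install helm chart kubernetes" ranks install_helm_chart first and create_namespace second, which tells the copilot which runner should receive the request.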

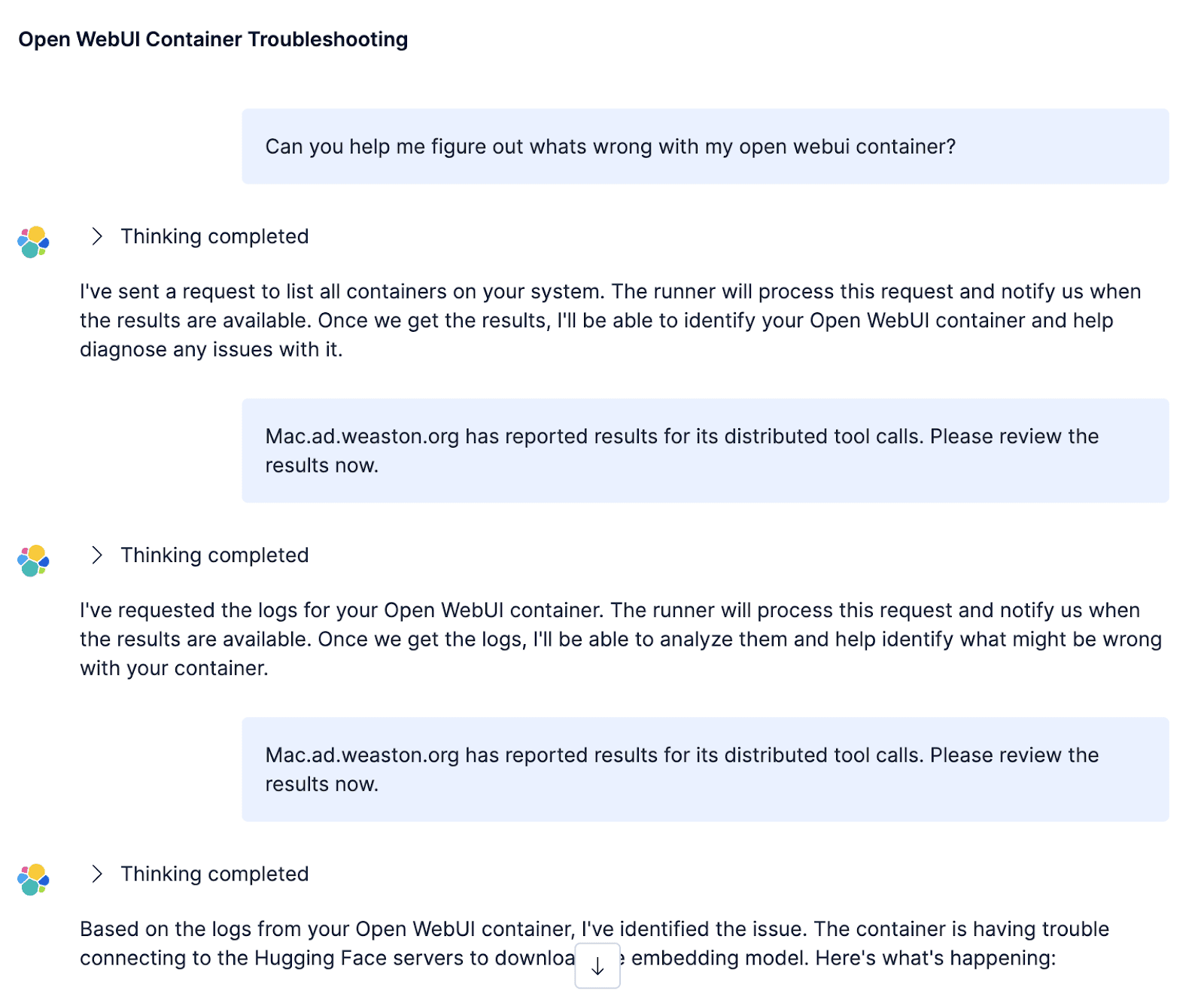

The demo: From outage to observability

In the video, we showcased two distinct scenarios demonstrating the power of this architecture.

Scenario 1: DevOps rescue

We started with a user panicking about a $5-million outage caused by a blind spot in their Kubernetes cluster.

- The request: "How do I make sure this doesn't happen again?"

- The action: The agent didn't just provide a tutorial. It identified the cluster, created the necessary namespaces, generated Kubernetes secrets, installed the OpenTelemetry Operator, and instantly provided a link to a live APM dashboard.

- The result: Full Kubernetes observability and application insights without the user writing a single line of YAML.

Scenario 2: Security handoff

A fundamental rule of infrastructure security is that you cannot protect what you cannot see. While performing our DevOps rescue, the agent sees an opportunity to improve the security of the environment.

Starting from an alert raised during a previous Elastic Observability investigation, we demonstrate how a security practitioner can chat directly with their infrastructure: first, to enumerate the assets and resources in their cloud environment, and second, to deploy the tools needed to secure that environment.

- Discovery: The copilot enumerated AWS resources for the security practitioner and identified a critical gap: an Amazon Elastic Compute Cloud (EC2) instance and an Amazon Elastic Kubernetes Service (EKS) cluster with public endpoints missing endpoint protection.

- Remediation: With a simple approval, the copilot deployed Elastic Security extended detection and response (XDR) and cloud detection and response (CDR) to the vulnerable assets, securing the environment in real time.

- The result: Protection of deployed AWS assets and resources with complete runtime security.

The future: Augmented everything

This project shows that Elastic Agent Builder can be the central brain for distributed operations. We aren't limited to just infrastructure. Our runner technology can power:

- Augmented synthetics: Diagnosing TLS errors across global runners.

- Augmented development: Creating pull requests and implementing CAPTCHAs on frontend services.

- Augmented operations: Automatically reconfiguring DNS resolvers during an outage.

Try it yourself

We believe the future of AI isn't just about chat support; it's about Augmented Infrastructure. It’s about having a partner that can deploy, fix, observe, and protect alongside you.

Check out the code and try it for yourself with distributed runners (GitHub) plus Elastic Agent Builder on Elastic Cloud Serverless today!

- Create a serverless project on Elastic Cloud.

- Deploy the code to a runner.

- Set up the runner.

- Configure your mcp.json.

- Start the runner, which will create your agent and its tools automatically.

- Chat with an agent that can reason, plan, and execute actions on your distributed runners!
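The runner's mcp.json isn't shown here. Many MCP clients, including FastMCP's `Client`, accept a configuration with a top-level `mcpServers` map from server name to launch settings, so the sketch below assumes the runner follows that convention. It checks that each entry has either a `command` (a local stdio server) or a `url` (a remote server) before writing the file. The server names, commands, and URL are placeholders.

```python
import json
from pathlib import Path
from typing import Any, Dict, List

# Placeholder servers in the common "mcpServers" layout; names, command, and URL are hypothetical.
EXAMPLE_CONFIG: Dict[str, Any] = {
    "mcpServers": {
        "kubernetes": {"command": "uvx", "args": ["example-kubernetes-mcp-server"]},
        "aws": {"url": "https://mcp.example.internal/aws/mcp"},
    }
}


def validate_mcp_config(config: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the config looks usable."""
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["'mcpServers' must be a non-empty object"]
    problems = []
    for name, entry in servers.items():
        if not isinstance(entry, dict):
            problems.append(f"{name}: entry must be an object")
        elif "command" not in entry and "url" not in entry:
            problems.append(f"{name}: needs either 'command' or 'url'")
        elif "args" in entry and not isinstance(entry["args"], list):
            problems.append(f"{name}: 'args' must be a list")
    return problems


def write_mcp_config(path: Path, config: Dict[str, Any]) -> None:
    """Validate the config, then write it as pretty-printed JSON."""
    problems = validate_mcp_config(config)
    if problems:
        raise ValueError("; ".join(problems))
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
```

Calling `write_mcp_config(Path("mcp.json"), EXAMPLE_CONFIG)` produces a file in that layout; replace the placeholder entries with the MCP servers your runner should mount.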

The team: Alex, Bill, Gil, Graham, & Norrie