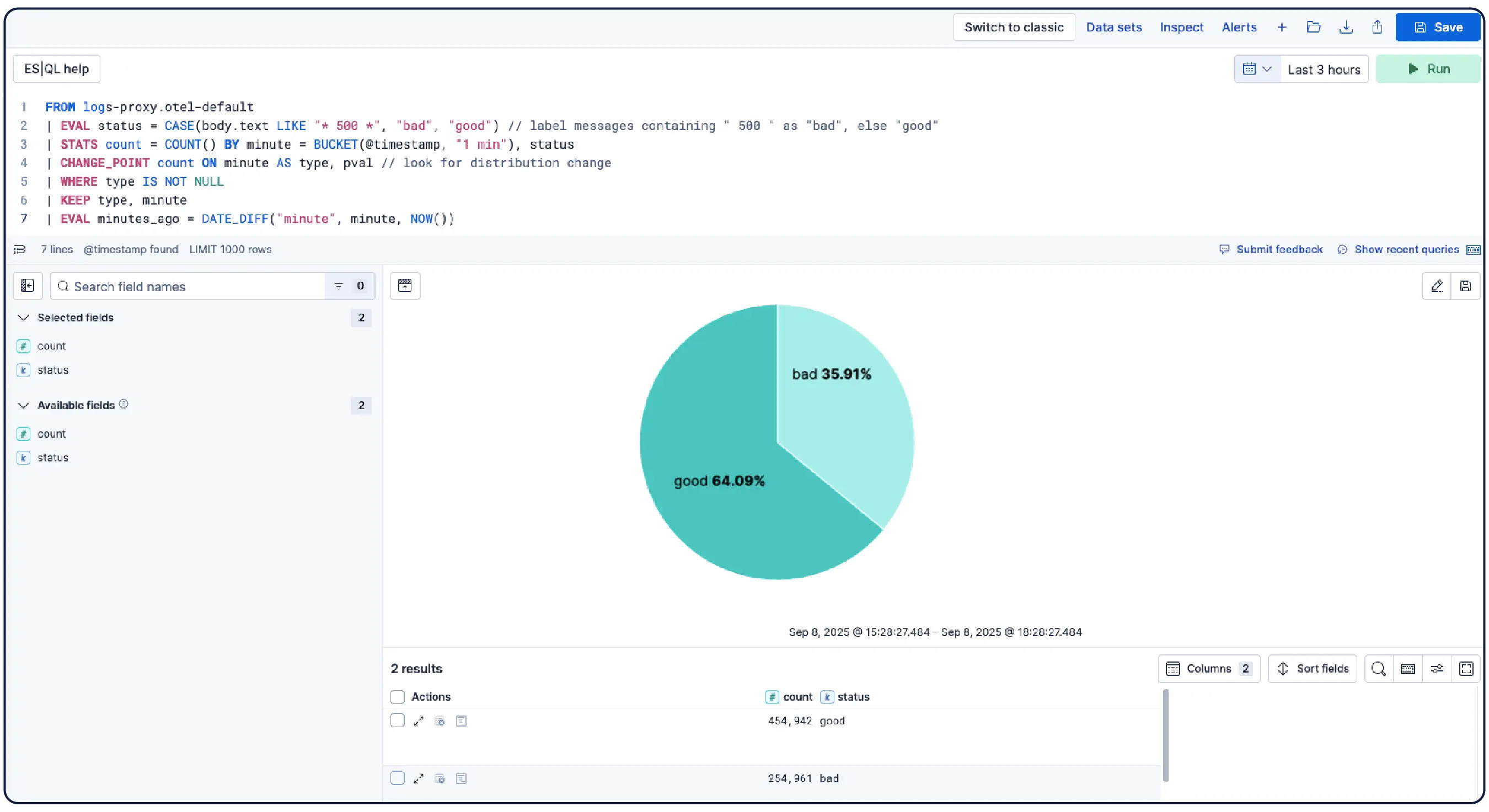

Guided demo

From raw logs to real answers

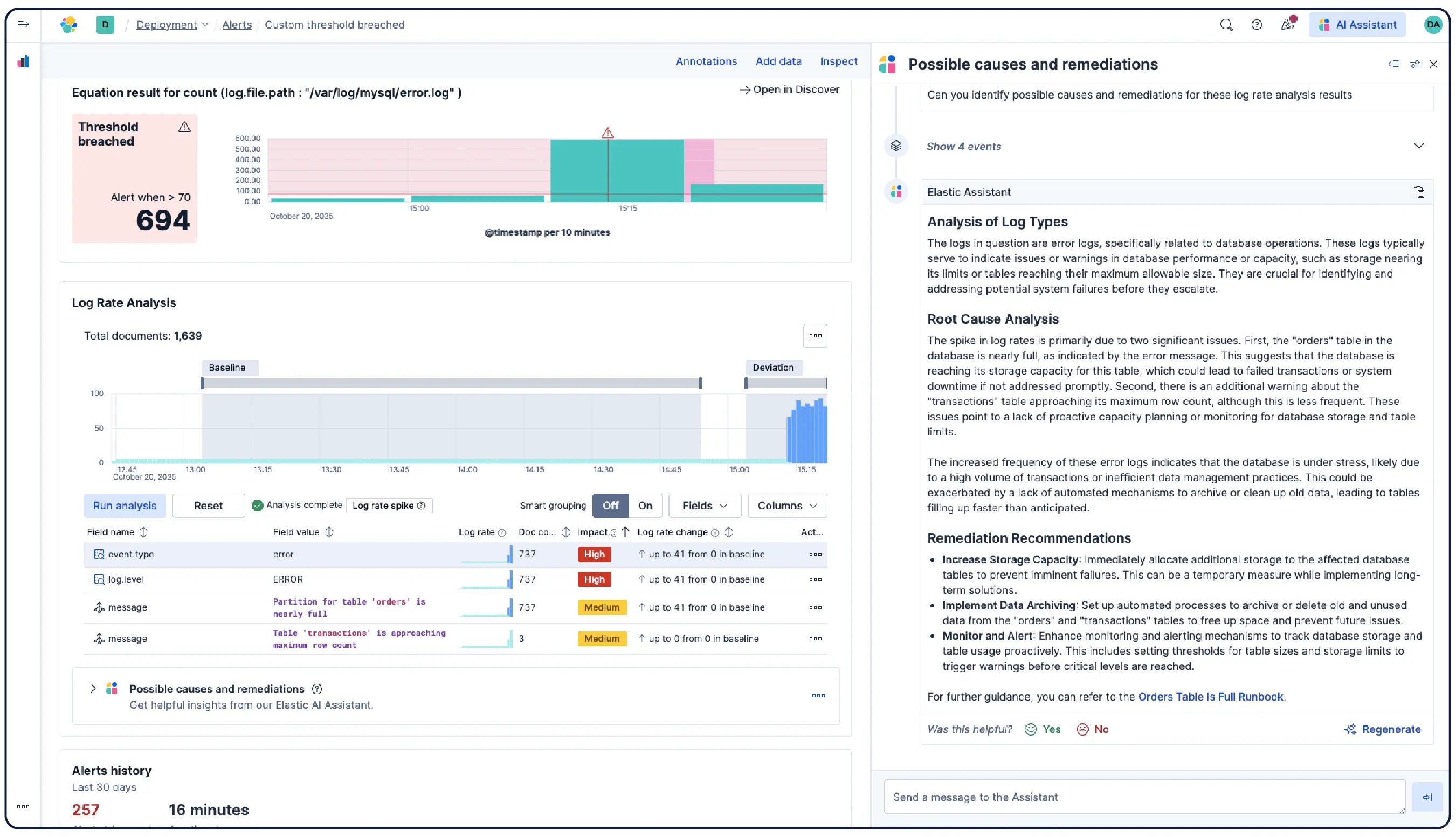

Logs tell you what happened. Elastic helps you understand why it happened.

Performance

Billions of logs, one clear picture

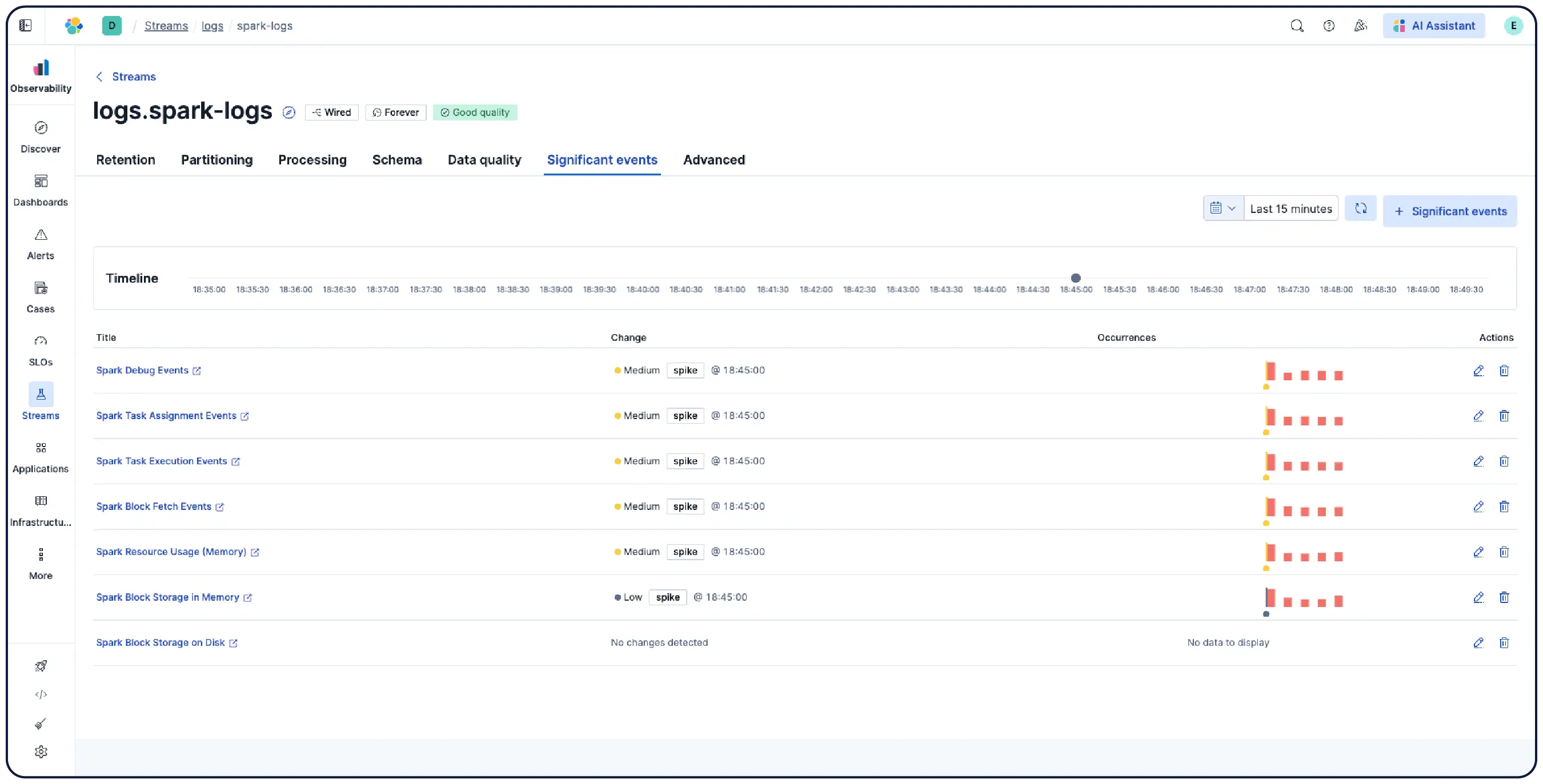

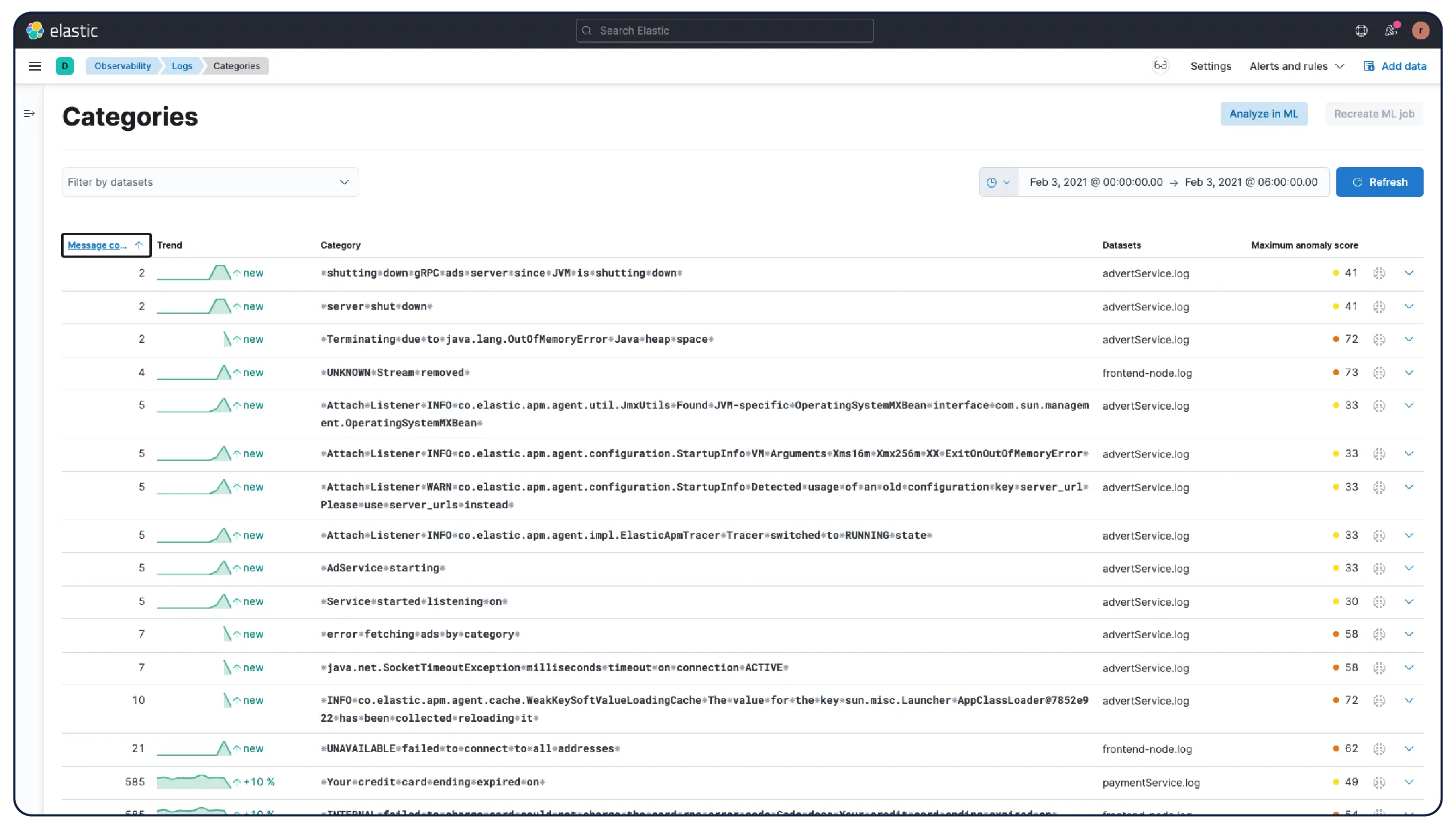

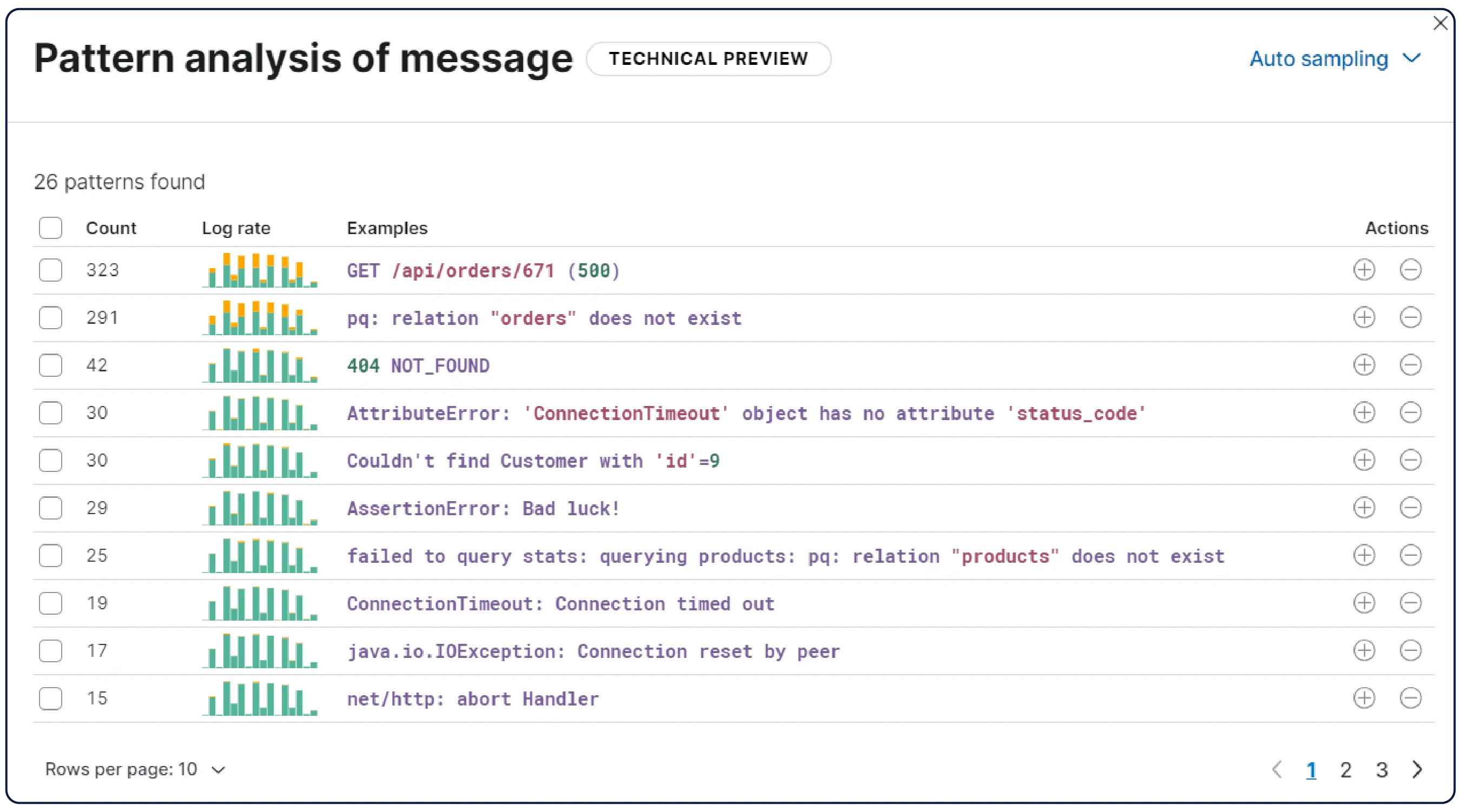

Elastic ingests logs from anywhere, automatically groups them into patterns, highlights anomalies, and pinpoints spikes, so you get clear answers without the overload.

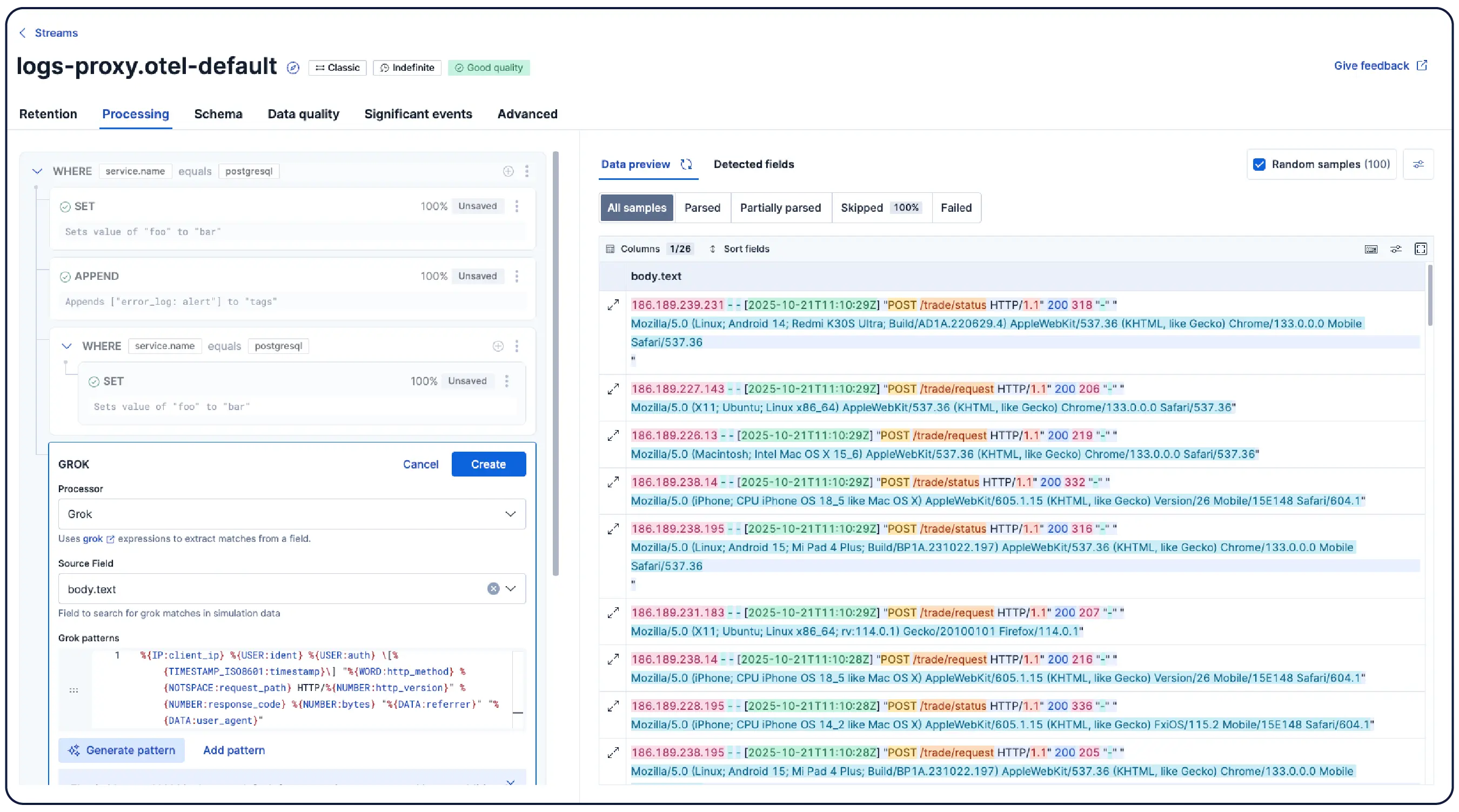

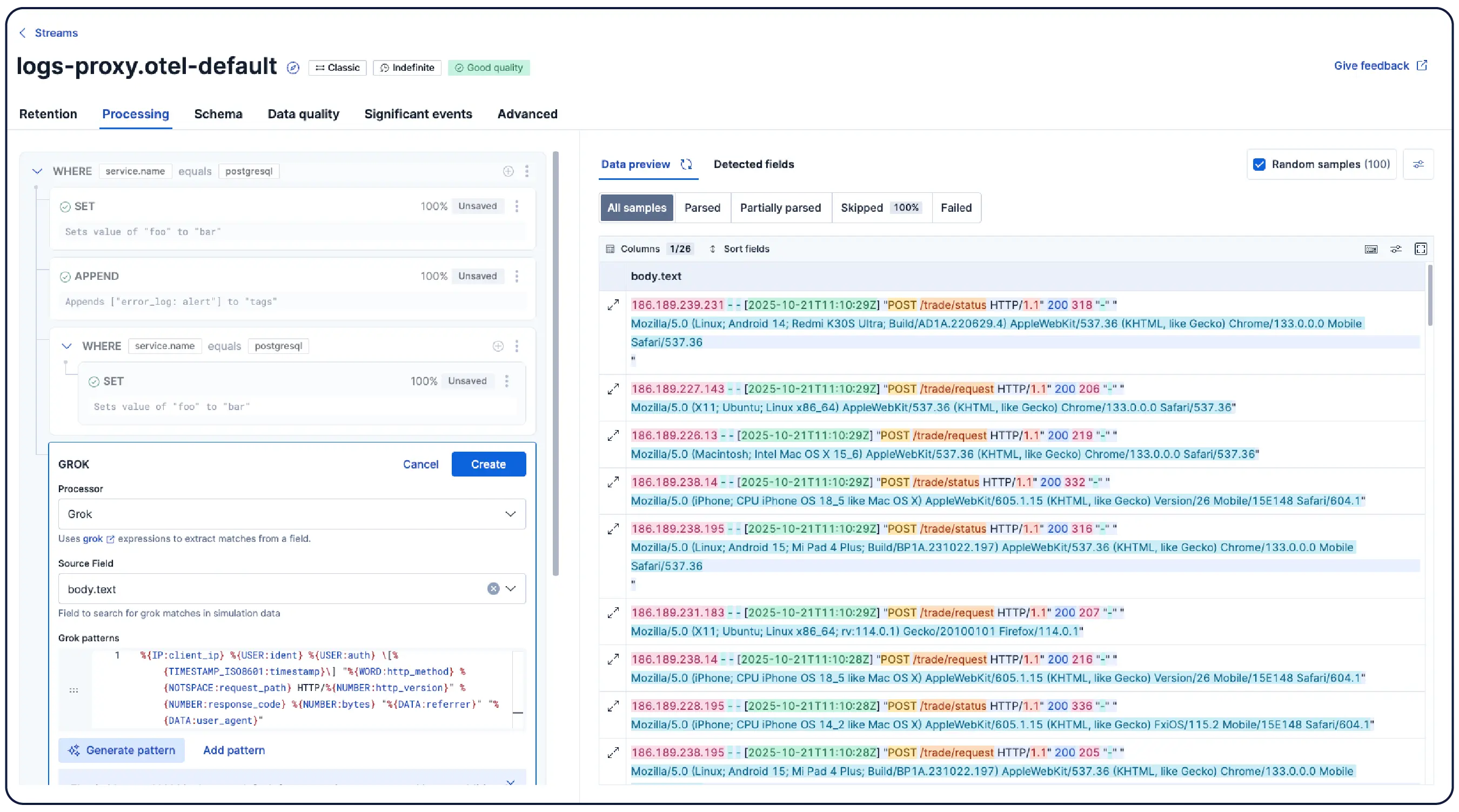

Automatically organize data into logical streams and apply parsing, partitioning, field extraction, and lifecycle policies with minimal manual configuration.

See why so many companies choose Elastic Observability

Turn complex logs into operational insights with log analytics at scale.

Customer spotlight

Comcast uses Elastic to ingest 400 terabytes of data every day, monitoring its services and accelerating root cause analysis to ensure the best possible customer experience.

Customer spotlight

Discover adopted a centralized logging platform with Elastic, cutting storage costs by 50% and improving data search times.

Customer spotlight

Informatica migrated all of its logging workloads, spanning more than 100 applications and more than 300 Kubernetes clusters, to Elastic, reducing costs and shortening MTTR.

Join the chat

Connect with Elastic's global community and take part in open conversations and collaboration.
