Log analytics: search, analyze, and act

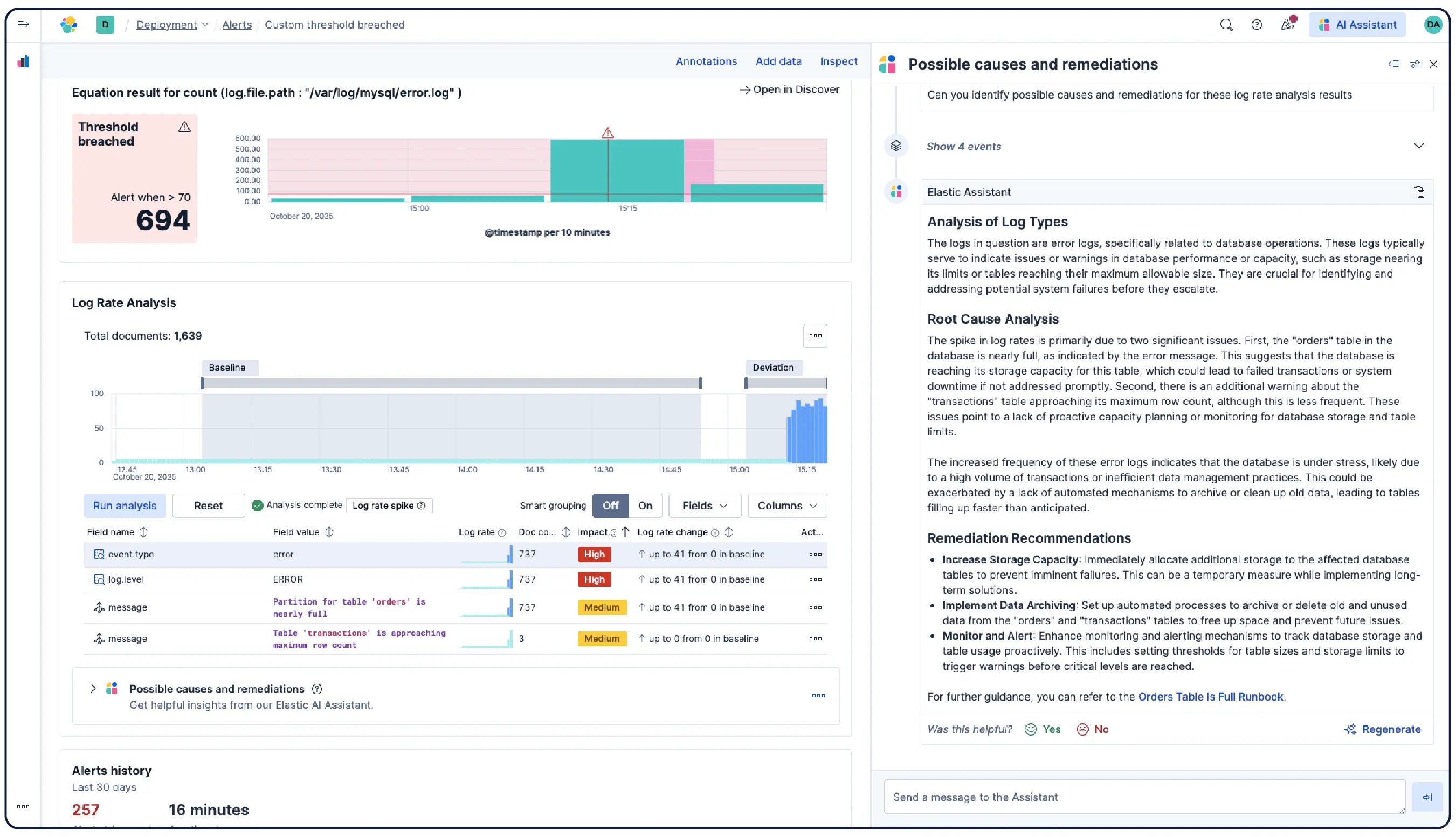

Logs are everywhere, record everything, and are your richest source of context. Elastic uses agentic AI to cut through the noise and turn messy, unstructured logs into operational answers.

Guided demo

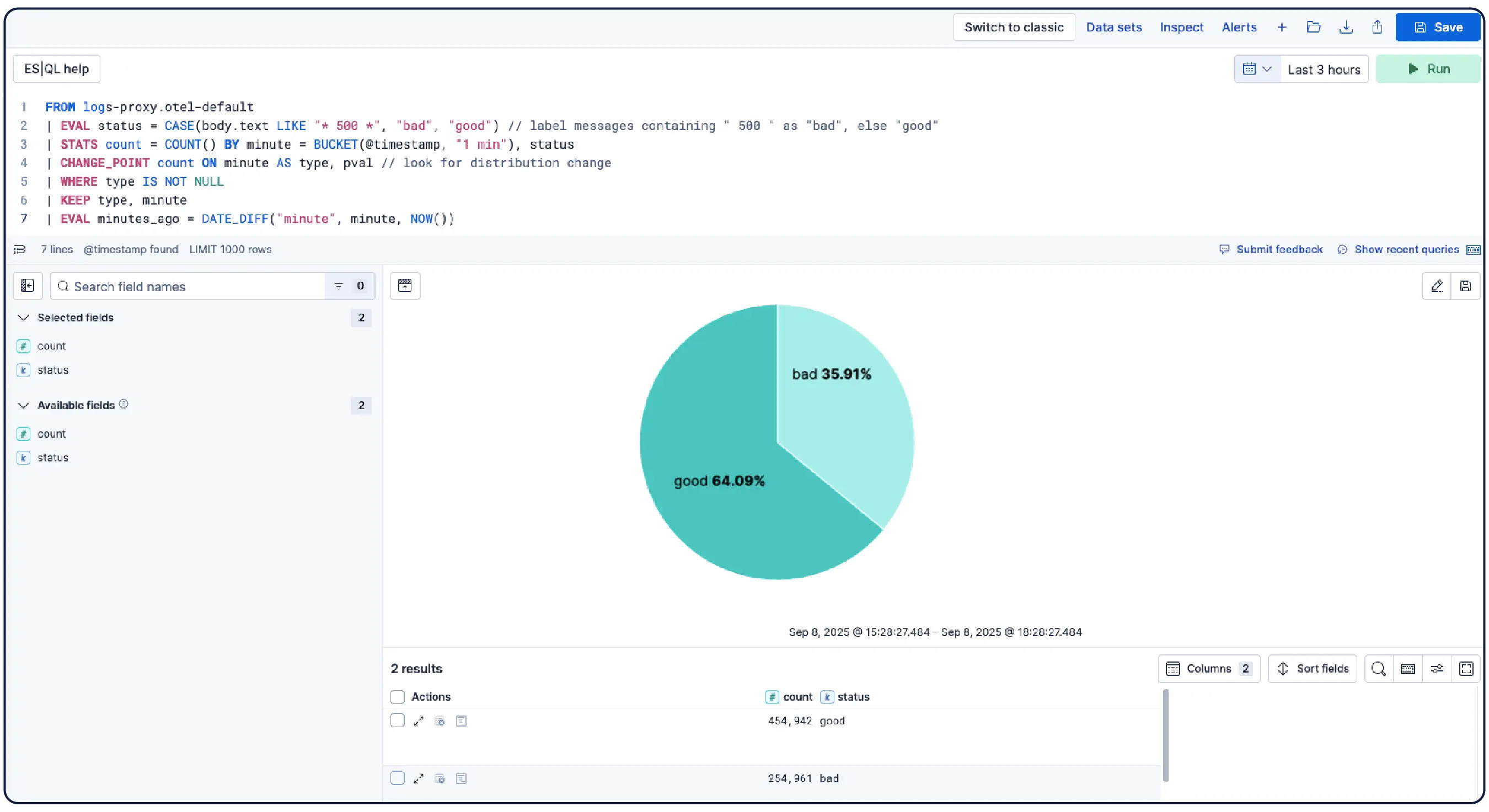

From raw logs to real answers

Logs tell you what happened. Elastic helps you understand why.

Capabilities

Billions of logs, one clear picture

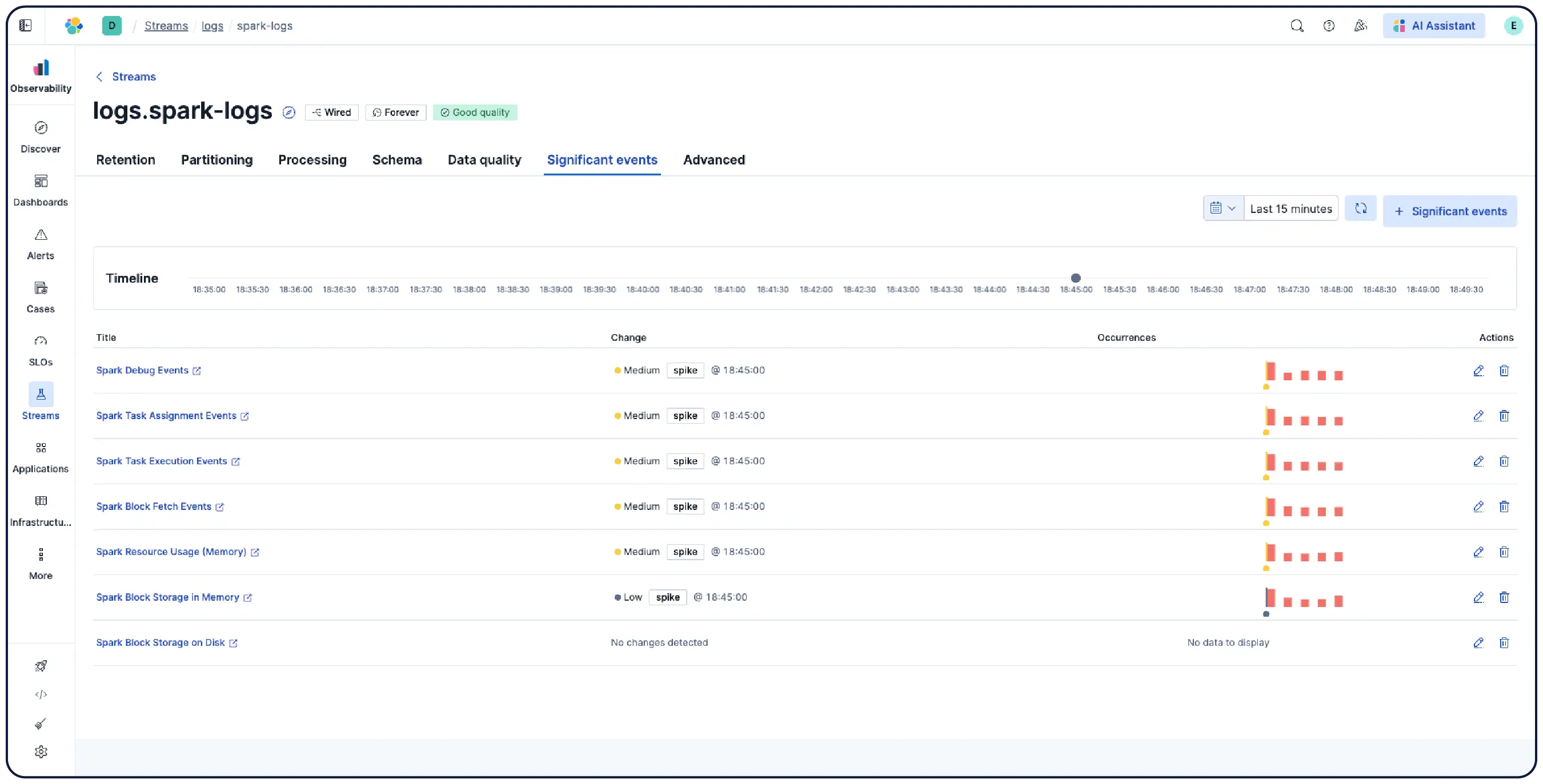

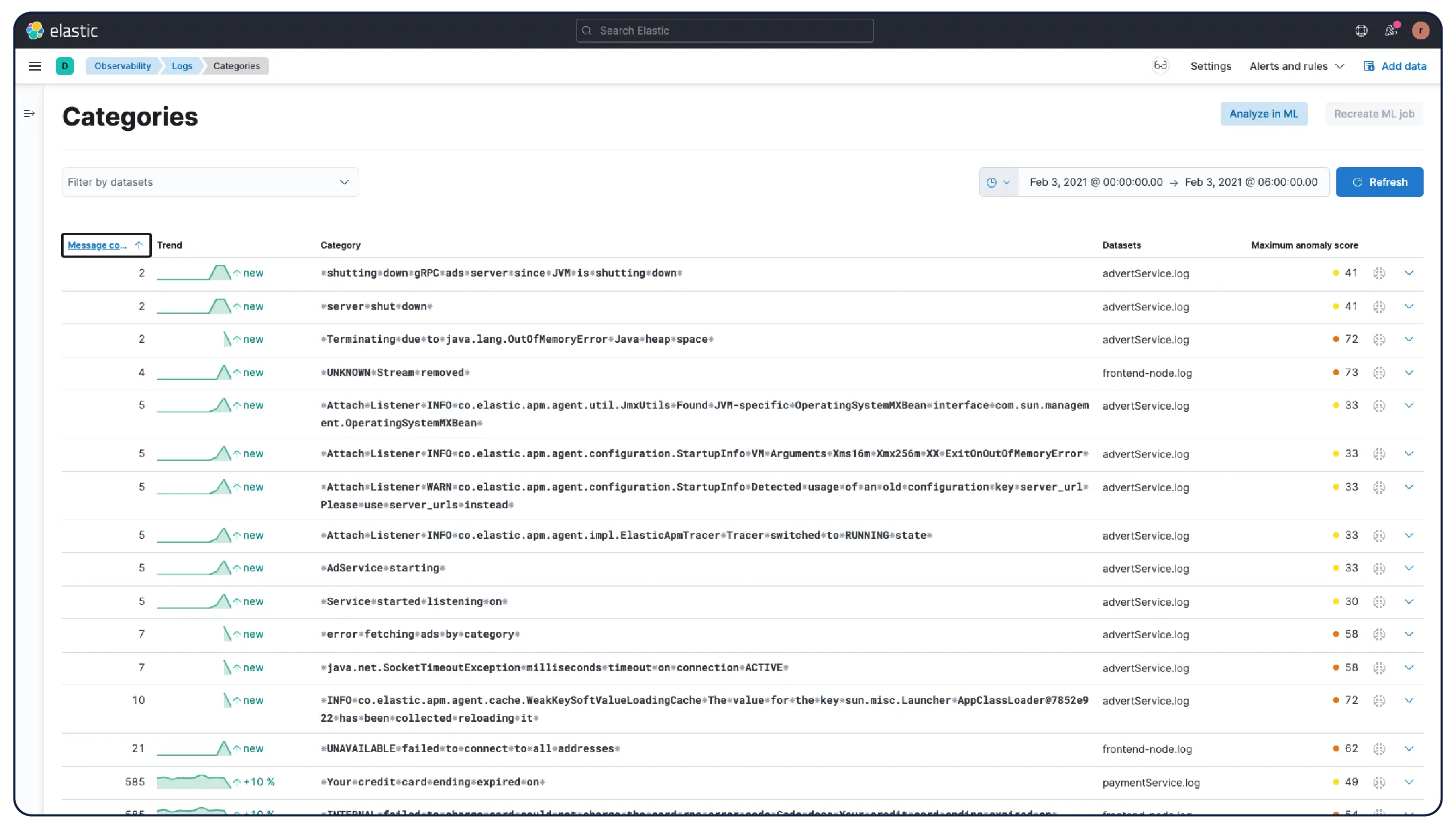

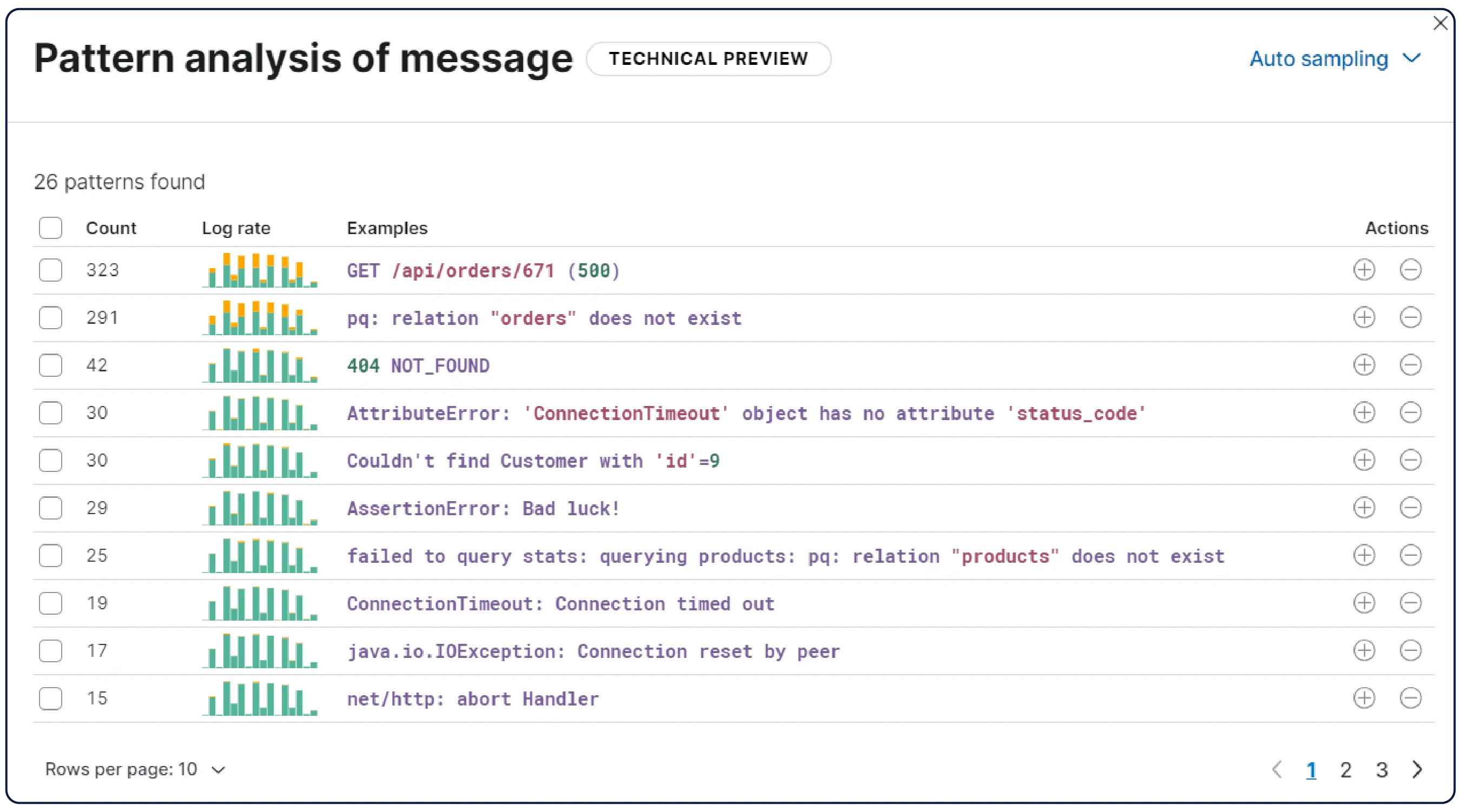

Elastic ingests logs from anywhere, automatically groups them into patterns, and highlights anomalies to pinpoint spikes, so you get answers without drowning in information.

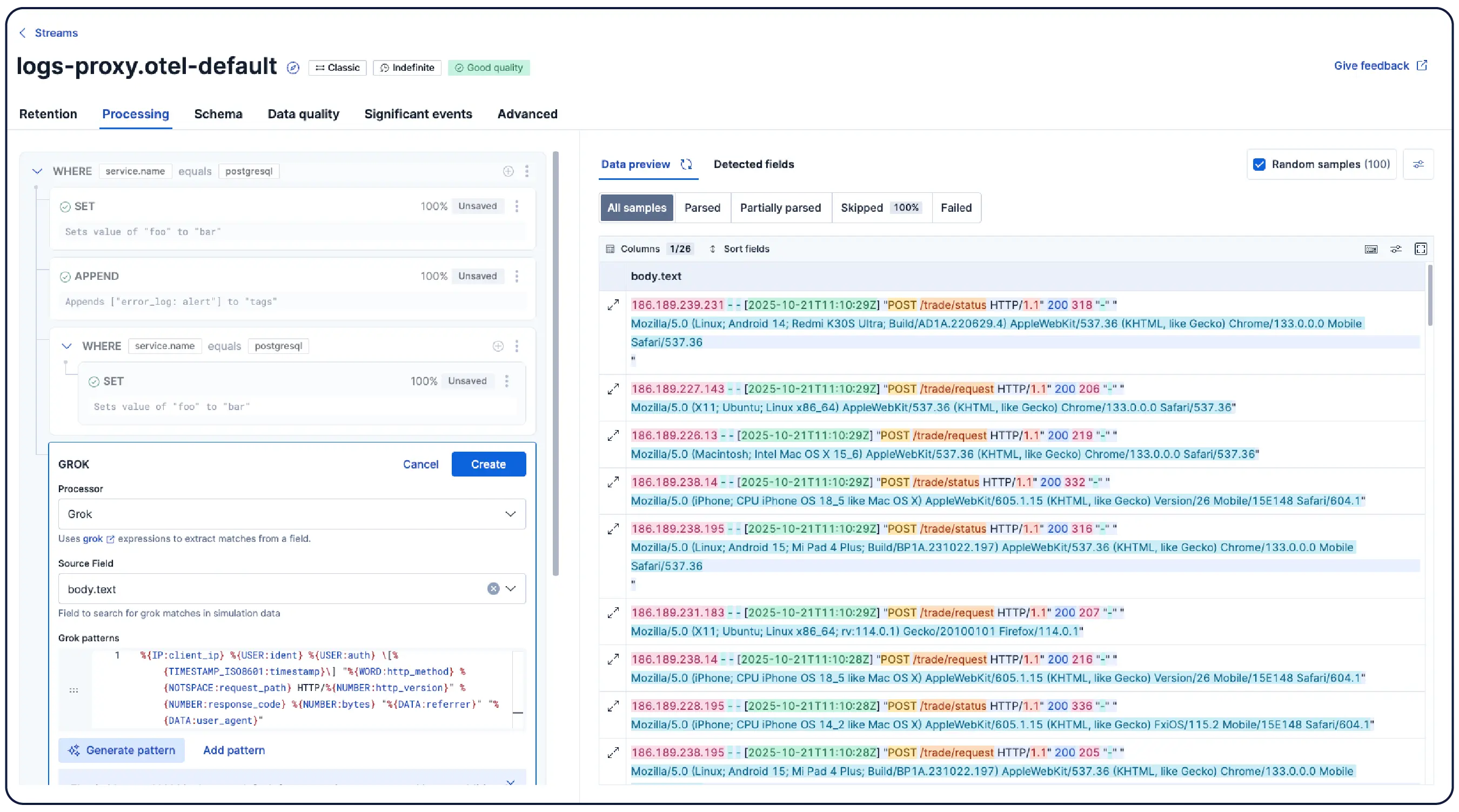

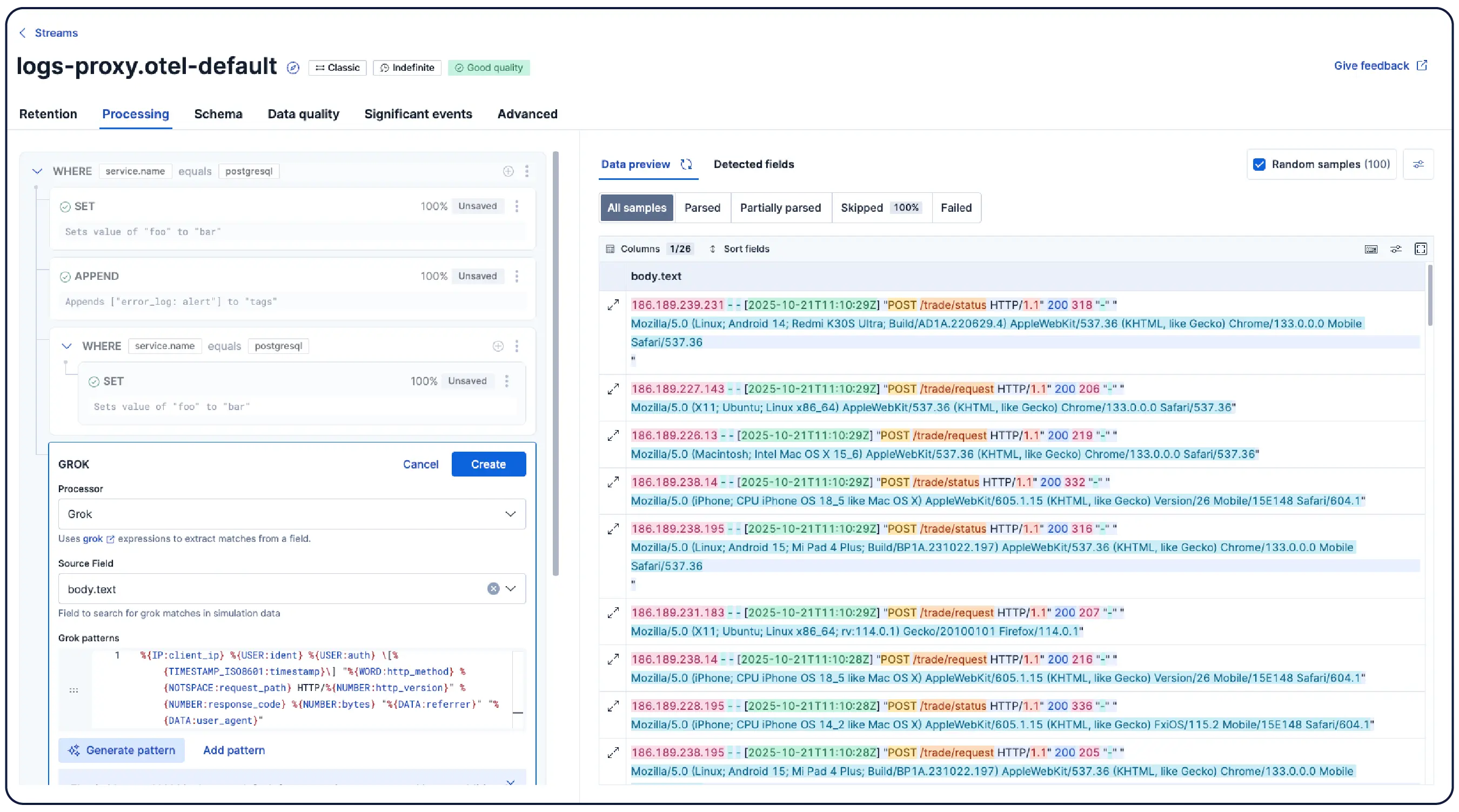

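With minimal manual configuration, Elastic applies parsing, partitioning, field extraction, and lifecycle policies, and automatically organizes your data into logical Streams.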

See why your peers choose Elastic Observability

Use log analytics at scale to turn messy logs into operational answers.

Customer story

Comcast uses Elastic to ingest 400 TB of data every day, monitoring its services and accelerating root cause analysis to deliver a best-in-class customer experience.

Customer story

Implementing a centralized logging platform on Elastic cut storage costs by 50% and shortened data retrieval times.

Customer story

Informatica reduced costs and shortened MTTR by migrating its entire logging workload, spanning more than 100 applications and more than 300 Kubernetes clusters, to Elastic.

Join the chat

Connect with Elastic's global community and join the open conversation and collaboration.

.jpg)