Building real-time address search with the Australian G-NAF dataset

In Australia, it pays to know where you are and where you are going. In a vast country with climates ranging from deserts to sub-tropical rainforest, the last thing you'd want on your next adventure is to get lost and accidentally end up with a desert posthumously named after you.

In 2016, the Geocoded National Address File (a.k.a. G-NAF) was made openly available by PSMA, an unlisted public company formed by the nine governments of Australia to collate and standardise, format, and aggregate location data from each of their jurisdictions. The G-NAF comprises authoritative national datasets that are rich and complex, with nearly 200 files across 35 different database tables.

The goal of this blog post is to show you how to use the power of Elasticsearch to build a real-time, suggestive, typeahead implementation across the approximately 14 million addresses in the G-NAF dataset.

The task is broken down into multiple separate steps:

- Download and install the prerequisites

- Stage the G-NAF datasets into a SQL database

- Create the Elasticsearch index and mappings

- Bulk index into Elasticsearch

- Query demonstrations for: i. Address search ii. Geo search

Let's get started!

Download and install the prerequisites

Before you can follow this example, you must download and install a number of resources:

- elastic-gnaf.fsx; The complete F# script as described throughout this blog post.

- Elasticsearch; This example makes use of the

search_as_you_typefeature implemented in 7.2. To get started easily, spin up a 14-day free trial of the Elasticsearch Service on Elastic Cloud. - August 2019 G-NAF data file; At time of writing, the latest dataset was published on 19th August 2019. Download this dataset (1.48Gb) and unzip it somewhere locally (expands to 2Gb).

- Microsoft F#; A mature, open source, cross-platform, functional-first programming language that runs on Linux, Mac OS X, Android, iOS, Windows, GPUs, and browsers. The example makes use of the interactive scripting console

fsi.exe. - Microsoft SQL Server 2017 Express or another capable SQL database. The G-NAF dataset includes pipe-separated data files along with scripted table creation, foreign key relationships, and views. Staging the data in a SQL server prior to indexing into Elasticsearch enables us to harness the bundled G-NAF scripts.

- SQL Server Management Studio 18.2; Enables you to easily manage Microsoft SQL server.

- The .NET Elasticsearch client and its dependencies. These can be downloaded from nuget, unzipped, and copied into the same directory as the

elastic-gnaf.fsxscript.- NEST 7.3.0; A strongly typed interface to Elasticsearch. Fluent and classic object initializer mappings of requests and responses.

- Elasticsearch.Net 7.3.0; A low-level, dependency- free client that has no opinions about how you build and represent your requests and responses.

- System.Diagnostics.DiagnosticSource 4.5.1

- System.Buffers 4.5.0

For those more familiar with Postgres, Oracle, or MySQL databases, this example would work just as well, with some minor tweaking to import and SQL statements.

Stage the G-NAF datasets into a SQL database

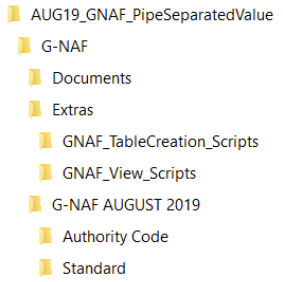

With the G-NAF zip file downloaded and extracted you should be presented with a number of directories and files:

- Documents - Product description and release notes

- Extras/* - SQL scripts for table creation, foreign key relationships, and views

- G-NAF AUGUST 2019/Authority Code - Shared reference data

- G-NAF AUGUST 2019/Standard - Australian State data

You should now create a new empty SQL database (named gnaf) to hold the data. This is easily done by following this Microsoft SQL Server step-by-step guide.

Initialise the SQL database

The G-NAF zip file contains a number of scripts that can be used to initialise the newly created database with the correct tables, foreign key constraints, and views. The elastic-gnaf.fsx script contains the following function to iterate these files and apply them to the database:

let initSql (sqlConnection:SqlConnection) =

let getCommand file =

let fixLine (line:string) = line.Replace("CREATE OR REPLACE VIEW ADDRESS_VIEW", "CREATE VIEW ADDRESS_VIEW")

let fixLines file = File.ReadAllLines(file) |> Array.map fixLine

String.Join(Environment.NewLine, fixLines file)

let tableScriptsDir = Path.Combine(unzippedGNAFDir, @"Extras\GNAF_TableCreation_Scripts")

let createTables = Path.Combine(tableScriptsDir, "create_tables_sqlserver.sql")

let constraints = Path.Combine(tableScriptsDir, "add_fk_constraints.sql")

let createView = Path.Combine(unzippedGNAFDir, @"Extras\GNAF_View_Scripts\address_view.sql")

log "Initialising SQL database"

for setupFile in [| createTables; constraints; createView |] do

let commandText = getCommand setupFile

let command = new SqlCommand(commandText, sqlConnection)

if command.ExecuteNonQuery() <> -1 then failwith (sprintf "Received failure return value for %s" setupFile)

sqlConnection

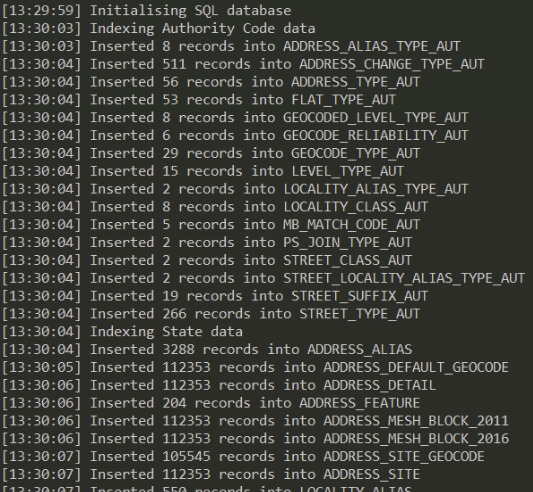

After the initialisation, the next part of the script iterates the pipe-separated data files and bulk imports them into the SQL database. This should result in a database around 12Gb in size:

let indexSql (sqlConnection:SqlConnection) =

let indexFiles(dir, regex) =

let bulkSqlInsert command table =

let command = new SqlCommand(command, sqlConnection)

command.CommandTimeout <- 600

let returnValue = command.ExecuteNonQuery()

if returnValue = 0 then failwith (sprintf "No records inserted into %s" table)

else logf "Inserted %i records into %s" returnValue table

for file in Directory.EnumerateFiles(dir) do

let fileInfo = FileInfo file

let rMatch = Regex.Match(fileInfo.Name, regex)

let table = rMatch.Groups.["table"].Value

let bulkInsert = sprintf "BULK INSERT %s FROM '%s' WITH (FIELDTERMINATOR = '|', FIRSTROW = 2)" table fileInfo.FullName

bulkSqlInsert bulkInsert table

log "Indexing Authority Code data"

let dataAuthorityDir = Path.Combine(unzippedGNAFDir, @"G-NAF AUGUST 2019\Authority Code")

indexFiles (dataAuthorityDir, "^Authority_Code_(?<table>.*?)_psv.psv$")

log "Indexing State data"

let dataStandardDir = Path.Combine(unzippedGNAFDir, @"G-NAF AUGUST 2019\Standard")

indexFiles (dataStandardDir, "^[^_]*_(?<table>.*?)_psv.psv$")

sqlConnection

When running the elastic-gnaf.fsx script, you will see progress written to the console output:

When the script completes, you will have successfully staged the G-NAF data into a SQL database ready for ingestion into Elasticsearch.

Create the Elasticsearch index and mappings

Before you bulk index into Elasticsearch, you must define the document structure, create an index, and configure the mappings.

Define the document structure

Since this example uses F# , the document structures are defined using F# records:

type AddressComponent =

{ BuildingName: string

Number: string

Street: string

Locality: string

State: string

Postcode: string }

let toDisplay (address:AddressComponent) =

seq {

yield address.Number

yield address.Street + ","

yield address.Locality

yield address.State

yield address.Postcode

}

|> String.concat " "

type Address =

{ Id: string 1.

Display: string 2.

Location: GeoLocation 3.

Component: AddressComponent 4. }

The intention behind these records types is to store the primary items of interest in their own fields:

- Id - The G-NAF dataset already provides an identifier

ADDRESS_DETAIL_PID, so you can use this value, e.g.GANT_703838111 - Display - The full formatted address used for display purposes. This will also be used for the

search_as_you_typesearch capability, e.g.6 Macquarie Street, Sydney, NSW 2000. The G-NAF dataset does not provide a full formatted address, so you will have to write some data transformations. - Location - The address latitude and longitude, using the NEST

GeoLocationtype. This will be indexed as ageo_pointso you can use geo queries in the .NET API. - Component - The separated address components. This is not indexed, but can be retrieved through the

_sourceproperty on search hits.

Create the index and mappings

Now that you have defined the document structure through the use of an F# type, you can go ahead and create the Elasticsearch index along with the mapping:

let createIndex (elasticClient:ElasticClient) =

let properties = new Properties<Address>()

properties.Add(PropertyName.op_Implicit "display", new SearchAsYouTypeProperty()) 1.

properties.Add(PropertyName.op_Implicit "location", new GeoPointProperty()) 2.

let mapping = new TypeMapping()

mapping.Properties <- properties

let settings = new IndexSettings()

settings.NumberOfReplicas <- Nullable 0 3.

settings.NumberOfShards <- Nullable 1 4.

let createIndexRequest = new CreateIndexRequest(IndexName.op_Implicit elasticsearchIndex)

createIndexRequest.Settings <- settings

createIndexRequest.Mappings <- mapping

logf "Creating index %s" elasticsearchIndex

let indexResponse = elasticClient.Indices.Create(createIndexRequest)

logf "Created index %O" indexResponse

- Map the

displayproperty as a search-as-you-type property. - Map the

locationproperty as a geo point property. - In this example, we aren't interested in replicating the primary shard, so you can set the number of replicas to 0.

- The indexed data will be less than 12Gb, so you can configure 1 shard.

When you run the script, the following HTTP request will be issued to the Elasticsearch server and the index will be created:

PUT /address

{

"mappings": {

"properties": {

"display": {

"type": "search_as_you_type"

},

"location": {

"type": "geo_point"

}

}

},

"settings": {

"index.number_of_replicas": 0,

"index.number_of_shards": 1

}

}

Bulk index into Elasticsearch

Now you have created the index and mapping, you can enumerate the SQL database and bulk index into Elasticsearch.

The bulk indexing function utilises the bulk helper in the NEST client:

let bulkIndex (elasticClient:ElasticClient) (sqlConnection:SqlConnection) =

let timeout = TimeSpan.FromMinutes(60.0) 1.

let currentPage = ref 0

let perBulkRequest = Nullable 10000 2.

let backoffTime = "30s" 3.

let backoffRetries = Nullable 2

let parallelism = Nullable 4 4.

let columnValue column (reader:SqlDataReader) =

let ordinal = reader.GetOrdinal(column)

if reader.IsDBNull(ordinal) then String.Empty

else reader.[ordinal].ToString()

let columnDecimalValue column (reader:SqlDataReader) =

reader.GetOrdinal(column) |> reader.GetDecimal

let address (reader:SqlDataReader) = 5.

// omitted for brevity

let readCommand = new SqlCommand("SELECT * FROM ADDRESS_VIEW", sqlConnection) 6.

readCommand.CommandTimeout <- 600

let reader = readCommand.ExecuteReader()

let results = 7.

seq {

while reader.Read() do

let id = columnValue "ADDRESS_DETAIL_PID" reader

let lat = columnDecimalValue "LATITUDE" reader

let lon = columnDecimalValue "LONGITUDE" reader

let addressParts = address reader

yield {

Id = id

Display = addressParts |> toDisplay

Location = GeoLocation.TryCreate((double)lat, (double)lon)

Component = addressParts

}

}

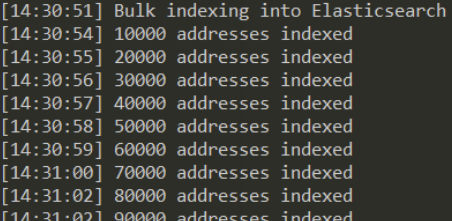

log "Bulk indexing into Elasticsearch"

elasticClient.BulkAll(results, fun (b:BulkAllDescriptor<Address>) ->

b.Index(IndexName.op_Implicit elasticsearchIndex)

.BackOffTime(Time.op_Implicit backoffTime)

.BackOffRetries(backoffRetries)

.RefreshOnCompleted()

.MaxDegreeOfParallelism(parallelism)

.Size(perBulkRequest) :> IBulkAllRequest<Address>).Wait(timeout, fun next ->

let page = Interlocked.Increment(currentPage)

logf "%i addresses indexed" (page * perBulkRequest.Value) 8.

) |> ignore

log "Bulk indexing complete"

- Set the bulk timeout to 60 minutes, which should be enough time to index all of the address data into Elasticsearch.

- Index 10,000 addresses per bulk request.

- If bulk indexing encounters any errors, back off for 30 seconds up to 2 times.

- Perform 4 bulk requests in parallel.

- Formatting the address is omitted for brevity.

- Use the G-NAF provided

ADDRESS_VIEWto enumerate the SQL data. - Lazily enumerate the result of this SQL query and transform the output into the defined F# type.

- Write progress to the console.

In the background many bulk requests are issued to the Elasticsearch server that look similar to:

POST /address/_bulk

{"index":{"_id":"GANT_702959719"}}

{"id":"GANT_702959719","display":"2/9 Glyde Court, Leanyer NT 0812","location":{"lat":-12.368626,"lon":130.899675},"component":{"buildingName":"","number":"2/9","street":"Glyde Court","locality":"Leanyer","state":"NT","postcode":"0812"}}

{"index":{"_id":"GANT_702959720"}}

{"id":"GANT_702959720","display":"5/53 Rosewood Crescent, Leanyer NT 0812","location":{"lat":-12.368795,"lon":130.898465},"component":{"buildingName":"","number":"5/53","street":"Rosewood Crescent","locality":"Leanyer","state":"NT","postcode":"0812"}}

{"index":{"_id":"GANT_702959721"}}

...omitted for brevity...

The console will show the following output:

Congratulations, you have successfully indexed the G-NAF dataset into Elasticsearch and are ready to start experimenting with querying!

Query demonstrations

Address search

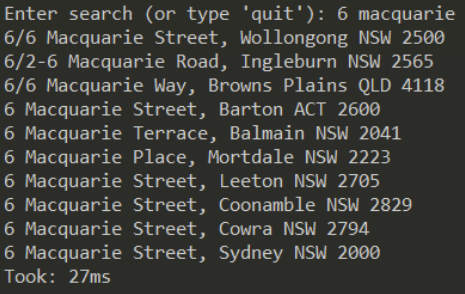

You can now use the search_as_you_type functionality on the display field to perform an address search:

let searchAsYouTypeDemo (elasticClient:ElasticClient) =

let search text =

let query = new MultiMatchQuery() 1.

query.Query <- text

query.Type <- Nullable.op_Implicit TextQueryType.BoolPrefix

query.Fields <- Fields.op_Implicit "display,display._2gram,display._3gram" 2.

let request = new SearchRequest<Address>()

request.Query <- new QueryContainer(query)

let searchAsYouTypeResponse = elasticClient.Search<Address>(request) 3.

displayResults searchAsYouTypeResponse 4.

let readLine () =

Console.Write "\nEnter search (or type 'quit'): "

Console.ReadLine()

let readlines = Seq.initInfinite (fun _ -> readLine())

let run item = if item = "quit"

then Some(item)

else

search item

None

Seq.pick run readlines |> ignore

elasticClient

- Perform a multi-match query using the console input as the query text, a

bool_prefixtype, and the fields shown in 2. - Fields indexed using the

search_as_you_typefield type are also indexed alongside auto-generated fields to contain processed versions of the input. When you construct your query, you must include these additional fields. - Perform the search.

- Write the results to the console and show the time taken.

At this point in the script you are able to enter a search query and view the results:

In the background, the script dispatches a search query to the Elasticsearch server:

POST /address/_search

{

"query": {

"multi_match": {

"fields": [

"display",

"display._2gram",

"display._3gram"

],

"query": "6 macquarie",

"type": "bool_prefix"

}

}

}

...and the server returns a list of results. For example:

{

"took": 27,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 10000,

"relation": "gte"

},

"max_score": 7.0198717,

"hits": [

{

"_index": "address",

"_type": "_doc",

"_id": "GANSW706320144",

"_score": 7.0198717,

"_source": {

"id": "GANSW706320144",

"display": "6\/6 Macquarie Street, Wollongong NSW 2500",

"location": {

"lat": -34.4205949,

"lon": 150.88502529

},

"component": {

"buildingName": "",

"number": "6\/6",

"street": "Macquarie Street",

"locality": "Wollongong",

"state": "NSW",

"postcode": "2500"

}

}

},

{

"_index": "address",

"_type": "_doc",

"_id": "GANSW710276847",

"_score": 5.8887067,

"_source": {

"id": "GANSW710276847",

"display": "6 Macquarie Street, Sydney NSW 2000",

"location": {

"lat": -33.86738924,

"lon": 151.21305098

},

"component": {

"buildingName": "Parliament House",

"number": "6",

"street": "Macquarie Street",

"locality": "Sydney",

"state": "NSW",

"postcode": "2000"

}

}

}

...omitted for brevity...

]

}

}

Congratulations! You have just searched the G-NAF address dataset and returned a list of potential candidates for a matching address.

You can now choose to enter another search query, or type 'quit' to proceed to the Geo Search example.

Geo search

After you quit the address search demonstration the script advances to an example of a Geo Distance query, looking for addresses within 20 kilometres of Uluru.

let geoSearchDemo (elasticClient:ElasticClient) =

let query = new GeoDistanceQuery() 1.

query.Field <- Field.op_Implicit "location" 2.

query.Distance <- Distance.op_Implicit "20km" 3.

query.Location <- new GeoLocation(-25.3444, 131.0369) 4.

let request = new SearchRequest<Address>()

request.Query <- new QueryContainer(query)

let geoSearchResponse = elasticClient.Search<Address>(request) 5.

displayResults geoSearchResponse 6.

- Perform a Geo Distance query.

- Use the

locationproperty on the address. - Search all addresses within 20 kilometres of the query coordinates in 4.

- Set the query coordinates.

- Perform the search.

- Write the results to the console and show the time taken.

At this point, the console displays the addresses found.

In the background, the script has dispatched a search query to the Elasticsearch server:

POST /address/_search

{

"query": {

"geo_distance": {

"distance": "200m",

"location": {

"lat": -33.71,

"lon": 150.31

}

}

}

}

...and the server returns a list of results similar to the previous example.

In Closing

This example and blog post was put together during my Spacetime, a program run by Elastic.

Spacetime gives you, the engineer, the chance to break free from your day-to-day responsibilities and to explore something new and of interest. For me this was to experiment more with F# and the G-NAF datasets.

This freedom for engineers is baked into our source code.