Observability trends for 2026 (Part 2): GenAI and OpenTelemetry reshape the landscape


Over the course of my 20 years as a developer, SRE, and now observability product leader, software has typically progressed at a steady pace. Now, however, two transformative technologies are fundamentally reshaping enterprise observability: generative AI (GenAI) and OpenTelemetry (OTel).

We surveyed over 500 IT decision-makers for a new report, The Landscape of Observability in 2026: Balancing Cost and Innovation. The data confirms what many practitioners suspect: these technologies aren't science projects anymore. They're in production, reshaping vendor decisions, and changing how teams actually work.

Generative AI: The hype is real, but so are the limitations

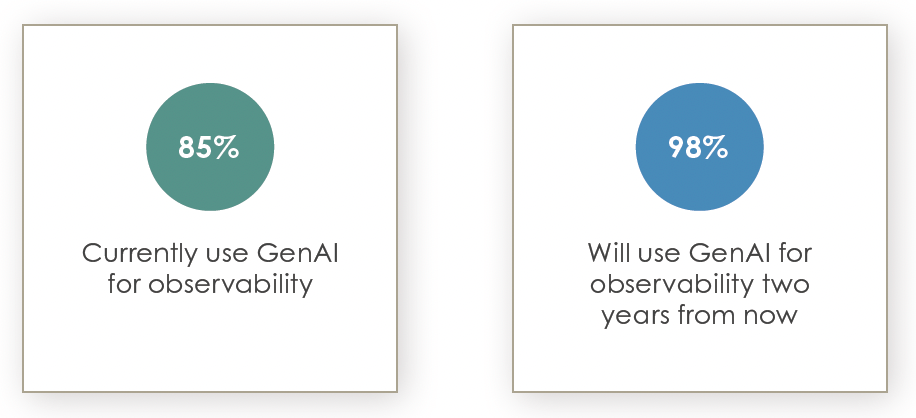

Today, 85% of organizations use some form of GenAI for observability. Within two years, that number will reach 98%. At that point, it becomes table stakes for any platform worth evaluating.

Why the speed? Observability generates data at a scale humans can't process manually. Correlating logs, metrics, and traces during an incident used to require an engineer who could hold an entire system topology in their head. GenAI automates that pattern recognition and lets teams query telemetry in plain language.

That's the promise. The reality is messier.

How teams are actually implementing generative AI

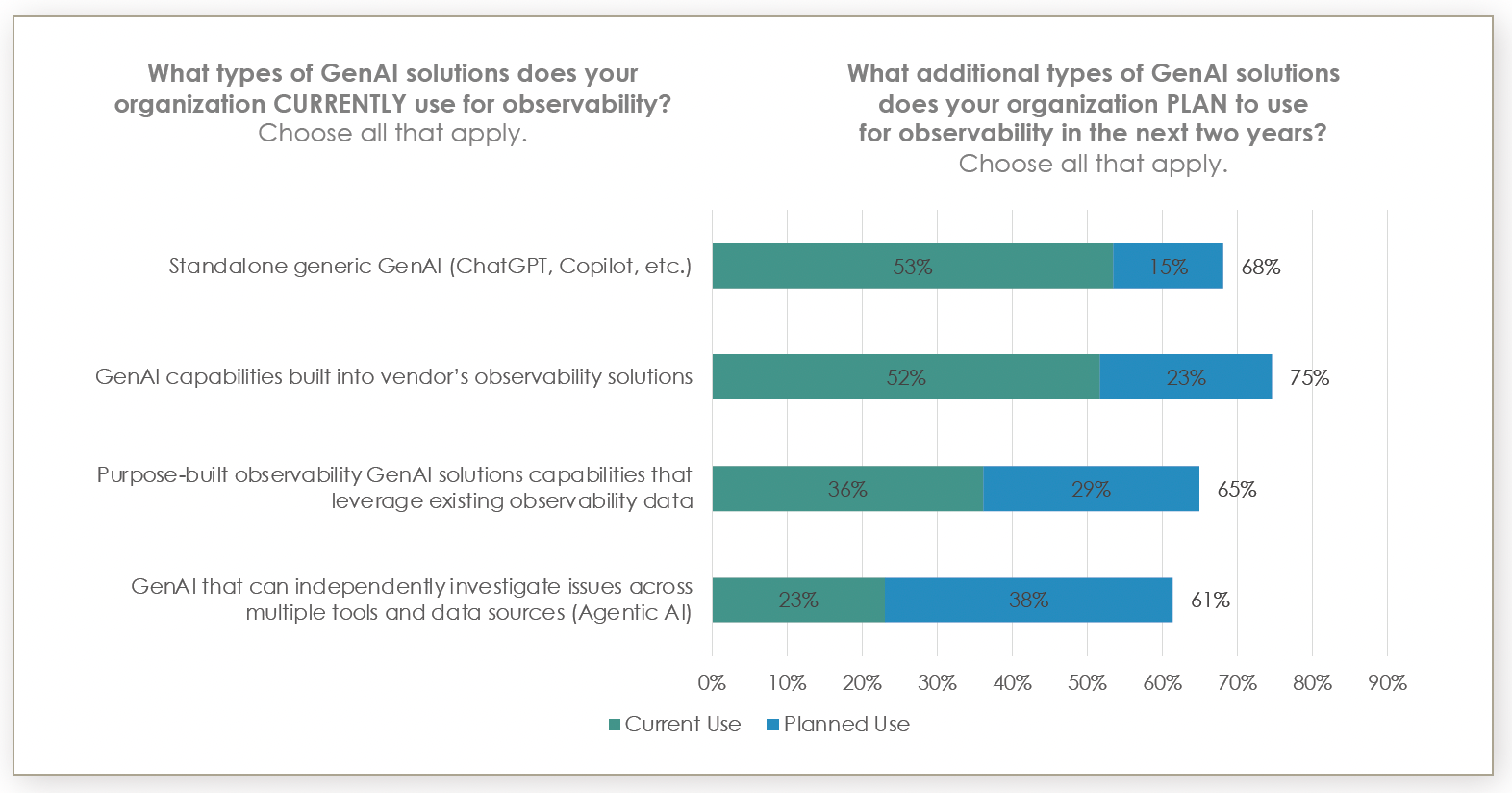

Organizations run multiple approaches simultaneously because no single one does everything well.

Standalone tools (like ChatGPT and Claude) and built-in platform capabilities show nearly identical adoption, at 53% and 52% respectively. Both are easy to deploy without custom work.

Then, the trajectories diverge.

15% plan to add standalone GenAI

23% plan to focus on built-in features

Vendor-integrated GenAI reaches 75% adoption within two years

This makes sense: standalone tools lack context. You can paste logs into ChatGPT, but it doesn't know your service dependencies or deployment patterns. That's useful for ad hoc analysis but not for production workflows. Vendor-integrated solutions operate on your data with that context already present.

Purpose-built observability GenAI and agentic AI require more integration effort. Current adoption is lower, but interest is high among teams who've matured past the basics.

GenAI maturity matters more than budget

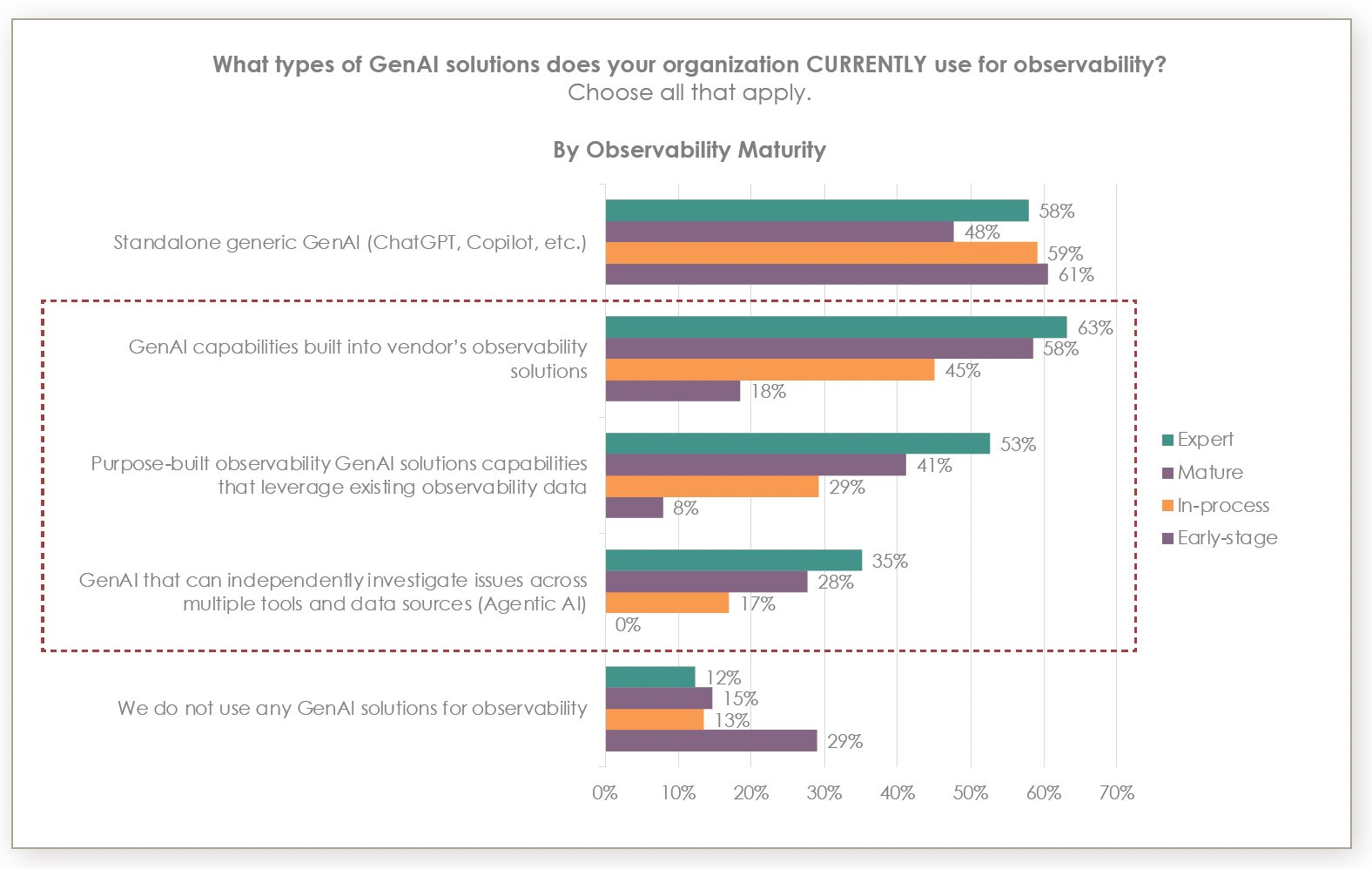

Early-stage teams show 71% GenAI adoption versus 85%–88% among mature organizations. The more interesting pattern is which GenAI solutions they use.

Standalone generic GenAI stays flat across maturity levels because it’s easy to adopt anywhere. The gap appears in observability-specific implementations. Vendor-integrated, purpose-built, and agentic AI correlate with maturity.

Agentic AI shows this starkly. These autonomous systems investigate issues, correlate data, and execute remediation without human initiation. 23% of teams currently use them, and an additional 38% plan to use them. By maturity levels:

Expert teams: 35%

Mature teams: 28%

In-process: 17%

Early-stage: 0%

Adoption is zero among early-stage teams because agentic AI has prerequisites they lack: comprehensive telemetry, consistent schemas, documented dependencies, codified runbooks, and mature incident response. You can't deploy autonomous remediation if you haven't defined what remediation means.

If you're still building foundational coverage, focus on vendor-integrated GenAI first. The autonomous stuff can wait.

Efficiency gains for observability teams are modest — for now

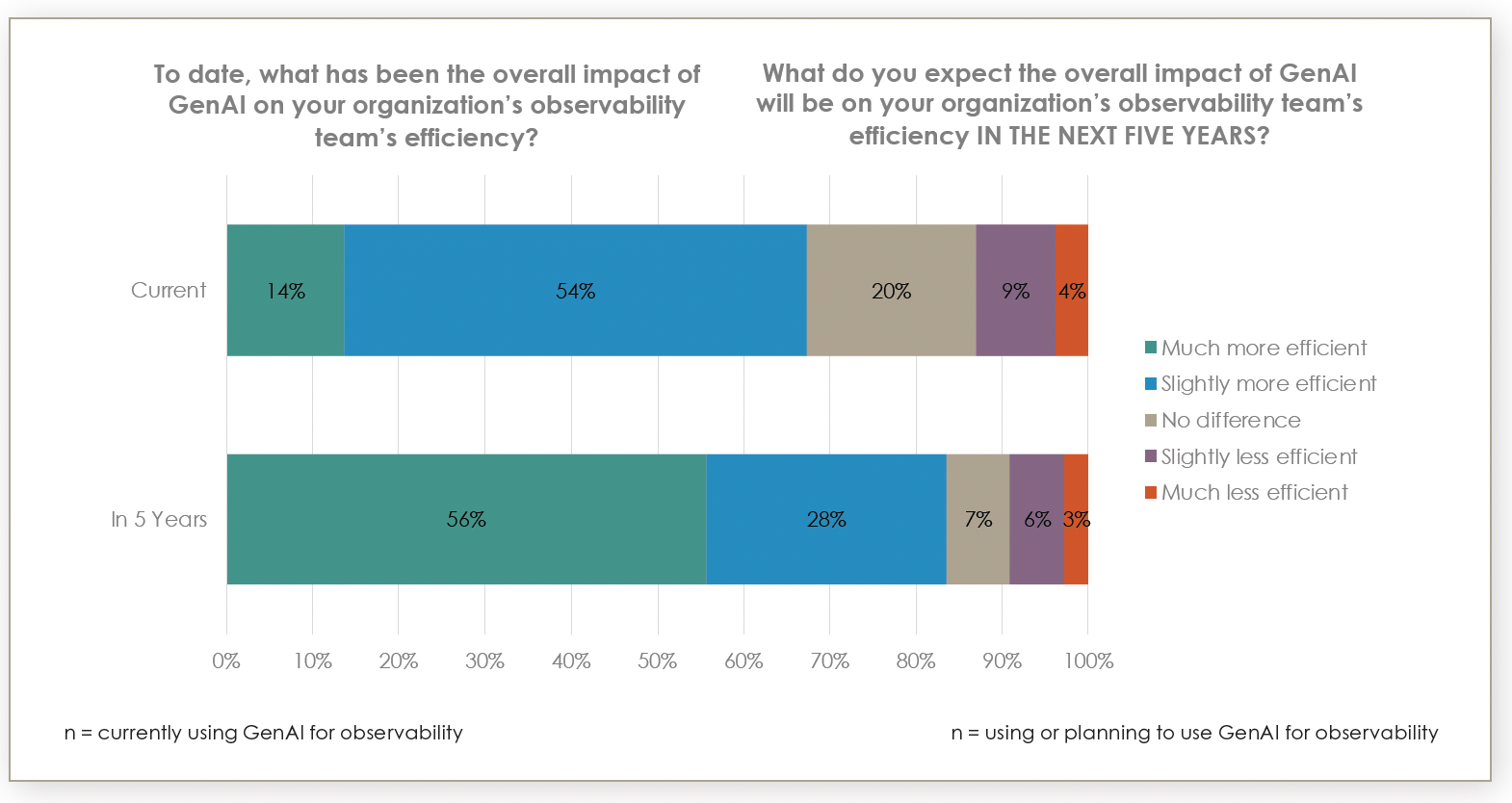

68% of teams using GenAI report increased efficiency, but only 14% call the gain substantial; the rest see modest improvement. GenAI helps, but it hasn't transformed operations for most teams yet.

Over the next five years, 84% of teams expect to see improvement, and 56% expect substantial gains: four times the 14% who report substantial gains today.

The gap makes sense. Current implementations are first-generation with limited scope. Teams are still figuring out effective workflows. This matches other automation technologies — initial gains stay modest until processes restructure around new capabilities.

Where the efficiency actually shows up is in making existing data accessible. Many organizations have data lakes sitting underutilized because only a few engineers know how to query them; the data just dies there. Natural language interfaces resurrect that investment. Queries that took minutes of expert work become 60-second requests anyone can make.

That alone justifies the investment for many teams even without autonomous remediation.

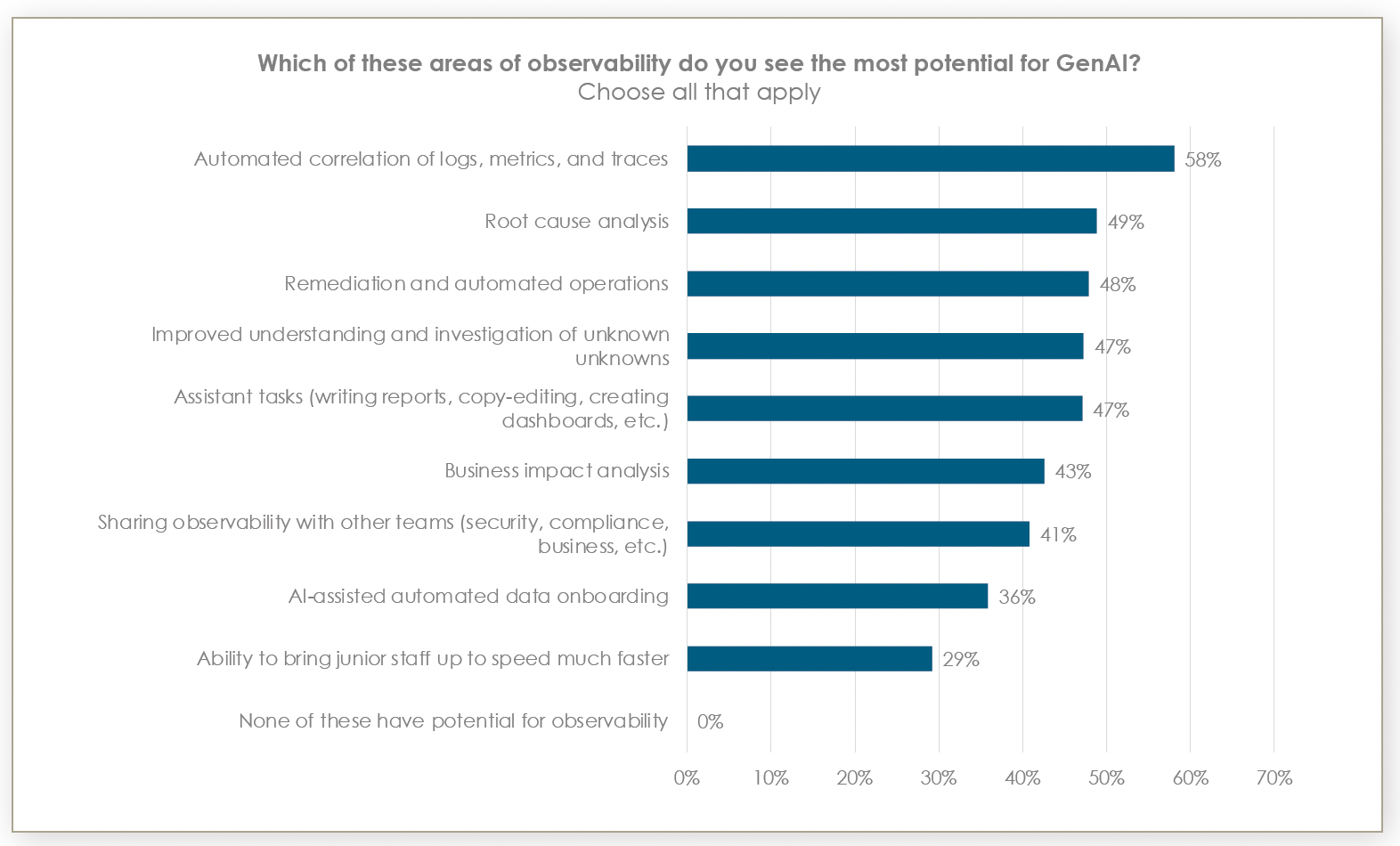

Observability areas where generative AI actually works

Correlation and automation drive the highest-value applications:

Automated correlation of logs, metrics, traces (58%): Connecting signals across telemetry types during incidents. This used to require someone who knew the whole system.

Root cause analysis (49%): Pattern matching across failure modes, dependencies, and historical data.

Remediation and automated operations (48%): Executing known procedures, scaling resources, applying fixes. Requires guardrails.

Unknown unknowns (47%): AI identifies anomalies that weren't being monitored. Useful in distributed systems where manual alerting always has gaps.

Assistant tasks (47%): Reports, dashboards, and query optimization. Makes observability accessible to non-specialists.
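To make "automated correlation" concrete, here is a minimal sketch of the simplest join an AI assistant (or a human) builds on: grouping spans and log records by a shared trace ID. It assumes every span and correlated log record carries a `trace_id` field, as OTel-instrumented logs can when trace context is propagated. The record shapes, field names, and the `correlate` and `TraceGroup` names are hypothetical, and metrics are left out because they usually need a different join key, such as service name plus time window.

```python
from dataclasses import dataclass, field


@dataclass
class TraceGroup:
    """Spans and log records that share one trace ID."""
    trace_id: str
    spans: list = field(default_factory=list)
    logs: list = field(default_factory=list)

    @property
    def has_error(self) -> bool:
        # A trace counts as failing if any span or log record reports an error.
        return any(s.get("status") == "ERROR" for s in self.spans) or any(
            rec.get("severity") == "ERROR" for rec in self.logs
        )


def correlate(spans, logs):
    """Group spans and log records by their shared trace_id field."""
    groups = {}
    for span in spans:
        tid = span["trace_id"]
        groups.setdefault(tid, TraceGroup(tid)).spans.append(span)
    for rec in logs:
        tid = rec.get("trace_id")
        if tid is None:
            continue  # no trace context was propagated, so nothing to join on
        groups.setdefault(tid, TraceGroup(tid)).logs.append(rec)
    return groups


# Hypothetical sample telemetry.
spans = [
    {"trace_id": "a1", "service": "checkout", "status": "ERROR"},
    {"trace_id": "a1", "service": "payments", "status": "OK"},
    {"trace_id": "b2", "service": "search", "status": "OK"},
]
logs = [
    {"trace_id": "a1", "severity": "ERROR", "body": "payment call timed out"},
    {"severity": "INFO", "body": "cache warmed"},
]

groups = correlate(spans, logs)
failing = [g.trace_id for g in groups.values() if g.has_error]
print(failing)  # ['a1']
```

The log record without a trace ID is dropped from the join, which illustrates why consistent instrumentation is a prerequisite for any automated correlation.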

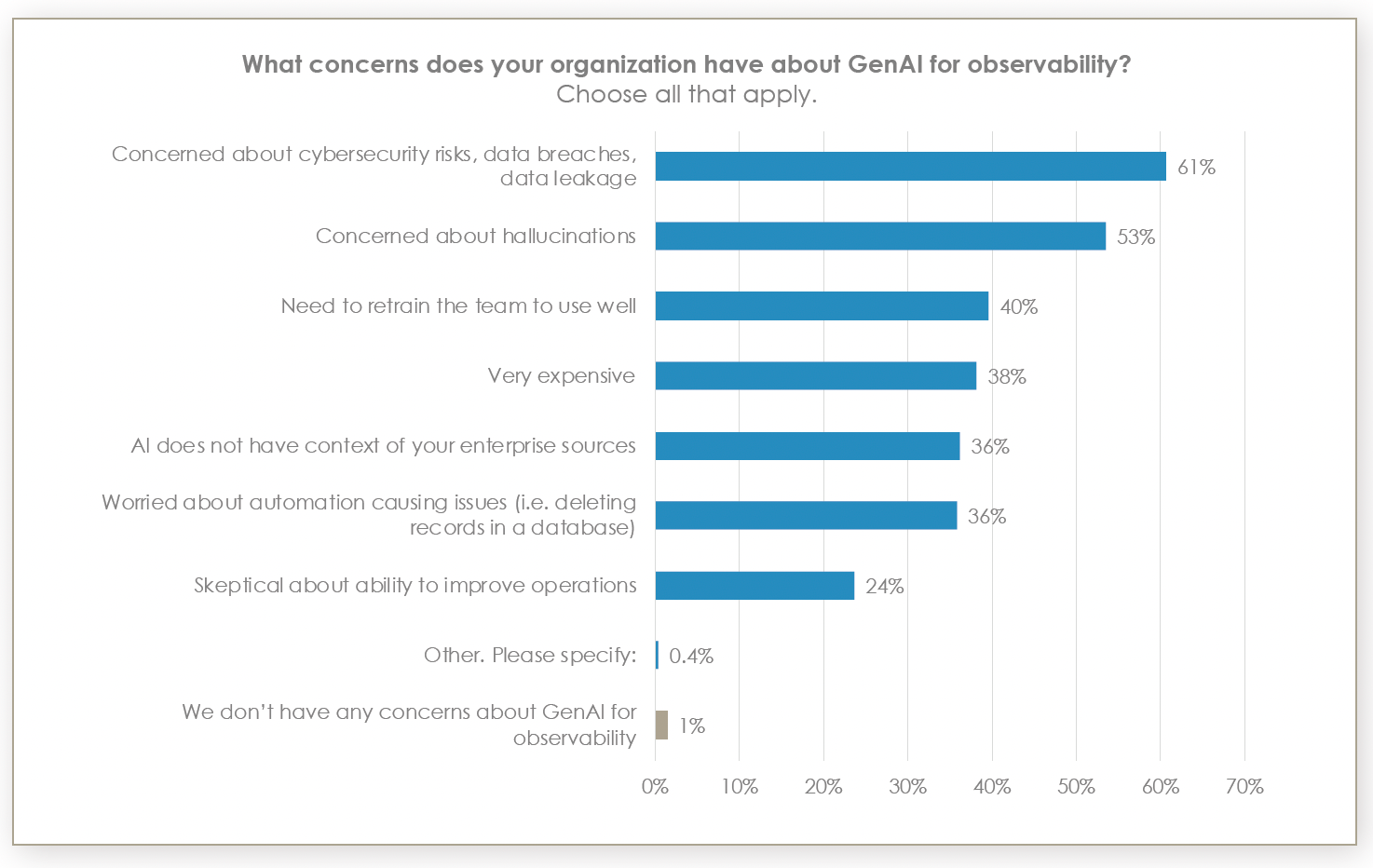

The concerns are legitimate

99% have concerns about GenAI for observability. The specifics:

Security and data leakage leads with 61%. Telemetry often contains sensitive information. Sending it to external LLMs is a legitimate problem. This drives preference for vendor solutions that keep data within existing security boundaries.
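One common mitigation when telemetry must leave your boundary is to redact sensitive values first. The sketch below assumes simple regex rules for email addresses, IPv4 addresses, and bearer tokens; the patterns and the `redact` helper are hypothetical, and real deployments need rules tuned to their own data, since regexes will miss formats they weren't written for.

```python
import re

# Hypothetical redaction rules, applied in order: (pattern, replacement).
REDACTION_RULES = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<email>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<ipv4>"),
    # Keep the header name, replace only the token value.
    (re.compile(r"(?i)(authorization:\s*bearer\s+)\S+"), r"\1<token>"),
]


def redact(line: str) -> str:
    """Mask sensitive substrings in a log line before it is sent anywhere external."""
    for pattern, replacement in REDACTION_RULES:
        line = pattern.sub(replacement, line)
    return line


raw = "user=jane@example.com ip=10.0.4.17 Authorization: Bearer eyJhbGciOi"
print(redact(raw))  # user=<email> ip=<ipv4> Authorization: Bearer <token>
```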

Hallucinations follow with 53%. AI generates confident nonsense. In incident response, acting on bad information makes outages worse.

Teams succeeding with GenAI treat outputs as hypotheses, not conclusions. With a human in the loop, AI identifies devices needing upgrades, surfaces problematic configurations, and finds patterns in historical data; you verify and act. That saves time even without letting AI make decisions.

Letting AI make changes without review is dangerous. An AI tool that decides to open all firewall ports will confidently explain why it's a good idea — it isn't. Guardrails and human review aren't optional yet. And maybe not for a while.
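A guardrail can be as simple as a policy check between what an AI proposes and what actually runs. This is a minimal sketch under assumed rules: a small allowlist may run unattended, a denylist never runs regardless of how convincing the model's explanation is, and everything else waits for a person. The action kinds, the `review` function, and the decision labels are hypothetical, and the function only returns a decision; it executes nothing.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProposedAction:
    kind: str    # hypothetical action type, e.g. "restart" or "rollback"
    target: str
    detail: str = ""


# Hypothetical policy: allowlist runs unattended, denylist never runs.
AUTO_APPROVED = {"restart", "scale_out"}
ALWAYS_BLOCKED = {"firewall_open_all", "delete_data"}


def review(action: ProposedAction, human_approves: Callable[[ProposedAction], bool]) -> str:
    """Return a decision for an AI-proposed action; nothing is executed here."""
    if action.kind in ALWAYS_BLOCKED:
        return "blocked"  # no explanation from the model can unlock these
    if action.kind in AUTO_APPROVED:
        return "auto_approved"
    # Treat the proposal as a hypothesis that a person must confirm.
    return "approved" if human_approves(action) else "rejected"


def deny(action: ProposedAction) -> bool:
    return False


def allow(action: ProposedAction) -> bool:
    return True


print(review(ProposedAction("restart", "checkout-svc"), deny))         # auto_approved
print(review(ProposedAction("firewall_open_all", "edge-fw"), allow))   # blocked
print(review(ProposedAction("rollback", "payments", "v1.4.2"), deny))  # rejected
```

Checking the denylist first means even a human approval callback can't unlock a blocked action, which keeps the most dangerous changes out of reach of a persuasive model.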

Skepticism about GenAI's overall usefulness is low, at 24%. Teams aren't questioning the value; they're working through implementation problems. The technology works, but it's immature, so treat it accordingly.

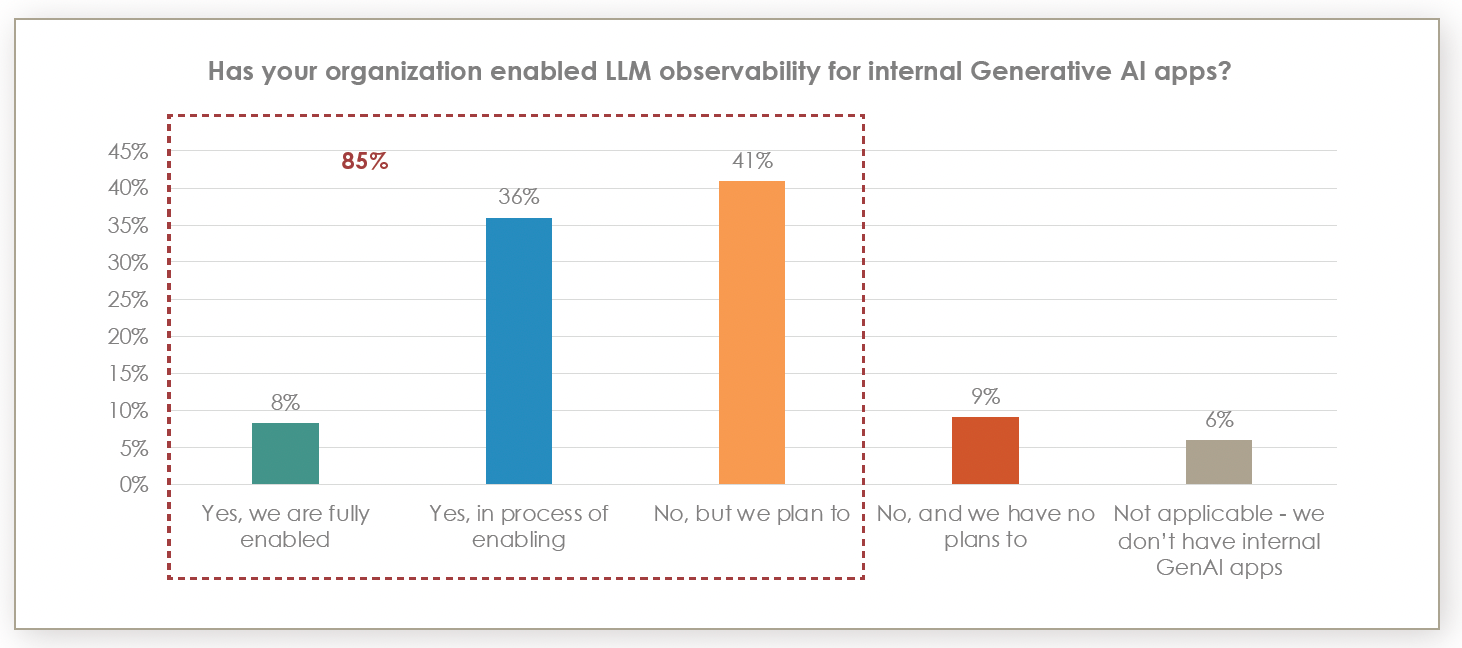

LLM observability: everyone plans it, nobody's done it

Organizations deploying GenAI for observability are also building internal GenAI applications that need monitoring. 85% plan to enable LLM observability, but only 8% have finished. 36% are working on it; 41% have plans but haven't started.

There are two probable reasons for the gap.

First, internal GenAI development is new. Second, LLM observability requires capabilities that traditional frameworks lack, such as token tracking, prompt effectiveness, response quality, LLM-specific latency, and cost attribution.
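Token tracking and cost attribution are the most mechanical of those capabilities. The sketch below assumes each LLM call is recorded with its team, model, token counts, and latency, and that prices are known per 1,000 input and output tokens. The model names, prices, and the `LLMCall` and `attribute` names are hypothetical; prompt effectiveness and response quality need evaluation data and aren't covered here.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K = {
    "model-a": {"input": 0.0005, "output": 0.0015},
    "model-b": {"input": 0.003, "output": 0.015},
}


@dataclass
class LLMCall:
    team: str
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def cost_usd(call: LLMCall) -> float:
    """Price one call from its input and output token counts."""
    price = PRICE_PER_1K[call.model]
    return (call.input_tokens * price["input"] + call.output_tokens * price["output"]) / 1000


def attribute(calls):
    """Aggregate total tokens, cost, and worst latency per team."""
    totals = defaultdict(lambda: {"tokens": 0, "cost_usd": 0.0, "max_latency_ms": 0.0})
    for call in calls:
        t = totals[call.team]
        t["tokens"] += call.input_tokens + call.output_tokens
        t["cost_usd"] += cost_usd(call)
        t["max_latency_ms"] = max(t["max_latency_ms"], call.latency_ms)
    return dict(totals)


calls = [
    LLMCall("search", "model-a", 1200, 300, 850.0),
    LLMCall("search", "model-b", 2000, 500, 2300.0),
    LLMCall("support", "model-a", 400, 100, 420.0),
]
report = attribute(calls)
print(report["search"]["tokens"])  # 4000
```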

94% are implementing or planning because GenAI applications need the same rigor as any production system.

Early implementers get visibility that others won't have; the rest are flying blind on their own AI.

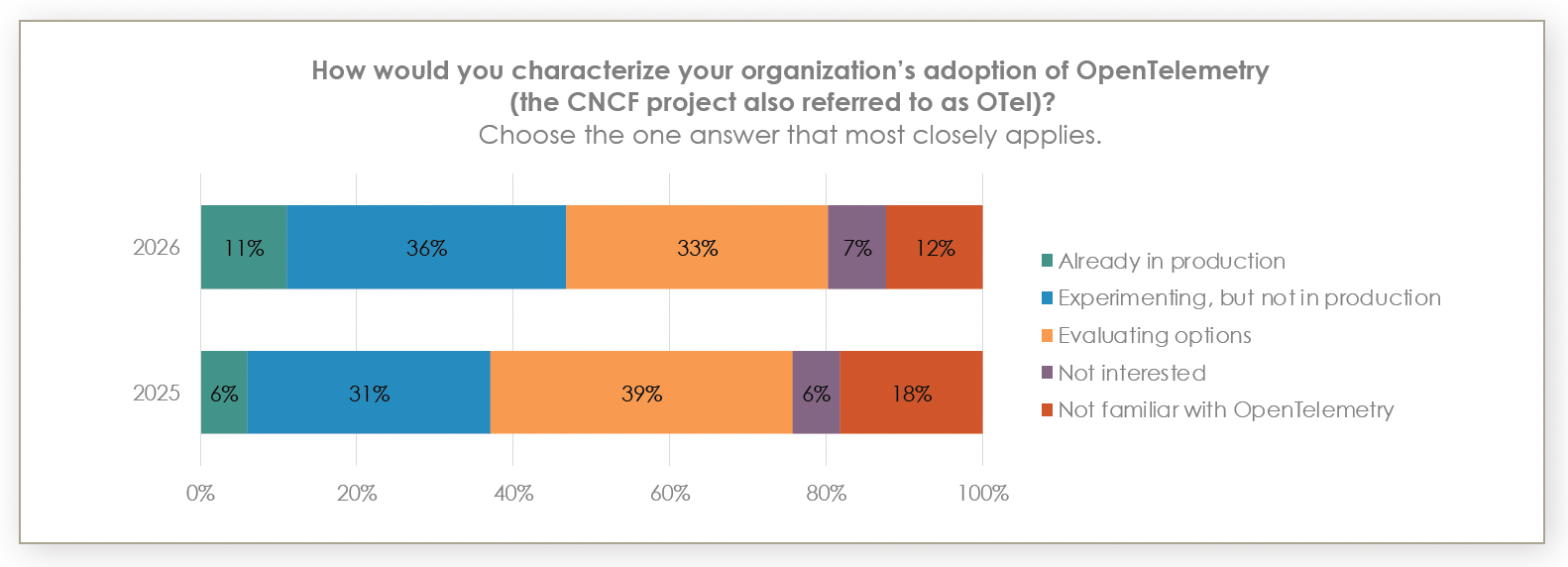

OpenTelemetry: Becoming the default for observability data

OTel in production nearly doubled year-over-year from 6% to 11%. These are still modest absolute numbers, but the trajectory indicates acceleration.

36% are experimenting (up from 31%) but have not yet reached production.

33% are evaluating their options.

19% are not interested in OTel adoption.

Overall, adoption is still early. But for new service instrumentation, OTel is increasingly the default. The real story isn't adoption percentages; it's what happens when teams move from evaluation to production.

Production experience changes everything

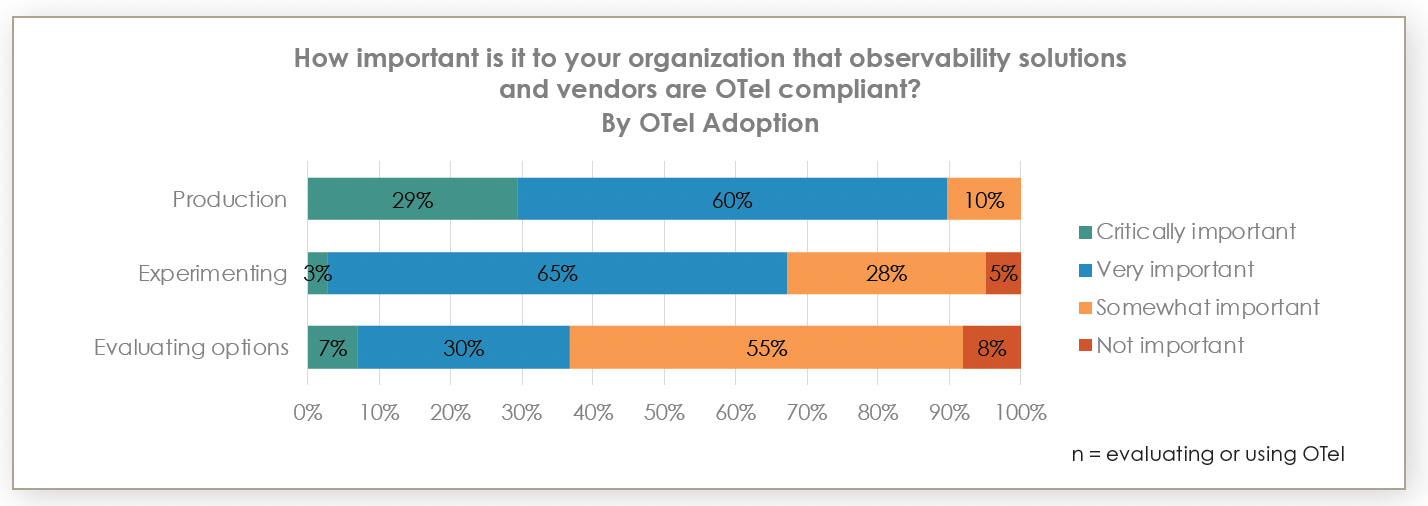

Among the organizations with OTel in production, 89% rate vendor compliance as critical or very important; among the teams that are still evaluating, 37% rate it as critical or very important.

Production teaches you what matters: full specification support, semantic conventions handling, and direct OTel ingestion without translation layers that add latency and lose fidelity. Solutions that are truly OTel-compliant are crucial in production environments.
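Part of "semantic conventions handling" is noticing when telemetry uses outdated attribute names. As an illustration, the OpenTelemetry HTTP semantic conventions renamed `http.method` to `http.request.method`, `http.status_code` to `http.response.status_code`, and `http.url` to `url.full`. The checker below is a hypothetical sketch: the `check_http_span` function and the choice of which attributes to require are assumptions, not part of any OTel SDK.

```python
# Old -> current attribute names from the OpenTelemetry HTTP semantic conventions.
DEPRECATED_HTTP_ATTRS = {
    "http.method": "http.request.method",
    "http.status_code": "http.response.status_code",
    "http.url": "url.full",
}

# Hypothetical minimum an ingest pipeline might require on an HTTP span.
REQUIRED_HTTP_ATTRS = ("http.request.method", "http.response.status_code")


def check_http_span(resource: dict, attributes: dict) -> list:
    """List semantic-convention problems for one HTTP span (empty list means OK)."""
    problems = []
    if "service.name" not in resource:
        problems.append("resource missing service.name")
    for old, new in DEPRECATED_HTTP_ATTRS.items():
        if old in attributes:
            problems.append(f"deprecated {old}: use {new}")
    for key in REQUIRED_HTTP_ATTRS:
        if key not in attributes:
            problems.append(f"missing {key}")
    return problems


legacy = check_http_span(
    {"service.name": "checkout"},
    {"http.method": "GET", "http.status_code": 200},
)
current = check_http_span(
    {"service.name": "checkout"},
    {"http.request.method": "GET", "http.response.status_code": 200},
)
print(len(legacy), current)  # 4 []
```

A backend that ingests OTel natively has to handle both generations of names while instrumentation migrates; otherwise, queries on the new names silently miss older data.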

As more implementations reach production, vendor OTel support shifts from differentiator to requirement. Vendors without robust native integration exclude themselves from consideration.

Vendor distributions are winning

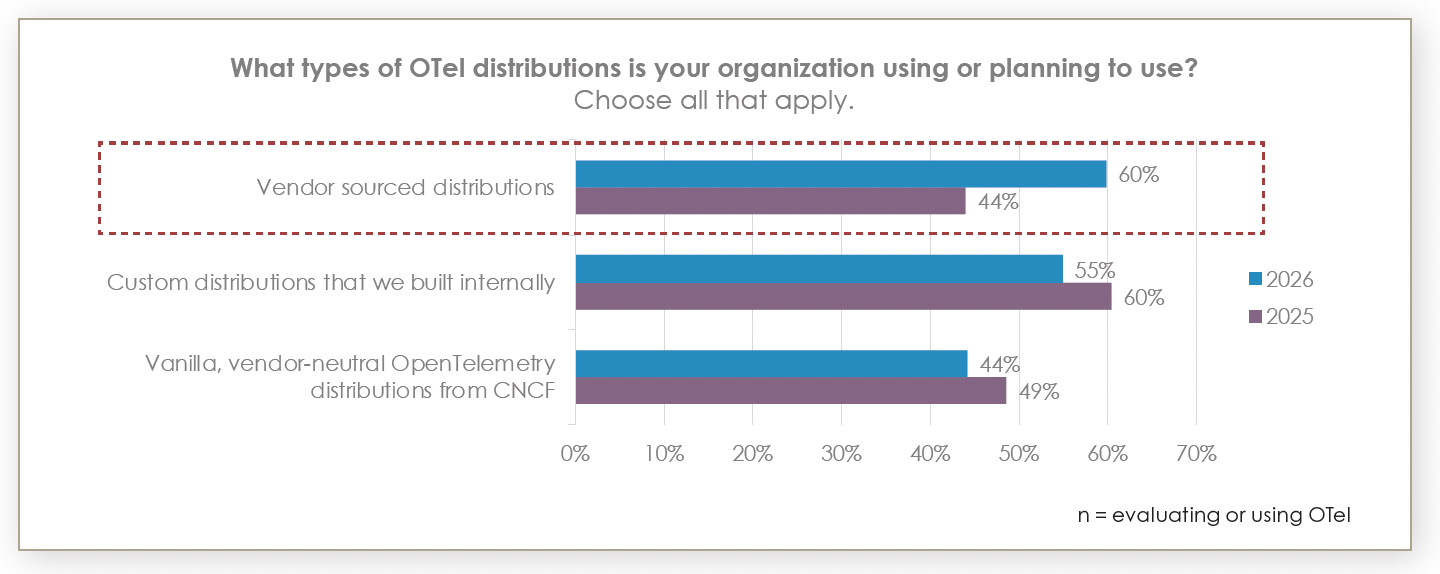

Vendor-sourced OTel distributions increased from 44% to 60% — a 36% year-over-year jump. Custom and vanilla distributions declined.
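The 36% figure is a relative increase, not a change in percentage points. The arithmetic:

```latex
\text{absolute change} = 60\% - 44\% = 16 \text{ percentage points}, \qquad
\text{relative increase} = \frac{60 - 44}{44} = \frac{16}{44} \approx 0.364 \approx 36\%
```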

Vendor distributions are easier to integrate, include support, and are optimized for specific use cases. Maintaining a custom distribution requires engineering resources, expertise, testing infrastructure, and documentation, and most teams have better uses for that capacity.

Organizations prioritizing vendor independence still choose custom distributions. The broader market prefers convenience.

The underlying shift is that data collection is becoming commoditized. OTel handles the mechanics in a vendor-neutral way. The value and the differentiation lie in what happens after ingestion: AI-powered insights, investigation acceleration, and outcome optimization. That's where the interesting problems are now.

Observability vendor selection in 2026

The convergence of GenAI and OpenTelemetry adoption creates new criteria for evaluating observability vendors. Organizations must assess:

GenAI integration: Integrated capabilities from vendors beat custom integrations for time-to-value. A 75% projected adoption rate makes this a standard criterion.

Agentic AI roadmap: Demand increases with maturity. Verify guardrails and human-in-the-loop controls before committing.

OTel support: 89% of production users consider compliance at least very important, which includes full specification, semantic conventions, and native ingestion.

LLM observability: 85% plan for this; token tracking, prompt management, and cost attribution differentiate vendors.

Security: 61% cite security as the primary concern when implementing GenAI. Vendors need robust controls and transparency.

A brave new world for observability in 2026

GenAI and OpenTelemetry are moving from emerging to foundational. GenAI is projected to reach 98% adoption within two years. OTel grows slowly but steadily, gaining strategic importance as implementations mature.

Vendor selection criteria are shifting. Integrated GenAI, comprehensive OpenTelemetry support, and LLM observability features become requirements for observability platforms. Vendors invested in these areas are positioned well; the rest will face a disadvantage. Evaluate your solutions against these new requirements because delay creates risk, and the industry isn't waiting.

For an overview of how observability leaders are managing strategic initiatives around observability maturity, cost management, and maximizing observability ROI, read this companion blog. Or download the full report: The Landscape of Observability in 2026: Balancing Cost and Innovation.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, and associated marks are trademarks, logos or registered trademarks of Elasticsearch B.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.