
You can now use Elasticsearch Query Language (ES|QL) for vector search! ES|QL can retrieve, filter, and score dense_vector fields. Use k-nearest neighbors (KNN) queries for fast, approximate nearest neighbors search at scale. Use vector similarity functions for exact search and custom scoring.

KNN is simpler to use in ES|QL than in the Query DSL. Prefilters and the number of results to retrieve per shard are automatically inferred from the ES|QL query.

What is vector search?

Modern search is no longer limited to exact keyword matching. Users expect systems to understand meaning, not just text. This is where vector embeddings and Elasticsearch’s dense_vector field type come in.

The easiest way to use vector search in Elasticsearch is to use the semantic_text field type. It allows you to automatically generate text embeddings, perform semantic search, and handle chunking. However, you may want to use dense_vector instead when:

- You’re already using dense_vector fields.

- You’re using non-textual data, like images, sound, or video.

- You need to generate embeddings separately from ingestion into Elasticsearch.

- You need to do custom or advanced scoring.

- You want to perform exact nearest neighbors search.

A dense_vector stores numerical embeddings produced by machine learning models. These embeddings capture semantic similarity: documents with similar meaning have vectors that are close to each other in high-dimensional space.

With vectors, you can build:

- Semantic text search, for finding documents related to a question.

- Retrieval-augmented generation (RAG).

- Recommendation systems.

ES|QL brings the power of a piped query experience to Elasticsearch. Adding first-class support for dense_vector fields means you can now retrieve, filter, score, and search using vectors directly in ES|QL, alongside your text and non-text data.

In this post, we’ll walk through how to work with dense_vector fields in ES|QL, from basic inspection to approximate and exact similarity search, and how to use vector search as part of hybrid search strategies.

The basics: Retrieving vector data

Assume you have an index with a mapping similar to:

You can retrieve vector fields just like any other column:

Keep in mind that vectors can be large. For exploration and debugging, it may be useful to retrieve vector data, but in production you should avoid returning full vector data unless it's really necessary.

You can use familiar ES|QL constructs to check how many rows have vector information:
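As a rough sketch of both queries, the Python snippet below holds them as strings and wraps them in a request body for the ES|QL query API. The index name `my-index` is hypothetical, the `title` and `content_vector` field names are borrowed from the examples later in this post, and the snippet assumes that `IS NOT NULL` can be applied to a dense_vector column.

```python
# Hypothetical index name; field names are taken from the examples in this post.
INDEX = "my-index"

# Retrieve a handful of rows, including the vector column, for inspection.
retrieve_query = f"""
FROM {INDEX}
| KEEP title, content_vector
| LIMIT 5
""".strip()

# Count the rows that have a vector.
# Assumption: IS NOT NULL works on dense_vector columns.
count_query = f"""
FROM {INDEX}
| WHERE content_vector IS NOT NULL
| STATS with_vectors = COUNT(*)
""".strip()


def esql_request(query: str) -> dict:
    """Build the JSON body for the ES|QL query API (POST /_query)."""
    return {"query": query}


print(esql_request(retrieve_query)["query"])
print(esql_request(count_query)["query"])
```

The small `LIMIT` in the first query keeps the response manageable, since each row carries a full vector.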

Approximate search using KNN

Vector search means finding the most similar vectors to a given query vector.

For large datasets, the most common approach is approximate nearest neighbor (ANN) search. ANN uses data structures that make finding similar vectors fast, but it doesn’t guarantee that every vector will be considered.

ES|QL exposes approximate search via the KNN function:

This simple example:

- Searches over the content_vector field.

- Uses a dense vector query [0.12, -0.03, 0.98, ...] to search for vectors similar to it.

- Sorts the results by score, using the METADATA _score attribute that is populated by the `KNN` function.

- Keeps just the title and score, as the content_vector field is not worth returning and we can avoid loading its contents.

- Retrieves the top 10 elements by using LIMIT. This automatically sets k to 10 in the KNN function.
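Putting the steps listed above together, a query of this shape might be assembled as in the following Python sketch. The index name `my-index` and the helper `knn_query` are hypothetical, and the three-element vector is a toy value: a real query vector must match the dimensions of the field.

```python
def knn_query(index: str, field: str, query_vector: list[float], limit: int = 10) -> str:
    """Assemble an ES|QL KNN query string following the steps described above."""
    vector_literal = "[" + ", ".join(str(x) for x in query_vector) + "]"
    return "\n".join([
        f"FROM {index} METADATA _score",            # expose _score to the pipeline
        f"| WHERE KNN({field}, {vector_literal})",  # approximate search on the vector field
        "| SORT _score DESC",                       # most similar first
        "| KEEP title, _score",                     # avoid loading the vector itself
        f"| LIMIT {limit}",                         # also determines k for KNN
    ])


# Toy vector for illustration only.
print(knn_query("my-index", "content_vector", [0.12, -0.03, 0.98]))
```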

The KNN function can be further customized by using options:

See the KNN function named parameters for a complete description of the available parameters.

Combining KNN with filters

You can narrow down the candidate set for the vector search:

Of course, you can use any other WHERE clauses that filter the results or include KNN as part of a filter expression:

KNN made simple

KNN is simpler to use in ES|QL. You won't have to specify prefilters or k for your query explicitly.

Prefilters are the way to ensure that a KNN query returns as many results as expected. Prefilters are applied on the KNN search itself, instead of being applied after the query.

Keep in mind that KNN returns the top k results it's been asked for. If filters are applied after the KNN query, some of the results it returned may be filtered out. If that happens, we’ll retrieve fewer results than expected.
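The difference is easy to see in a small simulation. The Python sketch below is not how Elasticsearch implements KNN: it uses a toy corpus with made-up, precomputed similarity values and a plain sort in place of an approximate index, purely to show why filtering after the top-k step can return fewer rows.

```python
# Toy corpus: each document carries a made-up similarity to the query vector.
docs = [
    {"id": 1, "lang": "en", "similarity": 0.99},
    {"id": 2, "lang": "fr", "similarity": 0.97},
    {"id": 3, "lang": "fr", "similarity": 0.95},
    {"id": 4, "lang": "en", "similarity": 0.60},
    {"id": 5, "lang": "en", "similarity": 0.10},
]


def top_k(candidates, k):
    """Return the k most similar candidates (stand-in for the KNN step)."""
    return sorted(candidates, key=lambda d: d["similarity"], reverse=True)[:k]


def is_english(doc):
    return doc["lang"] == "en"


k = 3

# Post-filter: take the top k first, then drop rows that fail the filter.
post_filtered = [d for d in top_k(docs, k) if is_english(d)]

# Prefilter: restrict the candidates first, then take the top k.
pre_filtered = top_k([d for d in docs if is_english(d)], k)

print([d["id"] for d in post_filtered])  # [1]
print([d["id"] for d in pre_filtered])   # [1, 4, 5]
```

With the filter applied afterwards, two of the three nearest neighbors are discarded and only one row survives. With the prefilter, all three requested rows are returned.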

The Query DSL knn query contains a section for specifying prefilters:

You don't need to care about prefilters when using KNN in ES|QL. All filters are applied as prefilters for the KNN function, so there’s no need to specify them as a specific option or command; just use `WHERE` and let ES|QL do it for you!

KNN also allows specifying the number of results to retrieve per shard; that is, the k parameter. Similar to the Query DSL, k defaults to the LIMIT specified in your query.

Exact search using vector similarity functions

KNN is designed to be fast, and that makes it ideal for large datasets (hundreds of thousands or millions of vectors) and latency-sensitive applications. The trade-off is that results are approximate, though usually very accurate.

Sometimes you want exact similarity computation instead of approximate search, for example:

- When your dataset is small.

- When the filters used in the query are very restrictive and select a small subset of your dataset.

ES|QL provides the following vector similarity functions:

Using these functions, you can calculate the similarity of your query vector with all the vectors your query retrieves.

The following query uses the same mapping as our KNN example, above, but does exact search using cosine similarity:

This query:

- Computes the similarity using the V_COSINE vector similarity function.

- Sorts on the computed similarity.

- Keeps the top 10 similar results.
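To make those three steps concrete, here is a pure-Python sketch of exact search: score every row, sort, and keep the top k. It illustrates the technique rather than the ES|QL implementation. The rows, titles, and the helper names are hypothetical, and it computes plain cosine similarity, which may be scaled differently from the value V_COSINE returns.

```python
import math


def cosine_similarity(a, b):
    """Plain cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exact_top_k(rows, query_vector, k):
    """Score every row (no approximation), sort by similarity, keep the top k."""
    scored = [
        (row["title"], cosine_similarity(row["content_vector"], query_vector))
        for row in rows
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical rows with toy three-dimensional vectors.
rows = [
    {"title": "a", "content_vector": [1.0, 0.0, 0.0]},
    {"title": "b", "content_vector": [0.0, 1.0, 0.0]},
    {"title": "c", "content_vector": [0.7, 0.7, 0.0]},
]

for title, similarity in exact_top_k(rows, [1.0, 0.1, 0.0], k=2):
    print(title, round(similarity, 3))
```

Because every row is scored, the cost grows linearly with the number of rows the query retrieves, which is why this approach suits small datasets or very restrictive filters.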

Semantic search

When doing semantic search, you'll be trying to match a text query to your vectors. Of course, you can obtain the query vector by first calculating the embeddings yourself and then supplying it directly to your vector search.

But it would be much simpler to allow Elasticsearch to calculate the embeddings for you by using the TEXT_EMBEDDING function:

TEXT_EMBEDDING uses an already existing inference endpoint to automatically calculate the embeddings and use them in your query.

Hybrid search

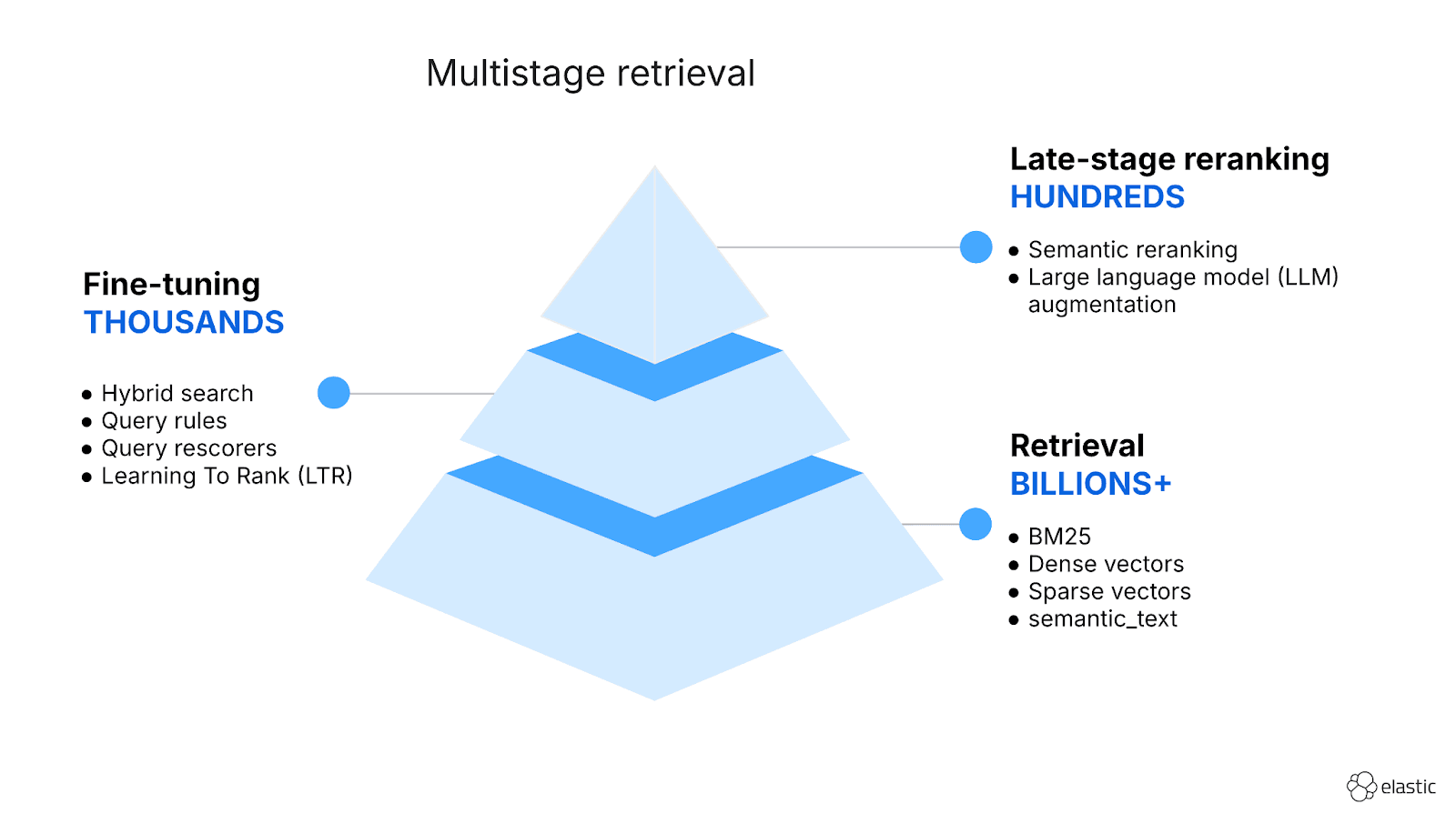

Most searches don't rely just on vector data; they need to be combined with lexical search, as well, so we have the best of both worlds:

- Lexical information is great for exact search of words and synonyms and provides a strong signal that users are looking for specific terms.

- Vectors capture meaning and intention, using similar phrases or terms that are not lexically related.

Combining vector search and lexical search is best done using FORK and FUSE:

The query above:

- Uses FORK to run two queries, one lexical and one vector-based.

- Uses FUSE to mix together the query results by using reciprocal rank fusion (RRF) by default.

This allows total control of the queries you want to perform, how many results to retrieve from each one, and how to combine the results together.
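For intuition about what reciprocal rank fusion does with two result lists, here is a minimal Python sketch of the general technique. It is not the FUSE implementation: the document IDs are made up, and the rank constant of 60 is an assumption based on the value commonly used for RRF.

```python
def reciprocal_rank_fusion(result_lists, rank_constant=60):
    """Fuse ranked lists of document IDs.

    Each document scores 1 / (rank_constant + rank) for every list it
    appears in, with ranks starting at 1. Higher total is better.
    """
    scores = {}
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (rank_constant + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results from a lexical branch and a vector branch.
lexical = ["doc_a", "doc_b", "doc_c"]
vector = ["doc_c", "doc_a", "doc_d"]

print(reciprocal_rank_fusion([lexical, vector]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Documents that appear in both lists rise to the top, because RRF only looks at ranks and so does not need the lexical and vector scores to be on the same scale.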

Check out our multistage retrieval blog post for more details on how modern search works and how easy it is to implement via ES|QL.

Custom scoring

Calculating custom scoring is easy using ES|QL! Just use the _score metadata field for calculating your custom score:

If you're using exact search, you already have an evaluation for the vector similarity that you can fine-tune:

Compared to the Query DSL script_score, this is a much simpler and more iterative approach and fits perfectly into the ES|QL execution flow.

Using query parameters

When using a query vector, you can specify it directly on the query as in our previous examples. But you may have noticed that we're using ellipses (...) to signal that there's more data to come.

Dense vectors are usually high dimensional, with hundreds or thousands of dimensions. Pasting your query vector into the query itself can make the query difficult to understand or reason about, as you'll be seeing thousands of numeric values on your screen.

Remember that you can use ES|QL query parameters for supplying parameters to your query:

This helps to keep your query and parameters separated, so you can focus on the query logic and not on specific parameters that get in your way.

Using query parameters for vectors is also more performant: the vector is parsed by the request parser, which is faster than the ES|QL parser.
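A request that keeps the vector out of the query text might be built like the Python sketch below. The index name `my-index`, the parameter name `query_vector`, and the helper `build_esql_request` are hypothetical, the three-element vector is a toy value, and the body follows the named-parameter form of the ES|QL query API (`params` as a list of single-entry objects, referenced as `?name` in the query).

```python
import json


def build_esql_request(query_vector):
    """Build an ES|QL request body that passes the vector as a named parameter."""
    query = "\n".join([
        "FROM my-index METADATA _score",
        "| WHERE KNN(content_vector, ?query_vector)",  # placeholder, not the literal vector
        "| SORT _score DESC",
        "| KEEP title, _score",
        "| LIMIT 10",
    ])
    return {"query": query, "params": [{"query_vector": query_vector}]}


# Toy vector for illustration only; real vectors must match the field's dimensions.
body = build_esql_request([0.12, -0.03, 0.98])
print(json.dumps(body, indent=2))
```

The query text stays the same for every search, and only the `params` entry changes.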

Conclusion

ES|QL doesn’t just support vector search; it makes it a natural part of how you query your data. It allows you to use a single, powerful syntax for text, vectors, and everything in between, including:

- Vector search, both approximate and exact.

- Semantic search, using text for search over vector data.

- Hybrid search, combining the best of text and vector search.

- Custom vector scoring, using EVAL and ES|QL constructs.

Vector search is easier in ES|QL than in the Query DSL, because ES|QL infers prefilters and parameters and integrates vector search with its expressive, feature-rich expressions.

Defining KNN as part of a query pipeline for multistage retrieval is just another piece in the query; you can keep using filters, combine with other text functions for hybrid search, and apply reranking or query completion on top of your vector results.

We’ll keep adding vector functions for performing vector arithmetic and aggregations over dense vectors, so you can use the full power of ES|QL to manipulate your vector data.

Happy (vector) searching!