Retrieval Augmented Generation (RAG) gives organisations an opportunity to adopt Large Language Models (LLMs) by applying Generative AI (GenAI) capabilities to their own proprietary data. Using RAG inherently reduces risk, because a controlled dataset forms the foundation of the model's answer. The model does not have to rely on training data that may be unreliable, irrelevant to our use case or simply incorrect. However, RAG pipelines must be managed continually to ensure that answers are grounded and accurate. This blog explores key considerations for deploying RAG capabilities in production and demonstrates how the Elastic Search AI platform provides the insights you need to run your RAG pipeline with peace of mind.

Defining a RAG pipeline

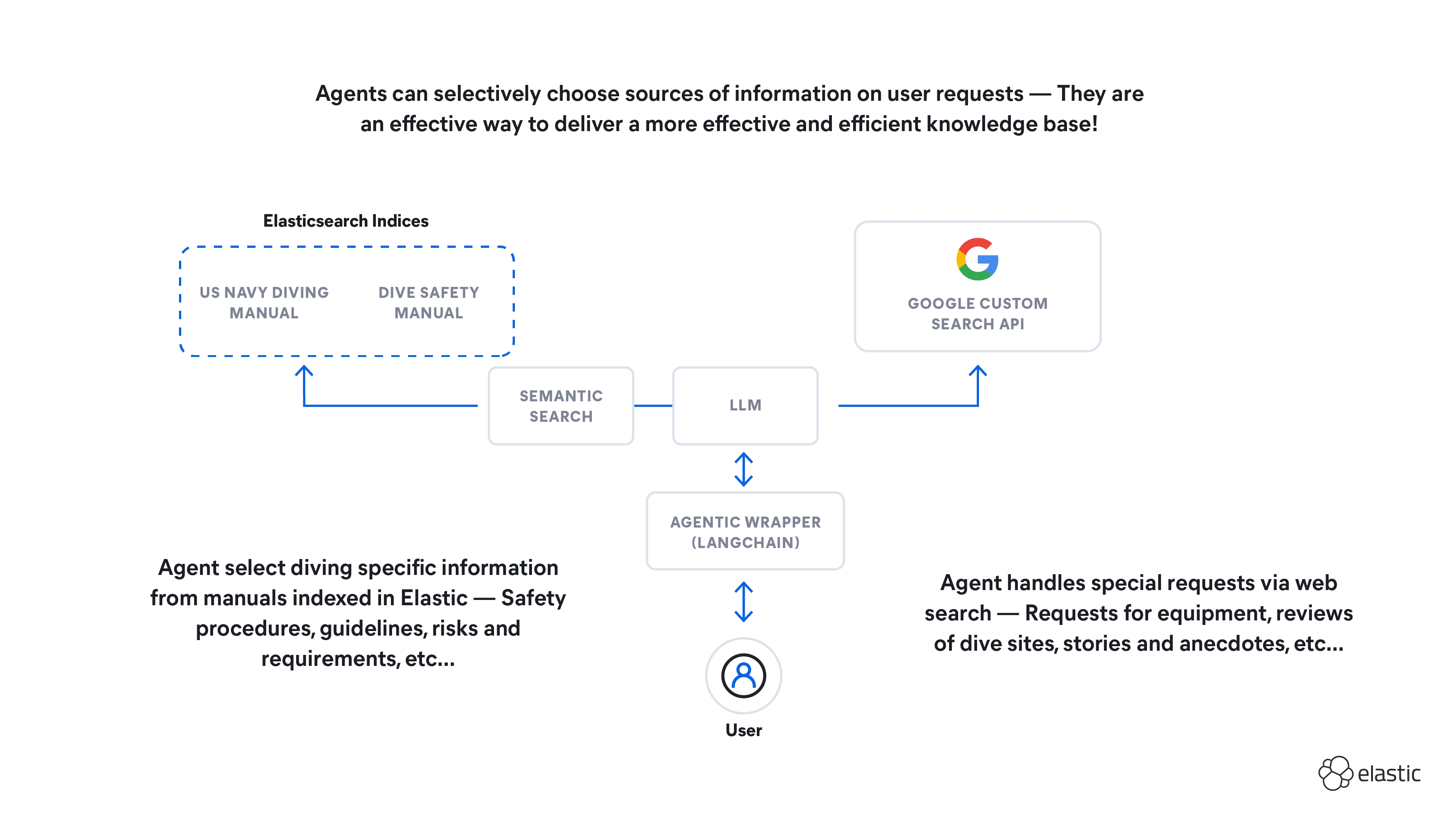

In a typical RAG implementation, a user-provided question is sent to an LLM together with an accompanying context. The context must provide the relevant information that will inform the LLM's answer. It is built from search results, which are obtained by evaluating the user question against the contents of a vector store so that the results match the question semantically. On the Elastic Search AI platform, the context can also come from a hybrid search that combines semantic search with lexical search, document filtering and access control rules. Running a hybrid search as one discrete operation lets developers combine results from a semantic axis and a lexical axis, which makes the LLM's context as relevant as possible. Filters and access control rules also restrict the corpus of data that is searched so that it complies with RBAC rules. This improves performance and maintains data security.
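
To make the "one discrete operation" concrete, here is a minimal sketch of a single Elasticsearch search request that fuses a lexical `match` query and a semantic `sparse_vector` query with reciprocal rank fusion (RRF), while an access-control filter narrows the corpus. It assumes a cluster version that supports retrievers and an ELSER inference endpoint. The field names (`content`, `content_embedding`, `allowed_groups`) and the endpoint ID `my-elser-endpoint` are hypothetical placeholders.

```python
from typing import Any


def build_hybrid_search_body(
    question: str,
    allowed_groups: list[str],
    text_field: str = "content",              # hypothetical text field
    sparse_field: str = "content_embedding",  # hypothetical sparse_vector field
    inference_id: str = "my-elser-endpoint",  # hypothetical ELSER inference endpoint
    size: int = 5,
) -> dict[str, Any]:
    """Build one search request that fuses lexical and semantic results with RRF."""
    # Access control expressed as a filter on a hypothetical "allowed_groups" field.
    # Filters narrow the searched corpus but do not affect relevance scores.
    acl_filter = {"terms": {"allowed_groups": allowed_groups}}
    lexical = {
        "standard": {
            "query": {"match": {text_field: question}},
            "filter": acl_filter,
        }
    }
    semantic = {
        "standard": {
            "query": {
                "sparse_vector": {
                    "field": sparse_field,
                    "inference_id": inference_id,
                    "query": question,
                }
            },
            "filter": acl_filter,
        }
    }
    # Reciprocal rank fusion merges the two ranked lists into one result set.
    return {
        "size": size,
        "retriever": {"rrf": {"retrievers": [lexical, semantic], "rank_window_size": 50}},
    }
```

With the official Python client, a body like this could be sent as `client.search(index="hr-policies", **body)`. This assumes a client version that accepts the `retriever` parameter, and the index name is also hypothetical.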

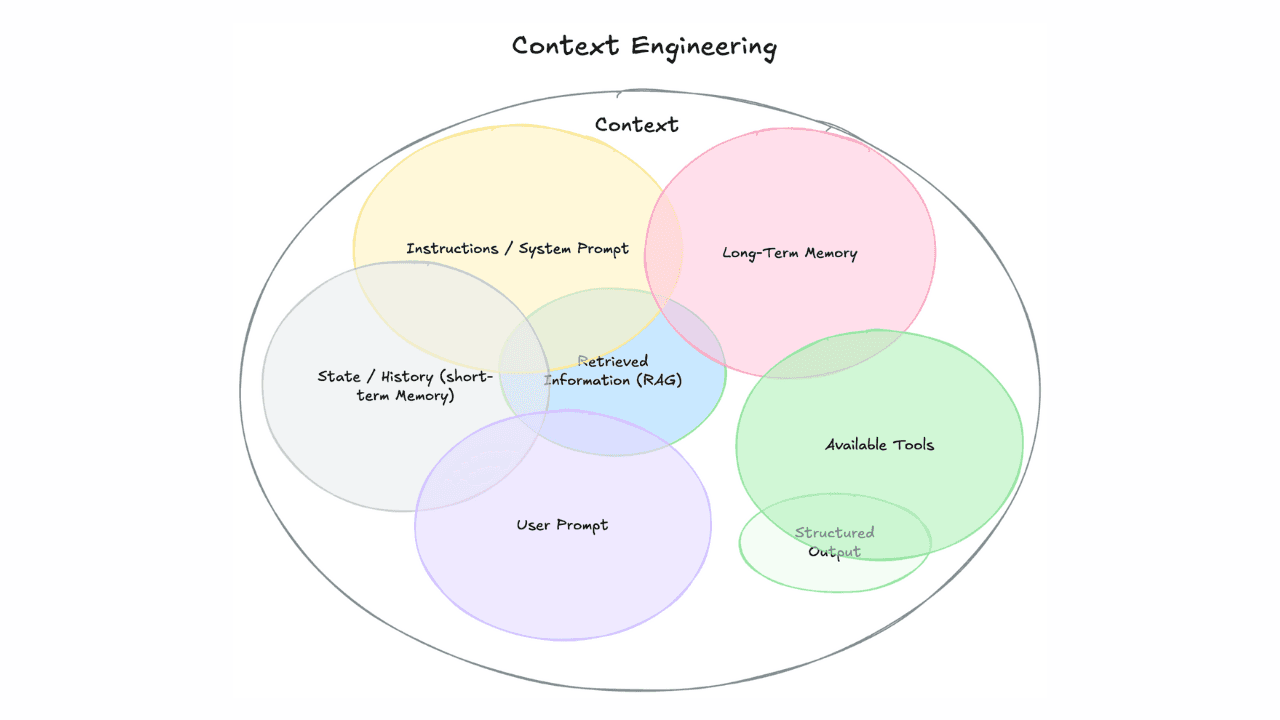

The LLM receives the question, interprets the context, and then responds with an answer. That answer is often either a summary of the context or a synthesis of relevant statements from it. The combination of question and context is referred to as a prompt. The prompt also gives the LLM instructions about its role, along with guardrails that define how it should answer. A developer creates an artefact known as a prompt template, which combines all of these values in a consistent structure and can be reused and fine-tuned to ensure the correct results.
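
A prompt template can be as simple as a string with placeholders for the context and the question. The sketch below uses Python's `string.Template`. The role and guardrail wording and the `render_prompt` helper are hypothetical examples, not a recommended prompt.

```python
from string import Template

# Hypothetical template: role, guardrails, context and question in a fixed order.
RAG_PROMPT = Template(
    "You are an assistant that answers questions about internal company policy.\n"
    "Answer only from the context below. If the context does not contain the answer, "
    "say that you don't know. Cite the source of each fact.\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)


def render_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user question into a single prompt."""
    # A visible separator helps the LLM (and a human debugger) tell passages apart.
    context = "\n---\n".join(passages)
    return RAG_PROMPT.substitute(context=context, question=question)
```

Keeping the template separate from the code that fills it means the wording can be versioned and fine-tuned without touching the retrieval logic.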

Thus, a RAG implementation could be seen as a pipeline consisting of the following elements:

- User-provided question

- Search-generated context

- Prompt template

- LLM-generated answer
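
Viewed as code, these elements form a chain of stages that starts with the question. The minimal sketch below assumes that the search backend, the prompt template and the LLM client are supplied as plain callables. The names `run_rag` and `RagResult` are hypothetical. Returning every intermediate value, not just the answer, keeps the context and prompt available for the evaluation and logging discussed below.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RagResult:
    """Everything one pass through the pipeline produced, kept together for logging."""
    question: str
    context: list[str]
    prompt: str
    answer: str


def run_rag(
    question: str,
    search: Callable[[str], list[str]],               # question -> retrieved passages
    build_prompt: Callable[[str, list[str]], str],    # (question, passages) -> prompt
    llm: Callable[[str], str],                        # prompt -> generated answer
) -> RagResult:
    """Run the pipeline elements in order and return all intermediate values."""
    context = search(question)
    prompt = build_prompt(question, context)
    answer = llm(prompt)
    return RagResult(question=question, context=context, prompt=prompt, answer=answer)
```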

Production considerations

When implementing a RAG pipeline, you need to take a number of operational and performance considerations into account. These ensure that the experience you deliver lives up to the expectations of both end users and the teams building LLM capabilities into their applications.

- Metrics need to be tracked, as with any application, to ensure that the RAG pipeline meets its service level objectives (SLOs), as measured by its service level indicators (SLIs).

- Questions and answers should not be discarded as they contain valuable information about system behaviour and user preferences.

- Costs should be tracked and reviewed continually.

- The RAG pipeline needs to be evaluated consistently to ensure that LLMs are responding accurately and that they are provided with sufficient context to answer those questions.
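
Latency offers a concrete example of SLIs and SLOs. The SLI is the fraction of requests answered within a threshold, and the SLO is the target that fraction must meet. The minimal sketch below uses hypothetical function names. The 2,000 ms threshold, the 95% target and the latency values are illustrative assumptions, not recommendations.

```python
def latency_sli(latencies_ms: list[float], threshold_ms: float) -> float:
    """SLI: fraction of requests whose latency was at or below the threshold."""
    if not latencies_ms:
        return 1.0  # no traffic in the window, so no requests violated the threshold
    good = sum(1 for ms in latencies_ms if ms <= threshold_ms)
    return good / len(latencies_ms)


def meets_slo(latencies_ms: list[float], threshold_ms: float, target: float) -> bool:
    """SLO check: does the SLI reach the target (e.g. 0.95 for 95%)?"""
    return latency_sli(latencies_ms, threshold_ms) >= target


# Illustrative window of end-to-end RAG latencies (made-up numbers).
window = [800.0, 1200.0, 950.0, 3100.0, 700.0]
print(latency_sli(window, 2000.0))        # 0.8
print(meets_slo(window, 2000.0, 0.95))    # False
```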

So, how exactly can this be achieved? What patterns and practices need to be considered when bringing RAG pipelines to production? Understandably, this is a very broad subject, but we will cover these considerations at a high level to encourage further reading and exploration.

RAG performance and monitoring

When building a RAG pipeline, choosing the right prompt technique for your use case is critical. It can be the difference between truthful, valuable answers and misleading, frustrating ones. Prompt development therefore requires adequate knowledge of the underlying data. It also requires a benchmarking framework that can objectively measure LLM answers and rigorously test them against the ground truth. To choose a benchmarking framework, you need to understand not only which metrics are important, but also how those metrics should be measured. Once the pipeline is in production, logging all LLM interactions becomes mandatory, so that data points such as costs, latency and response quality can be managed and controlled.

RAG benchmarking and evaluation

We begin with benchmarking and evaluation, as these must happen before shipping to production to mitigate the risk of a poor or damaging service. A number of benchmarking frameworks are available. They are generally built around a dataset of questions and ground truth answers, drawn from the body of knowledge that will be used for RAG context. Choosing the right framework comes down to a few key factors:

- The framework should be compatible with your team's skills, your application code, and your organisational policies. Evaluation cuts across teams and systems, so the framework should fit the decisions you have already made. For example, if you have chosen to use locally hosted LLMs exclusively, the framework should accommodate that.

- You need to have the flexibility to choose the evaluation metrics that are important to you to measure.

- The generated evaluation output should be easy to integrate into your existing Observability or Security tooling. LLMs are key capabilities of the applications they are used in and should not be treated as a separate system.

These question/answer pairs represent the baseline understanding of the data and the use case, and will be used to evaluate the performance of the complete pipeline. The question set should contain the questions users are most likely to ask, or at least the types of question they would ask. The answer should not be a simple cut and paste from the document. It should be a summary of one or more facts that adequately answers the question, because this is what an LLM would likely generate in response.

The benchmark questions are passed through the RAG pipeline, which generates two outputs that inform the evaluation metrics: the LLM response and the context. In most instances, frameworks evaluate the LLM response against the benchmark answer for accuracy or correctness, and also measure the semantic similarity between the two. The answer can also be compared to the context to ensure that every statement it contains is grounded in the search results retrieved for the question. Further metrics can assess the relevance of the answer by taking the benchmark answer, the context and the question into account.
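
The comparisons described above can be sketched with simple proxies. Evaluation frameworks typically use embedding models or an LLM acting as a judge for these metrics. The hypothetical functions below only show the shape of each check:

- token-overlap F1 for correctness against the benchmark answer
- cosine similarity for semantic similarity, over embedding vectors assumed to come from whatever embedding model you use
- word containment for grounding in the context

```python
import math
import re
from collections import Counter


def _tokens(text: str) -> list[str]:
    """Lower-case alphanumeric tokens; punctuation is ignored."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(answer: str, ground_truth: str) -> float:
    """Correctness proxy: token-overlap F1 between LLM answer and benchmark answer."""
    a, g = Counter(_tokens(answer)), Counter(_tokens(ground_truth))
    overlap = sum((a & g).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(a.values())
    recall = overlap / sum(g.values())
    return 2 * precision * recall / (precision + recall)


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Semantic similarity of two embedding vectors produced by the same model."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def grounded_fraction(answer: str, context: list[str]) -> float:
    """Grounding proxy: share of answer sentences whose words all appear in the context."""
    context_vocab = set(_tokens(" ".join(context)))
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if _tokens(s)]
    if not sentences:
        return 0.0
    supported = sum(1 for s in sentences if set(_tokens(s)) <= context_vocab)
    return supported / len(sentences)
```

Word containment is deliberately crude. It flags an unsupported sentence such as a hallucinated fact, but it also penalises harmless rephrasing, which is why production frameworks use stronger models for this check.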

Although a RAG benchmark aims to measure the quality of answers for functional reasons, a pre-production benchmarking phase can also significantly reduce the risk of hallucination, bias and even training data poisoning. Because the benchmark dataset represents the ground truth, it will highlight irregularities in the LLM output.

RAG logging

It may be true that an LLM produces no log files describing how it generated content, but that does not mean you cannot log valuable details of an interaction with an LLM. Exactly what needs to be logged depends on what is important to your business, but at the very least the following data should be captured:

- The user-provided question that is driving the interaction (e.g. "How much PTO do I get per year?")

- The LLM-generated answer (e.g. "...according to the policy you are entitled to 23 days of PTO per year, Source: HR policy, page 10.")

- Timestamps for the submission to the LLM, the first response, and the completed response of the LLM.

- The latency between submission and first response

- The LLM model name, version and provider

- The LLM model temperature as configured at the time of invocation (useful for debugging hallucinations)

- The endpoint or instance (to differentiate between production and development)
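
One way to capture these fields is a structured record that is serialised to JSON and indexed as a document. The sketch below is minimal. The class name and field names are hypothetical and do not follow any fixed schema, and the values in the usage section are illustrative only.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class LlmInteractionLog:
    """One logged LLM interaction. Field names are hypothetical, not a fixed schema."""
    question: str
    answer: str
    submitted_at: datetime
    first_response_at: datetime
    completed_at: datetime
    model_name: str
    model_version: str
    model_provider: str
    temperature: float
    endpoint: str  # e.g. "production" or "development"

    def first_response_latency_ms(self) -> float:
        # Dividing one timedelta by another returns a float ratio.
        return (self.first_response_at - self.submitted_at) / timedelta(milliseconds=1)

    def to_json(self) -> str:
        """Serialise to a JSON document ready to be indexed, e.g. into Elasticsearch."""
        doc = asdict(self)
        for key in ("submitted_at", "first_response_at", "completed_at"):
            doc[key] = doc[key].isoformat()
        doc["first_response_latency_ms"] = self.first_response_latency_ms()
        return json.dumps(doc)


# Usage with illustrative values only.
t0 = datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)
log = LlmInteractionLog(
    question="How much PTO do I get per year?",
    answer="...according to the policy you are entitled to 23 days of PTO per year.",
    submitted_at=t0,
    first_response_at=t0 + timedelta(milliseconds=450),
    completed_at=t0 + timedelta(seconds=2),
    model_name="example-model",
    model_version="1.0",
    model_provider="example-provider",
    temperature=0.2,
    endpoint="production",
)
print(log.to_json())
```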

Additionally you may find the following fields useful:

- The costs of the prompt and the answer, as measured in tokens and based on unit costs provided by your model provider

- The business workflow being facilitated (customer support, engineering knowledge base, etc.)

- The prompt submitted to the LLM, with or without the context (you may prefer to ingest the context into a separate field). This can help with debugging or troubleshooting answer tone, hallucination and accuracy.

- The sentiment of the LLM response (this can be achieved by running the response through a sentiment model)
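
Token costs can be computed per interaction from the token counts reported for each call and the provider's unit prices. Model providers commonly price prompt (input) and completion (output) tokens separately. The minimal sketch below assumes that the token counts are already available. The per-1,000-token prices are hypothetical and should be replaced with your provider's actual rates.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class TokenPricing:
    """Unit costs per 1,000 tokens. Values are hypothetical; use your provider's price list."""
    input_per_1k: Decimal
    output_per_1k: Decimal


def interaction_cost(prompt_tokens: int, completion_tokens: int, pricing: TokenPricing) -> Decimal:
    """Cost of one LLM call, with prompt and completion tokens priced separately."""
    # Decimal avoids binary floating-point rounding in currency arithmetic.
    prompt_cost = pricing.input_per_1k * prompt_tokens / 1000
    completion_cost = pricing.output_per_1k * completion_tokens / 1000
    return prompt_cost + completion_cost
```

Storing this value alongside each logged interaction makes it possible to aggregate costs by model, endpoint or business workflow.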

It is crucial to log operational data even before your RAG pipeline has reached production status to remove any potential blind spots, adequately manage costs and customer satisfaction, and validate whether the results observed in benchmarking are replicated in the real world.

Creating a unified RAG data platform

A common pattern in emerging technologies is a separate tool for every nuanced requirement. Point solutions tend to address one requirement of a system, which leaves system owners or architects to find solutions for the remaining requirements. This is not inherently bad, as trying to meet too many diverse goals can result in meeting none of them fully. However, data is the most valuable asset of any system, and centralising it in one unified platform makes that asset significantly easier to use and manage. New insights and meaning emerge when data is analysed in the context of data generated by other parts of a larger system.

An Elastic Search AI framework for RAG

Elastic can handle all of the data input and output of a RAG pipeline in a single platform by serving as the foundational data layer, including the data generated by a performance and monitoring framework. Below is a functional architecture that represents how Elastic facilitates each step of a RAG pipeline.

The Elastic Search AI platform is unique in its ability to address the functional requirements of a pipeline by leveraging the following capabilities:

- Elastic is the best storage and retrieval engine for building context for GenAI. It offers the flexibility to use both lexical and semantic search within a single API endpoint, with RBAC enforcement and sophisticated reranking features for building effective context windows.

- Elastic is the de facto standard logging platform for countless projects and teams across the globe and serves as the underlying data platform for both the Elastic Observability and Elastic Security solutions. Adding LLM logging data simply taps into this native capability.

- The Elastic Search AI platform is able to host the transformer models required to vectorise content in order to make it semantically searchable without necessitating external model hosting tools and services. This is achieved by leveraging the Elastic provided ELSER sparse vector model, as well as enabling custom model imports.

- Elastic can serve as the content storage for the benchmarking setup data, consisting of questions, answers and ground truths. Evaluation frameworks can then be implemented using the same libraries as the RAG pipeline, which further minimises complexity and ensures that the testing framework is aligned to the application implementation.

- Elastic as a benchmarking data destination allows fine-grained analysis and comprehensive visualisation of results. These benchmarking results can also be combined with real-time logging data to represent overall pipeline health and identify functional deviations. Elastic's Alerting feature can then ensure that no anomaly goes unseen.

- Finally, Elastic makes it easy to aggregate commonly asked questions, which helps align the benchmarking configuration closely with real-world data. Given that benchmarking is effectively a regression activity, this step is crucial to keeping it credible and relevant. Knowing which questions users ask most frequently also enables caching of those answers for instant responses, so the LLM does not need to be called for them.
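
Aggregating frequent questions maps naturally onto an Elasticsearch `terms` aggregation over the logged question field. The minimal sketch below builds such a request and reads the buckets from the response. It also adds a small in-process answer cache for the most frequent questions. The field name `question.keyword`, the helper names and the threshold are hypothetical.

```python
from typing import Any, Optional


def top_questions_query(field: str = "question.keyword", size: int = 20) -> dict[str, Any]:
    """Terms aggregation over logged questions; the field name is hypothetical."""
    # size 0: only the aggregation buckets are needed, not the matching documents.
    return {"size": 0, "aggs": {"top_questions": {"terms": {"field": field, "size": size}}}}


def frequent_questions(response: dict[str, Any], min_count: int) -> list[str]:
    """Pick questions asked at least `min_count` times from a search response."""
    buckets = response["aggregations"]["top_questions"]["buckets"]
    return [b["key"] for b in buckets if b["doc_count"] >= min_count]


def normalise(question: str) -> str:
    """Case-fold and collapse whitespace so trivial variations share a cache key."""
    return " ".join(question.lower().split())


class AnswerCache:
    """Exact-match answer cache for frequent questions (paraphrases will miss)."""

    def __init__(self) -> None:
        self._answers: dict[str, str] = {}

    def put(self, question: str, answer: str) -> None:
        self._answers[normalise(question)] = answer

    def get(self, question: str) -> Optional[str]:
        return self._answers.get(normalise(question))
```

A `terms` aggregation on a keyword field only groups identical wording, so paraphrased questions land in separate buckets. Grouping or caching by meaning would need a semantic approach instead, such as similarity search over the logged questions.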

With all data logged within Elastic, visualisations, dashboards and alerts are simple and quick to configure, providing your teams with the visibility they need to run their pipelines smoothly and proactively.
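
An alert on functional deviation can be as simple as comparing a recent quality score from live traffic with the score the pipeline achieved in benchmarking. The sketch below assumes that you already compute a per-interaction quality score (for example a groundedness score) and store the benchmark baseline. The function name and tolerance are hypothetical. In an Elastic deployment, the same condition could be expressed as an alerting rule over the logged scores instead of in application code.

```python
from statistics import mean


def deviates_from_baseline(live_scores: list[float], baseline: float, tolerance: float) -> bool:
    """True when the mean live score falls more than `tolerance` below the benchmark baseline."""
    if not live_scores:
        # No recent data: nothing to compare, so do not raise an alert.
        return False
    return mean(live_scores) < baseline - tolerance
```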

Conclusion

A considered and thoughtful implementation of RAG can deliver a great deal of benefit, but neglecting the ongoing management lifecycle of a RAG pipeline can undo many of those benefits. The Elastic Search AI platform simplifies the deployment of robust and secure RAG pipelines, supporting the advancement of GenAI adoption while minimising the risks associated with these early-stage technologies. Be sure to sign up for a free trial of Elastic Cloud today to use the Elastic Search AI platform to take your GenAI idea to production as quickly and easily as possible.

Frequently Asked Questions

What does a RAG pipeline consist of?

A RAG pipeline consists of the following elements: a user-provided question, a search-generated context, a prompt template, and an LLM-generated answer.