
In modern observability stacks, ingesting unstructured logs from diverse data providers into platforms like Elasticsearch remains a challenge. Reliance on manually crafted parsing rules creates brittle pipelines, where even minor upstream code updates lead to parsing failures and unindexed data. This fragility is compounded by the scalability challenge: in dynamic microservices environments, the continuous addition of new services turns manual rule maintenance into an operational nightmare.

Our goal was to transition to an automated, adaptive approach capable of handling both log parsing (field extraction) and log partitioning (source identification). We hypothesized that Large Language Models (LLMs), with their inherent understanding of code syntax and semantic patterns, could automate these tasks with minimal human intervention.

We are happy to announce that this feature is already available in Streams!

Dataset description

We chose a Loghub collection of logs for PoC purposes. For our investigation, we selected representative samples from the following key areas:

- Distributed systems: We used the HDFS (Hadoop Distributed File System) and Spark datasets. These contain a mix of info, debug, and error messages typical of big data platforms.

- Server & web applications: Logs from Apache web servers and OpenSSH provided a valuable source of access, error, and security-relevant events. These are critical for monitoring web traffic and detecting potential threats.

- Operating systems: We included logs from Linux and Windows. These datasets represent the common, semi-structured system-level events that operations teams encounter daily.

- Mobile systems: To ensure our model could handle logs from mobile environments, we included the Android dataset. These logs are often verbose and capture a wide range of application and system-level activities on mobile devices.

- Supercomputers: To test performance on high-performance computing (HPC) environments, we incorporated the BGL (Blue Gene/L) dataset, which features highly structured logs with specific domain terminology.

A key advantage of the Loghub collection is that the logs are largely unsanitized and unlabeled, mirroring a noisy live production environment with a microservice architecture.

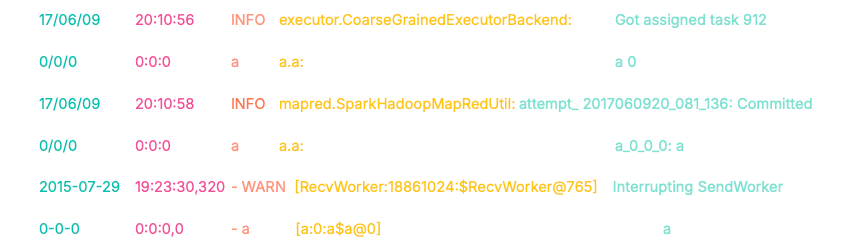

Log examples:

In addition, we set up a Kubernetes cluster running a typical web application and database to collect extra logs from the most common domain.

Example of common log fields: timestamp, log level (INFO, WARN, ERROR), source, message.

Few-shot log parsing with an LLM

Our first set of experiments focused on a fundamental question: Can an LLM reliably identify key fields and generate consistent parsing rules to extract them?

We asked a model to analyse raw log samples and generate log parsing rules in regular expression (regex) and Grok formats. Our results showed that this approach has a lot of potential, but also significant implementation challenges.

High confidence & context awareness

Initial results were promising. The LLM demonstrated a strong ability to generate parsing rules that matched the provided few-shot examples with high confidence. Beyond simple pattern matching, the model showed a capacity for log understanding: it could correctly identify and name the log source (e.g., health tracking app, Nginx web app, Mongo database).

The "Goldilocks" dilemma of input samples

Our experiments quickly surfaced a significant lack of robustness caused by extreme sensitivity to the input sample. The model's performance fluctuates wildly depending on the specific log examples included in the prompt. We observed a log similarity problem, where the sample must contain logs that are just diverse enough:

- Too homogeneous (overfitting): If the input logs are too similar, the LLM tends to overspecify. It treats variable data—such as specific Java class names in a stack trace—as static parts of the template. This results in brittle rules that cover a tiny ratio of logs and extract unusable fields.

- Too heterogeneous (confusion): Conversely, if the sample contains significant formatting variance—or worse, "trash logs" like progress bars, memory tables, or ASCII art—the model struggles to find a common denominator. It often resorts to generating complex, broken regexes or lazily over-generalizing the entire line into a single message blob field.

The context window constraint

We also encountered a context window bottleneck. When input logs were long, heterogeneous, or rich in extractable fields, the model's output often deteriorated, becoming "messy" or too long to fit into the output context window. Naturally, chunking helps in this case. By splitting logs using character-based and entity-based delimiters, we could help the model focus on extracting the main fields without being overwhelmed by noise.

The consistency & standardization gap

Even when the model successfully generated rules, we noted slight inconsistencies:

- Service naming variations: The model proposes different names for the same entity (e.g., labeling the source as "Spark," "Apache Spark," and "Spark Log Analytics" in different runs).

- Field naming variations: Field names lacked standardization (e.g., id vs. service.id vs. device.id). We normalized names using standardized Elastic field naming.

- Resolution variance: The resolution of the field extraction varied depending on how similar the input logs were to one another.

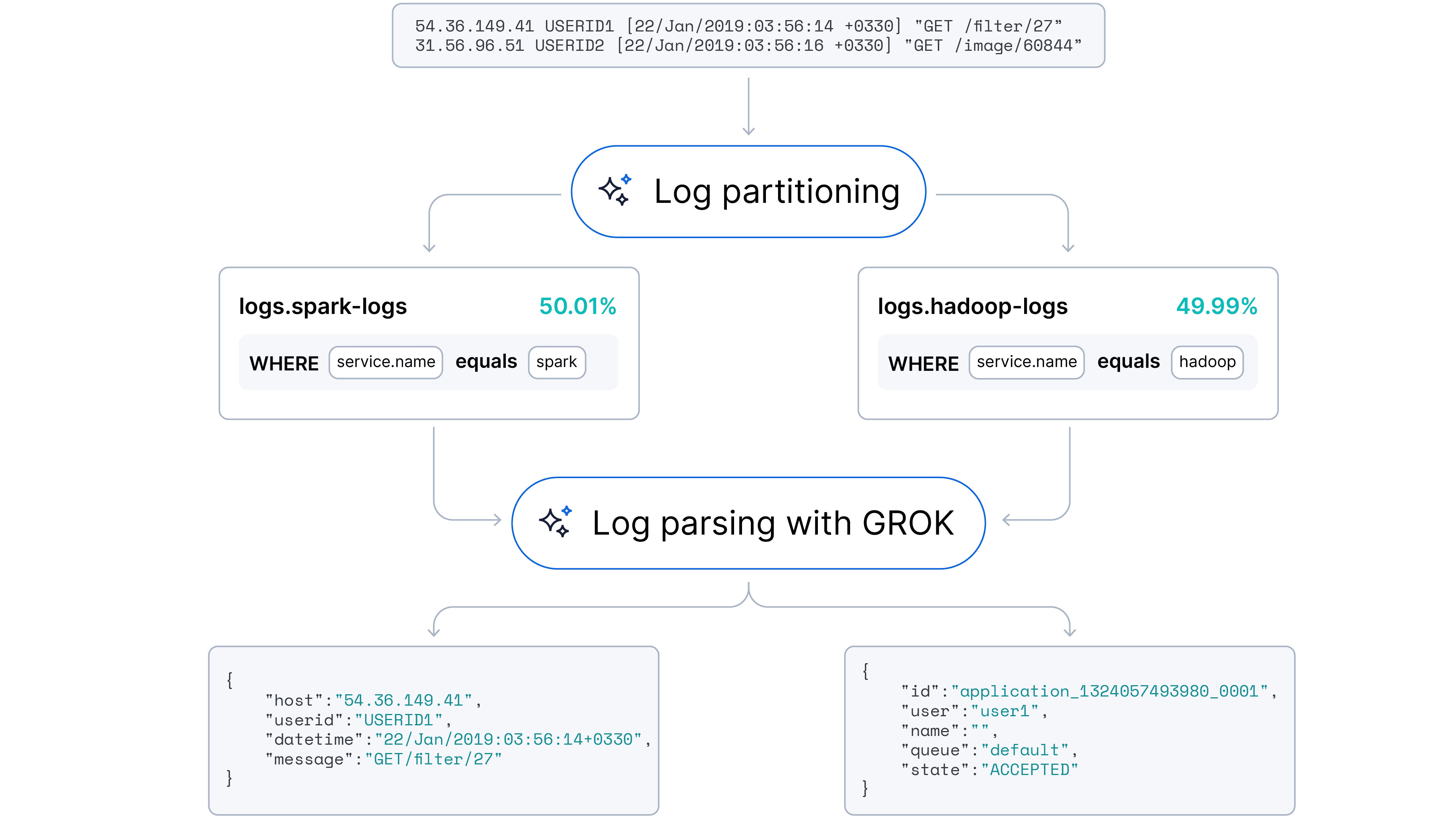

Log format fingerprint

To address the challenge of log similarity, we introduce a high-performance heuristic: log format fingerprint (LFF).

Instead of feeding raw, noisy logs directly into an LLM, we first apply a deterministic transformation to reveal the underlying structure of each message. This pre-processing step abstracts away variable data, generating a simplified "fingerprint" that allows us to group related logs.

The mapping logic is simple to ensure speed and consistency:

- Digit abstraction: Any sequence of digits (0-9) is replaced by a single ‘0’.

- Text abstraction: Any sequence of alphabetical characters with whitespace is replaced by a single ‘a’.

- Whitespace normalization: All sequences of whitespace (spaces, tabs, newlines) are collapsed into a single space.

- Symbol preservation: Punctuation and special characters (e.g., :, [, ], /) are preserved, as they are often the strongest indicators of log structure.

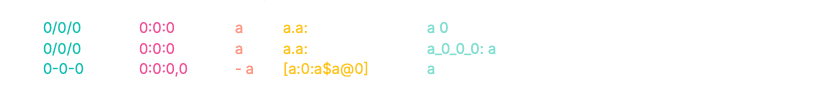
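The mapping rules above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the experiments: the function name `fingerprint` is hypothetical, and the sketch assumes that "text" means a run of ASCII letters that does not span whitespace, which is the reading consistent with the `0/0/0 0:0:0 a a.a:` prefix quoted later in this article.

```python
import re


def fingerprint(line: str) -> str:
    """Map a raw log line to a log format fingerprint (LFF).

    Hypothetical helper: the regexes below are one possible reading
    of the mapping rules, not the article's exact implementation.
    """
    # Whitespace normalization: spaces, tabs, newlines -> one space.
    out = re.sub(r"\s+", " ", line)
    # Digit abstraction: any run of digits -> a single '0'.
    out = re.sub(r"[0-9]+", "0", out)
    # Text abstraction: any run of ASCII letters -> a single 'a'.
    out = re.sub(r"[A-Za-z]+", "a", out)
    # Symbol preservation: everything else is left untouched.
    return out


# Made-up log line, for illustration only.
print(fingerprint("17/06/09 20:10:40 INFO executor.Backend: Registered signal handlers"))
```

Because each rule is a plain substitution, the same input always yields the same fingerprint, regardless of which other logs were seen before it.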


Let's look at an example of how this mapping allows us to transform the logs.

As a result, we obtain the following log masks:

Notice the fingerprints of the first two logs. Despite different timestamps, source classes, and message content, their prefixes (0/0/0 0:0:0 a a.a:) are identical. This structural alignment allows us to automatically bucket these logs into the same cluster.

The third log, however, produces a completely divergent fingerprint (0-0-0...). This allows us to algorithmically separate it from the first group before we ever invoke an LLM.
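To make the comparison concrete, here is a small sketch that fingerprints three log lines and compares their prefixes. The three sample lines are made up for illustration and are not the article's original examples; `fingerprint` is the same hypothetical helper reading of the mapping rules (letter runs to `a`, digit runs to `0`, whitespace collapsed).

```python
import re


def fingerprint(line: str) -> str:
    # Hypothetical LFF helper: whitespace -> ' ', digits -> '0', letters -> 'a'.
    out = re.sub(r"\s+", " ", line)
    out = re.sub(r"[0-9]+", "0", out)
    return re.sub(r"[A-Za-z]+", "a", out)


# Made-up sample lines, for illustration only.
samples = [
    "17/06/09 20:10:40 INFO executor.Backend: Registered signal handlers",
    "17/06/10 08:15:02 WARN storage.BlockManager: Block 42 not found",
    "2015-10-18 18:01:47,978 INFO [main] Created MRAppMaster",
]

PREFIX_LEN = 15  # same prefix length as used for partitioning in this article
prefixes = [fingerprint(s)[:PREFIX_LEN] for s in samples]

for raw, prefix in zip(samples, prefixes):
    print(f"{prefix!r:20} <- {raw}")

# The first two lines share a prefix; the third does not.
same_cluster = prefixes[0] == prefixes[1]
third_differs = prefixes[2] != prefixes[0]
```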

Bonus part: Instant implementation with ES|QL

It’s as easy as passing this query in Discover.

Query breakdown:

FROM loghub: Targets our index containing the raw log data.

EVAL pattern = …: The core mapping logic. We chain REPLACE functions to perform the abstraction (e.g., digits to '0', text to 'a', etc.) and save the result in a “pattern” field.

STATS [column1 =] expression1, … BY SUBSTRING(pattern, 0, 15): This is the clustering step. We group logs that share the first 15 characters of their pattern and create aggregated fields such as the total log count per group, the list of log data sources, the pattern prefix, and three log examples.

SORT total_count DESC | LIMIT 100: Surfaces the top 100 most frequent log patterns.
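For readers who want to trace the same steps outside Elasticsearch, the breakdown above can be mirrored in plain Python. This is a rough analogue under stated assumptions, not the ES|QL query itself: the `(datasource, message)` row shape, the `top_patterns` name, and the dictionary keys are all hypothetical, and "three log examples" is approximated by keeping the first three messages seen per group.

```python
import re
from collections import defaultdict


def fingerprint(message: str) -> str:
    # EVAL step (hypothetical reading): chain the three replacements.
    out = re.sub(r"\s+", " ", message)
    out = re.sub(r"[0-9]+", "0", out)
    return re.sub(r"[A-Za-z]+", "a", out)


def top_patterns(rows, prefix_len=15, limit=100, n_examples=3):
    """rows: iterable of (datasource, message) pairs (assumed shape)."""
    groups = defaultdict(
        lambda: {"total_count": 0, "datasources": set(), "examples": []}
    )
    for datasource, message in rows:
        # STATS ... BY step: group on the first `prefix_len` characters.
        group = groups[fingerprint(message)[:prefix_len]]
        group["total_count"] += 1
        group["datasources"].add(datasource)
        if len(group["examples"]) < n_examples:
            group["examples"].append(message)
    # SORT total_count DESC | LIMIT step.
    ranked = sorted(
        groups.items(), key=lambda item: item[1]["total_count"], reverse=True
    )
    return ranked[:limit]


# Made-up rows, for illustration only.
rows = [
    ("Spark", "17/06/09 20:10:40 INFO executor.Backend: Registered signal handlers"),
    ("Spark", "17/06/10 08:15:02 WARN storage.BlockManager: Block 42 not found"),
    ("Hadoop", "2015-10-18 18:01:47,978 INFO [main] Created MRAppMaster"),
]
ranked = top_patterns(rows)
```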

The query results on LogHub are displayed below:

As demonstrated in the visualization, this “LLM-free” approach partitions logs with high accuracy. It clustered 10 out of 16 data sources (based on LogHub labels) completely (>90%) and achieved majority clustering (>60%) in 13 out of 16 sources, all without requiring additional cleaning, preprocessing, or fine-tuning.

Log format fingerprint offers a pragmatic, high-impact alternative and addition to sophisticated ML solutions like log pattern analysis. It provides immediate insights into log relationships and effectively manages large log clusters.

- Versatility as a primitive: Thanks to its ES|QL implementation, LFF serves both as a standalone tool for fast data diagnostics and visualisations and as a building block in log analysis pipelines for high-volume use cases.

- Flexibility: LFF is easy to customize and extend to capture specific patterns, e.g., hexadecimal numbers and IP addresses.

- Deterministic stability: Unlike ML-based clustering algorithms, LFF logic is straightforward and deterministic. New incoming logs do not retroactively affect existing log clusters.

- Performance and memory: LFF requires minimal memory and no training or GPU, making it ideal for real-time, high-throughput environments.

Combining log format fingerprint with an LLM

To validate the proposed hybrid architecture, each experiment used a random 20% subset of the logs from each data source. This constraint simulates a real-world production environment where logs are processed in batches rather than as a monolithic historical dump.

The objective was to demonstrate that LFF acts as an effective compression layer. We aimed to prove that high-coverage parsing rules could be generated from small, curated samples and successfully generalized to the entire dataset.

Execution pipeline

We implemented a multi-stage pipeline that filters, clusters, and applies stratified sampling to the data before it reaches the LLM.

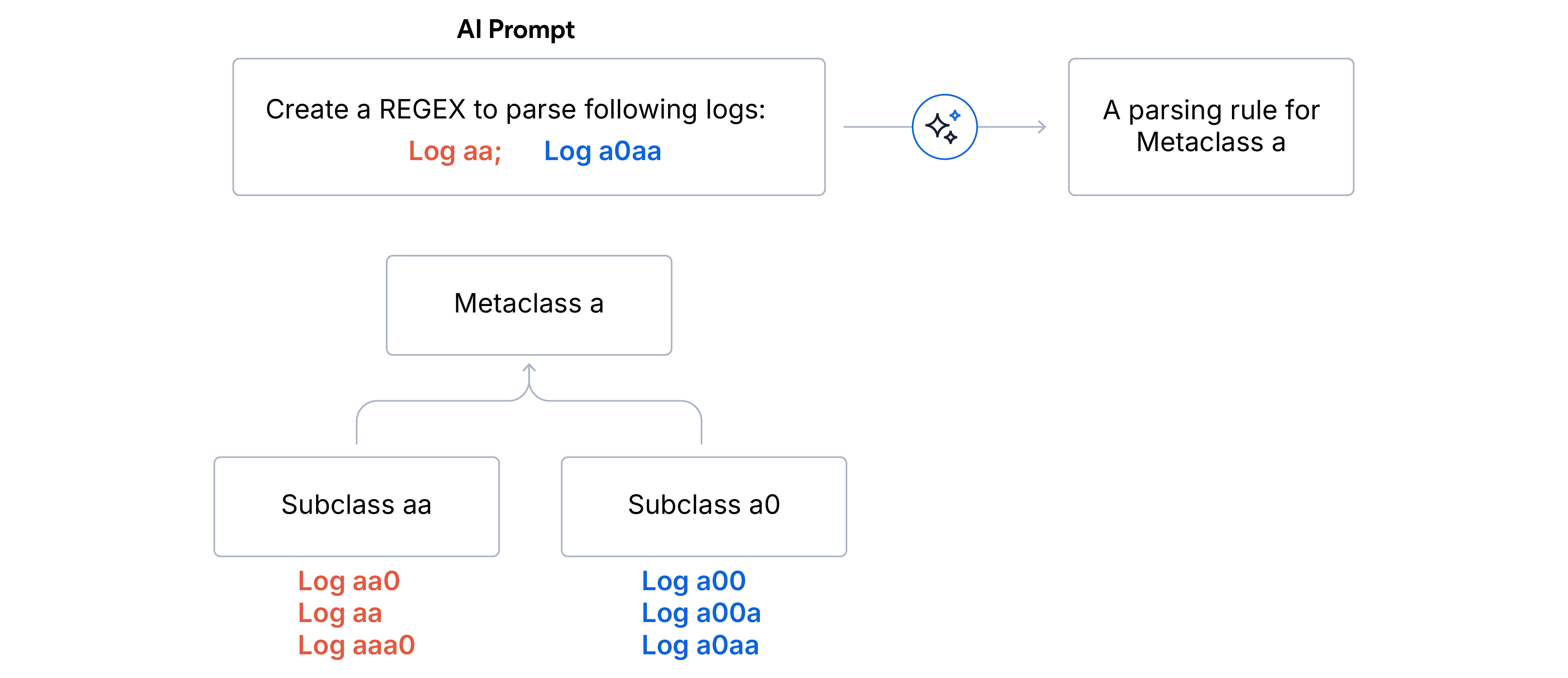

1. Two-stage hierarchical clustering

- Subclasses (exact match): Logs are aggregated by identical fingerprints. Every log in one subclass shares the exact same format structure.

- Outlier cleaning: We discard any subclass that represents less than 5% of the total log volume. This ensures the LLM focuses on the dominant signal and is not sidetracked by noise or malformed logs.

- Metaclasses (prefix match): Remaining subclasses are grouped into metaclasses by the first N characters of their format fingerprint. This grouping strategy effectively gathers lexically similar formats under a single umbrella. We chose N=5 for log parsing and N=15 for log partitioning when data sources are unknown.

2. Stratified sampling. Once the hierarchical tree is built, we construct the log sample for the LLM. The strategic goal is to maximize variance coverage while minimizing token usage.

- We select representative logs from each valid subclass within the broader metaclass.

- To handle the edge case of too many subclasses, we apply random down-sampling to fit the target window size.

3. Rule generation. Finally, for each metaclass, we prompt the LLM to generate a regex parsing rule that fits all logs in the provided sample. For our PoC, we used the GPT-4o mini model.
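The clustering and sampling stages of the pipeline can be sketched as follows. This is a simplified illustration under several assumptions: fingerprints are precomputed and passed in alongside the raw logs, the function names `build_metaclasses` and `stratified_sample` are hypothetical, the per-subclass quota is an even split of the target size, and the default `target_size=40` is only a placeholder. The LLM call itself is left out.

```python
import random
from collections import defaultdict


def build_metaclasses(logs, fingerprints, min_share=0.05, prefix_len=5):
    """Return {prefix: {fingerprint: [logs]}} after outlier cleaning."""
    # Stage 1: subclasses are logs with identical fingerprints.
    subclasses = defaultdict(list)
    for log, fp in zip(logs, fingerprints):
        subclasses[fp].append(log)

    total = len(logs)
    metaclasses = defaultdict(dict)
    for fp, members in subclasses.items():
        # Outlier cleaning: drop subclasses below 5% of the total volume.
        if len(members) / total < min_share:
            continue
        # Stage 2: metaclasses share the first `prefix_len` characters.
        metaclasses[fp[:prefix_len]][fp] = members
    return dict(metaclasses)


def stratified_sample(metaclass, target_size=40, seed=0):
    """Pick representative logs from every subclass of one metaclass."""
    rng = random.Random(seed)
    # Assumed policy: split the target size evenly across subclasses.
    quota = max(1, target_size // max(1, len(metaclass)))
    sample = []
    for fp in sorted(metaclass):
        members = metaclass[fp]
        sample.extend(rng.sample(members, min(quota, len(members))))
    # Too many subclasses: randomly down-sample to the target size.
    if len(sample) > target_size:
        sample = rng.sample(sample, target_size)
    return sample


# Made-up input, for illustration only.
logs = [f"log {i}" for i in range(100)]
fps = (
    ["0/0/0 0:0:0 a a.a: a"] * 50
    + ["0/0/0 0:0:0 a a.a: a 0"] * 45
    + ["0-0-0 a"] * 4
    + ["[a] #"] * 1
)
meta = build_metaclasses(logs, fps)
sample = stratified_sample(meta["0/0/0"], target_size=10)
```

Each returned sample would then be placed into one prompt per metaclass. Fixing the seed keeps the sample reproducible between runs, which makes it easier to compare generated rules.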

Experimental results & observations

We achieved 94% parsing accuracy and 91% partitioning accuracy on the Loghub dataset.

The confusion matrix above illustrates log partitioning results. The vertical axis represents the actual data sources, and the horizontal axis represents the predicted data sources. The heatmap intensity corresponds to log volume, with lighter tiles indicating a higher count. The diagonal alignment demonstrates the model's high fidelity in source attribution, with minimal scattering.

Insights from our performance benchmarks:

- Optimal baseline: A context window of 30–40 log samples per category proved to be the "sweet spot," consistently producing robust parsing with both regex and Grok patterns.

- Input minimisation: We pushed the input size down to 10 logs per category for regex patterns and observed only a 2% drop in parsing performance, confirming that diversity-based sampling is more critical than raw volume.