
Agentic RAG introduction

Agents are the logical next step in the application of LLMs for real-world use-cases. This article aims to introduce the concept and usage of agents for RAG workflows. All in all, agents represent an extremely exciting area, with many possibilities for ambitious applications and use-cases.

I'm hoping to cover more of those ideas in future articles. For now, let's see how Agentic RAG can be implemented using Elasticsearch as our knowledge base, and langchain as our agentic framework.

Background

The use of LLMs began with simply prompting an LLM to perform tasks such as answering questions and simple calculations.

However, deficiencies in existing model knowledge meant that LLMs could not be applied to domains which required specialist skills, such as enterprise customer servicing and business intelligence.

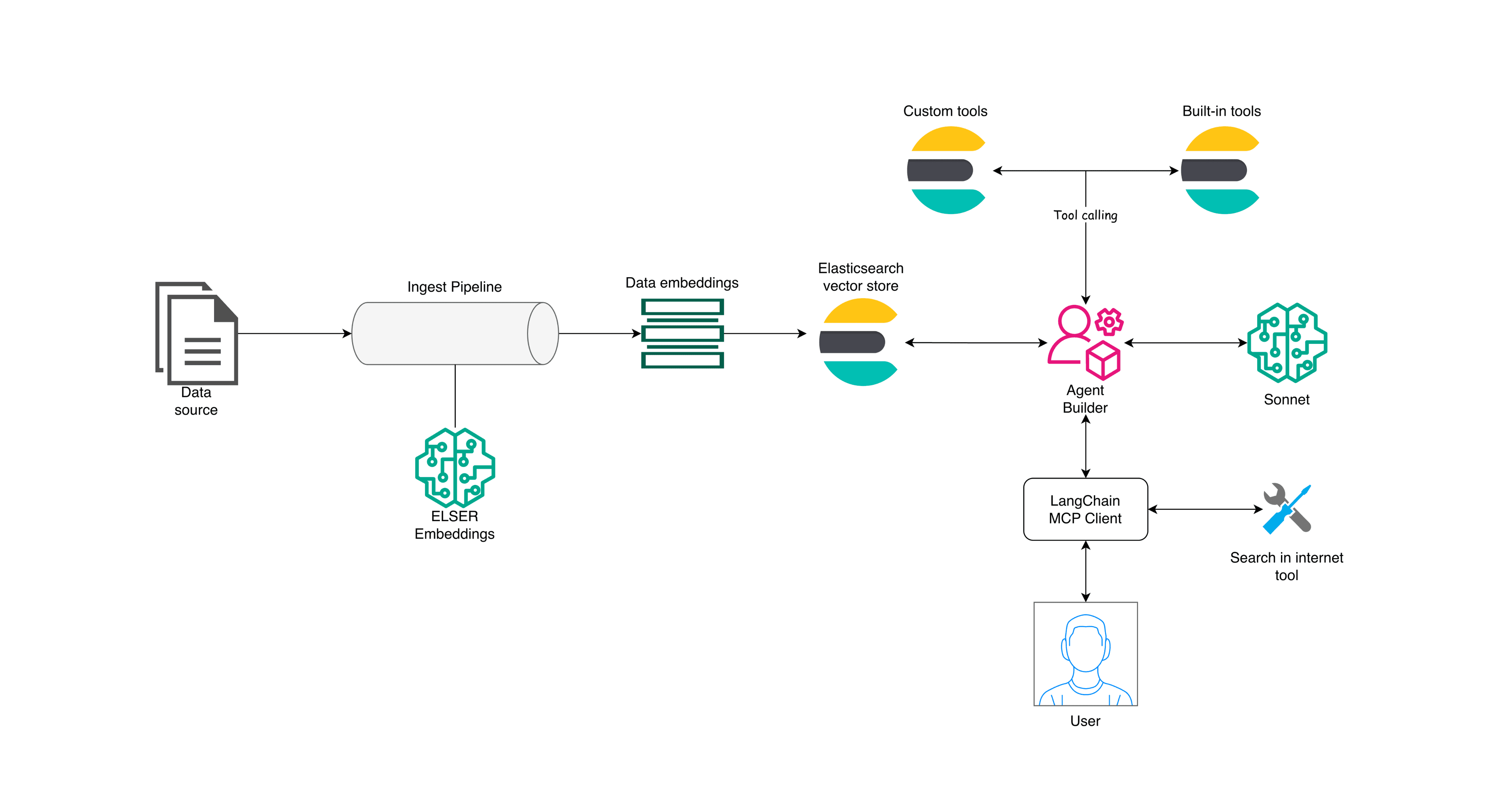

Soon, prompting transitioned to Retrieval Augmented Generation (RAG), a natural sweet spot for Elasticsearch. RAG emerged as an effective and simple way to quickly provide contextual and factual information to an LLM at query time. The alternative is lengthy and very costly retraining processes where success is far from guaranteed.

The main operational advantage of RAG is allowing for an LLM app to be fed with updated information on a near real-time basis.

Implementation is a matter of procuring a vector database, such as Elasticsearch, deploying an embedding model such as ELSER, and making a call to the search API to retrieve relevant documents.

Once documents are retrieved, they can be inserted into the LLM's prompt, and answers generated based on the content. This provides context and factuality, both of which the LLM may lack on its own.
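To make the "insert into the prompt" step concrete, here is a minimal sketch of how retrieved documents might be folded into a prompt. The function name `build_rag_prompt` and the prompt wording are my own assumptions for illustration, not part of any library.

```python
def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Insert retrieved documents into a prompt ahead of the user's question."""
    # Number each document so the model can tell the sources apart.
    context = "\n\n".join(
        f"[Document {i}]\n{doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    # Hypothetical instruction wording; tune it for your own model and use-case.
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


docs = [
    "Elasticsearch is a distributed search engine.",
    "ELSER is a sparse retrieval model.",
]
print(build_rag_prompt("What is ELSER?", docs))
```

The resulting string is what would be sent to the LLM in place of the bare question.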

The difference between simply calling an LLM, using RAG, and using Agents

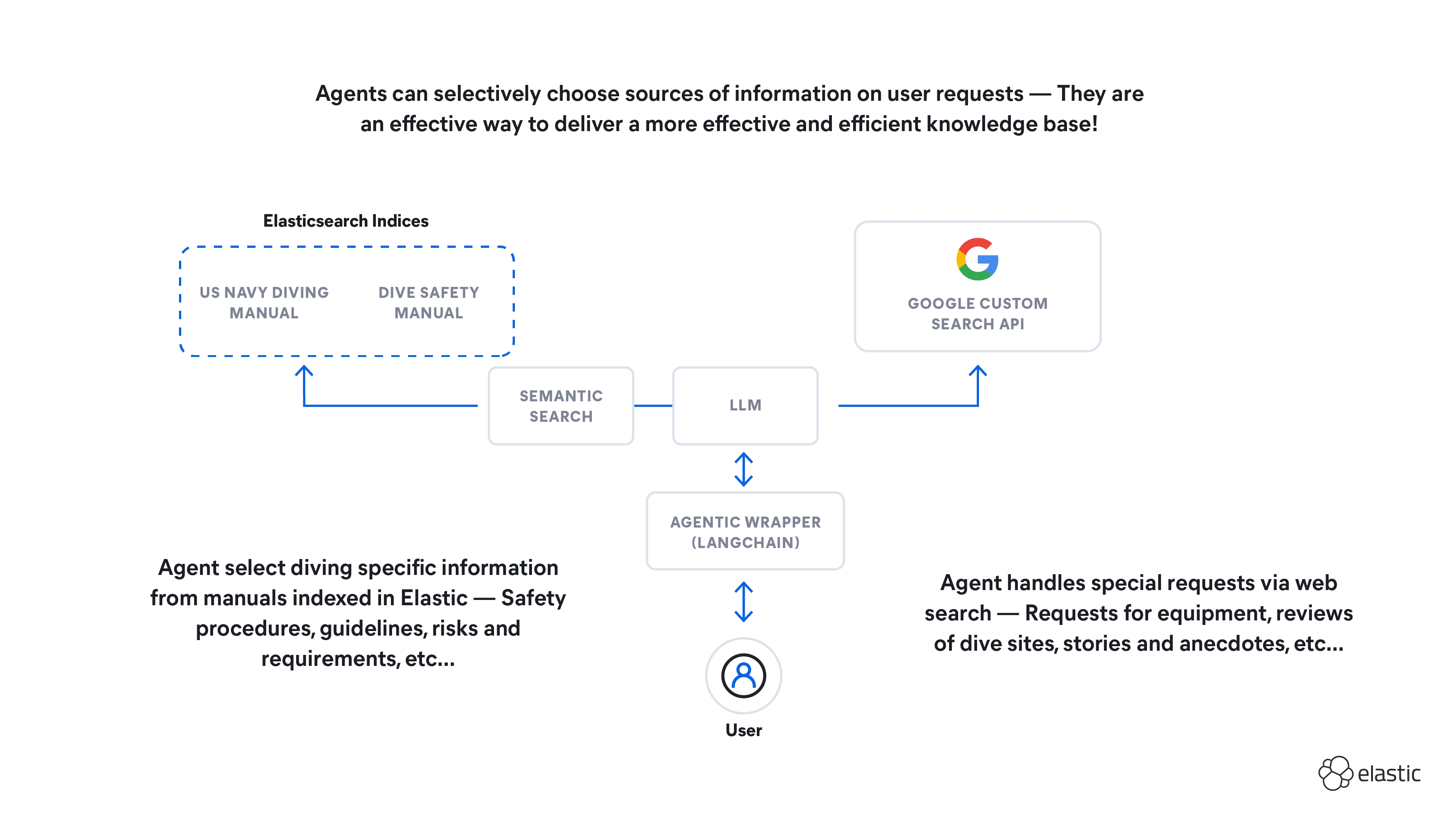

However, the standard RAG deployment model has a disadvantage: it is rigid. The LLM cannot choose which knowledge base it will draw information from. It also cannot choose to use additional tools, such as web search engine APIs like Google or Bing. It cannot check the current weather, use a calculator, or factor in the output of any tool beyond the knowledge base it has been given.

What differentiates the Agentic model is Choice.

Note on terminology

Tool usage, the term used in a Langchain context, is also known as function calling. For all intents and purposes, the terms are interchangeable: both refer to the LLM being given a set of functions or tools which it can use to complement its capabilities or affect the world. Please bear with me, as I use "Tool Usage" throughout the rest of this article.

Choice & agentic models

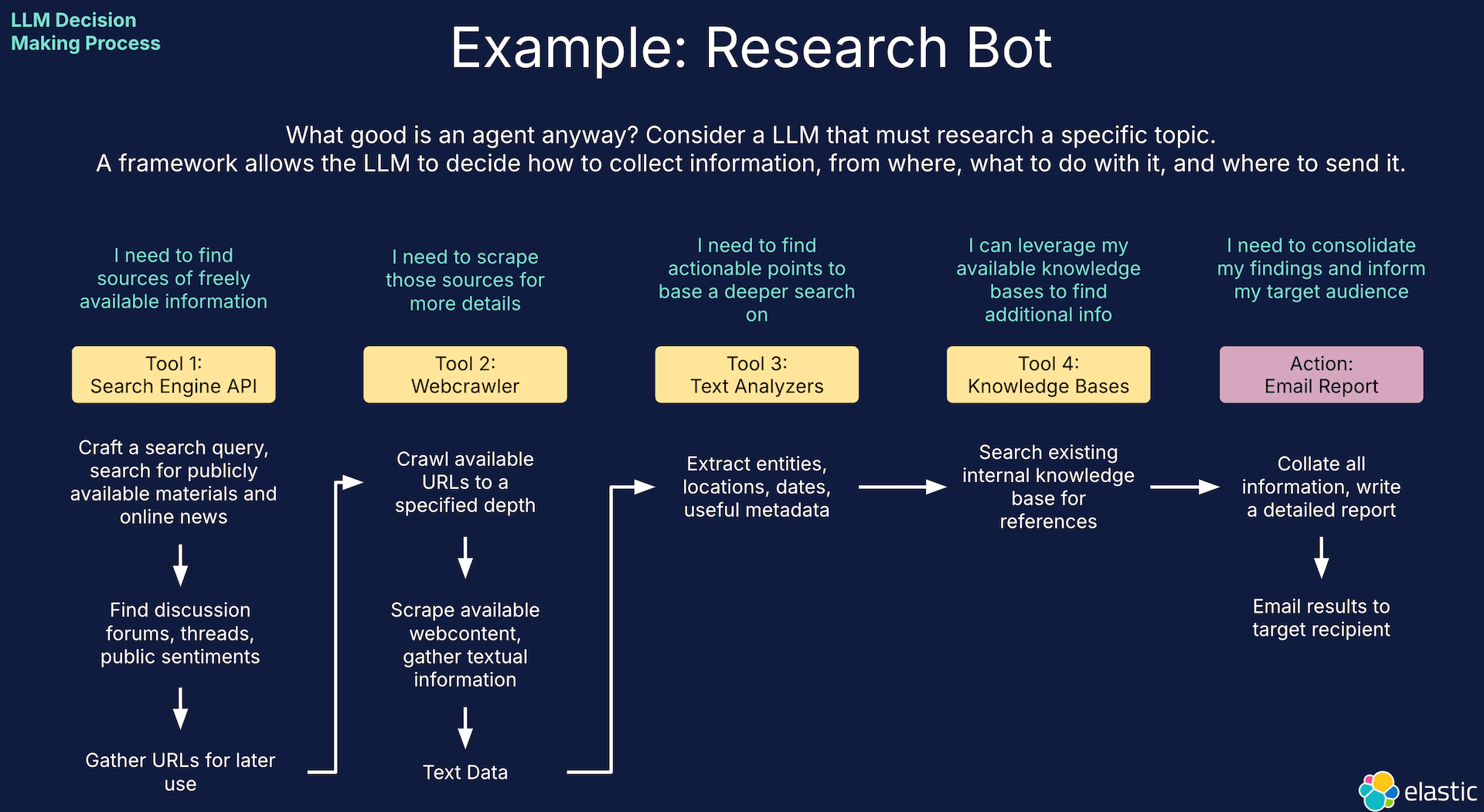

Give the LLM the ability to make decisions, and give it a set of tools. Based on the state and history of the conversation, the LLM will choose whether or not to use each tool, and it will factor the output of the tool into its responses.

These tools may be knowledge bases, calculators, or web search engines and crawlers. There is no limit to the variety. The LLM becomes capable of complex actions and tasks, beyond just generating text.
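The idea of choice can be sketched without any framework. In the toy example below, `SimpleTool`, `keyword_decider`, and `agent_step` are hypothetical names of my own, and the "decision" is a trivial keyword rule standing in for the LLM. A real agent would let the model read the tool descriptions and decide.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SimpleTool:
    name: str
    description: str  # what the LLM would read when deciding whether to use the tool
    func: Callable[[str], str]


def add_numbers(expression: str) -> str:
    """Toy calculator tool: sums numbers written as 'a + b + c'."""
    return str(sum(float(part) for part in expression.split("+")))


def keyword_decider(message: str, tools: list[SimpleTool]) -> tuple[Optional[str], str]:
    """Stand-in for the LLM's decision: use the calculator if the message has a digit."""
    if any(ch.isdigit() for ch in message):
        return "calculator", message
    return None, ""


def agent_step(message: str, tools: list[SimpleTool], decide=keyword_decider) -> str:
    """One turn: pick a tool (or none), run it, and fold its output into the reply."""
    registry = {tool.name: tool for tool in tools}
    tool_name, tool_input = decide(message, tools)
    if tool_name is None:
        return "No tool needed; answering from the conversation alone."
    observation = registry[tool_name].func(tool_input)
    return f"Used {tool_name}; observation: {observation}"


tools = [SimpleTool("calculator", "Adds numbers written as 'a + b'.", add_numbers)]
print(agent_step("2 + 3", tools))
print(agent_step("hello there", tools))
```

The important part is the shape of the loop: decide, call the tool, then use the observation. Swapping the keyword rule for an LLM call is what turns this into an agent.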

An example of an agentic flow for researching a specific topic

Let's implement a simple example of an agent. The core strength of Elastic is in our knowledge bases. So this example will focus on using a relatively large and complex knowledge base by crafting a more complicated query than just simple vector search.

Set-up

First, define a .env file in your project directory, and fill in these fields. I'm using an Azure OpenAI deployment with GPT-4o for my LLM, and an Elastic Cloud deployment for my knowledge base. My Python version is 3.12.4, and I am working on a MacBook.

You may have to install the following dependencies in your terminal.

Create a Python file called chat.py in your project directory, and paste in this snippet to initialize your LLM and connection to Elastic Cloud:

Hello world! Our first tool

With our LLM and Elastic client initialized and defined, let's do an Elastic-fied version of Hello World. We'll define a function to check the status of our connection to Elastic Cloud, and a simple agent conversational chain to call it.

Define the following function as a langchain Tool. The name and description are a crucial part of your prompt engineering. The LLM relies on them to determine whether or not to make use of the tool during conversation.
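As a rough, framework-free sketch of what such a status check could look like: the function below assumes it is handed a client object exposing a `ping()` method that returns a boolean, as the official Elasticsearch Python client does. Passing the client as an argument and the exact wording of the returned strings are my own assumptions.

```python
def es_ping(client) -> str:
    """Report whether the Elasticsearch cluster behind `client` is reachable."""
    try:
        connected = bool(client.ping())
    except Exception as exc:  # e.g. network or authentication errors
        return f"Could not reach Elasticsearch: {exc}"
    # The returned string becomes the observation the LLM reads.
    return f"Elasticsearch connected: {connected}"


class FakeClient:
    """Stand-in client so the sketch runs without a live cluster."""

    def ping(self) -> bool:
        return True


print(es_ping(FakeClient()))
```

With a real deployment you would pass your Elasticsearch client instead of `FakeClient`, then register the function as a tool with a name and description.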

Now, let's initialize a conversational memory component to keep track of the conversation, as well as our agent itself.

Finally, let's run the conversation loop with this snippet:
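For illustration, a conversation loop with a simple memory can be sketched in plain Python. Here `agent` is assumed to be any callable taking the user message and the history so far, and the "exit"/"quit" commands are my own convention. In a langchain setup, the agent executor and memory component would fill these roles.

```python
def chat_loop(agent, read_input=input, write=print):
    """Run a conversation until the user types 'exit' or 'quit'; return the history."""
    history = []  # list of (role, text) pairs acting as conversational memory
    while True:
        user_message = read_input("You: ")
        if user_message.strip().lower() in {"exit", "quit"}:
            break
        reply = agent(user_message, history)
        history.append(("user", user_message))
        history.append(("assistant", reply))
        write(f"Agent: {reply}")
    return history


def echo_agent(message, history):
    """Placeholder agent that reports how many turns it has seen so far."""
    return f"You said '{message}' (turn {len(history) // 2 + 1})"


# Scripted inputs so the sketch runs without a terminal session.
scripted = iter(["Is Elasticsearch connected?", "exit"])
chat_loop(echo_agent, read_input=lambda prompt: next(scripted))
```

Injecting `read_input` and `write` keeps the loop easy to test. In chat.py you would simply call `chat_loop(your_agent)`.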

In your terminal, run python chat.py to initialize the conversation. Here's how mine went:

When I asked if Elasticsearch was connected, the LLM used the ES Status tool, pinged my Elastic Cloud deployment, got back True, and then confirmed that Elastic Cloud was indeed connected.

Congratulations! That is a successful Hello World :)

Note that the observation is the output of the es_ping function. The format and content of this observation are a key part of our prompt engineering, because this is what the LLM uses to decide its next step.

Let's see how this tool can be modified for RAG.

Agentic RAG

I recently built a large and complex knowledge base in my Elastic Cloud deployment, using the POLITICS dataset. This dataset contains roughly 2.46 million political articles scraped from US news sources. I ingested it to Elastic Cloud and embedded it with an elser_v2 inference endpoint, following the process defined in this previous blog.

To deploy an elser_v2 inference endpoint, ensure that ML node autoscaling is enabled, and run the following command in your Elastic Cloud console.

Now, let's define a new tool that does a simple semantic_search over our political knowledge base index. I called it bignews_embedded. This function takes a search query, adds it to a standard semantic_search query template, and runs the query with Elasticsearch. Once it has the search results, it concatenates the article contents into a single block of text, and returns it as an LLM observation.
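The two halves of that function, building the query and flattening the results, might look roughly like this. This is a sketch under assumptions: it uses Elasticsearch's `semantic` query, which expects a field mapped as `semantic_text`, and the field names `text_semantic` and `text` are hypothetical placeholders rather than the actual mapping of bignews_embedded.

```python
def build_semantic_query(query: str, semantic_field: str = "text_semantic", size: int = 3) -> dict:
    """Fill a search query into a semantic query template (field name is an assumption)."""
    return {
        "size": size,
        "query": {"semantic": {"field": semantic_field, "query": query}},
    }


def hits_to_observation(response: dict, content_field: str = "text") -> str:
    """Concatenate article contents from a search response into one block of text."""
    hits = response.get("hits", {}).get("hits", [])
    if not hits:
        return "No articles found."
    return "\n\n".join(str(hit["_source"].get(content_field, "")) for hit in hits)


# A hand-written response in the usual hits.hits shape, standing in for a real search call.
fake_response = {
    "hits": {"hits": [{"_source": {"text": "Article one."}}, {"_source": {"text": "Article two."}}]}
}
print(build_semantic_query("California wildfires"))
print(hits_to_observation(fake_response))
```

With a live cluster, the dictionary from `build_semantic_query` would be passed to the client's search call for the index, and the response fed to `hits_to_observation`.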

We'll limit the number of search results to 3. One advantage of this style of Agentic RAG is that we can develop an answer over multiple conversation steps. In other words, more complex queries can be answered by using leading questions to set the stage and context. Question and Answer becomes a fact-based conversation rather than one-off answer generation.

Dates

In order to highlight an important advantage of using an agent, the RAG search function includes a dates argument in addition to a query. When searching for news articles, we probably want to constrain the search results to a specific timeframe, like "In 2020" or "Between 2008 and 2012". By adding dates, along with a parser, we allow the LLM to specify a date range for the search.

In short, if I specify something like "California wildfires in 2020", I do not expect to see news from 2017 or any other year.

This rag_search function combines a date parser (extracting dates from the input and adding them to the query) with an Elastic semantic_search query.

After running the complete search query, the results are concatenated into a block of text and returned as an 'observation' for the LLM to use.
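Here is one way the date handling could work, as a sketch rather than the exact implementation. I'm assuming the LLM supplies dates as ISO strings such as "2020-01-01 to 2020-12-31", and that the index has a date field called `date` and a semantic field called `text_semantic`. All of those names and formats are hypothetical.

```python
import re
from datetime import date

DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def parse_date_range(dates: str):
    """Extract the earliest and latest valid ISO dates from free text, or None."""
    found = []
    for candidate in DATE_PATTERN.findall(dates):
        try:
            date.fromisoformat(candidate)  # discard impossible dates like 2020-13-45
        except ValueError:
            continue
        found.append(candidate)
    if not found:
        return None
    # ISO dates sort correctly as plain strings.
    return min(found), max(found)


def build_dated_query(query: str, dates: str, semantic_field: str = "text_semantic",
                      date_field: str = "date", size: int = 3) -> dict:
    """Semantic query with an optional date-range filter added when dates are given."""
    bool_query = {"must": [{"semantic": {"field": semantic_field, "query": query}}]}
    date_range = parse_date_range(dates)
    if date_range:
        start, end = date_range
        bool_query["filter"] = [{"range": {date_field: {"gte": start, "lte": end}}}]
    return {"size": size, "query": {"bool": bool_query}}


print(build_dated_query("California wildfires", "2020-01-01 to 2020-12-31"))
```

Putting the date range in a filter clause means it restricts which articles are eligible without affecting how they are scored.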

To account for multiple possible arguments, use pydantic's BaseModel to define a valid input format:

We also need to make use of StructuredTool to define a multi-input function, using the input format defined above:

The description is a critical element of the tool definition, and is part of your prompt engineering. It should be comprehensive and detailed, and provide sufficient context to the LLM, such that it knows when to use the tool, and for what purpose.

The description should also include the types of inputs that the LLM must provide in order to use the tool properly. Specifying the formats and expectations has a huge impact here.

Uninformative descriptions can have a serious impact on the LLM's ability to use the tool!

Remember to add the new tool to the list of tools for the agent to use:

We also need to further modify the agent with a system prompt, providing additional control over the agent's behavior. The system prompt is crucial for ensuring that errors related to malformed outputs and function inputs do not occur. We need to explicitly state what each function expects, and what the model should output, because langchain will throw an error if it sees improperly formatted LLM responses.

We also need to set agent=AgentType.OPENAI_FUNCTIONS to use OpenAI's function calling capability. This allows the LLM to interact with the function according to the structured templates we specified.

Note that the system prompt includes a prescription on the exact format of input that the LLM should generate, as well as a concrete example.

The LLM should detect not just which tool should be used, but also what input the tool expects! Langchain only takes care of function invocation/tool use, but it's up to the LLM to use it properly.

Now, run python chat.py in your terminal and let's test it out!

Testing Agentic RAG

Let's test it by asking the following query:

Helpfully enough, langchain will output the intermediate steps, including the inputs to the RAG_Search function, the search results, and the final output.

The most notable thing is that the LLM created a search query, then added a date range from the beginning to the end of 2020. By constraining the search results to the specified year, we ensured that only relevant documents would be passed to the LLM.

There's a lot more that we can do with this, such as constraining based on category, or the appearance of certain entities, or relation to other events.

The possibilities are endless, and I think that's pretty cool!

Error handling in Agentic RAG

There may be situations where the LLM fails to use the proper tool/function when it needs to. For example, it may choose to answer a question about current events using its own knowledge rather than using an available knowledge base.

Careful testing and tuning of both the system prompt and the tool/function description is necessary.

Another option might be to increase the variety of available tools, to increase the probability that an answer is generated based on knowledge base content rather than the LLM's innate knowledge.

Do note that LLMs will have occasional failures, as a natural consequence of their probabilistic nature. Helpful error messages or disclaimers might also be an important part of the user experience.
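On the tool side, one simple pattern is to make sure a failing tool returns a readable message as its observation instead of crashing the conversation. The decorator below is a sketch of that idea. The name `safe_tool` and the message wording are my own, and this is not a langchain feature.

```python
import functools


def safe_tool(func):
    """Wrap a tool so failures come back as a readable observation, not an exception."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception as exc:
            # The message doubles as guidance for the LLM and a disclaimer for the user.
            return (
                f"Tool '{func.__name__}' failed: {exc}. "
                "Tell the user the search is unavailable rather than answering from memory."
            )

    return wrapper


@safe_tool
def flaky_search(query: str) -> str:
    """Placeholder tool that always fails, to show the wrapped behaviour."""
    raise TimeoutError("search timed out")


print(flaky_search("California wildfires"))
```

Because the failure text is returned as an observation, the LLM can relay a disclaimer to the user instead of silently falling back to its own knowledge.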

Conclusion and future prospects

For me, the main takeaway is the possibility of creating much more advanced search applications. The LLM might be able to craft very complex search queries on the fly, and within the context of a natural language conversation. This opens the way to drastically improving the accuracy and relevancy of search applications, and is an area that I'm excited to explore.

The interaction of knowledge bases with other tools, such as web search engines and monitoring tool APIs, through the medium of LLMs, could also enable some exciting and complex use-cases. Search results from KBs might be supplemented with live information, allowing LLMs to perform effective and time-sensitive on-the-fly reasoning.

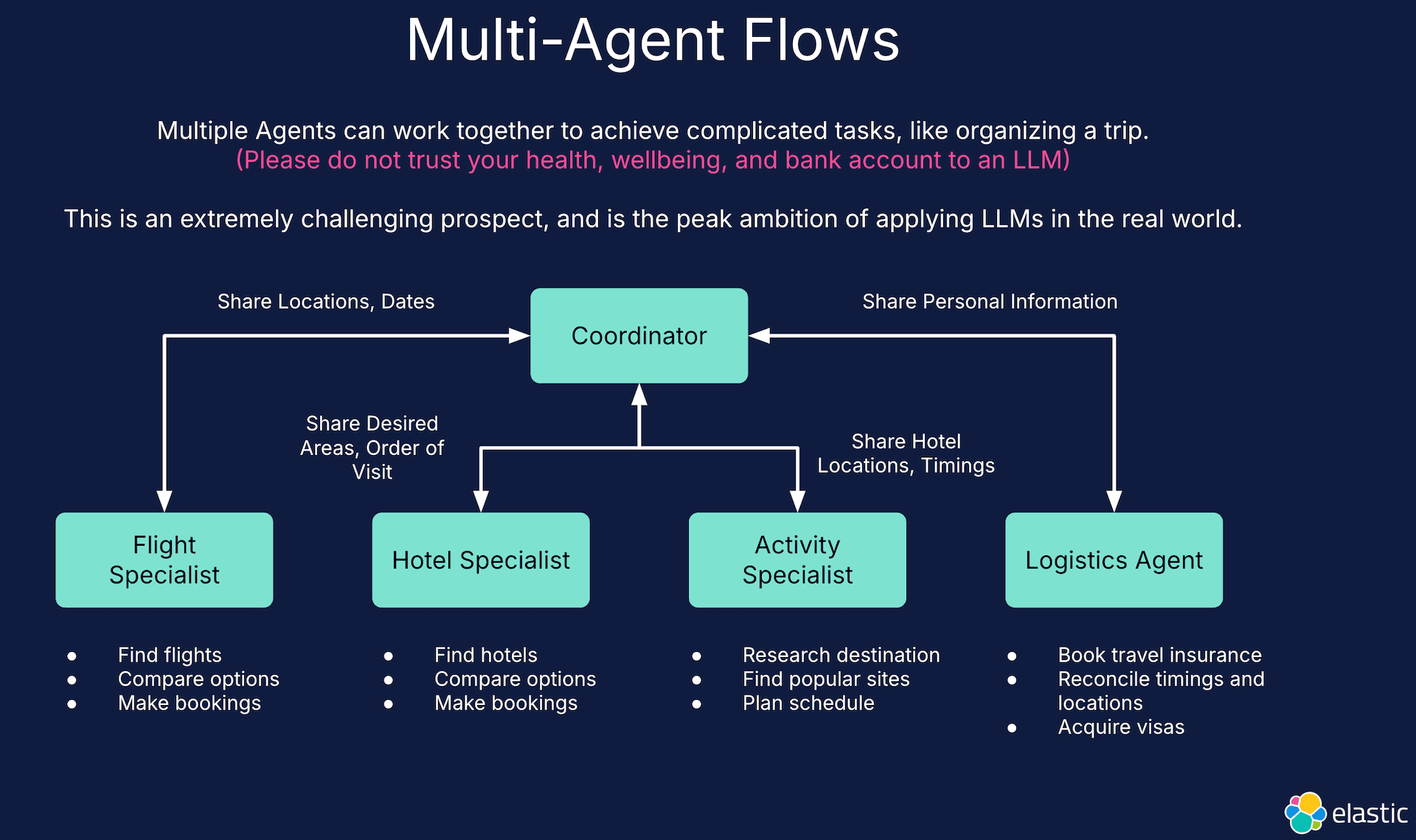

There is also the possibility of multi-agent workflows. In an Elastic context, this might be multiple agents exploring different sets of knowledge bases to collaboratively build a solution to a complex problem. Perhaps a federated model of search where multiple organizations build collaborative, shared applications, similar to the idea of federated learning?

Example of a multi-agent flow

I'd like to explore some of these use-cases with Elasticsearch, and I hope you will as well.

Until next time!