AI-driven SIEM

Open source and affordable

Elastic SIEM is proven in thousands of real-world environments and powered by AI, helping you detect threats faster and scale without cost overruns.

Guided demo

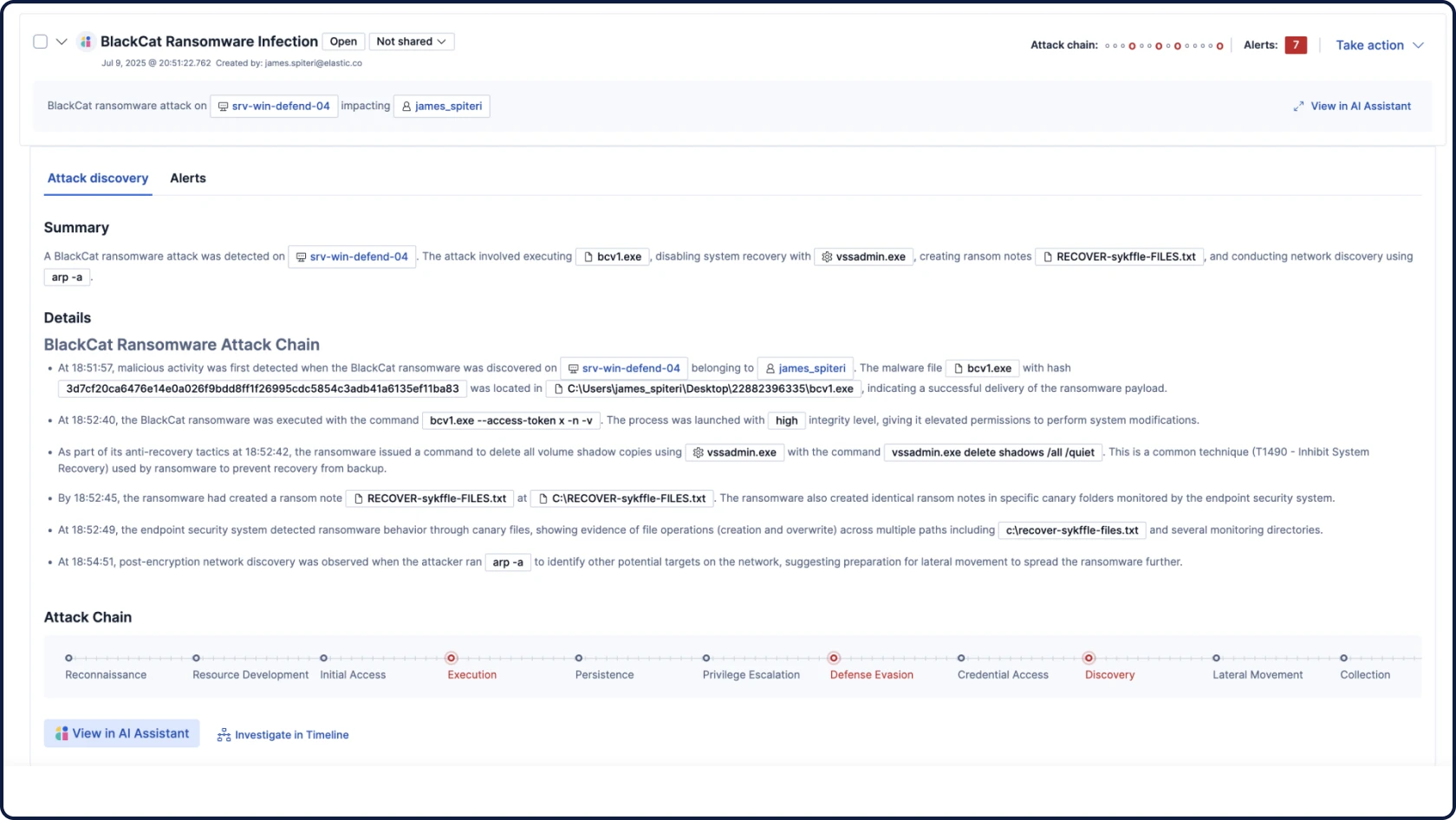

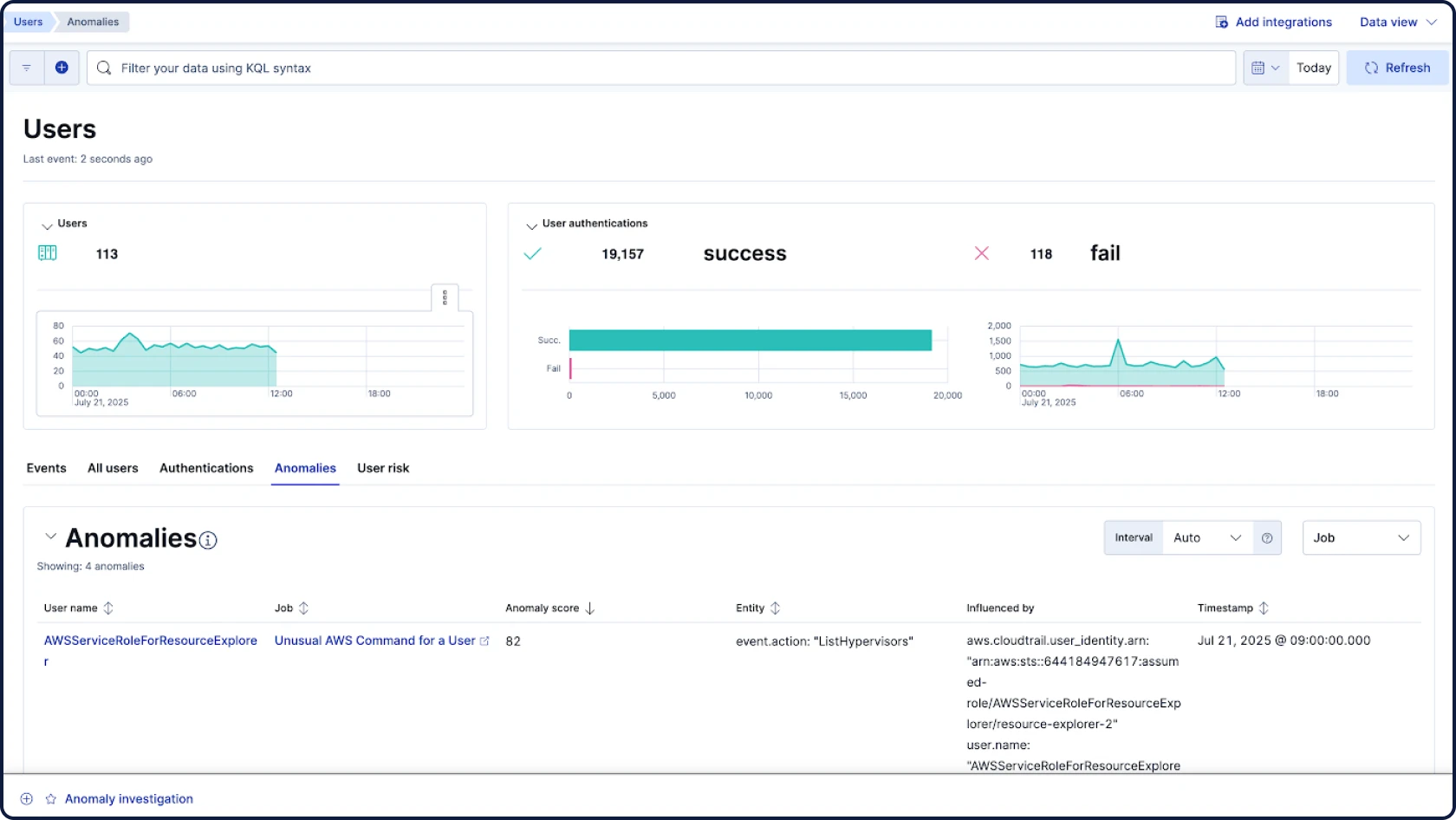

Find the threats hiding in your data

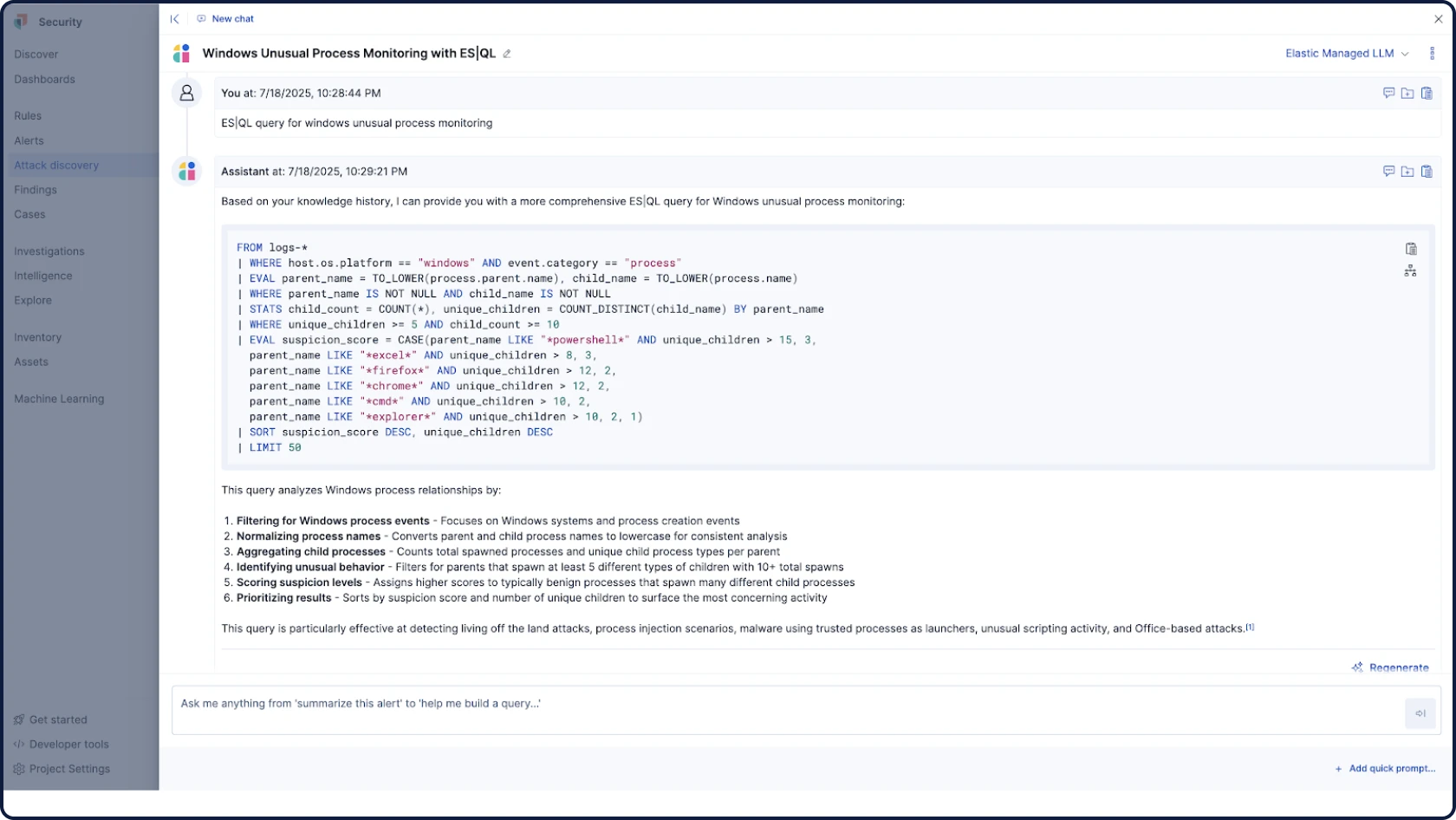

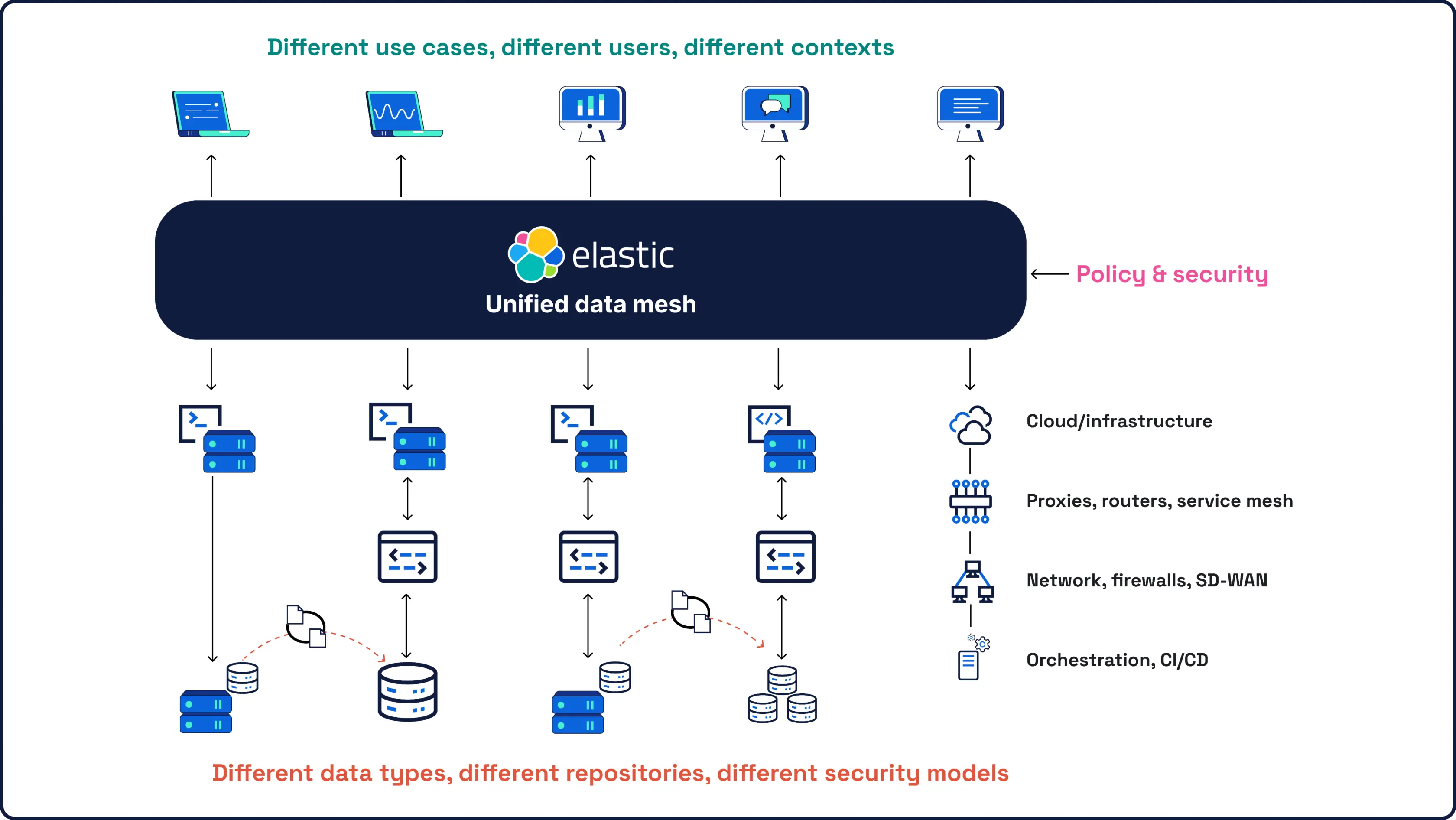

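Security starts with data, and data is what we know best. Built by Elastic, the company behind Elasticsearch, the world's leading open source search and analytics engine, Elastic SIEM delivers powerful AI-driven detection and investigation across all of your security data, at any scale.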

Packaging options

Go all in, or move at your own pace

Elastic's security platform meets you where you are today and takes you where legacy platforms can't.

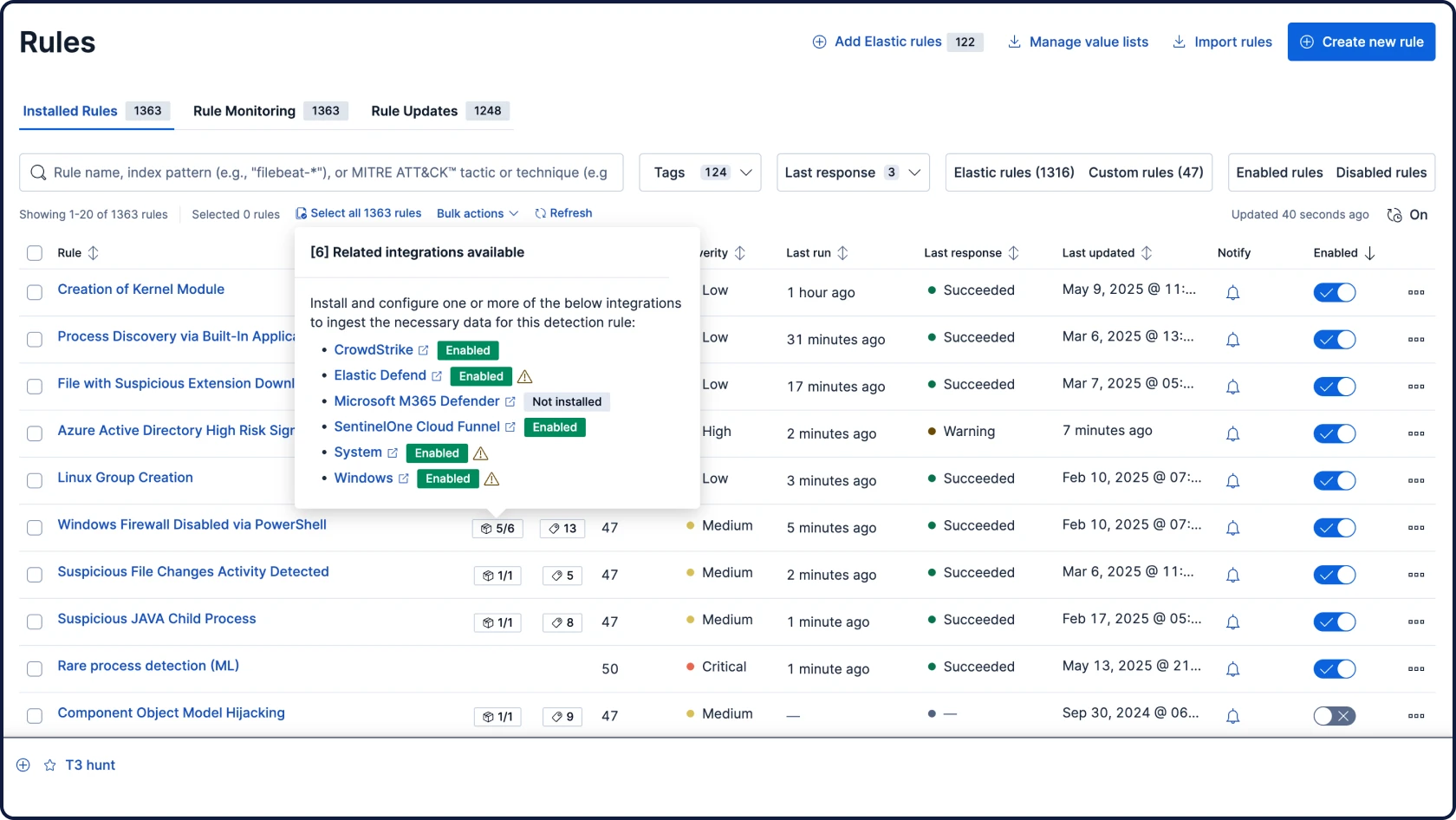

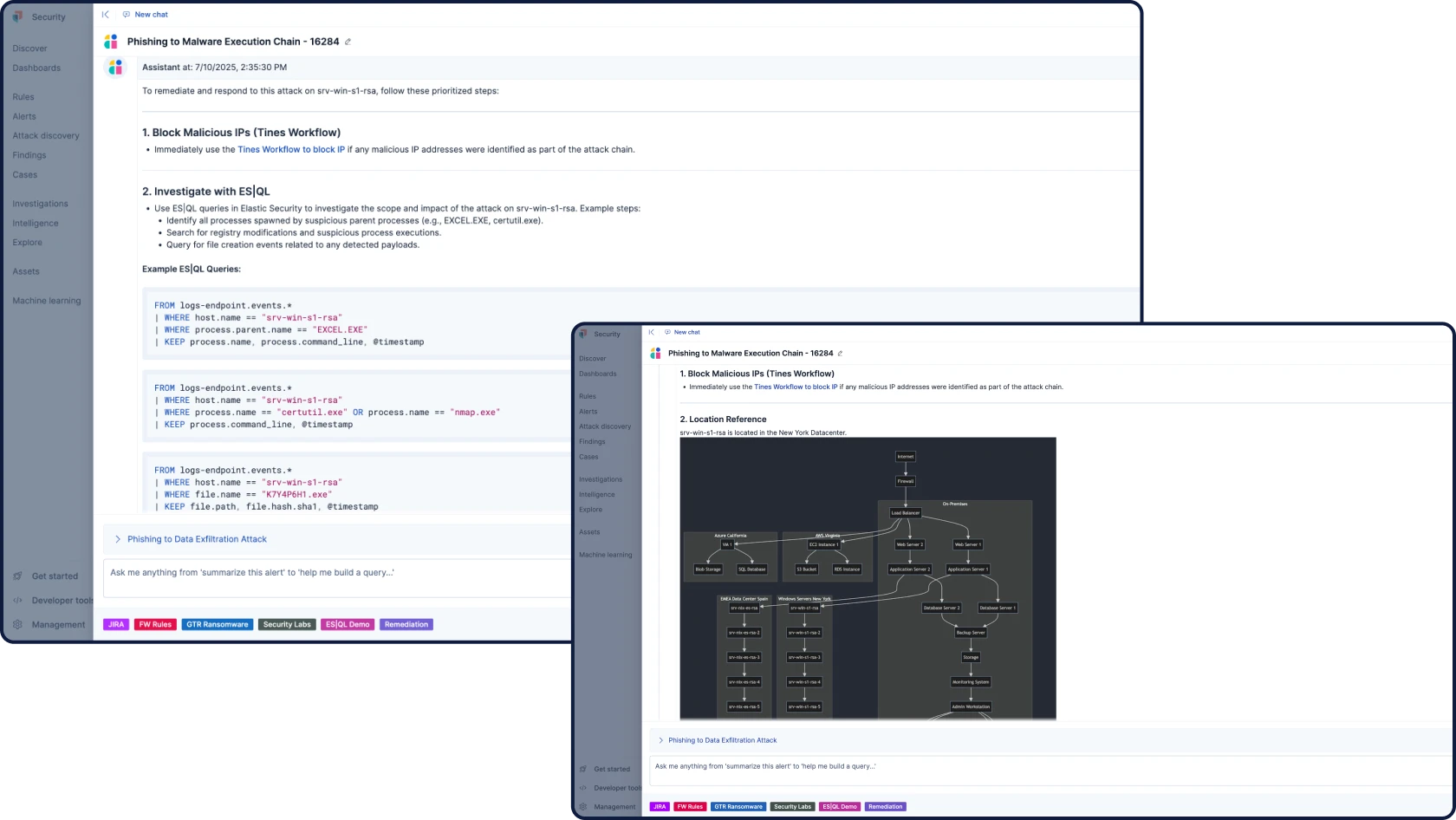

Elastic Security

SIEM, XDR, cloud security, and built-in AI: everything you need in one unified platform. No extra SKUs, no add-ons, no compromises. Experience Elastic's seamless environment, designed around the way analysts think, explore, and respond.

Elastic AI SOC 엔진(EASE)

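This package of AI capabilities lets you adopt Elastic Security on your own timeline, without a full rip-and-replace. Augment your existing SIEM, EDR, and other alerting tools with AI connected to your data and workflows, then expand to the full platform when you're ready.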

Join other leading customers

Customer spotlight

Airtel strengthened its cybersecurity capabilities with Elastic's AI features, improving SOC efficiency by 40% and speeding up investigations by 30%.

Customer spotlight

Sierra Nevada Corporation uses Elastic Security to protect its own infrastructure and that of other defense contractors.

Customer spotlight

Mimecast is transforming its global SecOps by centralizing visibility, accelerating investigations, and reducing critical incidents by 95%.

Join the conversation

Join the global Elastic Security community. Take part in open discussions and collaboration, and help strengthen the product through our bug bounty program.
