Open source, affordable, AI-powered SIEM

Elastic SIEM is proven in thousands of real-world environments and powered by AI, so you can detect threats faster and scale.

Guided demo

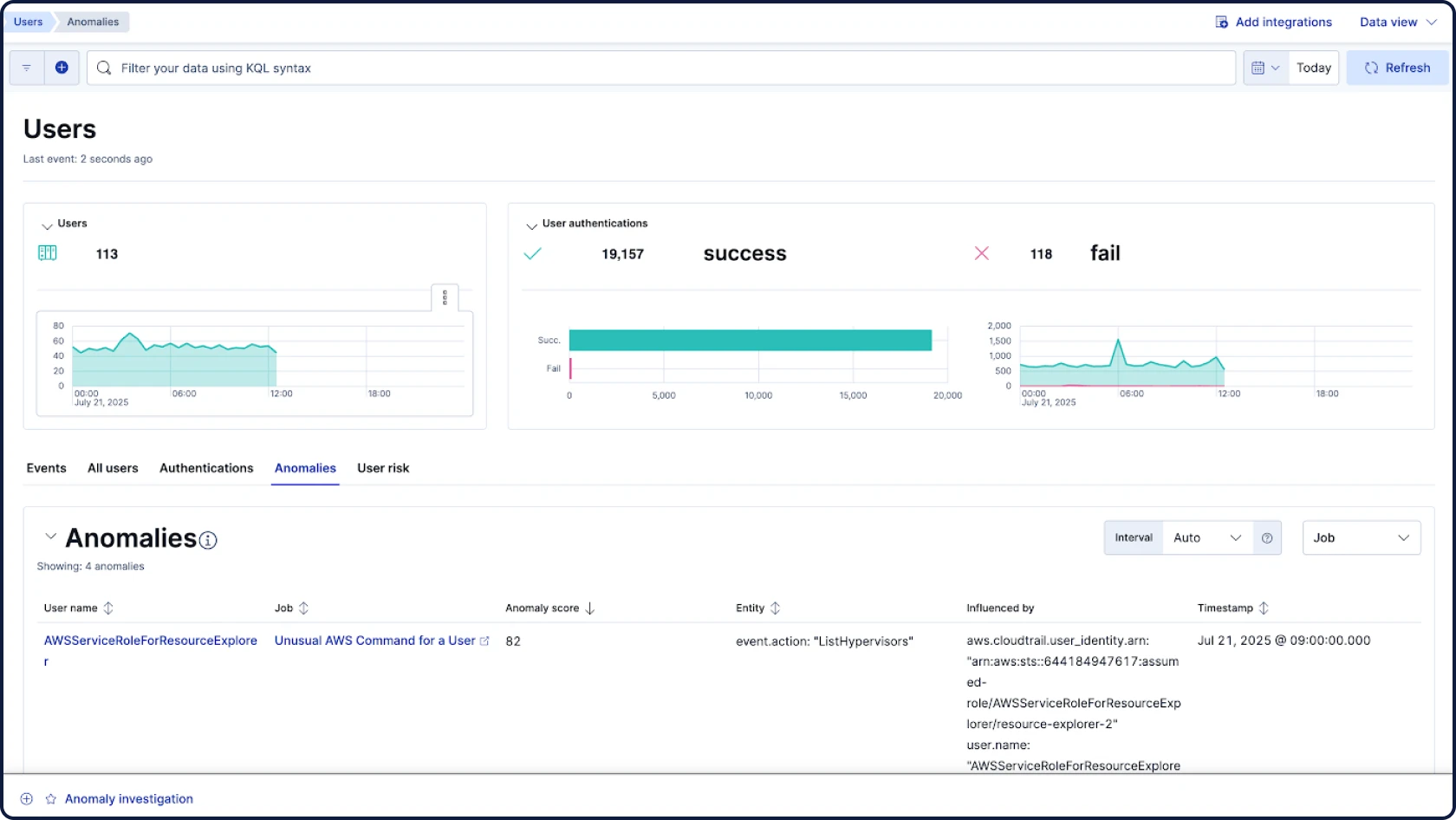

Detect threats hidden in your data

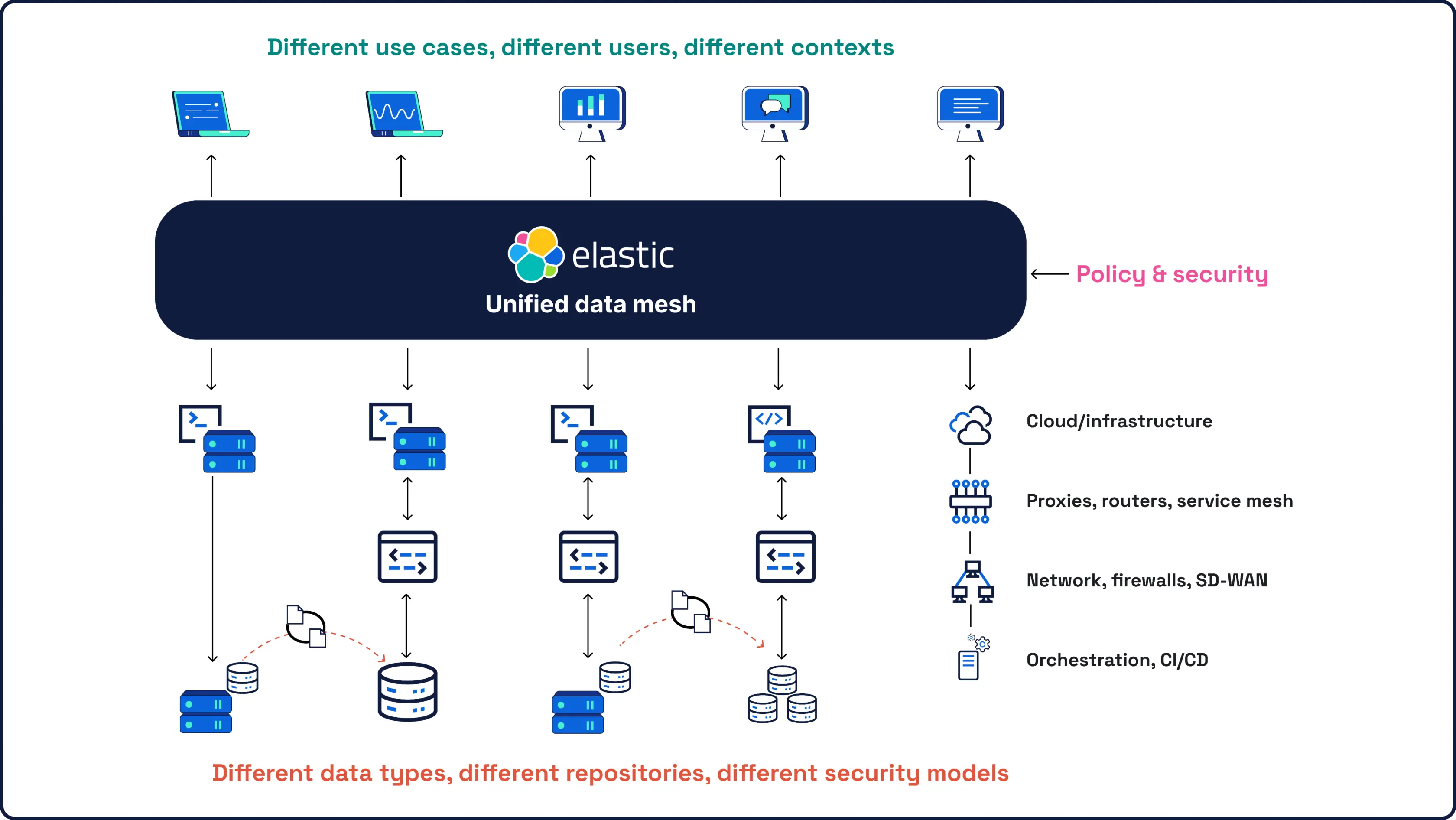

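Security starts with data, and data is what we know best. As the company behind Elasticsearch, the world's leading open source search and analytics engine, we built our SIEM to deliver powerful AI-driven detection and investigation across all of your security data, at any scale.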

Hunt and investigate

SIEM for the SOC of the future

Bring all of your security data, AI, and investigations together on one open, extensible, and scalable platform

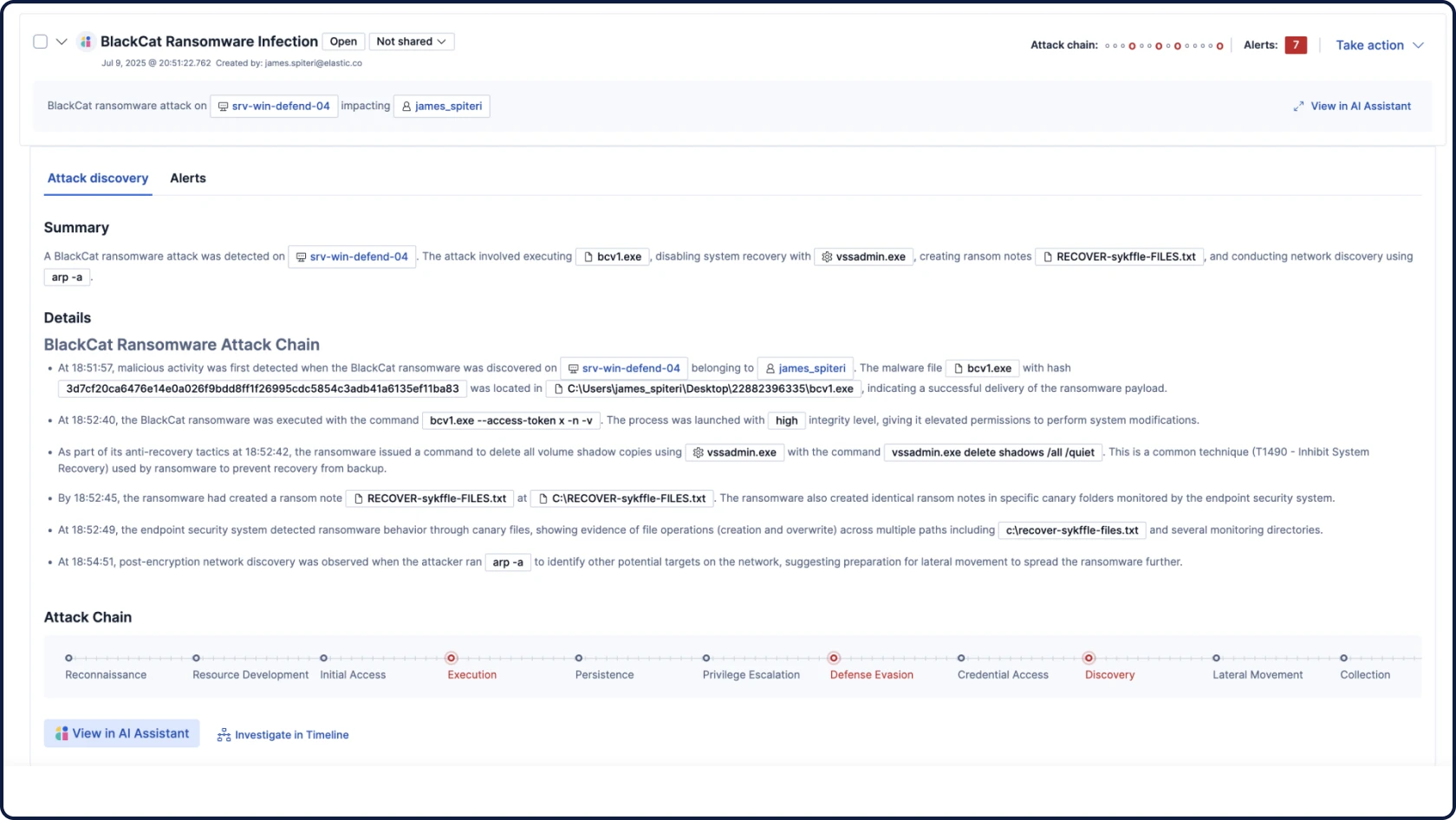

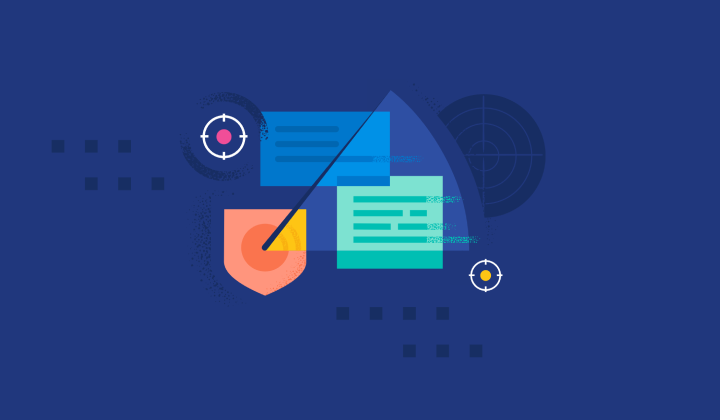

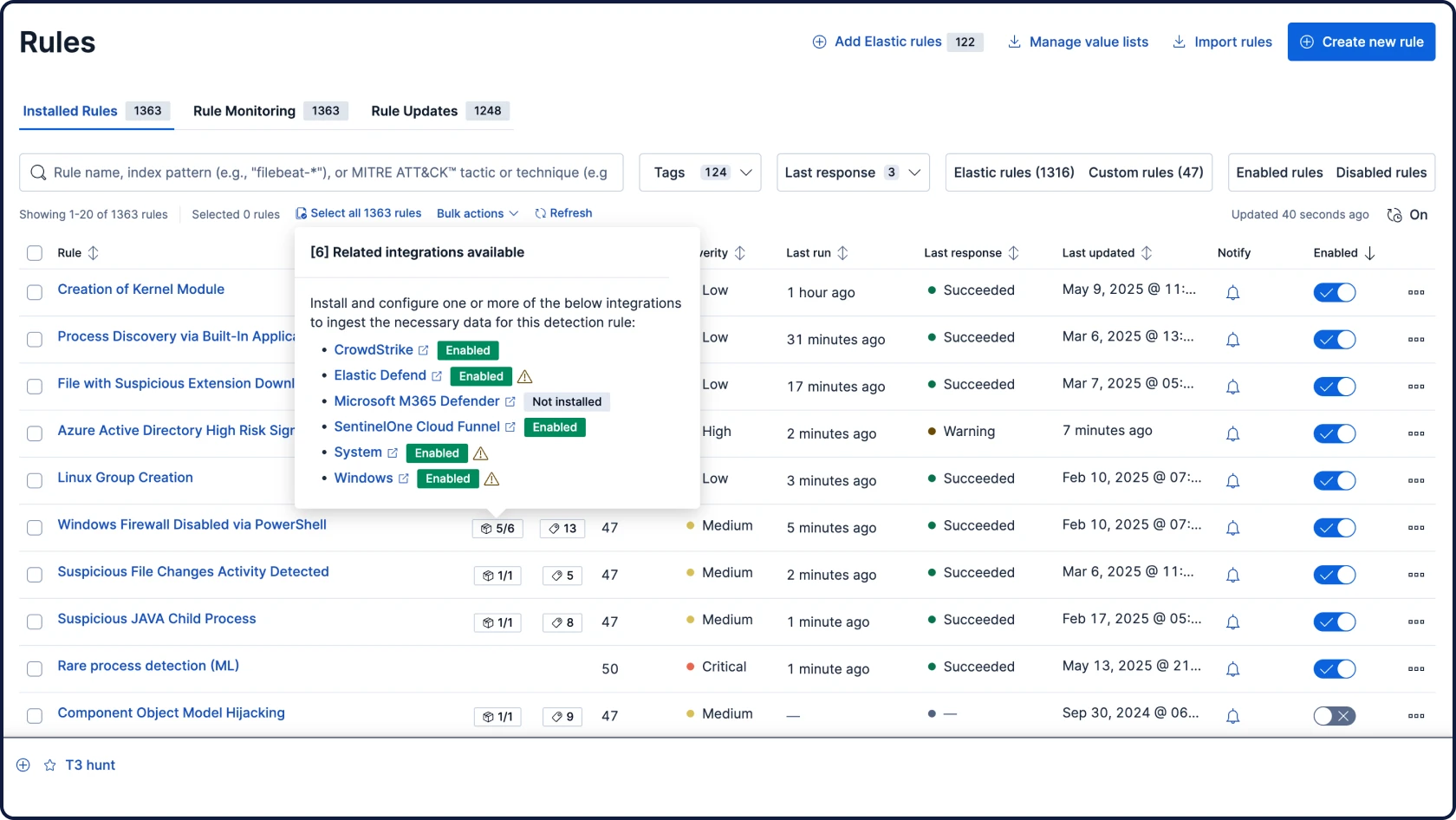

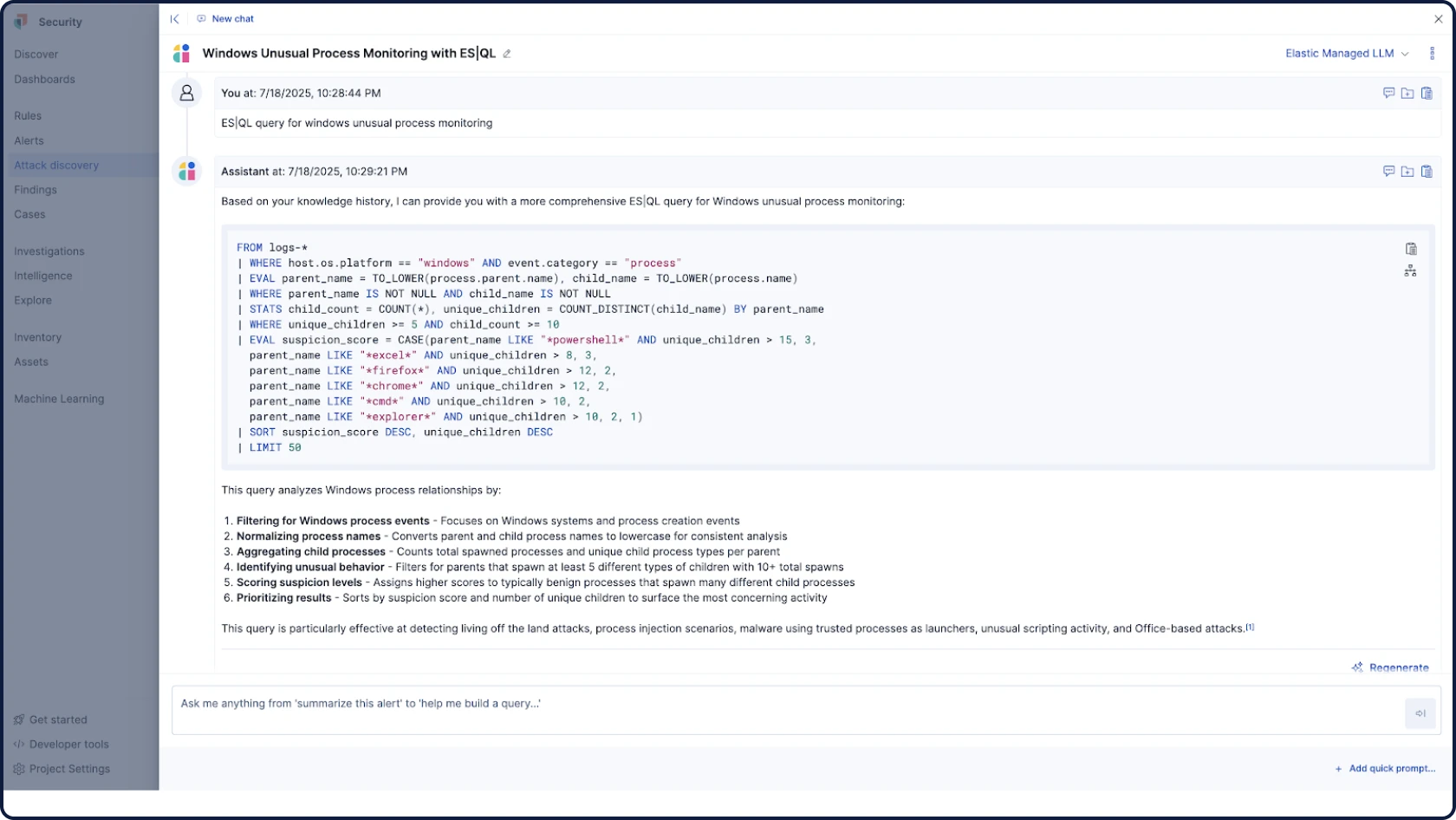

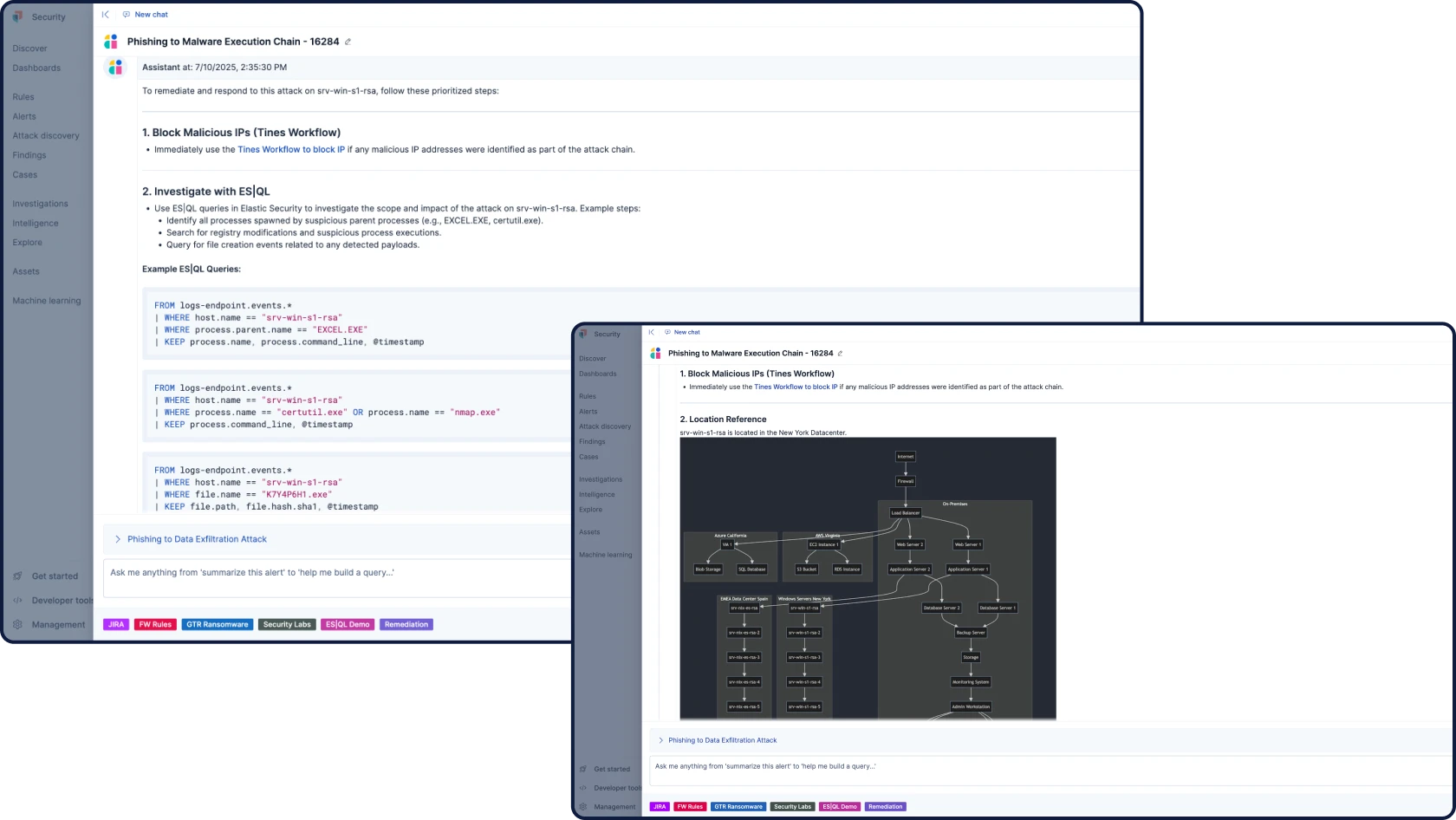

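Automate workflows with security AI that is grounded, context-aware, and transparent. Attack Discovery correlates alerts to identify actual attack behaviors and paths, while AI Assistant answers questions and guides analysts through their next steps.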

Packaging options

Adopt everything at once or build at your own pace

Our security platform meets you where you work and takes you where legacy platforms cannot.

Elastic Security

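SIEM, XDR, cloud security, integrated AI, and more: everything you need in one unified platform, with no extra SKUs, no bolt-ons, and no compromises. Just a single, seamless experience built around how analysts think, explore, and respond.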

Elastic AI SOC Engine (EASE)

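A package of AI capabilities that lets you adopt Elastic Security on your own schedule, without a full replacement. Strengthen your existing SIEM, EDR, and other alerting tools with AI that plugs into your data and workflows, then expand to the full platform when you are ready.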

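You're in good company

Customer stories

Airtel improved its cyber posture with Elastic's AI capabilities, raising SOC efficiency by 40% and accelerating investigations by 30%.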

Sierra Nevada Corporation uses Elastic Security to protect its own infrastructure and that of other defense contractors.

Mimecast transforms its global SecOps by centralizing visibility, accelerating investigations, and reducing critical incidents by 95%.

Join the conversation

Join the global Elastic Security community and take part in everything from open conversation and collaboration to strengthening the product through the bug bounty program.
