Just Enough Redis for Logstash

Message queues are used in Logstash deployments to absorb surges in events that might otherwise slow down Elasticsearch or other downstream components. Redis is one of the technologies used as a message queue. Thanks to its speed, ease of use, and low resource requirements, it is also one of the most popular.

That is probably why it has been around for a very, very long time—since Logstash version 1.0.4, released more than five years ago!

With all of the recent changes to improve the Logstash pipeline—as well as improvements to the Redis plugin itself—you may not be getting all the performance the Logstash Redis input plugin is capable of delivering.

Logstash attempts to detect the number of cores in your system and sets the number of pipeline workers to match. But pipeline workers only matter for filter and output plugins; they have no effect on input plugins. If you haven’t set `threads` in the Redis input plugin, you are only ingesting a fraction of what you could.

The test setup used the most recent stable version of Logstash (2.3.4) with Redis 3.0.7 running on the same machine, a 2015 MacBook Pro. The Logstash configuration used is:

    input {
      redis {
        host => "127.0.0.1"
        data_type => "list"
        key => "redis_test"
        # batch_count => 1
        # threads => 1
      }
    }

    output { stdout { codec => dots } }

Run this with `bin/logstash -f some_config_file.config | pv -Wart > /dev/null`

This test setup is only designed to test for maximum throughput. Actual performance numbers will vary widely depending on what other plugins are used.

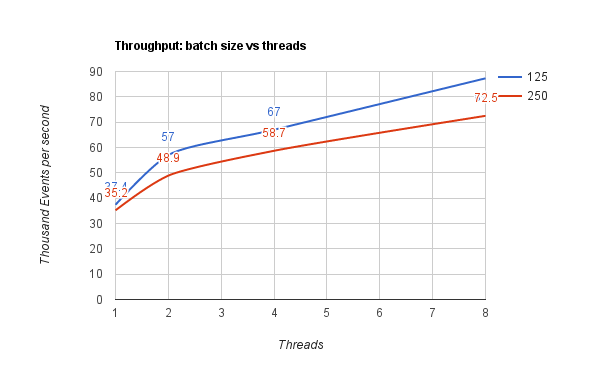

By iterating through a few options for only the threads setting, and using all other settings at their defaults, we see results like these:

- threads => 1 : 37.4K/sec

- threads => 2 : 57K/sec

- threads => 4 : 67K/sec

- threads => 8 : 87.3K/sec
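
To make the scaling behind these figures easier to see, here is a small sketch that computes the speedup over a single thread and the per-thread efficiency. The numbers are copied from the list above; the function name `scaling` is only illustrative and is not part of Logstash.

```python
# Throughput figures copied from the list above, in thousands of events/sec.
measured = {1: 37.4, 2: 57.0, 4: 67.0, 8: 87.3}


def scaling(results):
    """Return {threads: (speedup, efficiency)} relative to the smallest thread count."""
    base_threads = min(results)
    base_rate = results[base_threads]
    table = {}
    for threads, rate in sorted(results.items()):
        speedup = rate / base_rate
        # Efficiency of 1.0 would mean perfectly linear scaling.
        efficiency = speedup / (threads / base_threads)
        table[threads] = (speedup, efficiency)
    return table


for threads, (speedup, efficiency) in scaling(measured).items():
    print(f"threads => {threads}: {speedup:.2f}x speedup, {efficiency:.0%} efficiency")
```

On this test machine, throughput keeps rising with more threads, but each additional thread contributes less than the one before it.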

Let’s run the same tests again, but with batch_count => 250, and add -b 250 to our Logstash command line to take full advantage of that increase:

- threads => 1 : 35.2K/sec

- threads => 2 : 48.9K/sec

- threads => 4 : 58.7K/sec

- threads => 8 : 72.5K/sec

As you can see, increasing batch_count from its default of 125 to 250 results in a decrease in performance.
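
As a rough illustration, the relative drop at each thread count can be computed directly from the two result lists. This is a sketch using only the figures reported above; the dictionary and function names are made up for the example.

```python
# Thousands of events/sec, copied from the two result lists above.
default_batch = {1: 37.4, 2: 57.0, 4: 67.0, 8: 87.3}
batch_250 = {1: 35.2, 2: 48.9, 4: 58.7, 8: 72.5}


def percent_change(before, after):
    """Percentage change in throughput per thread count (negative means slower)."""
    return {t: (after[t] - before[t]) / before[t] * 100 for t in sorted(before)}


for threads, change in percent_change(default_batch, batch_250).items():
    print(f"threads => {threads}: {change:+.1f}%")
```

In these runs the larger batch is slower at every thread count tested, and the largest relative drop is at threads => 8.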

It’s been common in the past for users to use a larger batch_count when working with Redis. This is no longer a best practice, and will actually reduce performance. This is primarily due to changes in the Logstash pipeline architecture. Even an increase of batch_count to 250 results in a drop of performance from 87.3K/sec down to 72.5K/sec when threads => 8. The sweet spot for batch_count is right around 125, which is the default pipeline-batch-size (there’s a reason for that!).

Diving deeper

Recent changes to the Redis input include turning on batch mode by default and using a Lua script to execute the batch retrieval commands on the Redis server. Lua scripts also act as a transaction, so we can take a batch and shorten the queue in one step. This forces other Redis connections to wait. Note that the Redis output plugin in your upstream Logstash config is one such connection.

Also, you should be aware that the manner in which events are added to Redis by the upstream Logstash can affect the performance of the downstream Logstash instance. For example, while preparing the results of the tests, we found that querying the list size with LLEN redis_test in redis-cli too often would affect the measurement. Try to understand these factors in your upstream Logstash instance:

- the rate that events are generated

- the size of the events: smallest, largest, and average

- the batch_events size used by the Redis output

You might want to look at using a bigger batch_events size, e.g. 500, on the upstream Redis output. This buffers events before calling Redis, which may give the downstream Redis input threads time to jump in and pull from Redis. However, if the event generation rate is high, this may not be as helpful: at 50K/sec, a batch buffer of 500 fills in 10 milliseconds.
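
The fill-time arithmetic is simple enough to check for your own event rate. The sketch below assumes a steady event rate, which real traffic rarely has; `buffer_fill_ms` is a hypothetical helper name, not a Logstash setting.

```python
def buffer_fill_ms(batch_events, events_per_sec):
    """Milliseconds needed to fill one output batch at a steady event rate."""
    if events_per_sec <= 0:
        raise ValueError("events_per_sec must be positive")
    return batch_events / events_per_sec * 1000.0


# The example from the text: batches of 500 at 50K events/sec.
print(f"{buffer_fill_ms(500, 50_000):.1f} ms")

# A slower upstream leaves far longer gaps between calls to Redis.
print(f"{buffer_fill_ms(500, 5_000):.1f} ms")
```

The shorter the fill time, the less breathing room the buffering gives the downstream input threads.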

We have seen that the performance of the Lua script suffers proportionately with larger batch sizes. This means that larger batch sizes on the Redis input will affect the performance of both of the Logstash instances on either side of Redis.

Generally, the goal of tuning the Redis input plugin config, the pipeline batch size, and the pipeline workers is to make sure that the input is able to keep pace with the filter/output stage. You should know what the average throughput of your filter/output stage is with the defaults. If, for example, your filter/output stage throughput is 35K/sec, then there is little need to raise the Redis input `threads` setting to 8 when one or two threads can fetch sufficient events per second.
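
One way to think about this is to pick the smallest thread count whose measured input throughput covers the filter/output stage. The sketch below reuses the default-settings figures from this test machine, so substitute your own measurements. The `headroom` multiplier is an assumption added for illustration, not a Logstash option.

```python
# Input throughput by thread count with default settings (thousands of events/sec),
# as measured on the test machine described earlier. Replace with your own numbers.
measured = {1: 37.4, 2: 57.0, 4: 67.0, 8: 87.3}


def smallest_sufficient_threads(target_k_per_sec, results, headroom=1.0):
    """Return the lowest thread count whose throughput meets target * headroom.

    Returns None when no measured configuration is fast enough.
    """
    needed = target_k_per_sec * headroom
    for threads in sorted(results):
        if results[threads] >= needed:
            return threads
    return None


# A filter/output stage that sustains 35K/sec is already covered by one thread.
print(smallest_sufficient_threads(35.0, measured))

# Asking for 25% headroom pushes the choice to two threads.
print(smallest_sufficient_threads(35.0, measured, headroom=1.25))
```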

One more source of variation is JSON encoding (1), decoding (2), and encoding (3). Yes, three times: (1) in the upstream Redis output, (2) in the downstream Redis input, and (3) in the Elasticsearch output. Events with big string values take some time to decode, so make sure your testing uses data sets that match the size and size distribution of your production data as closely as you can.
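
To get a feel for how event size influences serialization cost, the following sketch times the three passes on synthetic events of different sizes. It uses Python's standard `json` module, not the serializer Logstash uses, so only the relative trend is meaningful. The event fields and helper names are invented for the example.

```python
import json
import time


def make_event(message_chars):
    """Build a synthetic event; field names and values are placeholders."""
    return {
        "@timestamp": "2016-08-01T00:00:00.000Z",
        "host": "example",
        "message": "x" * message_chars,
    }


def time_three_passes(event, repeats=1000):
    """Average seconds per event for encode, decode, encode."""
    start = time.perf_counter()
    for _ in range(repeats):
        wire = json.dumps(event)    # (1) upstream Redis output encodes
        decoded = json.loads(wire)  # (2) downstream Redis input decodes
        json.dumps(decoded)         # (3) Elasticsearch output encodes again
    return (time.perf_counter() - start) / repeats


for size in (100, 10_000, 1_000_000):
    per_event = time_three_passes(make_event(size), repeats=20)
    print(f"{size:>9} chars: {per_event * 1e6:.1f} microseconds per event")
```

Running the same kind of measurement against a sample of real production events is more informative than using synthetic data.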

Scaling throughput using multiple Logstash instances and one Redis server

Many users seek to improve throughput by starting up multiple Logstash instances on different hardware all pointing to the same Redis server and list.

This does work and can work well, but you are advised to apply the same tuning tips to each instance. There is probably not much point in having multiple Redis inputs defined in any one config unless they connect to different Redis servers or different lists. Simply tune the threads of the one Redis input. Clearly, having, say, 3 Logstash instances pointing at the same Redis server will create some degree of Redis client connection contention, meaning that you should probably tune one instance and then retune all three when running together.

Conclusion

In the not too distant future, we will be enhancing the Redis input with a JRuby wrapper around the popular and speedy Jedis Java library. This will bring the added bonus of supporting Redis Cluster mode!

In the meantime, to give your Redis input performance a boost, try increasing the number of threads used. And be sure to measure and tune accordingly if you use something other than the default batch_count, or have multiple instances of Logstash pointing to a single Redis server.

If you have a question about Redis and Logstash, feel free to open a discussion topic on our forum. You can also find us on IRC (#logstash), and on GitHub.

Happy Logstashing!