AI assistant: From generalist to specialist

In the AI world, there’s a lot of buzz about creating custom large language models (LLMs) tailored to specific domains, perhaps for better security, context, expertise, or accuracy. It’s an appealing idea: what better way to solve your niche challenges than with a bespoke AI designed just for you? But here’s the thing: building a great LLM isn’t just challenging; it’s prohibitively expensive and resource-intensive. Organizations like OpenAI and xAI have poured astronomical sums into developing their models, which run on enormous compute power and are backed by years of expertise. For most of us, trying to replicate this effort would be like building a Formula 1 car in our garage: ambitious, yes, but likely to end in frustration. Even DeepSeek, for all the attention paid to its efficiency, has hefty resource requirements.

The good news? You don’t have to. The beauty of the LLMs developed by major AI organizations lies in their versatility. These models are generalists — designed to understand human language, generate coherent responses, and extrapolate ideas well beyond the confines of their training data. They provide a solid foundation for intelligent interaction.

So, how do you transform these generalists into specialists capable of tackling your unique domain challenges? That’s where retrieval-augmented generation (RAG) comes into play.

RAG: More than just another acronym

RAG isn’t about reinventing the wheel. It’s about enhancing what already exists. By pairing a powerful LLM with a domain-specific knowledge base (KB), you give the AI access to the expertise it lacks. Think of it as teaching a gifted linguist the nuances of your industry — from intricate standards like the BSI security guidelines to internal policies, playbooks, and beyond. Instead of training a model from scratch, you’re simply equipping it with the tools to answer detailed questions and provide actionable insights specific to your needs.
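To make the pattern concrete, here is a deliberately tiny sketch of the retrieve-then-generate loop in Python. It is not how Elasticsearch works internally: the word-overlap scoring is a stand-in for semantic search, the passages and section IDs are made-up sample data, and the final LLM call is left out. The point is only that the model receives retrieved excerpts, tagged with their section IDs, alongside the user’s question.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    section: str  # section ID used for citations (sample IDs below are made up)
    text: str


def retrieve(question: str, kb: list[Passage], k: int = 2) -> list[Passage]:
    """Return up to k passages sharing the most words with the question.

    Toy stand-in for semantic search: real deployments rank by embeddings.
    """
    q_terms = set(question.lower().split())
    scored = [(len(q_terms & set(p.text.lower().split())), p) for p in kb]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps KB order on ties
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Augment the question with retrieved passages and ask for citations."""
    context = "\n".join(f"[§{p.section}] {p.text}" for p in passages)
    return (
        "Answer using only the context below and cite sections as [§<section>].\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    kb = [
        Passage("A.1", "Isolate legacy systems in a separate network segment"),
        Passage("B.2", "Rotate passwords for service accounts regularly"),
    ]
    question = "How should legacy systems be isolated"
    # The resulting prompt would then be sent to the LLM of your choice.
    print(build_prompt(question, retrieve(question, kb)))
```

Swapping the toy `retrieve` for a semantic search against an Elasticsearch index is what turns this sketch into a real knowledge base; the prompt-building half stays conceptually the same.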

Imagine a scenario where you’re poring over an 850-page document packed with technical recommendations. With RAG, you don’t have to read through endless pages or search for a needle in a haystack. Instead, you can ask the AI questions in plain language, and it will retrieve the relevant passages from the document and base its answer on them. Or consider internal standards and guidelines: RAG lets your AI assistant provide meaningful, context-aware support to your team, saving time and reducing confusion.

Ultimately, RAG lets you turn a general-purpose AI into your own bespoke expert without the hefty price tag or complexity of creating something from scratch. It’s the smarter, more practical way forward for those who want AI that actually understands their world. And that’s what makes RAG more than just another acronym.

An AI assistant that actually knows its stuff

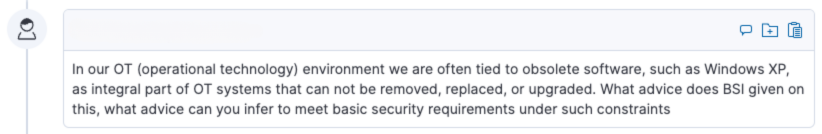

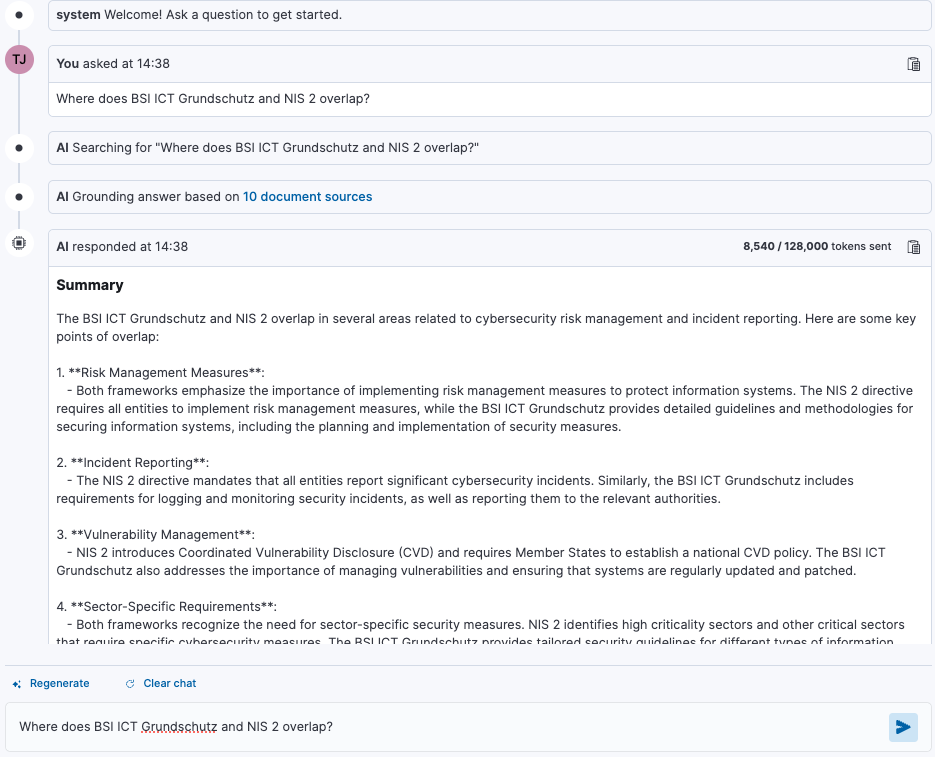

Imagine this: You’re navigating a technical document such as the security guidelines of the BSI (Bundesamt für Sicherheit in der Informationstechnik, the German Federal Office for Information Security), which run to more than 850 pages. You’ve got a specific question about securing an operational technology (OT) environment running obsolete software, but the sheer volume of the document makes it nearly impossible to find what you need without spending hours digging through it. That’s where RAG shines.

With a RAG-powered AI assistant, you can bypass the manual search entirely. You ask a question in plain English (for example, “What does the BSI recommend for securing systems with Windows XP?”), and the assistant not only provides a concise answer but also cites the relevant sections of the guidelines to back it up. It’s like having a domain expert who’s read the entire document and remembers exactly where everything is.

Take the example of OT environments tied to legacy software. While Windows XP has long been outdated in IT, in OT it’s often a critical component of expensive machinery and cannot be replaced for the lifetime of the equipment. The BSI guidelines recognize this reality and offer a framework for securing such systems. Instead of dismissing their use outright, the assistant provides context-aware advice, breaking down the relevant recommendations as the screenshots above show.

You can see how the assistant distills dense technical content into actionable advice, complete with citations to the relevant sections. This not only saves time but also makes it easy to check the guidance against the source. You can then ask follow-up questions to explore any point in more depth.

The real strength here is in the nuance. Yes, Windows XP is obsolete, but the Elastic AI Assistant for Security understands the operational context in which it remains necessary. Instead of generic, one-size-fits-all advice, it delivers targeted solutions that address the unique challenges of OT environments. This makes the AI assistant not just helpful but truly indispensable in navigating complex scenarios.

RAG empowers organizations to leverage cutting-edge AI without needing to reinvent the wheel, offering a practical way to make smarter, more contextually aware decisions.

Fancy giving it a go?

Other blogs from Elastic go into the details of RAG, including vector databases, semantic search, and other such technologies that make it all work. If you want to go deeper, you’re welcome to explore those resources. One such blog from my colleague Christine Komander shows the technical steps to enrich an LLM-driven AI assistant with local expertise by building a semantic search knowledge base (KB) Elasticsearch index from PDFs.

However, I’ve distilled all of this into a simple script that does the technical heavy lifting for you. You can use it in three easy steps:

1. Configure it to connect to your Elasticsearch instance.

2. Let it set up the inference endpoint, ingest pipeline, and index for you.

3. Read in as many PDFs as you want.
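For readers curious about what those setup steps involve, here is a minimal sketch of the three Elasticsearch REST requests such a setup typically boils down to. It is not the script’s code: the endpoint, pipeline, and index names are hypothetical, the ELSER settings are one option among several (and vary by Elasticsearch version), and the sketch only builds the requests rather than sending them.

```python
import json

# Hypothetical names for illustration; the script's own names may differ.
INFERENCE_ID = "bsi-elser"
PIPELINE_ID = "pdf-to-kb"
INDEX_NAME = "kb-bsi"


def kb_setup_requests(inference_id: str, pipeline_id: str, index_name: str):
    """Return (method, path, body) tuples for the three setup steps."""
    # 1. Inference endpoint: ELSER sparse embeddings served by the built-in
    #    "elasticsearch" service (assumed settings; check your version's docs).
    inference = (
        "PUT",
        f"_inference/sparse_embedding/{inference_id}",
        {
            "service": "elasticsearch",
            "service_settings": {
                "model_id": ".elser_model_2",
                "num_allocations": 1,
                "num_threads": 1,
            },
        },
    )
    # 2. Ingest pipeline: extract text from a base64-encoded PDF in "data",
    #    copy it into "content", and drop the duplicate extracted copy.
    pipeline = (
        "PUT",
        f"_ingest/pipeline/{pipeline_id}",
        {
            "processors": [
                {"attachment": {"field": "data", "remove_binary": True}},
                {"set": {"field": "content", "copy_from": "attachment.content"}},
                {"remove": {"field": "attachment.content"}},
            ]
        },
    )
    # 3. Index: "content" is a semantic_text field bound to the endpoint,
    #    and every document passes through the pipeline by default.
    index = (
        "PUT",
        index_name,
        {
            "settings": {"index.default_pipeline": pipeline_id},
            "mappings": {
                "properties": {
                    "content": {"type": "semantic_text", "inference_id": inference_id},
                    "filename": {"type": "keyword"},
                }
            },
        },
    )
    return [inference, pipeline, index]


if __name__ == "__main__":
    for method, path, body in kb_setup_requests(INFERENCE_ID, PIPELINE_ID, INDEX_NAME):
        print(method, path)
        print(json.dumps(body, indent=2))
```

Each PDF would then be indexed as a document whose `data` field holds the base64-encoded file; the pipeline extracts the text, and the `semantic_text` mapping takes care of chunking and embedding it at index time.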

Please refer to the README.md of that project for details on the script’s usage, as well as its capabilities and features — such as how it handles excerpt extraction. The README also provides an example of how to load the BSI guidelines discussed in previous sections of this blog.
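The README explains how the script itself extracts excerpts. As a generic illustration only (not the script’s method), one common approach is to split the extracted text into overlapping windows of words, so that text near a boundary appears in two neighbouring excerpts and keeps some of its context. The function name and default sizes below are hypothetical. Note that a `semantic_text` field chunks long text automatically; explicit splitting like this is mostly useful if you want each excerpt stored as its own document, for example alongside its page number for citations.

```python
def split_into_excerpts(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of at most max_words words.

    Consecutive windows share `overlap` words, so content near a boundary
    keeps some surrounding context. Sizes here are illustrative defaults.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    excerpts = []
    for start in range(0, len(words), step):
        excerpts.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return excerpts


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for excerpt in split_into_excerpts(sample, max_words=4, overlap=1):
        print(excerpt)
    # w0 w1 w2 w3
    # w3 w4 w5 w6
    # w6 w7 w8 w9
```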

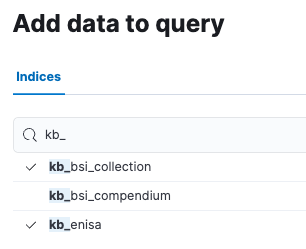

Once you’ve set up your knowledge base, you’ll probably want to see it in action. You can add it to the AI Assistant for Observability or Security in their respective configuration panels. But here, we’ll demonstrate using the Search AI Playground in Kibana:

1. In Kibana, open the navigation menu and click Playground under the “Search” application.

2. Set your Model Settings (top right) to the LLM you want to use (I used GPT-4o).

3. Click the Data button (top right) and select the KB index you created with the script above; you can enable more than one index if needed.

4. Now you’re ready to ask questions in the “Ask a question” box.
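Playground builds its retrieval query for you. If you want to check what your KB indices return for a question outside Playground, a request along the lines of this sketch should work. It assumes the extracted text lives in a `semantic_text` field named `content` in every selected index (a hypothetical name; use your own), uses the `semantic` query available in recent Elasticsearch versions, and only builds the request rather than sending it.

```python
import json


def semantic_search_request(indices: list[str], question: str,
                            field: str = "content", size: int = 3):
    """Build a (method, path, body) tuple for a semantic search across KB indices."""
    path = ",".join(indices) + "/_search"  # comma-separated: search several indices at once
    body = {
        "size": size,
        "query": {"semantic": {"field": field, "query": question}},
    }
    return "POST", path, body


if __name__ == "__main__":
    # Hypothetical index names; use the ones you created.
    method, path, body = semantic_search_request(
        ["kb-bsi", "kb-internal"],
        "What does the BSI recommend for securing systems with Windows XP?",
    )
    print(method, path)
    print(json.dumps(body, indent=2, ensure_ascii=False))
```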

If you want to use one or more such KB indices with the Elastic AI Assistant for Security, you will need to add each KB index as described in the documentation. Furthermore, here is the “Query Instruction” I use for my BSI KB:

The BSI documents herein are in German, so use German to query them. However, translate all responses back to the user's language. Always include references/citations to the relevant document sections in the response.

<example 1>

This is a generic example response [§<section>]

</example 1>

<example 2>

This is a specific example response [§IND.2.3.4]

</example 2>
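Because this instruction asks for citations in a fixed `[§<section>]` format, responses are easy to check mechanically. The following is a small, hypothetical helper (not part of the AI Assistant) that extracts the cited section IDs and flags any that are not among the sections you know were retrieved, which can help spot citations the model may have made up.

```python
import re

# Matches citations in the requested format, e.g. "[§IND.2.3.4]".
CITATION = re.compile(r"\[§([^\]]+)\]")


def check_citations(response: str, known_sections: set[str]) -> tuple[list[str], list[str]]:
    """Return (all cited section IDs in order, cited IDs not in known_sections)."""
    cited = CITATION.findall(response)
    unknown = [section for section in cited if section not in known_sections]
    return cited, unknown


if __name__ == "__main__":
    answer = "First point [§IND.2.3.4]. Second point [§X.9]."
    cited, unknown = check_citations(answer, known_sections={"IND.2.3.4"})
    print(cited)    # ['IND.2.3.4', 'X.9']
    print(unknown)  # ['X.9']
```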

We can look at some further examples in the next section.

Not just for techies: Why it matters

While the idea of using AI and RAG might seem tailored exclusively to tech enthusiasts or data scientists, its applications extend far beyond them. This approach makes complex regulatory, technical, or operational documents accessible to everyone, including those with limited technical expertise, available at the point of need and distilled to exactly what is required.

Providing the full text of a conversation with an AI assistant would make this blog far too long. Instead, I’ll summarize example interactions to illustrate how this technology can address complex, real-world scenarios. If you’re curious to see it in action, you’re welcome to recreate these experiences in your own Elasticsearch deployment.

NIS II: A practical example

Let’s explore how this works using an example related to the NIS II directive:

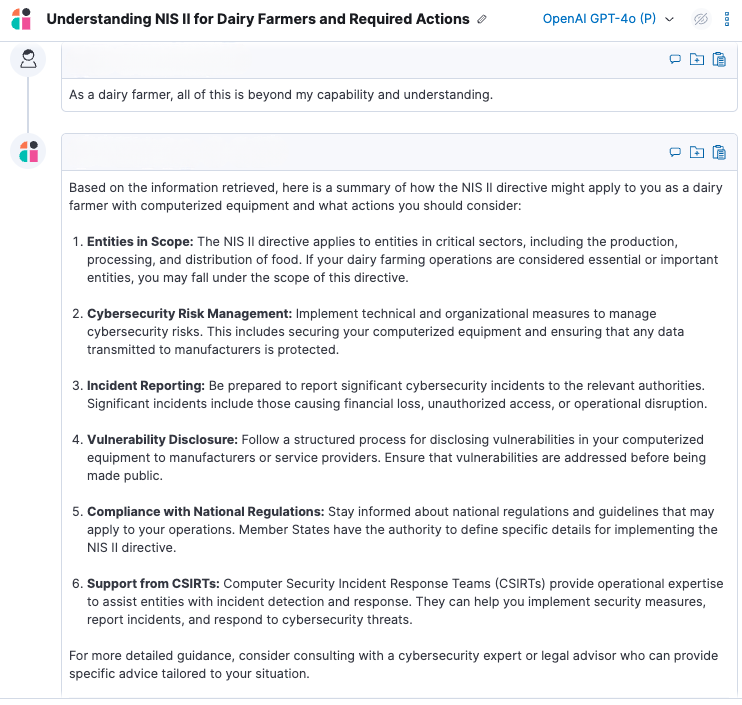

Question: “As a dairy farmer, describe how NIS II might apply to me and what actions I should be taking.”

The AI assistant reviews relevant documents and explains how the dairy farming sector is categorized as a critical sector under NIS II. It outlines practical steps, including implementing risk management measures, reporting significant incidents, and ensuring compliance with national supervision requirements. The response is detailed but accessible, showing how even non-technical users can engage with complex regulations.

Question: “As a dairy farmer, all of this is beyond my capability and understanding.”

In this case, the assistant provides actionable advice tailored to someone unfamiliar with cybersecurity concepts. It recommends starting with basic cyber hygiene, seeking professional help, and leveraging industry resources to meet compliance requirements.

Question: “My tractor is computerized and I assume it is connecting to its manufacturer, however I am not allowed nor able to make alterations.”

Here, the assistant advises engaging with the manufacturer to ensure security measures are in place, understanding the connectivity of the tractor, and ensuring updates and incident reporting processes are followed. It highlights steps that can be taken within the farmer’s control to address cybersecurity concerns without needing deep technical knowledge.

Through these examples, it’s clear that AI assistants (empowered by RAG) are not just for tech-savvy users — they are designed to make critical information usable and actionable for everyone, regardless of their technical background.

The takeaway: Smarter AI, happier humans

What’s remarkable about RAG and Elasticsearch is that they offer capabilities that would be standalone products in many other ecosystems. In the wider market, countless vendors are developing specialized expert systems built around LLMs and AI. Many of these systems may even rely on Elasticsearch under the hood, but with Elasticsearch, this functionality is built into the platform itself.

For Elasticsearch customers, this means that features like RAG and AI assistants are included as part of the Enterprise license. We don’t lock you into our choice of LLM: you are free to connect the LLM of your choosing to use these advanced capabilities, making cutting-edge AI accessible to a broader audience and relevant to your business’s data.

This isn’t just about technology; it’s about solving real-world problems. Security analysts, for example, face an ever-increasing deluge of information, from verbose cloud logs to evolving regulatory frameworks like GDPR, NIS II, and DORA. Think about the sheer volume of knowledge captured in handbooks, playbooks, regulations, guidelines, standards, technical documentation, and more that a user needs to stay familiar with. By harnessing Elasticsearch’s out-of-the-box AI and RAG capabilities, these users can process, analyze, and act on this information more effectively, reducing cognitive overload and improving decision-making.

The future of AI isn’t about replacing humans — it’s about empowering them. With solutions like the Elastic AI Assistant for Security, leveraging RAG and custom knowledge sources, we can create smarter systems that help users navigate complexity, make informed decisions, and ultimately, achieve better outcomes.

Learn more about how to achieve faster problem resolution with Elastic AI Assistant.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.