Today we’re excited to introduce jina-reranker-v2-base-multilingual and jina-reranker-v3 on Elastic Inference Service (EIS), enabling fast, multilingual, high-precision reranking directly in Elasticsearch.

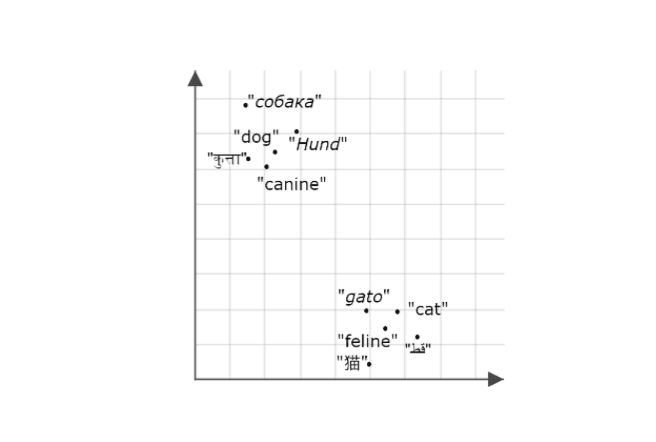

Jina AI, recently acquired by Elastic, is a leader in open-source multilingual and multimodal models, delivering state-of-the-art search foundation models for high-quality retrieval and retrieval-augmented generation (RAG). EIS makes it easy to run fast, high-quality inference with an expanding catalog of these ready-to-use models on managed GPUs, with no setup or hosting complexity.

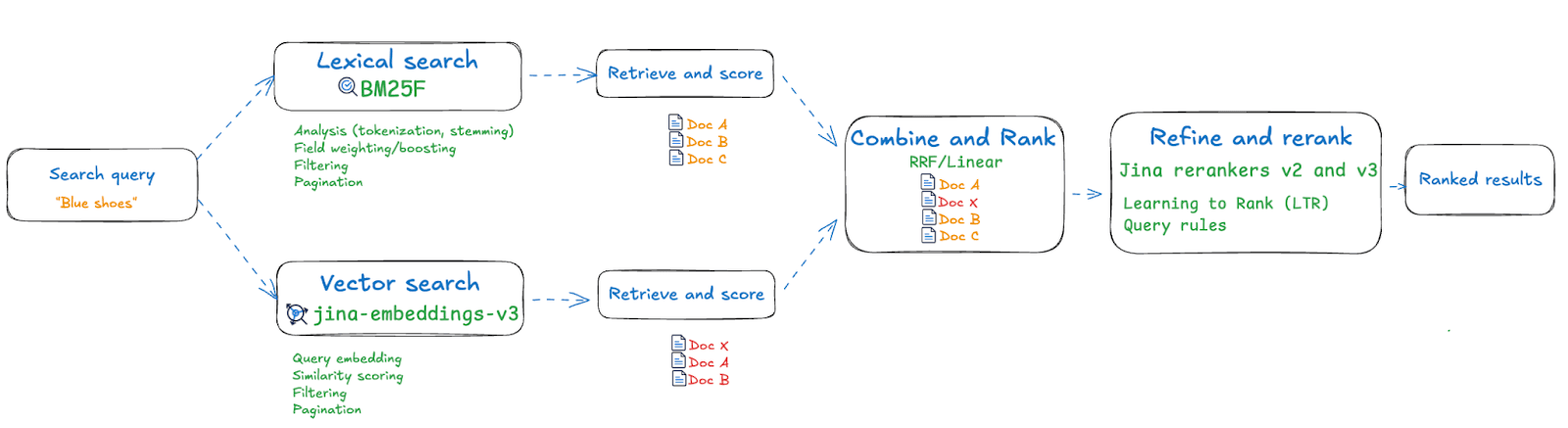

Rerankers add semantic precision by refining the ordering of retrieved results, helping select the best matches for a query. They improve relevance without the time and effort of reindexing and without disrupting existing pipelines, and they’re especially valuable for hybrid and RAG workflows where better context boosts downstream accuracy.

This release follows the recent introduction of jina-embeddings-v3 on EIS and expands the model catalog with multilingual reranking. Developers can now perform hybrid search using lexical search with BM25F and vector search with multilingual embeddings from jina-embeddings-v3, then rerank results with Jina Rerankers v2 or v3 depending on the use case. This delivers full control over recall tuning natively in Elasticsearch.
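As a rough illustration of that flow, the sketch below builds a `_search` request body that fuses a lexical retriever and a kNN retriever with RRF, then wraps the result in a `text_similarity_reranker` retriever. The field names and inference endpoint IDs are placeholders, not values from this post, and the kNN part assumes the vector field was populated with jina-embeddings-v3 embeddings.

```python
def build_hybrid_rerank_search(query_text, text_field, vector_field,
                               embedding_inference_id, rerank_inference_id,
                               rank_window_size=50):
    """Build a _search body: lexical + kNN fused with RRF, then reranked.

    All field names and endpoint IDs are caller-supplied placeholders.
    """
    # Lexical leg: a plain match query on the text field.
    lexical = {"standard": {"query": {"match": {text_field: query_text}}}}

    # Vector leg: the query text is embedded at search time by the
    # embedding endpoint named in model_id.
    vector = {
        "knn": {
            "field": vector_field,
            "query_vector_builder": {
                "text_embedding": {
                    "model_id": embedding_inference_id,
                    "model_text": query_text,
                }
            },
            "k": rank_window_size,
            "num_candidates": rank_window_size * 2,
        }
    }

    # Fuse both legs with reciprocal rank fusion, then rerank the fused
    # window with the reranker endpoint.
    fused = {
        "rrf": {
            "retrievers": [lexical, vector],
            "rank_window_size": rank_window_size,
        }
    }
    return {
        "retriever": {
            "text_similarity_reranker": {
                "retriever": fused,
                "field": text_field,
                "inference_id": rerank_inference_id,
                "inference_text": query_text,
                "rank_window_size": rank_window_size,
            }
        }
    }
```

The returned dictionary can be sent as the JSON body of a `POST /<index>/_search` request. Keeping `rank_window_size` identical on the RRF and reranker levels is a choice made for this sketch so that the reranker sees the full fused window.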

jina-reranker-v2-base-multilingual

jina-reranker-v2-base-multilingual is a compact general-purpose reranker with features designed to support function calling and SQL querying.

- Low-latency inference at scale: A compact 278M-parameter model that uses Flash Attention 2 to keep latency low, delivering strong multilingual performance that outperforms larger rerankers based on AIR metrics and other widely used benchmarks.

- Supports agentic use cases: Accurate multilingual text reranking with additional support for selecting SQL tables and external functions that match text queries, enabling agentic workflows.

- Unbounded candidate support: v2 handles arbitrarily large candidate lists by scoring documents independently. Scores are compatible across batches, so developers can rerank large result sets incrementally. For example, a pipeline can score 100 candidates at a time, merge the scores, and sort the combined results. This makes v2 suitable when pipelines don’t apply strict top-k limits.
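The incremental pattern described in the last bullet can be sketched as follows. `score_batch` is a hypothetical callable standing in for one call to the rerank endpoint; it is assumed to return one relevance score per document, in input order. The sketch relies on the property stated above, that v2 scores are comparable across batches.

```python
from typing import Callable, List, Sequence, Tuple


def rerank_in_batches(
    query: str,
    candidates: Sequence[str],
    score_batch: Callable[[str, Sequence[str]], List[float]],
    batch_size: int = 100,
) -> List[Tuple[str, float]]:
    """Score candidates batch by batch, merge the scores, and sort once."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")

    scored: List[Tuple[str, float]] = []
    for start in range(0, len(candidates), batch_size):
        batch = candidates[start:start + batch_size]
        scores = score_batch(query, batch)  # hypothetical endpoint wrapper
        if len(scores) != len(batch):
            raise ValueError("expected one score per document")
        scored.extend(zip(batch, scores))

    # Merging is a plain sort because scores from different batches are
    # assumed to be on the same scale.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored
```

This merge step would not be sound for a listwise model, where a document's score depends on the other documents in the same call.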

jina-reranker-v3

jina-reranker-v3 performs multilingual listwise reranking, offering state-of-the-art performance with higher precision for RAG and agent-driven workflows.

- Lightweight, production-friendly architecture: A ~0.6B parameter listwise reranker optimized for low-latency inference and efficient deployment in production settings.

- Strong multilingual performance: Benchmarks show that v3 delivers state-of-the-art multilingual performance, outperforms much larger alternatives, and maintains stable top-k rankings under permutation.

- Cost-efficient, cross-document reranking: Unlike v2, v3 reranks up to 64 documents together in a single inference call, reasoning over relationships across the full candidate set to improve ordering when results are similar or overlapping. By batching candidates instead of scoring them individually, v3 significantly reduces inference usage, making it a strong fit for RAG and agentic workflows with defined top-k results.

More models are on the way. EIS continues to expand with models optimized for candidate reranking, retrieval, and agentic reasoning. Next up is jina-reranker-m0 for multimodal reranking, followed closely by frontier models from OpenAI, Google and Anthropic.

Get started

You can start using jina-reranker-v2-base-multilingual on EIS in just a few steps.

Create embeddings with jina-embeddings-v3
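A minimal sketch of an embedding request, assuming the standard Elasticsearch inference API path for the `text_embedding` task type. The endpoint ID is a placeholder you would replace with the ID of your jina-embeddings-v3 endpoint on EIS.

```python
import json


def build_embedding_request(inference_id, texts):
    """Return (method, path, body) for a text_embedding inference call."""
    texts = list(texts)
    if not texts:
        raise ValueError("at least one input text is required")
    path = f"/_inference/text_embedding/{inference_id}"
    return "POST", path, {"input": texts}


# "my-jina-embeddings-endpoint" is a hypothetical endpoint ID.
method, path, body = build_embedding_request(
    "my-jina-embeddings-endpoint", ["The ocean is a large body of water."]
)
print(method, path)
print(json.dumps(body, indent=2))
```

The tuple can be sent with any HTTP client that is authenticated against your cluster.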

The response:

Rerank with jina-reranker-v2-base-multilingual

Perform inference:
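A minimal sketch of a rerank request, assuming the standard inference API path for the `rerank` task type. The endpoint ID is a placeholder, and the query wording is an approximation of the example discussed in this section.

```python
import json


def build_rerank_request(inference_id, query, documents):
    """Return (method, path, body) for a rerank inference call."""
    documents = list(documents)
    if not documents:
        raise ValueError("at least one document is required")
    path = f"/_inference/rerank/{inference_id}"
    # The rerank task takes the query plus the candidate texts as "input".
    return "POST", path, {"query": query, "input": documents}


# "my-jina-reranker-v2-endpoint" is a hypothetical endpoint ID.
method, path, body = build_rerank_request(
    "my-jina-reranker-v2-endpoint",
    "large body of water",
    ["puddle", "ocean", "cup of tea"],
)
print(method, path)
print(json.dumps(body, indent=2))
```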

The response:

The response contains the ranked list of inputs sorted by relevance score. In this example, the model identifies "ocean" as the most relevant match for a large body of water, assigning it the highest score while correctly ranking "puddle" and "cup of tea" lower.
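To consume such a response in application code, a small helper along these lines may be useful. It assumes the response carries a `rerank` array whose entries hold an `index` into the original input list and a `relevance_score`; check the shape against the response your cluster actually returns.

```python
def ranked_documents(response, documents):
    """Map rerank entries back to the input texts, best match first.

    Assumes each entry has "index" (position in the original input
    list) and "relevance_score".
    """
    entries = sorted(
        response["rerank"],
        key=lambda entry: entry["relevance_score"],
        reverse=True,
    )
    return [(documents[e["index"]], e["relevance_score"]) for e in entries]
```

Sorting explicitly keeps the helper correct even if the entries arrive in a different order, and looking texts up by `index` avoids depending on the response echoing the document text.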

Rerank with jina-reranker-v3

Perform inference:
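A minimal sketch of the equivalent request for jina-reranker-v3, again assuming the standard `rerank` inference path and a placeholder endpoint ID. Because v3 reranks up to 64 documents per call, the helper keeps only the first 64 candidates, on the assumption that they are already ordered by first-stage relevance.

```python
import json

MAX_LISTWISE_DOCS = 64  # per-call document limit stated for jina-reranker-v3


def build_listwise_rerank_request(inference_id, query, documents,
                                  max_docs=MAX_LISTWISE_DOCS):
    """Return (method, path, body) for a single listwise rerank call."""
    # Keep the top of the first-stage ranking; anything past the limit
    # is dropped rather than split across calls, since listwise scores
    # from separate calls are not assumed to be comparable.
    window = list(documents)[:max_docs]
    if not window:
        raise ValueError("at least one document is required")
    path = f"/_inference/rerank/{inference_id}"
    return "POST", path, {"query": query, "input": window}


# "my-jina-reranker-v3-endpoint" is a hypothetical endpoint ID.
method, path, body = build_listwise_rerank_request(
    "my-jina-reranker-v3-endpoint",
    "mountain range",
    ["pebble", "The Swiss Alps", "a steep hill"],
)
print(method, path)
print(json.dumps(body, indent=2))
```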

The response:

Similar to jina-reranker-v2-base-multilingual, the response provides a prioritized list of the inputs sorted by relevance. In this example, the model identifies "The Swiss Alps" as the most relevant match for "mountain range," compared to "pebble" and "a steep hill."

However, a key difference is that jina-reranker-v3 is a listwise reranker. Unlike jina-reranker-v2-base-multilingual, which scores document-query pairs individually, jina-reranker-v3 processes all inputs simultaneously, enabling rich cross-document interactions before determining the final ranking.

What’s new in EIS

EIS via Cloud Connect brings EIS to self-managed clusters, allowing developers to access its GPU fleet to prototype and ship RAG, semantic search, and agent workloads without needing to procure GPU capacity on their self-managed clusters. Platform teams gain hybrid flexibility by keeping data and indexing on-prem while scaling GPU inference in Elastic Cloud when needed.

What’s next

semantic_text fields will soon default to jina-embeddings-v3 on EIS, providing built-in inference at ingestion time and making it easier to adopt multilingual search without additional configuration.
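Until that default is in place, a `semantic_text` field can point at an inference endpoint explicitly. The sketch below builds such an index mapping; the field name and endpoint ID are placeholders, and omitting `inference_id` leaves the choice to whatever default your cluster applies.

```python
def build_semantic_text_mapping(field_name, inference_id=None):
    """Build an index body with one semantic_text field.

    When inference_id is None, the field relies on the cluster's
    default endpoint for semantic_text.
    """
    field = {"type": "semantic_text"}
    if inference_id is not None:
        field["inference_id"] = inference_id
    return {"mappings": {"properties": {field_name: field}}}


# "content" and "my-jina-embeddings-endpoint" are hypothetical names.
mapping = build_semantic_text_mapping("content", "my-jina-embeddings-endpoint")
print(mapping)
```

The resulting dictionary can be sent as the body of a `PUT /<index>` request when creating the index.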

Try it out

With Jina AI models on EIS, you can build multilingual, high-precision retrieval pipelines without managing models, GPUs, or infrastructure. You get fast dense retrieval, accurate reranking, and tight integration with Elasticsearch’s relevance stack, all in one platform.

Whether you’re building RAG systems, search, or agentic workflows that need reliable context, Elastic now gives you high-performance models out of the box and the operational simplicity to move from prototype to production with confidence.

All Elastic Cloud trials have access to the Elastic Inference Service. Try it now on Elastic Cloud Serverless and Elastic Cloud Hosted.