
Today, we are excited to introduce jina-embeddings-v3 on Elastic Inference Service (EIS), enabling fast multilingual dense retrieval directly in Elasticsearch. jina-embeddings-v3 is the first Jina AI model available on EIS, with more to follow soon.

Jina AI, which recently joined Elastic via acquisition, is a leader in open-source multilingual and multimodal embeddings, rerankers, and small language models. Jina brings deep expertise in search foundation models that help developers build high-quality retrieval and RAG systems across text, images, code, and long multilingual content.

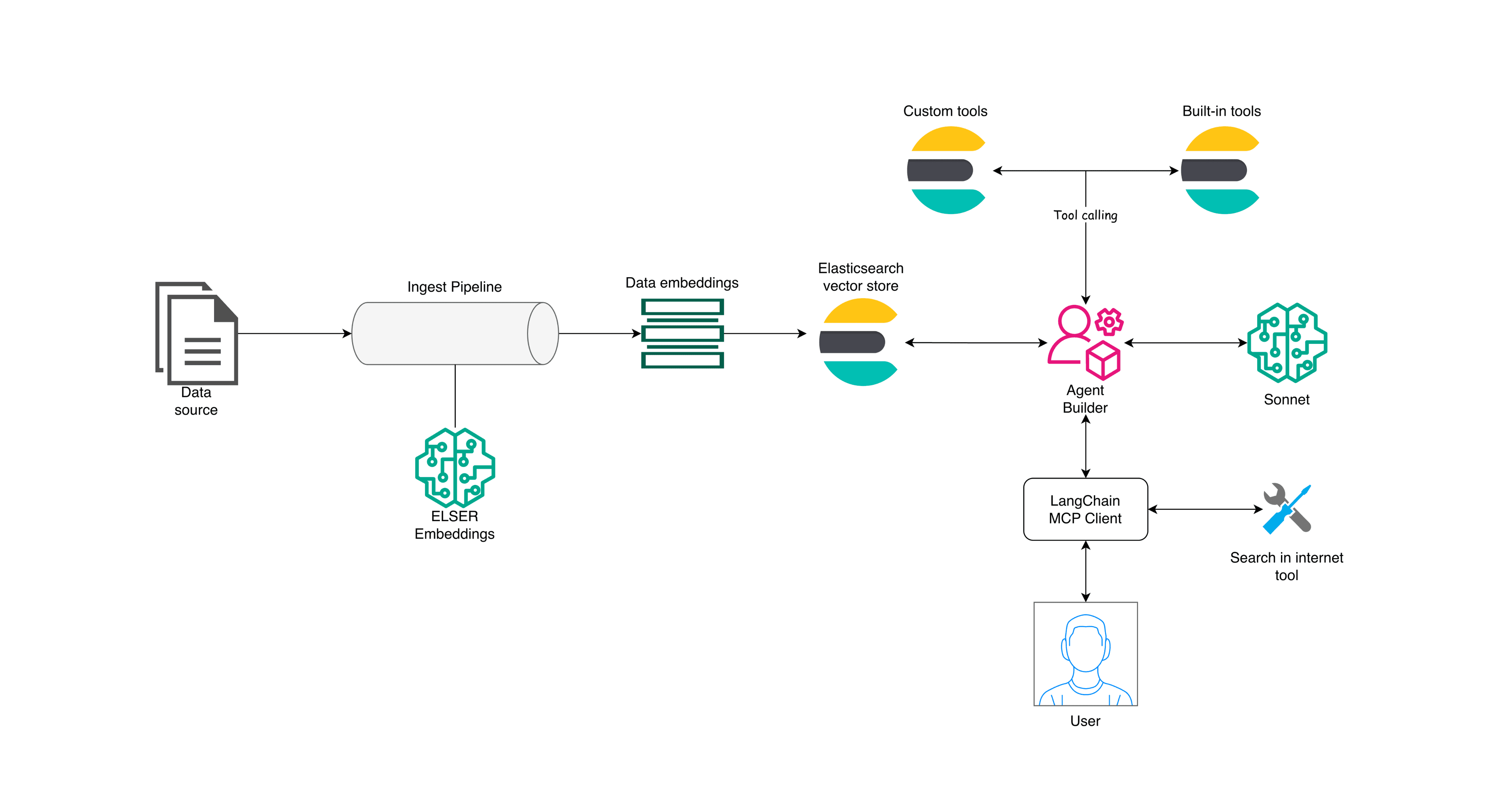

Elastic Inference Service (EIS) makes it easy for developers to add fast, high-quality, and reliable semantic retrieval to search, RAG, and agentic applications, with predictable, consumption-based pricing. EIS offers fully managed, GPU-powered inference with ready-to-use models and no additional setup or hosting complexity.

jina-embeddings-v3 supports high-quality multilingual retrieval, long-context inputs, and task-tuned modes for RAG and agents. It gives developers fast dense embeddings across a broad range of languages without the operational overhead of hosting and scaling the model themselves.
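In dense retrieval, each text becomes a fixed-length vector, and documents are ranked by how close their vectors are to the query's vector. The brute-force sketch below ranks documents by cosine similarity. The toy 3-dimensional vectors and the document IDs are made up for illustration and stand in for real 1024-dimensional jina-embeddings-v3 output. Elasticsearch itself runs approximate kNN search over `dense_vector` fields rather than a linear scan like this.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def top_k(
    query_vec: Sequence[float],
    doc_vecs: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Brute-force dense retrieval: score every document, keep the k best."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors (hypothetical). With a multilingual model, an English passage and a
# German passage with the same meaning are meant to land close together like these two.
docs = {
    "doc_en": [0.9, 0.1, 0.0],
    "doc_de": [0.8, 0.2, 0.1],
    "doc_unrelated": [0.0, 1.0, 0.0],
}
query = [1.0, 0.0, 0.0]
```

Here `top_k(query, docs, k=2)` ranks `doc_en` first and `doc_de` second, while `doc_unrelated` scores 0.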

Why jina-embeddings-v3?

jina-embeddings-v3 is a text embedding model that supports 32 languages and inputs of up to 8192 tokens. It delivers high relevance at lower cost, with GPU-powered inference through EIS.

Key capabilities

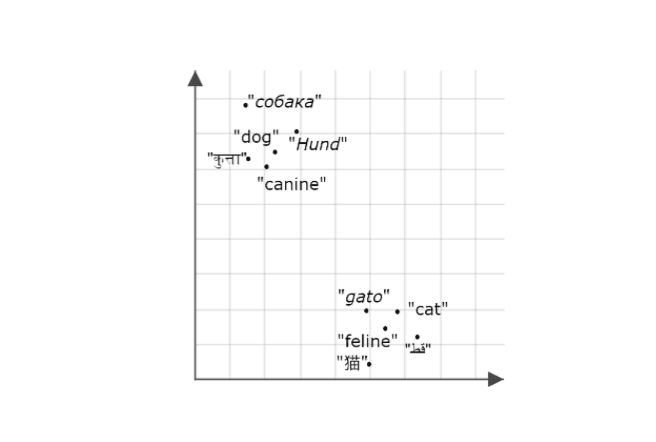

- Multilinguality: Closes the language gap and aligns meaning across 32 languages, including Arabic, Bengali, Chinese, Danish, Dutch, English, Finnish, French, Georgian, German, Greek, Hindi, Indonesian, Italian, Japanese, Korean, Latvian, Norwegian, Polish, Portuguese, Romanian, Russian, Slovak, Spanish, Swedish, Thai, Turkish, Ukrainian, Urdu, and Vietnamese.

- Parameter-efficiency: With only 570M parameters, it achieves performance comparable to much larger LLM-based embedding models at lower cost.

- Dimensionality control: Produces 1024-dimensional embeddings by default. With Matryoshka representation support, developers can shrink embeddings to as few as 32 dimensions, balancing accuracy, latency, and storage to fit their needs.

- Task-specific optimization: Features task-specific Low-Rank Adaptation (LoRA) adapters, enabling it to generate high-quality embeddings for various tasks, including query-document retrieval, clustering, classification, and text matching.
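Matryoshka-style embeddings concentrate the most important information in the leading dimensions, so a shorter embedding can be obtained by keeping a prefix of the vector and re-normalizing it. This post does not say how EIS exposes dimension control, so the sketch below assumes you truncate vectors client-side. The helper names are hypothetical.

```python
import math
from typing import List, Sequence


def truncate_embedding(vec: Sequence[float], dims: int) -> List[float]:
    """Matryoshka-style truncation: keep the first `dims` values, then L2-normalize."""
    if not 0 < dims <= len(vec):
        raise ValueError(f"dims must be between 1 and {len(vec)}, got {dims}")
    head = list(vec[:dims])
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in head]


def float32_bytes(dims: int) -> int:
    """Raw storage for one float32 vector (4 bytes per dimension), ignoring index overhead."""
    return 4 * dims
```

At float32 precision, a 1024-dimensional vector takes 4,096 bytes of raw storage and a 32-dimensional one takes 128 bytes, a 32x reduction before any quantization. If you index truncated vectors, the `dense_vector` field's `dims` must match the truncated size, and query vectors must be truncated the same way so that both sides live in the same space.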

Get started
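A minimal sketch of generating embeddings through the Elasticsearch inference API (`POST _inference/text_embedding/<inference_id>`) using only the Python standard library. The endpoint ID `.jina-embeddings-v3`, the deployment URL, and the API key are assumptions and placeholders; list the endpoints available in your deployment with `GET _inference/_all` before relying on any name. The response parsing assumes the `{"text_embedding": [{"embedding": [...]}]}` shape returned for `text_embedding` tasks.

```python
import json
import urllib.request

# Placeholders (assumptions): substitute your own deployment URL and API key.
ES_URL = "https://YOUR-DEPLOYMENT.example.com"
API_KEY = "YOUR_API_KEY"
# Assumed ID of the preconfigured EIS endpoint; verify it with GET _inference/_all.
INFERENCE_ID = ".jina-embeddings-v3"


def build_embedding_request(base_url, api_key, inference_id, texts):
    """Build (without sending) a POST _inference/text_embedding/<inference_id> request."""
    url = f"{base_url.rstrip('/')}/_inference/text_embedding/{inference_id}"
    body = json.dumps({"input": list(texts)}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"ApiKey {api_key}",
        },
    )


def extract_embeddings(response_body):
    """Return one vector per input string from a text_embedding response body."""
    return [item["embedding"] for item in response_body["text_embedding"]]


def embed(texts):
    """Send the request and parse the vectors (needs network access and valid credentials)."""
    request = build_embedding_request(ES_URL, API_KEY, INFERENCE_ID, texts)
    with urllib.request.urlopen(request) as response:
        return extract_embeddings(json.load(response))
```

Against a live deployment, `embed(["How do I reset my password?", "Wie setze ich mein Passwort zurück?"])` should return one vector per input string, each with 1024 dimensions at the default setting. Keeping request construction separate from sending makes the request easy to inspect or reuse with another HTTP client.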


What’s next

Alongside new models, EIS continues to evolve to support more users and simplify semantic search across environments.

Cloud Connect for EIS: Cloud Connect for EIS will soon bring EIS to self-managed environments, reducing operational overhead and enabling hybrid architectures and scaling where it works best for you.

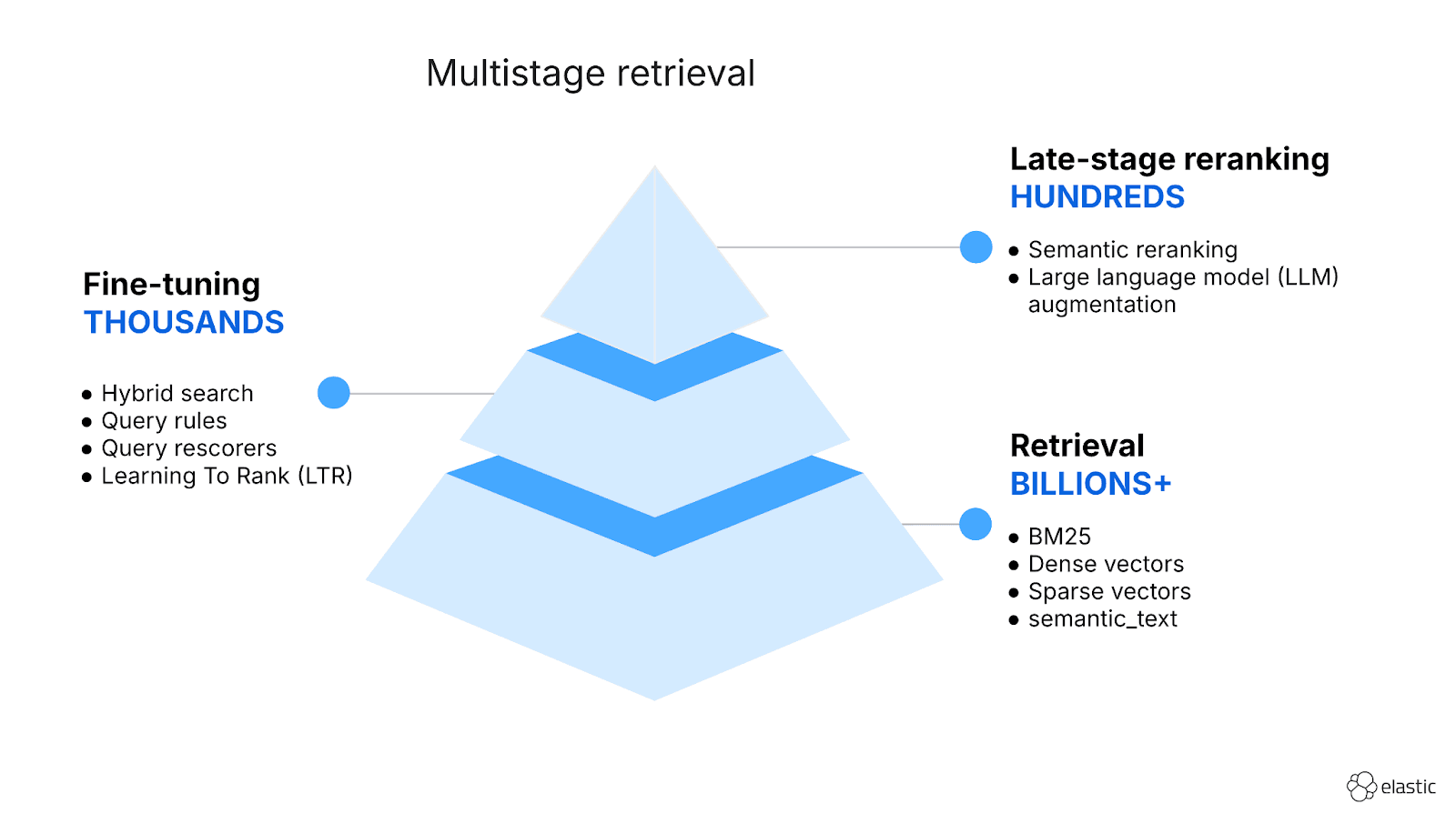

semantic_text defaults to jina-embeddings-v3 on EIS: semantic_text currently uses ELSER as the embedding model behind the scenes, but will default to the jina-embeddings-v3 endpoint on EIS in the near future. With this change, semantic_text fields will get multilingual dense embeddings at ingest time by default, making it easier to adopt multilingual search without additional configuration.
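Until that default changes, a `semantic_text` field can be bound to a specific endpoint through its `inference_id` mapping parameter, and queried with the `semantic` query. The sketch below builds both request bodies as Python dicts. The endpoint ID `.jina-embeddings-v3` and the index and field names are assumptions for illustration.

```python
import json

# Assumed endpoint ID; confirm the exact name with GET _inference/_all.
JINA_INFERENCE_ID = ".jina-embeddings-v3"


def semantic_index_body(field: str, inference_id: str) -> dict:
    """Create-index body with a semantic_text field bound to an explicit inference endpoint."""
    return {
        "mappings": {
            "properties": {
                field: {"type": "semantic_text", "inference_id": inference_id}
            }
        }
    }


def semantic_query_body(field: str, text: str) -> dict:
    """Search body using the `semantic` query against a semantic_text field."""
    return {"query": {"semantic": {"field": field, "query": text}}}


if __name__ == "__main__":
    # Hypothetical index and field names, printed in Kibana Dev Tools style.
    print("PUT my-multilingual-index")
    print(json.dumps(semantic_index_body("content", JINA_INFERENCE_ID), indent=2))
    print("GET my-multilingual-index/_search")
    print(json.dumps(semantic_query_body("content", "¿Cómo funciona la búsqueda semántica?"), indent=2, ensure_ascii=False))
```

Because embeddings are generated at ingest time for documents and at query time for the search text, documents written in one language can match queries written in another.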

More models: We’re expanding the EIS model catalog to meet the rising inference demands of our customers. In the coming months, we’ll introduce new models that support an even broader set of search and inference workloads. Hot on the heels of jina-embeddings-v3, the next models to follow are jina-reranker-v2-base-multilingual and jina-reranker-v3. Both Jina AI models greatly improve precision through multilingual reranking for RAG and AI agents.

Conclusion

With jina-embeddings-v3 on EIS, you can build multilingual, high-precision retrieval pipelines without managing models, GPUs, or infrastructure. You get fast dense retrieval and tight integration with Elasticsearch’s relevance stack, all in one platform.

Whether you are building global RAG systems, search, or agentic workflows that need reliable context, Elastic now gives you a high-performance model out of the box and the operational simplicity to move from prototype to production with confidence.

All Elastic Cloud trials have access to the Elastic Inference Service. Try it now on Elastic Cloud Serverless and Elastic Cloud Hosted.