
This short article covers uploading structured data to an Elastic index, then converting a plain English query into an Elastic Query DSL statement that searches for specific criteria using filters and ranges. The full code is in this GitHub repo.

To get started, run the following command to install dependencies (Elasticsearch and OpenAI):

You will need an Elastic Cloud deployment and an Azure OpenAI deployment to follow along with this notebook. For more details, refer to this readme.

If you have both, fill in env.example as shown below and rename it to .env:
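The actual variable names come from the repo's env.example. As a minimal sketch, assuming a plain KEY=VALUE file and hypothetical names such as ELASTIC_CLOUD_ID or AZURE_OPENAI_API_KEY, a dependency-free loader could look like this (the python-dotenv package does the same job more robustly):

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env", override: bool = False) -> dict[str, str]:
    """Parse a simple KEY=VALUE .env file and export the values to os.environ.

    Blank lines and '#' comments are skipped, and matching single or double
    quotes around a value are stripped. This is a simplified parser, not a
    full implementation of the dotenv format.
    """
    values: dict[str, str] = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key] = value
        # Keep variables already set in the shell unless told otherwise
        if override or key not in os.environ:
            os.environ[key] = value
    return values


def require(*names: str) -> dict[str, str]:
    """Fail early with a clear message if any required variable is missing."""
    missing = [n for n in names if not os.environ.get(n)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {n: os.environ[n] for n in names}
```

Calling `require(...)` right after `load_env_file()` surfaces a missing key before any network call is attempted.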

Finally, open the main.ipynb notebook and execute each cell in order. Be aware that the code will upload about 200,000 datapoints to your chosen Elastic index, roughly 400MB.
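For an upload of this size, the Python client's `elasticsearch.helpers.bulk` helper is the usual tool. As a sketch, assuming each profile is a JSON-serializable dict, you can feed it a lazy generator of actions so the dataset is sent in chunks rather than one huge request:

```python
import json
from typing import Iterable, Iterator


def to_bulk_actions(docs: Iterable[dict], index: str) -> Iterator[dict]:
    """Wrap each document in the action format accepted by elasticsearch.helpers.bulk.

    Each action names the target index and carries the document under _source.
    Omitting _id lets Elasticsearch assign IDs automatically.
    """
    for doc in docs:
        yield {"_index": index, "_source": doc}


def approx_payload_bytes(docs: Iterable[dict]) -> int:
    """Rough upload size: UTF-8 length of each document serialized as JSON.

    The on-disk index size will differ because of mappings and compression.
    """
    return sum(len(json.dumps(doc).encode("utf-8")) for doc in docs)
```

With an `Elasticsearch` client `es`, you would then call something like `helpers.bulk(es, to_bulk_actions(profiles, "profiles"), chunk_size=1000)`, where the index name `profiles` is hypothetical.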

The flow explored in this article

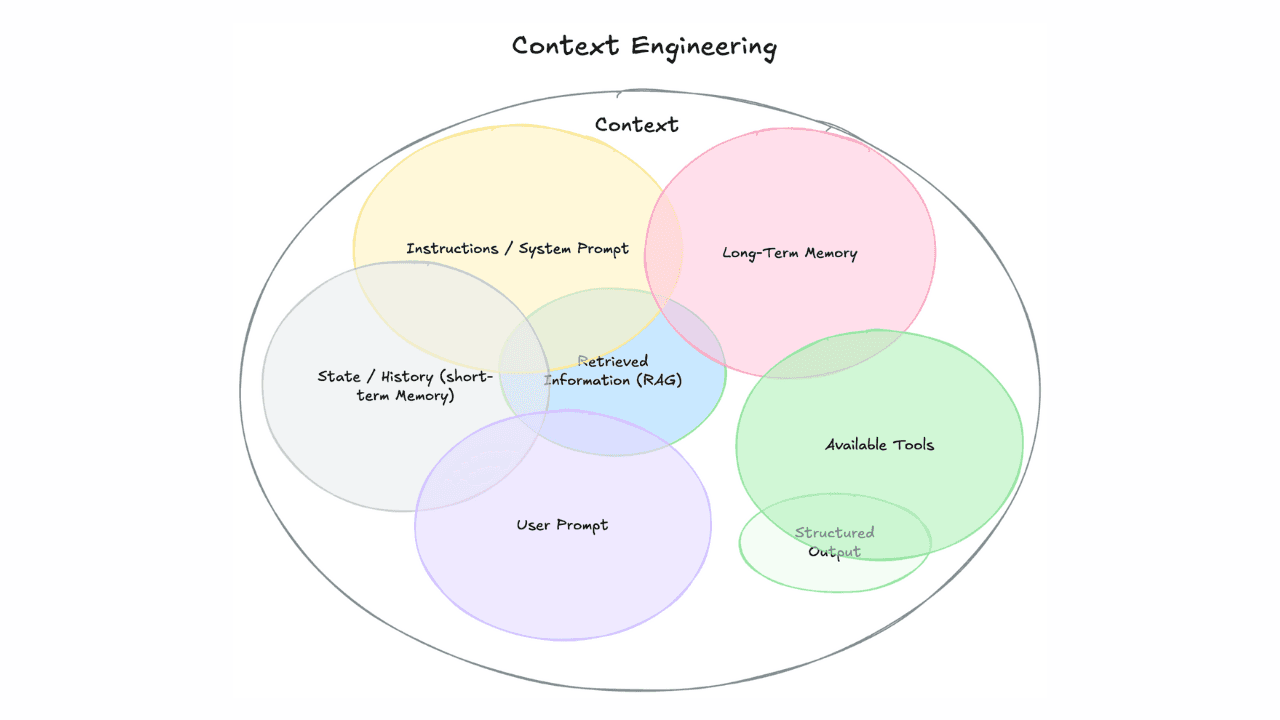

Problem statement: Structured data limitation in RAG

RAG and Hybrid Search are all the RAGe these days. The prospect of uploading unstructured documents to an index and then querying it, with machine learning present at every step of the process, turns out to be a versatile idea with an enormous number of use-cases.

Now, an enormous number is not infinite. Versatile as RAG is, there are occasions where it is either overkill or simply poorly suited to the data. One such case is a structured dataset containing datapoints like this one:

To me, vector-based RAG doesn't make much sense for structured data like this. To attempt it, you'd probably need to embed the field values, which raises a couple of issues.

First off, not all of those individual fields are equal. Depending on the use case, certain fields are of greater interest and should be weighted more highly (blood_type, age, weight, and deceased, perhaps, if you work in the medical field). Weighting is possible if you embed every field separately and then take a weighted sum of the vectors, but this multiplies the embedding compute cost by the number of fields: about 37x in this case. If you skip the weighting, the relevant fields are buried under irrelevant ones, and performance will likely be poor. Bad choice.
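To make the cost argument concrete, here is a sketch of the per-field weighting scheme described above. The `embed` function is a hypothetical stand-in for a real embedding model (a toy hash-based vector so the example runs offline); the point is that it runs once per field, so a 37-field record costs 37 embedding calls instead of one.

```python
import hashlib
import math


def embed(text: str, dim: int = 8) -> list[float]:
    """Hypothetical toy stand-in for an embedding model: a hash-based unit vector.

    A real (and billable) model call would go here.
    """
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    vec = [b / 255.0 - 0.5 for b in digest[:dim]]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def weighted_record_embedding(record: dict, weights: dict[str, float], default_weight: float = 1.0):
    """Embed every field as "field: value" and combine the vectors by weighted sum.

    Returns the combined vector and the number of embedding calls made,
    which always equals the number of fields in the record.
    """
    combined = None
    calls = 0
    for field, value in record.items():
        vec = embed(f"{field}: {value}")
        calls += 1
        w = weights.get(field, default_weight)
        if combined is None:
            combined = [w * v for v in vec]
        else:
            combined = [c + w * v for c, v in zip(combined, vec)]
    return combined, calls
```

Upweighting `blood_type` or `age` only changes the coefficients; the number of model calls still scales with the field count.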

Second, even if you did embed each field in each datapoint, what exactly are you querying with? A plain English question like "Adult men over the age of 25"? Vectors can't filter for specific criteria like this: they work purely on statistical correlation, which is hardly explicit. I'd bet money on such queries giving poor results, if they give any at all.

Sample data

The data we're using doesn't include the profiles of real people: I wrote a data generator that creates fake Singaporeans. It's a series of random choice statements, each picking from a large list of options for occupation, country_of_birth, addresses, and so on, plus a few functions that generate convincing-looking passport, phone, and NRIC numbers. The generator isn't particularly interesting in and of itself, so I won't discuss it here, but it's in the relevant notebook. Feel free to check it out! I generated 352,500 profiles for this test.
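Purely to illustrate the "series of random choice statements" idea, here is a minimal sketch. The option lists and field names are hypothetical, and the NRIC-shaped string (prefix letter, seven digits, suffix letter) uses a random suffix rather than a valid checksum, so it only looks plausible, much like the article's goal of convincing-looking numbers.

```python
import random
import string

# Hypothetical option lists; a real generator would draw from much larger lists.
OCCUPATIONS = ["software engineer", "nurse", "teacher", "accountant", "chef"]
COUNTRIES = ["Singapore", "Malaysia", "India", "China", "Philippines"]
BLOOD_TYPES = ["A+", "A-", "B+", "B-", "AB+", "AB-", "O+", "O-"]


def fake_nric(rng: random.Random) -> str:
    """NRIC-shaped string: prefix letter, 7 digits, random suffix letter (no checksum)."""
    digits = "".join(rng.choice(string.digits) for _ in range(7))
    return f"{rng.choice('STFG')}{digits}{rng.choice(string.ascii_uppercase)}"


def fake_phone(rng: random.Random) -> str:
    """Eight-digit mobile-style number starting with 8 or 9, with the +65 prefix."""
    return f"+65 {rng.choice('89')}{rng.randint(0, 9_999_999):07d}"


def fake_profile(rng: random.Random) -> dict:
    """One fake person built entirely from independent random choices."""
    return {
        "nric": fake_nric(rng),
        "phone": fake_phone(rng),
        "age": rng.randint(18, 90),
        "blood_type": rng.choice(BLOOD_TYPES),
        "occupation": rng.choice(OCCUPATIONS),
        "country_of_birth": rng.choice(COUNTRIES),
        "deceased": rng.random() < 0.05,
    }
```

Passing a seeded `random.Random` (for example `[fake_profile(random.Random(42)) ...]` style loops over one shared `rng`) keeps the dataset reproducible between runs.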

The generated dataset in Elastic Cloud

Solution: Using LLMs to write Elastic Query DSL & search structured data

Traditional search, with its explicit filters and ranges, is a more efficient choice here. Elastic is a fully featured search engine with plenty of capabilities that go well beyond vectors, an aspect of search that has recently been overshadowed by discussions around RAG and vectors. I think this is a missed opportunity for serious gains in relevance (search engine performance), because filters can remove large portions of irrelevant data (which can add statistical noise and worsen your results) long before costly vector search comes into play.
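For contrast with the vector approach, this is roughly what "adult men over the age of 25" looks like as explicit criteria. It's a sketch: the `gender` field and its `"male"` value are assumptions about the schema (and `term` assumes a keyword mapping), while `range` and `term` inside a `bool` `filter` are standard Query DSL. Filter clauses don't affect scoring and can be cached, which is part of why pre-filtering is cheap.

```python
def men_over(age: int) -> dict:
    """Build a Query DSL body matching men strictly older than `age`.

    Both clauses sit in filter context: they include or exclude documents
    without contributing to relevance scores.
    """
    return {
        "query": {
            "bool": {
                "filter": [
                    {"range": {"age": {"gt": age}}},
                    {"term": {"gender": "male"}},  # hypothetical field and value
                ]
            }
        }
    }
```

With the 8.x Python client, such a body can be passed as keyword arguments, e.g. `es.search(index="profiles", **men_over(25))`, with a hypothetical index name.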

However, delivery and form factor are significant hurdles. End users can't be expected to learn query languages like SQL or Elastic Query DSL, especially if they are non-technical to begin with. Ideally, we want to preserve the idea of users querying data in plain old natural language, giving them the ability to search your database without fiddling with filters or frantically googling how to write a SQL or Query DSL query.

In the pre-LLM era, the solution might have been a set of buttons, each tied to a specific component of a query, so that users could build a complex query by selecting from a list of options. The catch is that this eventually balloons into a terribly ugly UI filled with masses of buttons. Wouldn't it be nicer to just have a chat box to search with?

Yep, we're going to write a natural language query, pass it to an LLM, have it generate an Elastic query, and use that to search our data.

Prompt design

The idea is straightforward; the only real challenge is writing the prompt. It should have a few key characteristics:

1. Document schema

It should contain the data schema, so the LLM knows which fields are valid and can be queried, and which cannot. Like this:

Note that for certain fields, like blood_type, religion, and fluency, I explicitly define the valid values to ensure consistency. For other fields, such as languages or street names, there are so many possible values that listing them would make the prompt balloon, so the best option is to trust the LLM.
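As a hedged sketch of that idea, here is one way to render a schema into prompt text, listing allowed values only for small, enumerable fields. The field list is illustrative: the blood-type values are the eight standard ABO/Rh types, but the religion and fluency lists are placeholders, not the ones from the actual prompt.

```python
# Illustrative schema; "values" is only given where the set of options is small.
SCHEMA = {
    "age": {"type": "integer"},
    "blood_type": {"type": "keyword", "values": ["A+", "A-", "B+", "B-", "AB+", "AB-", "O+", "O-"]},
    "deceased": {"type": "boolean"},
    "occupation": {"type": "text"},
    "religion": {"type": "keyword", "values": ["Buddhism", "Christianity", "Islam", "Hinduism", "None"]},  # placeholder
    "languages": {
        "type": "nested",
        "properties": {
            "language": {"type": "keyword"},  # too many options to enumerate
            "fluency": {"type": "keyword", "values": ["basic", "conversational", "fluent", "native"]},  # placeholder
        },
    },
}


def render_schema(schema: dict, indent: int = 0) -> str:
    """Render the schema as an indented bullet list for the system prompt."""
    lines = []
    pad = "  " * indent
    for name, spec in schema.items():
        line = f"{pad}- {name} ({spec['type']})"
        if "values" in spec:
            line += ": one of " + ", ".join(spec["values"])
        lines.append(line)
        if "properties" in spec:
            lines.append(render_schema(spec["properties"], indent + 1))
    return "\n".join(lines)
```

Keeping the schema as data rather than hand-written prompt text makes it easy to keep the prompt in sync with the index mapping.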

2. Examples

It should contain example queries and guidelines, which reduce the odds of the LLM producing a nonsensical query that doesn't work.
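Examples can be rendered the same way. In this minimal sketch with a hypothetical example pair, each expected query is serialized with `json.dumps`, which guarantees the examples themselves are valid JSON and should nudge the model toward emitting valid JSON too.

```python
import json

# Hypothetical example pair; the real prompt's examples may differ.
EXAMPLES = [
    (
        "People older than 60 with blood type AB+",
        {"query": {"bool": {"filter": [
            {"range": {"age": {"gt": 60}}},
            {"term": {"blood_type": "AB+"}},
        ]}}},
    ),
]


def render_examples(examples: list[tuple[str, dict]]) -> str:
    """Format (question, query) pairs as few-shot examples for the prompt."""
    blocks = []
    for question, query in examples:
        blocks.append(f"Question: {question}\nQuery: {json.dumps(query)}")
    return "\n\n".join(blocks)
```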

3. Special cases

It should contain a few special situations where slightly more advanced queries may be called for:
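A plausible example of such a case (not necessarily one from the original prompt) is the nested languages field. Because each language entry is its own object, a condition like "speaks Tamil fluently" must match language and fluency within the same entry; with a plain object array, Elasticsearch flattens the entries and could match "Tamil" from one entry and "fluent" from another. A sketch, assuming `languages` is mapped as `nested` with hypothetical `language` and `fluency` subfields:

```python
from typing import Optional


def speaks(language: str, fluency: Optional[str] = None) -> dict:
    """Nested query requiring a single languages entry to satisfy all conditions."""
    must = [{"match": {"languages.language": language}}]
    if fluency is not None:
        must.append({"term": {"languages.fluency": fluency}})
    return {
        "query": {
            "nested": {
                "path": "languages",
                "query": {"bool": {"must": must}},
            }
        }
    }
```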

4. Leniency and coverage

It should contain instructions to avoid over-reliance on exact matches. We want to encourage fuzziness and partial matches, and avoid boolean AND statements. This counteracts possible hallucinations, which might otherwise produce unworkable or unreasonable criteria that end in an empty result list.
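In Query DSL terms, that leniency roughly means preferring `should` clauses with `fuzziness` over strict `must` clauses, and setting `minimum_should_match` so a document only has to satisfy some of the criteria. The helper below is a hypothetical sketch of that shape, not the prompt's actual instructions:

```python
def lenient_query(criteria: dict[str, str], minimum_should_match: str = "50%") -> dict:
    """Turn field -> text criteria into fuzzy, partially required should clauses.

    Documents matching more criteria score higher, and one hallucinated or
    over-specific criterion is less likely to empty the result list.
    """
    should = [
        {"match": {field: {"query": text, "fuzziness": "AUTO"}}}
        for field, text in criteria.items()
    ]
    return {"query": {"bool": {"should": should, "minimum_should_match": minimum_should_match}}}
```

The trade-off is precision: a lower `minimum_should_match` returns more loosely related documents, so the right value depends on how much noise users tolerate.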

Once we have a prompt that fulfils all these criteria, we're ready to test it out!

Test procedure

We'll define a class to call our Azure OpenAI LLM:
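As a minimal sketch of such a class, assuming openai>=1.0 (where `AzureOpenAI` and `client.chat.completions.create` are the library's chat API): the class name, method names, environment variable names, and API version string are my own assumptions, and the client is injected so the class can be tested without network access. On Azure, `model` is the name of your deployment rather than the underlying model.

```python
import os
from typing import Any


class QueryGenerator:
    """Thin wrapper that sends a system prompt plus a user query to a chat model."""

    def __init__(self, client: Any, deployment: str, temperature: float = 0.0):
        self.client = client  # e.g. an openai.AzureOpenAI instance
        self.deployment = deployment  # Azure deployment name
        self.temperature = temperature  # 0 keeps query generation as repeatable as possible

    @classmethod
    def from_env(cls, deployment: str, api_version: str = "2024-02-01") -> "QueryGenerator":
        # Imported lazily so the class also works with a stub client in tests.
        from openai import AzureOpenAI

        client = AzureOpenAI(
            api_key=os.environ["AZURE_OPENAI_API_KEY"],  # hypothetical variable names
            azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],
            api_version=api_version,
        )
        return cls(client, deployment)

    def generate(self, prompt: str, query: str) -> str:
        """Return the raw text of the model's reply to `query` under `prompt`."""
        response = self.client.chat.completions.create(
            model=self.deployment,
            messages=[
                {"role": "system", "content": prompt},
                {"role": "user", "content": query},
            ],
            temperature=self.temperature,
        )
        return response.choices[0].message.content
```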

Then pass a query to the LLM along with the prompt we've just defined, like so:

This line in the prompt should let us load the LLM's response directly as a JSON object, with no further processing required:
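Even with such an instruction, models occasionally wrap JSON in a markdown code fence or add a stray sentence. A defensive parser, sketched here as a precaution rather than something the notebook necessarily does, strips a surrounding fence and falls back to the outermost braces before calling `json.loads`:

```python
import json
import re

# Matches a whole reply wrapped in a markdown fence, optionally tagged "json".
_FENCE = re.compile(r"^`{3}(?:json)?\s*(.*?)\s*`{3}$", re.DOTALL)


def parse_llm_json(text: str) -> dict:
    """Parse an LLM reply into a dict, tolerating a markdown fence or extra prose."""
    cleaned = text.strip()
    fenced = _FENCE.match(cleaned)
    if fenced:
        cleaned = fenced.group(1)
    try:
        return json.loads(cleaned)
    except json.JSONDecodeError:
        # Fall back to the span between the first '{' and the last '}'
        start, end = cleaned.find("{"), cleaned.rfind("}")
        if start == -1 or end <= start:
            raise
        return json.loads(cleaned[start : end + 1])
```

If the reply contains no JSON object at all, the original `JSONDecodeError` propagates, which is a reasonable signal to retry or show the user an error.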

Now let's do some testing and see how it performs:
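Before a generated query reaches the cluster, a cheap sanity check helps. This sketch uses a hypothetical allow-list of top-level keys and an arbitrary size cap, then runs the query through the client's `search` method, which in the 8.x Python client accepts body parameters such as `query`, `size`, and `sort` as keyword arguments:

```python
from typing import Any

# Hypothetical allow-list of top-level body keys we expect the LLM to produce.
ALLOWED_KEYS = {"query", "size", "sort"}


def validate_generated_query(body: dict, max_size: int = 50) -> dict:
    """Reject unexpected top-level keys and cap the number of hits requested."""
    if "query" not in body:
        raise ValueError("generated body has no 'query' clause")
    unexpected = set(body) - ALLOWED_KEYS
    if unexpected:
        raise ValueError(f"unexpected top-level keys: {sorted(unexpected)}")
    checked = dict(body)
    checked["size"] = min(int(checked.get("size", 10)), max_size)
    return checked


def run_generated_query(es: Any, index: str, body: dict) -> list[dict]:
    """Validate, search, and return only the matching documents' _source fields."""
    response = es.search(index=index, **validate_generated_query(body))
    return [hit["_source"] for hit in response["hits"]["hits"]]
```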

Test 1

First, let's test the LLM's ability to handle simple criteria and some vagueness. I'm expecting a simple age range, fuzzy matches for male and for software-related occupations, and a negative match for Singapore citizens.
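For reference, a hand-written query with the shape described above might look like this. It is an illustration, not the LLM's actual output, and the `gender`, `occupation`, `address`, and `nationality` field names and values are hypothetical:

```python
import json

# Hand-written illustration of the expected shape; hypothetical field names.
TEST_1_SHAPE = {
    "query": {
        "bool": {
            "filter": [{"range": {"age": {"gt": 25}}}],  # explicit age range
            "should": [  # fuzzy, partially required matches
                {"match": {"gender": {"query": "male", "fuzziness": "AUTO"}}},
                {"match": {"occupation": {"query": "software", "fuzziness": "AUTO"}}},
                {"match": {"address": {"query": "woodlands", "fuzziness": "AUTO"}}},
            ],
            "minimum_should_match": 2,
            "must_not": [{"match": {"nationality": "Singaporean"}}],  # negative match
        }
    }
}

print(json.dumps(TEST_1_SHAPE, indent=2))
```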

Query:

All non-Singaporean men over the age of 25 who are software people living in woodlands

Generated Elastic Query:

Results:

Okay, that's basically what we expected: only relevant results. Let's try a harder test.

Test 2

Let's try a much vaguer query. I'm expecting more explicit criteria: people with blood type O- who are deceased and were born in Singapore.
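The interesting part is the translation from vague phrasing to explicit values: "not alive currently" becomes a boolean, and "universal blood donors" becomes blood type O-. A hand-written illustration of that target (again not the LLM's output, with a hypothetical `gender` field and keyword mappings assumed for the `term` clauses):

```python
import json

# Hand-written illustration; each clause maps a vague phrase to an explicit value.
TEST_2_SHAPE = {
    "query": {
        "bool": {
            "filter": [
                {"term": {"gender": "female"}},  # "women" (hypothetical field/value)
                {"term": {"deceased": True}},  # "not alive currently"
                {"term": {"blood_type": "O-"}},  # "universal blood donors"
                {"term": {"country_of_birth": "Singapore"}},  # "born in singapore"
            ]
        }
    }
}

# Python's True serializes to JSON true, as Elasticsearch expects.
print(json.dumps(TEST_2_SHAPE, indent=2))
```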

Query:

Women who are not alive currently, who are universal blood donors born in singapore

Generated Elastic Query:

Results:

These results are exactly as expected, and vectors didn't enter the picture at any step. The natural inclination when you have a hammer is to look for nails to hit. In this case, I don't think embedding the data would have produced any benefit to justify the added cost and complexity of an embedding model.

Test 3

One last test: let's see if it can handle nested properties like the languages field.

Query:

People who speak chinese dialects

Generated Elastic Query:

Results:

Here's a significant advantage of using an LLM to define the query: various Chinese dialects were correctly added to the query without my having to define them explicitly in the prompt. Convenience!

Discussion

Not all search use-cases in the GenAI era should involve RAG and embeddings. It is straightforward to build search engines over traditional structured data with the modern convenience of plain language querying. Because the core of this approach is prompt engineering against a known schema, it isn't the best fit for searching unstructured data. Still, I believe there is a large class of use-cases waiting to be explored, where conventional structured database tables can be exposed to non-technical users for general querying. This was a difficult ask before LLMs were available for automatic query generation.

This seems to me like a bit of a missed opportunity.