Hybrid search made simple — One API. Exceptional relevance.

Elasticsearch gives you all the tools to add hybrid search through a single API, so you can quickly improve results and tune for exceptional relevance without stitching together multiple systems.

Why developers choose Elasticsearch

Get the best tools for precision, explainability, and control. Lexical search excels at structured queries, rare terms, and out-of-domain data. Semantic search adds fuzziness and recall when exact matches fall short. Control how they work together with tunable scoring, filters, and boosts.

| Lexical search | Vector search | Hybrid search |
| --- | --- | --- |
| Use BM25F scoring with full control over field weights and term boosts, no model required. | Retrieve semantically related results via dense_vector or semantic_text fields. | Combine results via reciprocal_rank_fusion or <options> in the rank API. |
| Tune relevance using combined_fields, boost, fuzziness, synonyms, and analyzers. | Bring your own embeddings or use built-in inference with ELSER, OpenAI, etc. | Use a single hybrid query with shared filters, weights, and rerank logic. |
| Get native support for geo, term, range, and ACL filters, fast and stable at scale. | ACORN-1 enables fast filtered kNN even on large datasets with filter clause support. | Use explain, profile, and the _rank_features field to understand how docs score. |

Best in class? Built right in

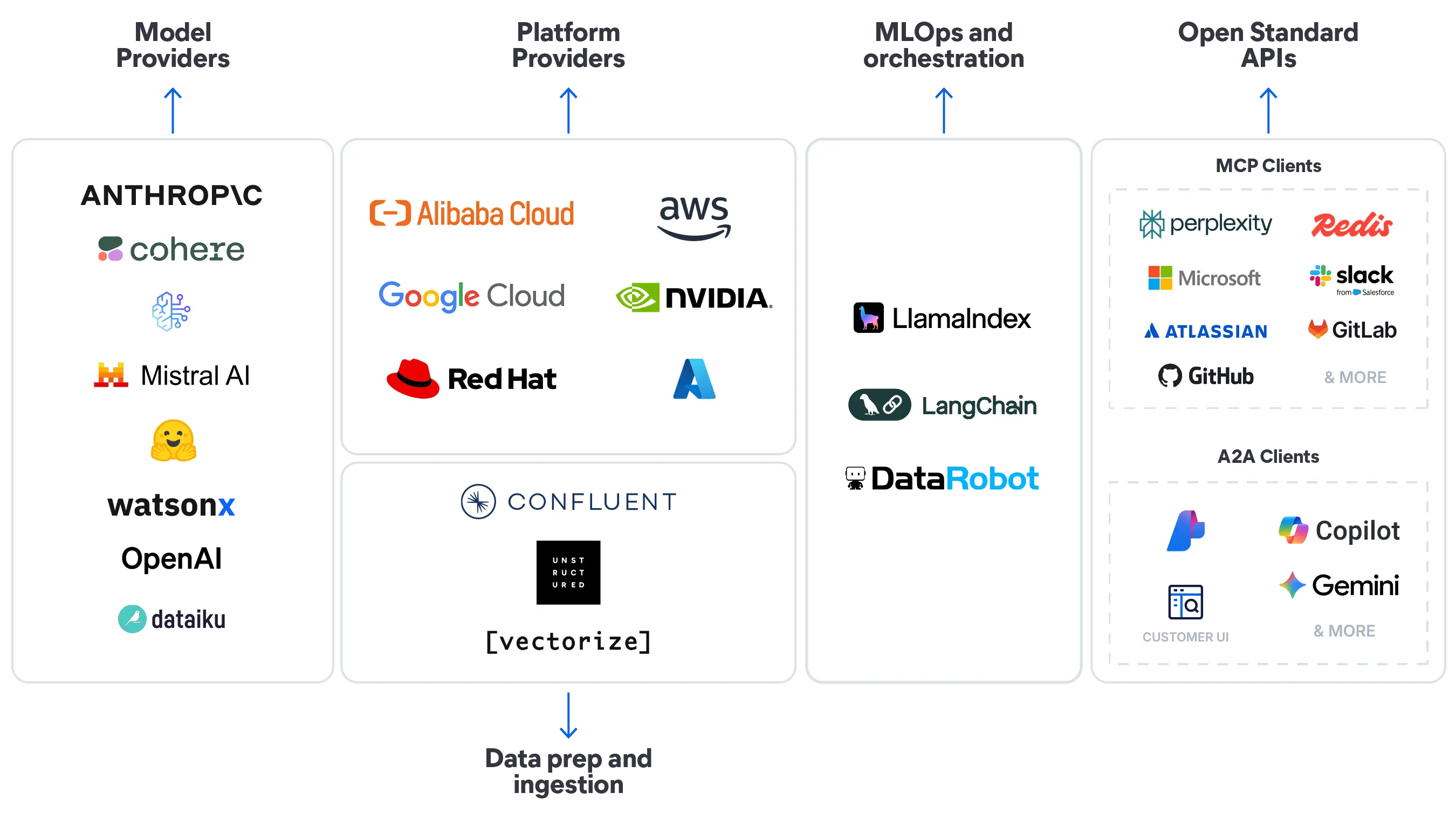

Start with Elastic's first-party ELSER and Jina AI models, built into Elasticsearch. Or plug into the models you already use through native integrations across the AI ecosystem.

Frequently asked questions

Hybrid search combines keyword (lexical) precision with vector (semantic) similarity, so users get relevant results even when queries don’t match exact text.

Lexical search is ideal for exact matches and filters. Vector search understands intent and meaning. Hybrid search lets you use both in the same query — ranking by relevance, not just match.

Retriever queries combine multiple search strategies — like match, kNN, or text_expansion — into one ranked result list using built-in rank fusion.
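As a rough sketch of what such a retriever request might look like, the Python snippet below builds a search body that fuses a lexical `match` query with a kNN query under an `rrf` retriever. The field names (`title`, `title_embedding`), the toy query vector, and the helper `build_hybrid_body` are assumptions made for illustration, and the exact parameters may differ between Elasticsearch versions.

```python
import json


def build_hybrid_body(query_text, query_vector, k=10):
    """Build a search body that fuses lexical and kNN results with RRF.

    Hypothetical helper: the field names below are assumptions, so adapt
    them to your own index mapping.
    """
    return {
        "retriever": {
            "rrf": {
                "retrievers": [
                    # Lexical leg: a standard retriever wrapping a match query.
                    {"standard": {"query": {"match": {"title": query_text}}}},
                    # Vector leg: approximate kNN over a dense_vector field.
                    {
                        "knn": {
                            "field": "title_embedding",
                            "query_vector": query_vector,
                            "k": k,
                            "num_candidates": k * 10,
                        }
                    },
                ],
                # How many top hits from each leg take part in the fusion.
                "rank_window_size": 50,
            }
        },
        "size": k,
    }


body = build_hybrid_body("wireless headphones", [0.12, -0.48, 0.33])
print(json.dumps(body, indent=2))
```

The resulting dictionary is what you would send as the body of a `_search` request. Building it as plain data keeps the example independent of any particular client library.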

Yes, vector search can be filtered. Elasticsearch supports native filters, facets, and geo constraints on top of approximate kNN vector search, with no rescoring or workarounds needed.
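To make the filtering point concrete, here is a minimal sketch of a kNN search body with a `filter` clause, built as a Python dictionary. The field names (`title_embedding`, `category`, `price`) and the helper `build_filtered_knn_body` are hypothetical; only the general shape of the `knn` option with a `filter` is assumed from the Elasticsearch search API.

```python
import json


def build_filtered_knn_body(query_vector, category, max_price, k=10):
    """Build a kNN search body restricted by term and range filters.

    Hypothetical helper with assumed field names; adapt to your mapping.
    """
    return {
        "knn": {
            "field": "title_embedding",
            "query_vector": query_vector,
            "k": k,
            "num_candidates": k * 10,
            # The filter is part of the kNN clause itself, so the k results
            # returned are drawn from documents that match it.
            "filter": {
                "bool": {
                    "filter": [
                        {"term": {"category": category}},
                        {"range": {"price": {"lte": max_price}}},
                    ]
                }
            },
        },
        "size": k,
    }


filtered_body = build_filtered_knn_body([0.12, -0.48, 0.33], "audio", 200)
print(json.dumps(filtered_body, indent=2))
```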

Elastic uses scoring techniques like reciprocal rank fusion (RRF) or convex weighting to fairly combine lexical and vector scores, so results feel balanced out of the box.
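The two fusion ideas named above can be sketched in a few lines of plain Python. This is an illustration of the general techniques, not Elasticsearch's internal implementation: the document IDs, scores, the rank constant of 60, and the function names `rrf`, `min_max`, and `convex` are all assumptions chosen for the example.

```python
def rrf(rankings, rank_constant=60):
    """Reciprocal rank fusion: sum 1 / (rank_constant + rank) over each list."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (rank_constant + rank)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def min_max(scores):
    """Rescale raw scores to [0, 1] so different scorers become comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}


def convex(lexical, vector, alpha=0.5):
    """Convex weighting: alpha * lexical + (1 - alpha) * vector, after scaling."""
    lex, vec = min_max(lexical), min_max(vector)
    fused = {
        doc_id: alpha * lex.get(doc_id, 0.0) + (1 - alpha) * vec.get(doc_id, 0.0)
        for doc_id in set(lex) | set(vec)
    }
    return sorted(fused.items(), key=lambda kv: kv[1], reverse=True)


# Toy data: ranked IDs for RRF, raw scores for convex weighting.
rrf_result = rrf([["a", "b", "c"], ["b", "c", "d"]])
convex_result = convex(
    {"a": 12.0, "b": 9.0, "c": 3.0},    # BM25-style scores
    {"b": 0.92, "c": 0.90, "d": 0.80},  # similarity-style scores
)
print([doc_id for doc_id, _ in rrf_result])
print([doc_id for doc_id, _ in convex_result])
```

RRF only looks at rank positions, so it needs no score normalization. Convex weighting uses the raw scores, which is why they are rescaled first. In this toy data, document `b` ranks first under both methods because it appears near the top of both lists.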

Yes. Hybrid search is fully supported with vector fields, retriever queries, semantic models, filters, and observability — all natively in Elasticsearch.