Analysing Linux auditd anomalies with Auditbeat and machine learning

Auditbeat is a popular Beat that collects Linux audit framework data so you can monitor processes running on Linux systems. It can stream a wide range of information from the audit framework, including security-related system information, file integrity data, and process information.

As part of an ongoing effort to offer ready-to-deploy machine learning job configurations in modules, we have recently released a set of analyses for the Auditbeat auditd module. These jobs allow you to automatically identify suspicious periods of activity in your servers’ kernels or within Docker containers. These types of analyses are crucial when trying to detect any errant processes or users gaining access to your systems.

Auditbeat ingestion

Auditbeat collects data about users and processes interacting on your systems by utilizing the Linux audit framework. By default, it uses the system’s preconfigured audit rules, but you can specify your own.

Setting up machine learning jobs

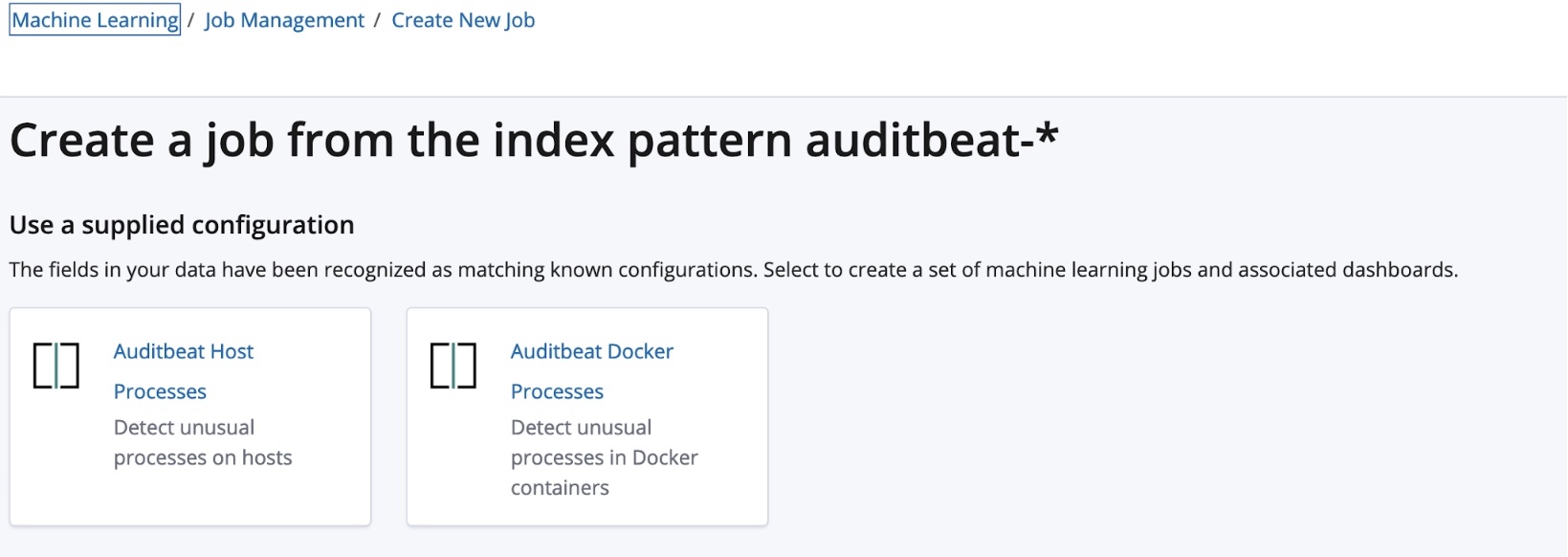

Once you have data being ingested with Auditbeat, the machine learning job creation page will look for an auditbeat-* index pattern and automatically offer you one of two supplied modules:

- Auditbeat Host Processes - These jobs and dashboards analyze processes running directly on host kernels.

- Auditbeat Docker Processes - These jobs and dashboards analyze processes running inside of Docker containers.

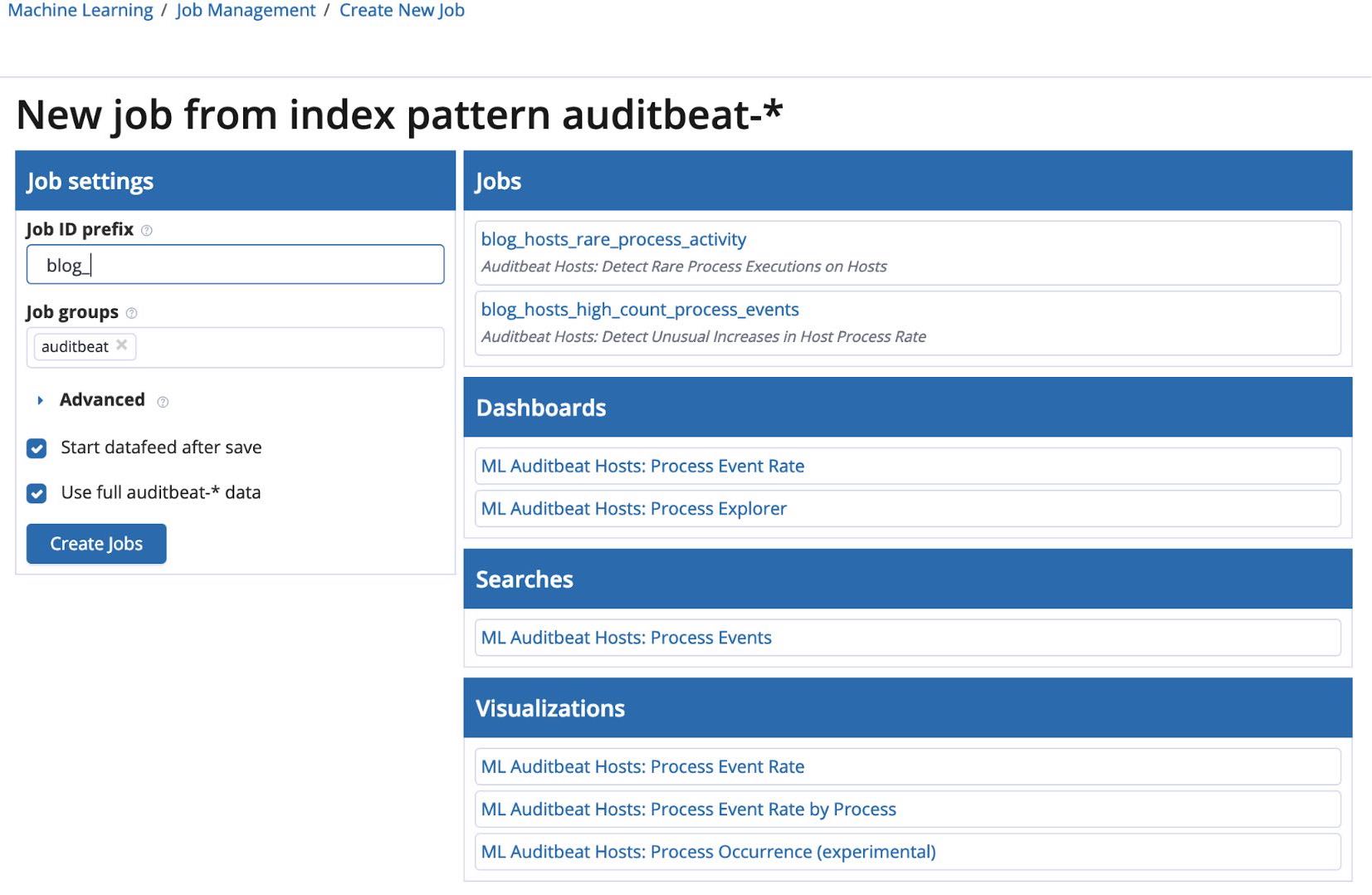

Clicking into one of the modules brings you to the module setup page where you can add a prefix to the job names and configure the datafeed. You also get a list of the dashboards, visualizations, and saved searches that comprise the module.

Once everything is set, you can create the jobs to begin the analysis.

Analysis

We offer modules for both on-host and in-Docker configurations. If your Auditbeat data is enriched with Docker metadata, machine learning will analyze rare processes and high process volume within containers.

Rare process activity

Production systems run a multitude of processes at any given time, whether they are serving pages via an API, manipulating files, or running scheduled cron jobs. Manually monitoring every command run on a server is not feasible, and the potentially malicious ones are often hidden in a sea of seemingly benign processes.

To aid in this, we created the rare process activity job, which finds processes that are rare in time and scoped to each individual host. That is, we look at the relative proportion of buckets which contain each process and determine rare processes to be those that occur in far fewer buckets than other processes.
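The bucket-proportion idea can be illustrated with a small Python sketch. This is not the machine learning job's actual model, only a simplified stand-in: the function name `rare_processes`, the one-hour bucket span, and the 5% cutoff are all assumptions made for illustration.

```python
from collections import defaultdict


def rare_processes(events, bucket_span=3600, max_fraction=0.05):
    """Return {host: {process: fraction}} for processes seen in few buckets.

    events: iterable of (timestamp_seconds, host, process_exe) tuples.
    bucket_span and max_fraction are illustrative assumptions.
    """
    # For each host, record the time buckets in which each process appeared.
    buckets_by_process = defaultdict(lambda: defaultdict(set))
    buckets_by_host = defaultdict(set)
    for ts, host, exe in events:
        bucket = int(ts // bucket_span)
        buckets_by_process[host][exe].add(bucket)
        buckets_by_host[host].add(bucket)

    rare = {}
    for host, processes in buckets_by_process.items():
        total = len(buckets_by_host[host])
        for exe, buckets in processes.items():
            # Fraction of this host's buckets that contain the process.
            fraction = len(buckets) / total
            if fraction <= max_fraction:
                rare.setdefault(host, {})[exe] = fraction
    return rare


# Two days of hourly activity on a hypothetical host, plus one stray process.
events = [(hour * 3600, "host-a", "/usr/sbin/sshd") for hour in range(48)]
events += [(hour * 3600, "host-a", "/usr/sbin/cron") for hour in range(48)]
events.append((30 * 3600 + 10, "host-a", "/xxprimef"))

print(rare_processes(events))
```

In this toy data, the stray process appears in only one of 48 buckets, so it is the only one reported. Scoping the counts per host mirrors the way the job treats each host separately.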

This job is configured as rare by “process.exe” with partition_field_name=“beat.name”, and uses “beat.name” and “process.exe” as influencers.
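For readers who want to see how that configuration maps onto a job definition, here is a rough Python sketch of a request body in the shape used by Elasticsearch anomaly detection jobs. The detector and influencers come from the description above. The description text, the bucket span, and the time field are assumptions and may differ from what the module actually ships.

```python
import json

# Sketch of an anomaly detection job body for the rare process detector.
rare_process_job = {
    "description": "Sketch: rare process activity per host",  # assumption
    "analysis_config": {
        "bucket_span": "1h",  # assumption, not taken from the module
        "detectors": [
            {
                "function": "rare",
                "by_field_name": "process.exe",
                "partition_field_name": "beat.name",
            }
        ],
        "influencers": ["beat.name", "process.exe"],
    },
    # Assumption: events carry the usual Beats "@timestamp" field.
    "data_description": {"time_field": "@timestamp"},
}

print(json.dumps(rare_process_job, indent=2))
```

Partitioning on “beat.name” means each host gets its own baseline, so a process that is routine on one server can still be flagged as rare on another.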

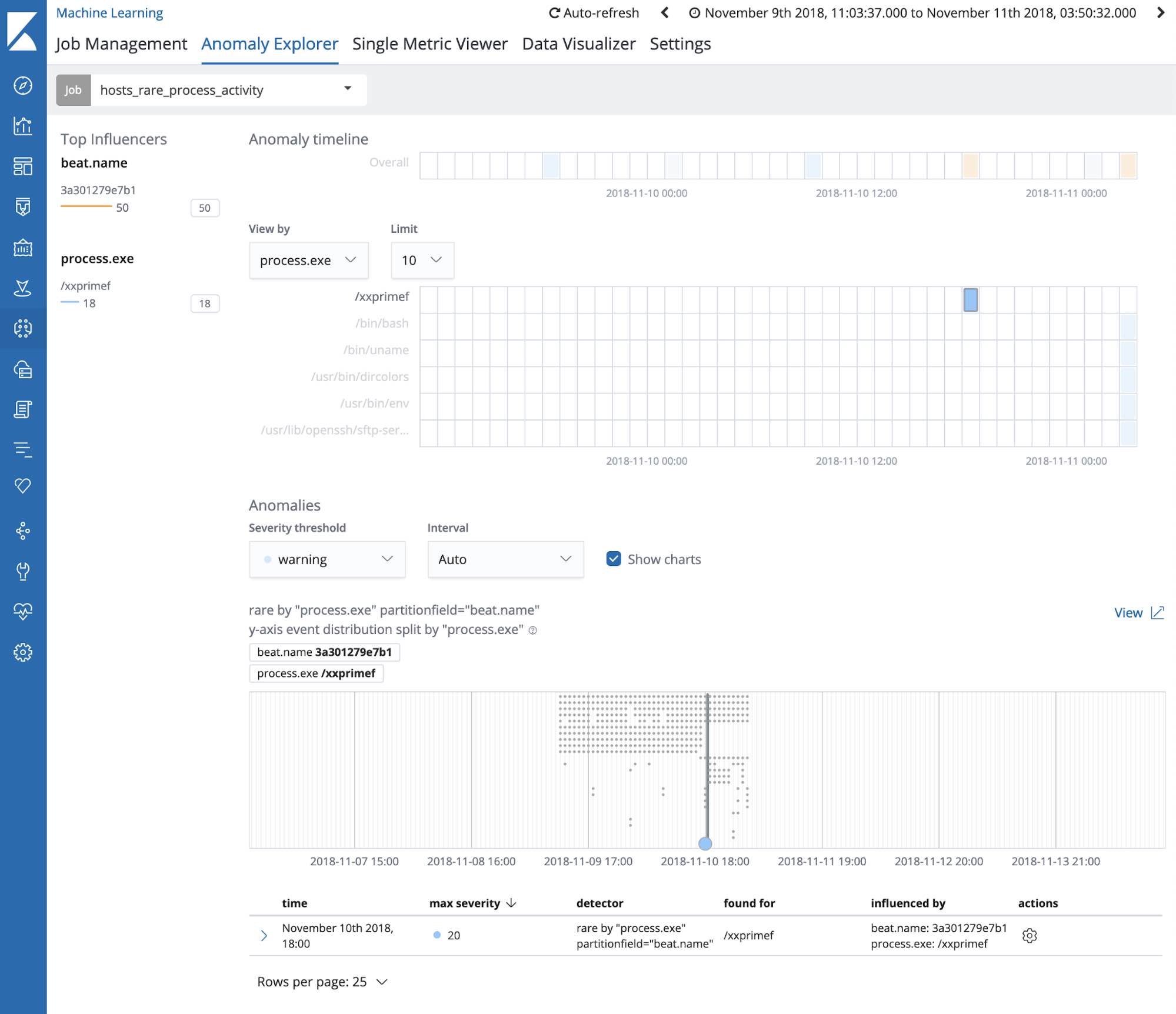

Let’s look at an example:

Here we see a process called /xxprimef being run on our 3a30127937b1 server. Looking at the rare chart below the anomaly explorer, we can see that this process was not run during any other bucket in our analysis — meaning it could be a suspicious process.

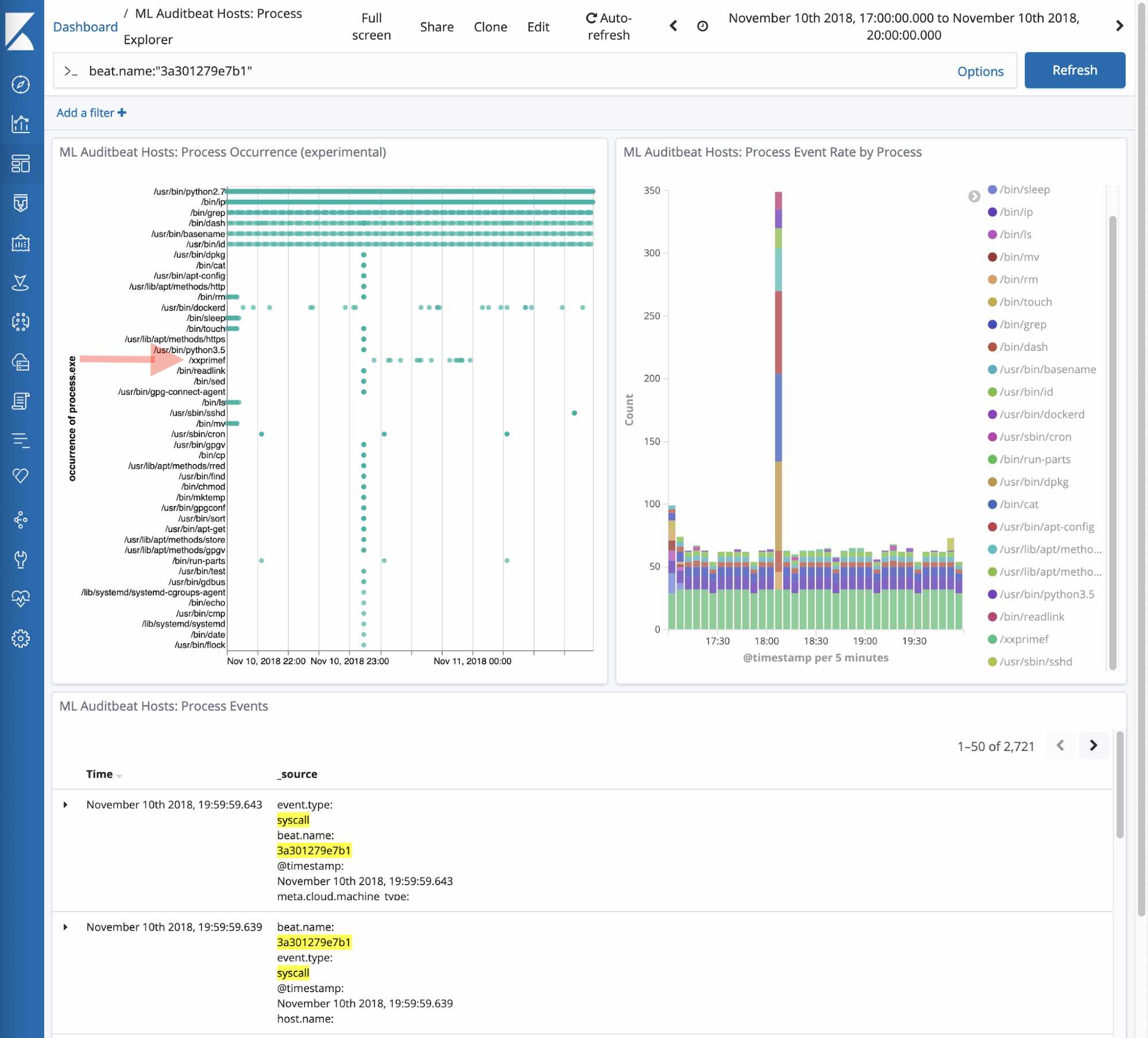

Clicking into the “Process Explorer” custom URL gives us a more detailed view of the activity occurring on that machine during the period of suspicious activity. Looking at /xxprimef we see that its pattern of behavior is markedly different from that of other processes.

High process rates

The volume of processes running on a machine often follows predictable patterns: large cron jobs are scheduled for nights or weekends, releases are pushed every other Sunday night, and so on. Sometimes, however, servers are flooded with traffic as part of an attack. The high process rates job aims to identify these unusual increases in process activity, which could be indicative of flooding-style attacks.
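To make the idea concrete, the following Python sketch flags buckets whose event count is far above a host's average. It swaps in a plain z-score for the job's learned model, so treat it only as an illustration. The function name `high_rate_buckets`, the one-hour bucket span, and the threshold of three standard deviations are assumptions.

```python
from collections import Counter, defaultdict
from statistics import mean, pstdev


def high_rate_buckets(events, bucket_span=3600, threshold=3.0):
    """Return [(host, bucket_start, count)] for unusually busy buckets.

    events: iterable of (timestamp_seconds, host) tuples, one per process event.
    bucket_span and threshold are illustrative assumptions.
    """
    counts = defaultdict(Counter)
    for ts, host in events:
        counts[host][int(ts // bucket_span)] += 1

    flagged = []
    for host, per_bucket in counts.items():
        # Build a gap-free series so that quiet buckets count as zero.
        first, last = min(per_bucket), max(per_bucket)
        series = [per_bucket.get(b, 0) for b in range(first, last + 1)]
        mu, sigma = mean(series), pstdev(series)
        if sigma == 0:
            continue  # perfectly flat activity, nothing stands out
        for bucket, count in enumerate(series, start=first):
            # Only unusually high counts are of interest here.
            if (count - mu) / sigma > threshold:
                flagged.append((host, bucket * bucket_span, count))
    return flagged


# Ten events per hour on a hypothetical host, with a burst in hour 30.
rate_events = []
for hour in range(48):
    n = 200 if hour == 30 else 10
    rate_events += [(hour * 3600 + i, "host-a") for i in range(n)]

print(high_rate_buckets(rate_events))
```

A fixed z-score ignores daily and weekly seasonality, which is exactly the kind of pattern a trained model is meant to absorb, so the sketch would raise false alarms on the scheduled spikes described above.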

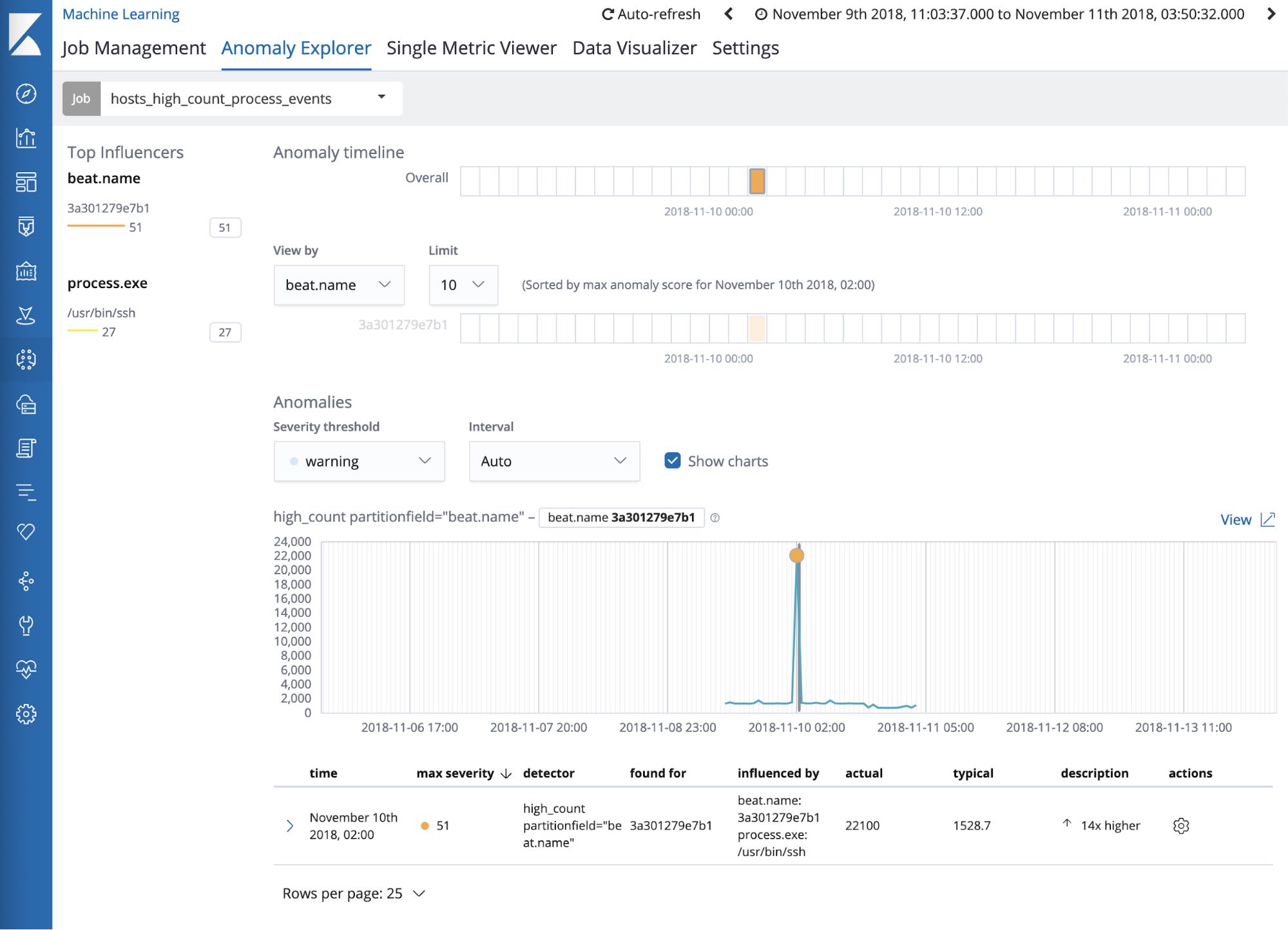

This job identifies periods of unusually high process activity to help identify attack attempts on your servers. Going back to our example, we see that there was a large spike in the number of ssh commands sent to the kernel on 3a301279eb1.

Clicking into the “Process Rate” custom URL shows a more detailed view of activity on the server around the time of the anomaly. We see that the ssh commands were coupled with a spike in scp commands, meaning someone was copying files to or from the server.

Summary

The new Auditbeat machine learning modules give you a way to bootstrap security analyses on your on-host or Dockerized environments. Their insights help you identify potential threats through machine learning jobs and investigate fine-grained details via custom URLs and dashboards. Give the analyses a try, and if you have any questions, feel free to reach out on our Beats Discuss forum.