Generative AI

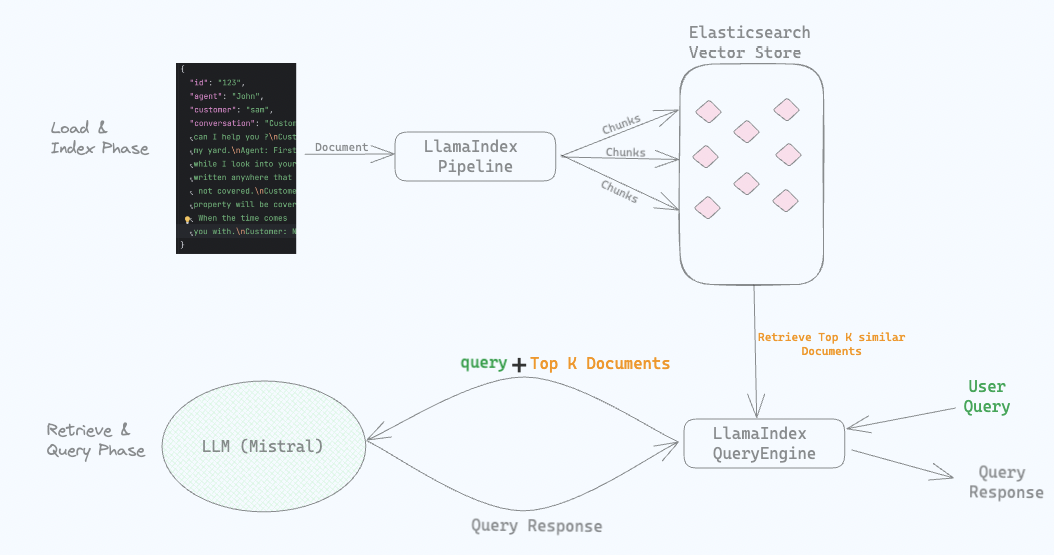

Protecting Sensitive and PII information in RAG with Elasticsearch and LlamaIndex

How to protect sensitive and PII data in a RAG application with Elasticsearch and LlamaIndex.

Build a Conversational Search for your Customer Success Application with Elasticsearch and OpenAI

Explore how to enhance your customer success application with a conversational search feature built on Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG).

semantic_text with Amazon Bedrock

Using the new semantic_text feature with Amazon Bedrock as the inference endpoint service.

Elasticsearch open inference API adds Amazon Bedrock support

Elasticsearch open inference API adds support for embeddings generated from models hosted on Amazon Bedrock.

Playground: Experiment with RAG using Bedrock Anthropic Models and Elasticsearch in minutes

Playground is a low-code interface for developers to explore grounding LLMs of their choice with their own private data, in minutes.

Search complex documents using Unstructured.io and Elasticsearch vector database

Ingest and search complex proprietary documents with Unstructured and the Elasticsearch vector database for RAG applications.

How Generative AI will transform web accessibility

An experiment, inspired by Be My Eyes and OpenAI, in using GPT-4o for web accessibility.

Playground: Experiment with RAG applications with Elasticsearch in minutes

Playground is a low-code interface for developers to explore grounding LLMs of their choice with their own private data, in minutes.

Elasticsearch vs. OpenSearch: Vector Search Performance Comparison

Out of the box, Elasticsearch is 2x–12x faster than OpenSearch for vector search.

Building RAG with Llama 3 open-source and Elastic

Build a RAG system with the open-source Llama 3 model and Elastic.