End-to-End Observability with Nginx and OpenTelemetry

Nginx sits at the very front of most modern architectures, handling SSL, routing, load balancing, authentication, and more. Yet despite its central role, it is often absent from distributed traces.

That gap creates blind spots that impact performance debugging, user experience analysis, and system reliability.

This article explains why Nginx tracing matters in an application context and provides a practical guide to enabling the Nginx OpenTelemetry (OTel) tracing module and exporting its spans directly to Elastic APM.

Why Nginx Tracing Matters for Modern Observability

Instrumenting only backend services gives you only half the picture.

Nginx sees:

- every incoming request

- client trace context

- TLS negotiation

- upstream errors (502, 504)

- edge-layer latency

- routing decisions

If Nginx is not in your traces, your distributed trace is incomplete.

By adding OpenTelemetry tracing at this ingress layer, you unlock:

1. Full trace continuity: from browser → Nginx → backend → database.

2. Accurate latency attribution: edge delays and backend delays are clearly separated, which lets Elastic APM latency anomaly detection flag problems proactively.

3. Error root-cause clarity: Nginx errors appear as spans instead of backend “mystery gaps”.

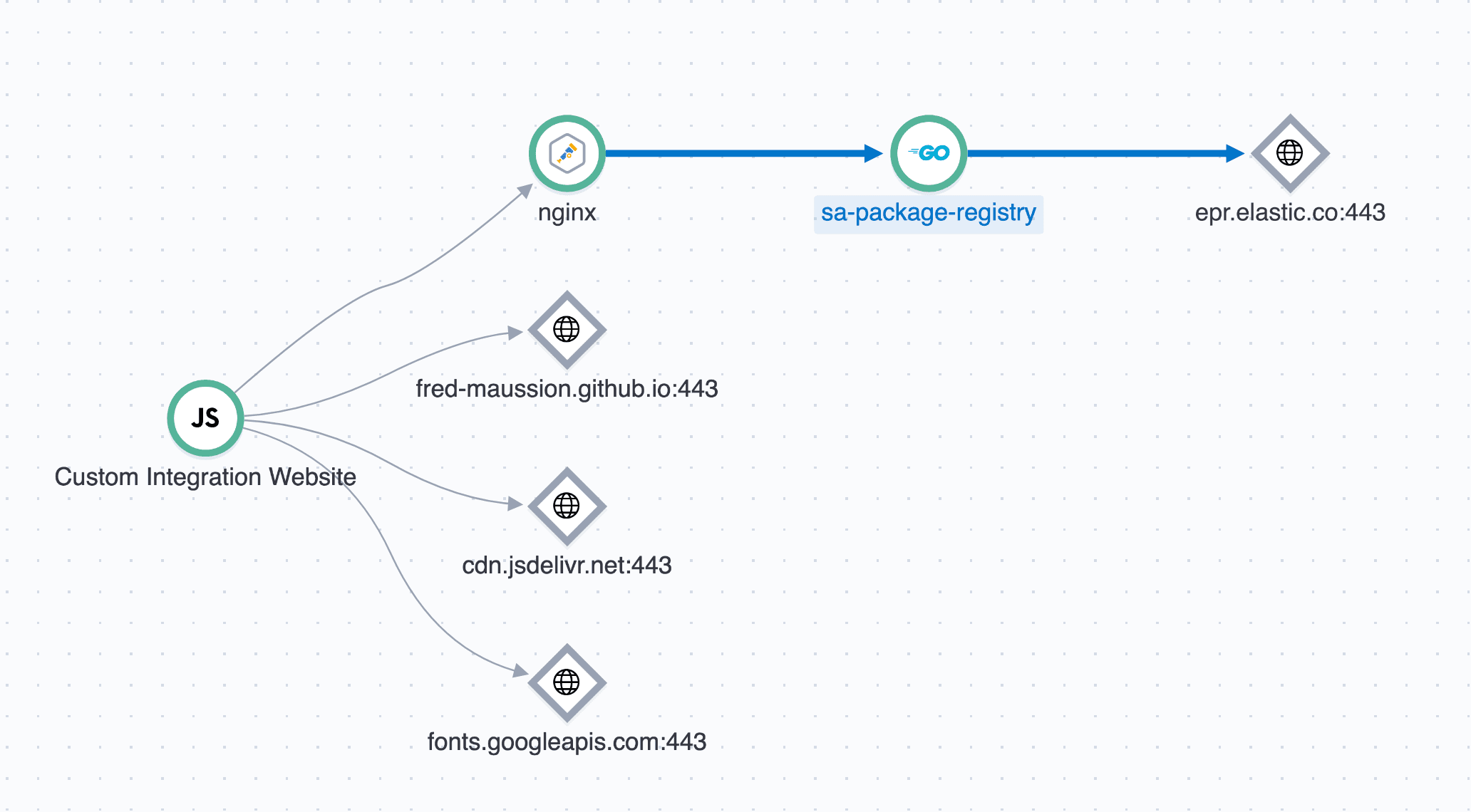

4. Complete service topology: your APM service map finally shows the real architecture.

Integrating Nginx with OpenTelemetry on Debian

This guide explains how to install and configure the Nginx OpenTelemetry module on a Debian-based system. The configuration examples send telemetry data directly to an Elastic APM endpoint, whether that is an EDOT Collector or, on Elastic Serverless, Elastic's managed OTLP endpoint, enabling end-to-end distributed tracing.

Installation on Debian

The Nginx OTel module is not included in the standard Nginx packages. It must be installed separately, on top of a working Nginx configuration.

Prerequisites

First, refresh the package index and install the prebuilt module package. No compilation is required.

    sudo apt update
    sudo apt install -y nginx-module-otel
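The nginx-module-otel package is distributed through the official nginx.org package repository, so on a stock Debian system apt may not find it. The sketch below shows one way to add that repository first. It assumes the stable nginx.org Debian repository and the keyring path used in the nginx.org installation instructions; `nginx_repo_line` and `setup_nginx_repo` are hypothetical helper names introduced here for illustration.

```shell
#!/bin/sh
# Hypothetical helpers: prepare the nginx.org apt repository (assumed: stable branch).

KEYRING=/usr/share/keyrings/nginx-archive-keyring.gpg

# Print the apt source line for a given Debian codename (e.g. bookworm).
nginx_repo_line() {
    printf 'deb [signed-by=%s] https://nginx.org/packages/debian %s nginx\n' "$KEYRING" "$1"
}

# Download the signing key, register the repository, then install the packages.
# Requires root privileges and network access; it is defined here but not called.
setup_nginx_repo() {
    sudo apt install -y curl gnupg2 ca-certificates lsb-release debian-archive-keyring &&
    curl -fsSL https://nginx.org/keys/nginx_signing.key | gpg --dearmor |
        sudo tee "$KEYRING" >/dev/null &&
    nginx_repo_line "$(lsb_release -cs)" | sudo tee /etc/apt/sources.list.d/nginx.list &&
    sudo apt update &&
    sudo apt install -y nginx nginx-module-otel
}
```

Calling `nginx_repo_line bookworm` only prints the source line, which makes it easy to review before anything is written; `setup_nginx_repo` performs the actual changes and is meant to be run once on the host.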

Load the Module in Nginx

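Edit your main configuration file, /etc/nginx/nginx.conf, and add the load_module directive at the top level, before the events and http blocks:

    # /etc/nginx/nginx.conf

    load_module modules/ngx_otel_module.so;

    events {
        # ...
    }

    http {
        # ...
    }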

Now, test your configuration and restart Nginx.

    sudo nginx -t
    sudo systemctl restart nginx
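A quick way to confirm that the module line is active is to search the configuration dump that `nginx -T` prints. The following is a minimal sketch; `has_otel_module` is a hypothetical helper name, and it only checks that an uncommented load_module line for ngx_otel_module.so is present in whatever text is piped to it.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the configuration text on stdin contains an
# uncommented load_module directive for the OpenTelemetry module.
has_otel_module() {
    grep -Eq '^[[:space:]]*load_module[[:space:]]+[^;#]*ngx_otel_module\.so"?[[:space:]]*;'
}

# Example (run manually on the Nginx host):
#   sudo nginx -T 2>/dev/null | has_otel_module && echo "otel module is loaded"
```

This does not prove that spans are being exported; it only rules out the common case where the directive is missing or commented out.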

Configuration

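Configuration is split between the main /etc/nginx/nginx.conf file and the site-specific server block file (/etc/nginx/conf.d/site.conf in this guide).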

Global Configuration (/etc/nginx/nginx.conf)

This configuration sets up the destination for your telemetry data and defines global variables used for CORS and tracing. These settings are placed inside the http block.

    http {
        # ...

        # --- OpenTelemetry Exporter Configuration ---
        # Defines where Nginx sends its telemetry data: directly to Elastic APM or to an EDOT Collector.
        otel_exporter {
            endpoint https://<ELASTIC_URL>:443;
            header Authorization "Bearer <TOKEN>";
        }

        # --- OpenTelemetry Service Metadata ---
        # These attributes identify Nginx as a unique service in the APM UI.
        otel_service_name nginx;
        otel_resource_attr service.version 1.28.0;
        otel_resource_attr deployment.environment production;
        otel_trace_context propagate; # Needed to propagate the RUM traces to the backend

        # --- Helper Variables for Tracing and CORS ---
        # Creates the $trace_flags variable needed to build the outgoing traceparent header.
        map $otel_parent_sampled $trace_flags {
            default "00"; # Not sampled
            "1" "01"; # Sampled
        }

        # Creates the $cors_origin variable for secure, multi-origin CORS handling.
        # Browsers send the Origin header as scheme://host[:port], with no trailing slash.
        map $http_origin $cors_origin {
            default "";
            "http://<URL_ORIGIN_1>" $http_origin; # Add your origin here to allow CORS
            "https://<URL_ORIGIN_2>" $http_origin; # Add your other origins here to allow CORS
        }

        # ...
    }
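For reference, the W3C traceparent header has four dash-separated fields: a two-digit version, a 32-hex-digit trace ID, a 16-hex-digit parent span ID, and two-digit trace flags (01 means sampled). The shell sketch below mirrors what the `$trace_flags` map does and assembles a header value from it. The function names are hypothetical, and the IDs in the last line are the example values from the W3C Trace Context specification, not output from Nginx.

```shell
#!/bin/sh
# Mirror of the $trace_flags map: "1" (parent sampled) -> 01, anything else -> 00.
trace_flags() {
    if [ "$1" = "1" ]; then echo "01"; else echo "00"; fi
}

# Assemble a traceparent value: version-traceid-spanid-flags.
# $1: trace ID (32 hex), $2: span ID (16 hex), $3: value of $otel_parent_sampled.
build_traceparent() {
    printf '00-%s-%s-%s\n' "$1" "$2" "$(trace_flags "$3")"
}

# Succeed only if $1 is a well-formed version-00 traceparent value.
is_valid_traceparent() {
    printf '%s\n' "$1" | grep -Eq '^00-[0-9a-f]{32}-[0-9a-f]{16}-[0-9a-f]{2}$'
}

# Prints: 00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01
build_traceparent 4bf92f3577b34da6a3ce929d0e0e4736 00f067aa0ba902b7 1
```

Keeping the flag logic in a map means the header value can be built with plain variable interpolation in the server block, without any scripting module.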

Server Block Configuration (/etc/nginx/conf.d/site.conf)

This configuration enables tracing for a specific site, handles CORS preflight requests, and propagates the trace context to the backend service.

    server {
        listen 443 ssl;
        server_name <WEBSITE_URL>;

        # --- OpenTelemetry Module Activation ---
        # Enable tracing for this server block.
        otel_trace on;
        otel_trace_context propagate;

        location / {
            # --- CORS Preflight (OPTIONS) Handling ---
            # Intercepts preflight requests and returns the correct CORS headers,
            # allowing the browser to proceed with the actual request.
            if ($request_method = 'OPTIONS') {
                add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS' always;
                add_header 'Access-Control-Allow-Headers' 'Content-Type, traceparent, tracestate' always;
                add_header 'Access-Control-Max-Age' 86400;
                add_header 'Access-Control-Allow-Origin' "$cors_origin" always;
                return 204;
            }

            # --- OpenTelemetry Trace Context Propagation ---
            # Manually constructs the W3C traceparent header and passes the tracestate
            # header to the backend, linking this trace to the upstream service.
            proxy_set_header traceparent "00-$otel_trace_id-$otel_span_id-$trace_flags";
            proxy_set_header tracestate $http_tracestate;

            # --- Standard Proxy Headers ---
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # --- Forward to Backend ---
            # Passes the request to the actual application (e.g. localhost in this example).
            proxy_pass http://<BACKEND_URL>:8080;
        }
    }

Test your configuration and restart Nginx.

    sudo nginx -t
    sudo systemctl restart nginx
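Once Nginx is back up, a smoke test from the command line can help confirm both the preflight handling and the trace propagation. The sketch below is an example under stated assumptions rather than an official procedure: `gen_traceparent`, `check_preflight`, and `send_traced_request` are hypothetical helper names, the URL and origin are whatever you configured above, and the script relies only on curl, od, tr, and cut.

```shell
#!/bin/sh
# Generate a random, sampled W3C traceparent value (version 00, flags 01).
gen_traceparent() {
    trace_id=$(od -An -N16 -tx1 /dev/urandom | tr -d ' \n')
    span_id=$(od -An -N8 -tx1 /dev/urandom | tr -d ' \n')
    printf '00-%s-%s-01\n' "$trace_id" "$span_id"
}

# Send a CORS preflight and print the response headers.
# $1: site URL, $2: an origin listed in the $cors_origin map.
check_preflight() {
    curl -sS -o /dev/null -D - -X OPTIONS "$1" \
        -H "Origin: $2" \
        -H "Access-Control-Request-Method: POST" \
        -H "Access-Control-Request-Headers: traceparent"
}

# Send a GET carrying a fresh traceparent; print the trace ID to search for in APM.
send_traced_request() {
    tp=$(gen_traceparent)
    echo "trace id: $(echo "$tp" | cut -d- -f2)"
    curl -sS -o /dev/null -w 'HTTP %{http_code}\n' -H "traceparent: $tp" "$1"
}

# Example (run manually, replacing the placeholders):
#   check_preflight "https://<WEBSITE_URL>/" "https://<URL_ORIGIN_2>"
#   send_traced_request "https://<WEBSITE_URL>/"
```

A 204 response whose Access-Control-Allow-Origin header echoes the origin suggests the preflight path works. If the exporter is reachable, searching Elastic APM for the printed trace ID should show a trace that includes an Nginx span.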

Conclusion: Turning Nginx into a First-Class Observability Signal

By enabling OpenTelemetry tracing directly in Nginx and exporting spans to Elastic APM (via EDOT or Elastic’s managed OTLP endpoint), you bring your ingress layer into the same observability model as the rest of your stack. The result is:

- true end-to-end trace continuity from the browser to backend services

- clear separation between edge latency and application latency

- immediate visibility into gateway-level failures and retries

- accurate service maps that reflect real production traffic

Most importantly, this approach aligns Nginx with modern observability standards. It avoids proprietary instrumentation, fits naturally into OpenTelemetry-based architectures, and scales consistently across hybrid and cloud-native environments.

Try it out!

Once Nginx tracing is in place, several natural extensions can further improve your observability posture:

- correlate Nginx traces with application logs and metrics using Elastic’s unified observability

- add Real User Monitoring (RUM) to close the loop from frontend to backend

- introduce sampling and tail-based decisions at the collector level for cost control

- use Elastic APM service maps and anomaly detection to proactively detect edge-related issues

Instrumenting Nginx is often the missing link in distributed tracing strategies. With OpenTelemetry and Elastic, that gap can now be closed in a clean, standards-based, and production-ready way.

If you want to experiment with this setup quickly, Elastic Serverless provides the fastest way to get started. Sign up and try it out in just a few minutes using our trial environment, available at https://cloud.elastic.co/.