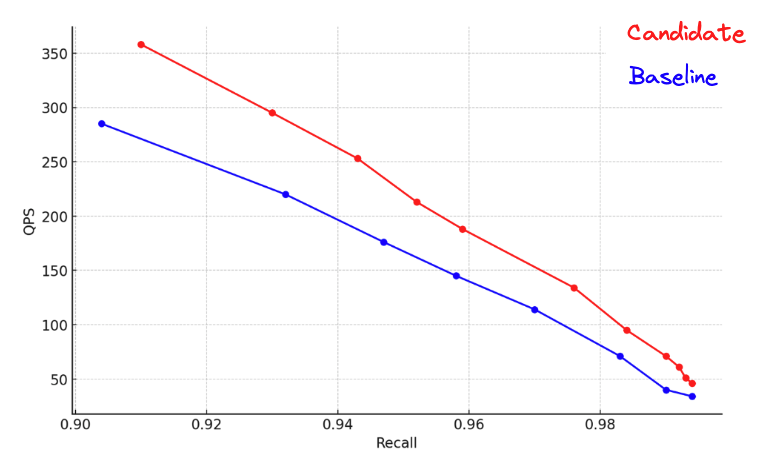

We made significant changes to how Elasticsearch 8.11 reclaims memory from its indexing buffer, which helps reduce the merging overhead and thus makes indexing faster. Using our Logging track, we observed an 8% improvement in ingestion throughput with these changes when running with a 1GB heap.

How it works in Elasticsearch 8.10 and earlier

When indexing data, Elasticsearch starts building new segments in memory and writes index operations into a transaction log for durability. These in-memory segments eventually get serialized to disk, either when changes need to be made visible (an operation called "refresh" in Elasticsearch) or when memory needs to be reclaimed. This blog focuses on the latter.

To manage the memory of its indexing buffer, Elasticsearch keeps track of how much RAM is used across all shards on the local node. Whenever this amount of memory crosses the limit (10% of the heap size by default), Elasticsearch identifies the shard that uses the most memory and refreshes it.
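The reclaim loop described above can be modeled in a few lines of Python. This is only an illustrative sketch, not Elasticsearch's actual code: the `Shard` class, `reclaim_largest_first` function and the byte counts are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Shard:
    name: str
    buffered_bytes: int  # RAM currently held by this shard's in-memory segments

    def refresh(self) -> int:
        """Model a refresh: all in-memory segments go to disk, RAM is freed."""
        freed = self.buffered_bytes
        self.buffered_bytes = 0
        return freed


def reclaim_largest_first(shards: List[Shard], heap_bytes: int, ratio: float = 0.10) -> List[str]:
    """Refresh the shard using the most memory until usage is back under the limit.

    `ratio` models the default indexing buffer limit of 10% of the heap.
    Returns the names of the shards that were refreshed, in order.
    """
    limit = int(heap_bytes * ratio)
    refreshed = []
    while sum(s.buffered_bytes for s in shards) > limit:
        victim = max(shards, key=lambda s: s.buffered_bytes)
        victim.refresh()
        refreshed.append(victim.name)
    return refreshed
```

With a 1GB heap the limit is roughly 102MB, so three shards buffering 60MB, 40MB and 20MB exceed it, and the 60MB shard is the one that gets refreshed.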

Change 1: flush a single segment at a time

When changes get buffered in memory for a given shard, there is not a single pending segment. In order to be able to index concurrently, Lucene maintains a pool of pending segments. When a thread wants to index a new document, it picks a pending segment from this pool, updates it and then moves the pending segment back to the pool. If there is no free pending segment in the pool, a new one will be created. As a result, the number of pending segments in the pool is usually on the order of the peak indexing concurrency.
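To make the checkout-and-return cycle concrete, here is a minimal single-threaded model of such a pool. It is a sketch under simplifying assumptions, not Lucene's implementation: `PendingSegment` and `PendingSegmentPool` are hypothetical names, and real locking is left out.

```python
class PendingSegment:
    """An in-memory segment that is still accepting documents."""

    def __init__(self):
        self.docs = []


class PendingSegmentPool:
    """Hands out free pending segments, creating one only when none is free."""

    def __init__(self):
        self.free = []
        self.created = 0  # total number of pending segments ever created

    def checkout(self):
        if self.free:
            return self.free.pop()
        self.created += 1
        return PendingSegment()

    def release(self, segment):
        self.free.append(segment)


def index_document(pool, doc):
    """What one indexing thread does for one document."""
    segment = pool.checkout()
    segment.docs.append(doc)
    pool.release(segment)
```

If three threads hold a segment at the same time, the pool grows to three pending segments. Later indexing at lower concurrency reuses them rather than creating more, which is why the pool size tracks the peak concurrency.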

The first change we applied was to update this logic to no longer flush all segments from a shard at once, and instead only flush the largest pending segment using Lucene's IndexWriter#flushNextBuffer() API. This helps because the size of pending segments is generally not uniform, as Lucene has a bias towards updating the largest pending segments. The new approach therefore flushes fewer segments, and the ones it does flush should be significantly larger. And since fewer segments get flushed, less merging is required to keep the number of segments under control.
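The effect is easiest to see with numbers. The sketch below models the two strategies on a list of pending segment sizes; the function names and the sizes (in MB) are made up for illustration, and the real work is done in Lucene by IndexWriter#flushNextBuffer(), not by code like this.

```python
def flush_all(pending_sizes):
    """Old behavior: every pending segment of the shard is written to disk."""
    flushed = list(pending_sizes)
    pending_sizes.clear()
    return flushed


def flush_largest(pending_sizes):
    """New behavior: only the largest pending segment is written to disk."""
    largest = max(pending_sizes)
    pending_sizes.remove(largest)
    return [largest]


# Hypothetical shard with non-uniform pending segments (sizes in MB).
old = flush_all([48, 6, 4, 2])      # 4 new on-disk segments, 60 MB freed
new = flush_largest([48, 6, 4, 2])  # 1 new on-disk segment, 48 MB freed
```

In this example, flushing only the largest segment reclaims 80% of the shard's buffered memory while creating one on-disk segment instead of four, and the three small segments keep growing in memory before they are eventually written.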

Change 2: flush shards in a round-robin fashion

Managing a shared indexing buffer across many shards is a hard problem. The existing logic assumed that it would be sensible to pick the shard that uses the most memory for its indexing buffer as the next shard to reclaim memory from. After all, this is the most efficient way to buy some time until we reach the maximum amount of memory for the indexing buffer again. But on the other hand, this also penalizes shards that ingest most actively, as they would flush segments much more frequently than shards that have a modest ingestion rate. There are many moving parts here, which make it hard to get a good intuition about how these various factors play together and figure out the best strategy for picking the next shard to flush.

So we ran experiments with various approaches to picking the next shard to flush. Interestingly, picking the largest shard was the worst one, significantly outperformed by picking shards at random. In fact, the only approach that slightly outperformed picking shards at random was to pick shards in a round-robin fashion. This is now how Elasticsearch picks the next shard to flush.
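A round-robin picker can be sketched as follows. This is an illustration rather than the Elasticsearch implementation: `RoundRobinShardPicker` is a hypothetical name, and skipping shards that have nothing buffered is an assumption made to keep the example sensible.

```python
class RoundRobinShardPicker:
    """Cycles through shards in a fixed order when memory must be reclaimed."""

    def __init__(self, shard_names):
        self.shard_names = list(shard_names)
        self.next_index = 0  # position where the next search starts

    def pick(self, buffered_bytes):
        """Return the next shard in rotation that has buffered data, or None.

        `buffered_bytes` maps shard name to bytes held in its indexing buffer.
        Skipping empty shards is an assumption of this sketch.
        """
        count = len(self.shard_names)
        for offset in range(count):
            index = (self.next_index + offset) % count
            name = self.shard_names[index]
            if buffered_bytes.get(name, 0) > 0:
                self.next_index = (index + 1) % count
                return name
        return None
```

Unlike picking the largest shard, the rotation does not depend on how much each shard has buffered, so a very active shard is not flushed more often than its neighbors simply because it fills up faster.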

Conclusion

These two changes should help reduce the merging overhead and speed up ingestion, especially with small heaps and with field types that consume significant amounts of RAM in the indexing buffer, such as text and match_only_text fields, or field types that are expensive to merge, such as dense_vector. Enjoy the speedups!