The evolution of search

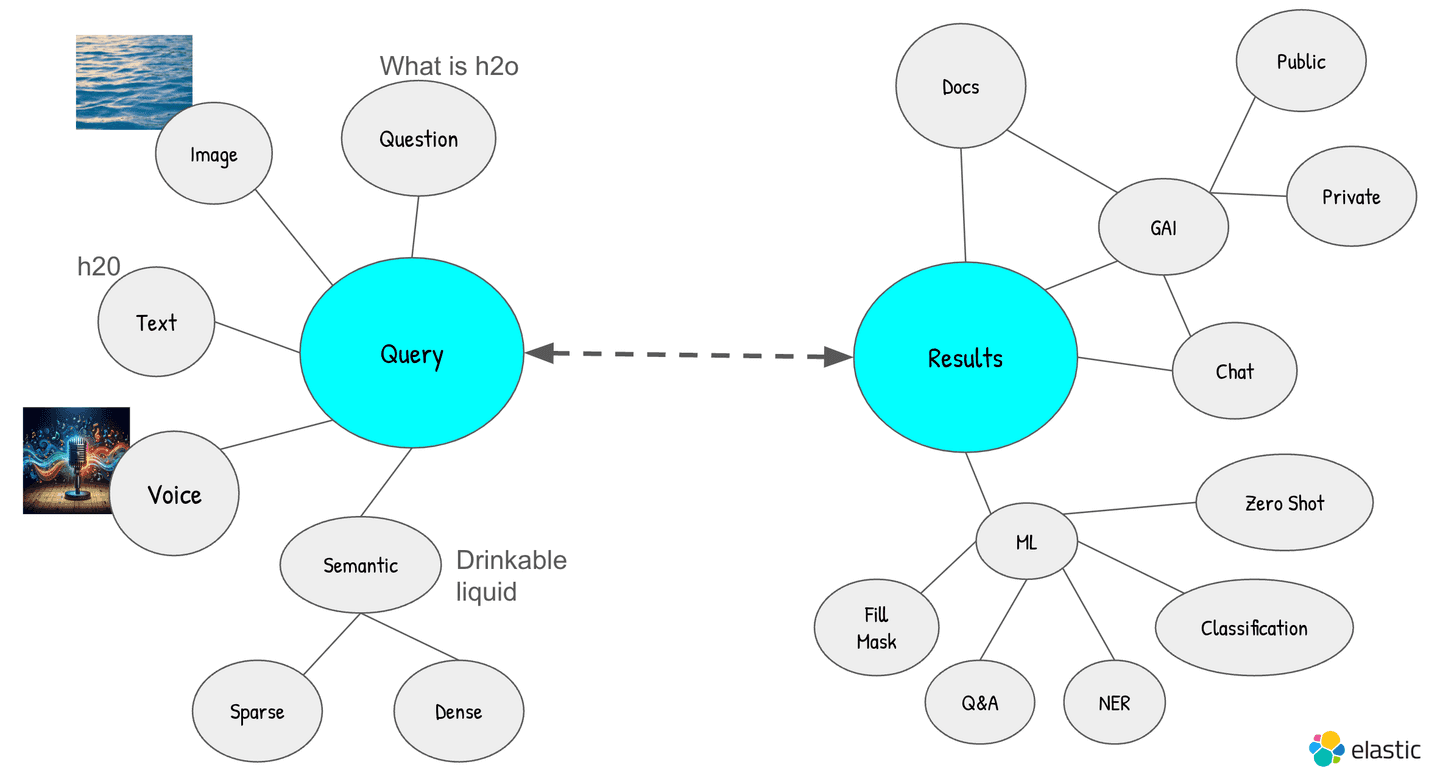

Search has evolved from simple text queries yielding straightforward results to a complex system accommodating various formats like text, images, videos, and questions.

Not long ago, search consisted of a text query and a list of relevant results. Today's search results are enhanced with generative AI, machine learning, and interactive chat features, making them richer, more dynamic, and more contextually relevant. Voice search and speech avatars have further transformed traditional search into a more interactive and convenient experience.

The desire for dialogue in search

In a realm where dialogue underpins every interaction, whether with fellow humans or bots, shouldn't our search experiences reflect this fundamental aspect? Envision the vast array of document corpora residing within an enterprise. Naturally, this environment sparks curiosity and a multitude of questions, leading to subsequent inquiries. This innate human trait drives us to seek answers, delve deeper following initial responses, and continuously explore. Yet, traditional question-and-answer mechanisms fall short, as they often disregard the context of preceding exchanges, leading to a disjointed and laborious process that feels unnatural and prompts users to disengage prematurely.

Beyond question and answer search

Consider the act of using a television to search for content, such as seeking action movies featuring Nicolas Cage. While most current systems adeptly provide relevant results, the inquiry rarely ends there. Subsequent questions, such as inquiring about the runtime or release dates of these movies, are a natural progression in our quest for information. However, standard search applications are not designed to facilitate a continuous dialogue; they are structured around isolated question-and-answer formats, which limits the depth of interaction and exploration.

Avatar-assisted voice search experience

This is where the concept of an avatar-assisted search experience comes into play, especially in scenarios where users, myself included, prefer direct answers without the need to sift through information. Occasionally, we desire the convenience of having answers delivered to us, bypassing the effort of reading through content. The development of an avatar to generate responses could further modernize this interaction, providing a more engaging, efficient, and natural user experience.

Live demo: creating an avatar-assisted voice search experience

This demo showcases an integration of speech-to-text, Elasticsearch's semantic search capabilities, retrieval-augmented generation (RAG) with Azure OpenAI, and a synthesized avatar that delivers the responses.
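The four stages chain together in a fixed order, which can be sketched as a small pipeline object. This is only an illustrative sketch: the class name `VoiceSearchPipeline` and the stage names are hypothetical, and each stage is a stub standing in for the real service (Azure Speech to Text, Elasticsearch, Azure OpenAI, and the avatar synthesizer).

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class VoiceSearchPipeline:
    """Hypothetical wiring of the four demo stages (names are assumptions)."""

    transcribe: Callable[[bytes], str]          # speech to text
    retrieve: Callable[[str], List[str]]        # semantic search
    generate: Callable[[str, List[str]], str]   # RAG answer generation
    speak: Callable[[str], bytes]               # avatar / speech synthesis

    def run(self, audio: bytes) -> bytes:
        query = self.transcribe(audio)
        documents = self.retrieve(query)
        answer = self.generate(query, documents)
        return self.speak(answer)


# Stub stages so the sketch runs without any cloud service.
pipeline = VoiceSearchPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    retrieve=lambda query: [f"doc about {query}"],
    generate=lambda query, docs: f"Answer to '{query}' from {len(docs)} document(s)",
    speak=lambda text: text.encode("utf-8"),
)

result = pipeline.run(b"action movies featuring Nicolas Cage")
print(result.decode("utf-8"))
```

Keeping each stage behind a plain callable means any one service can be swapped or mocked without touching the others.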

Integration details

Speech to search

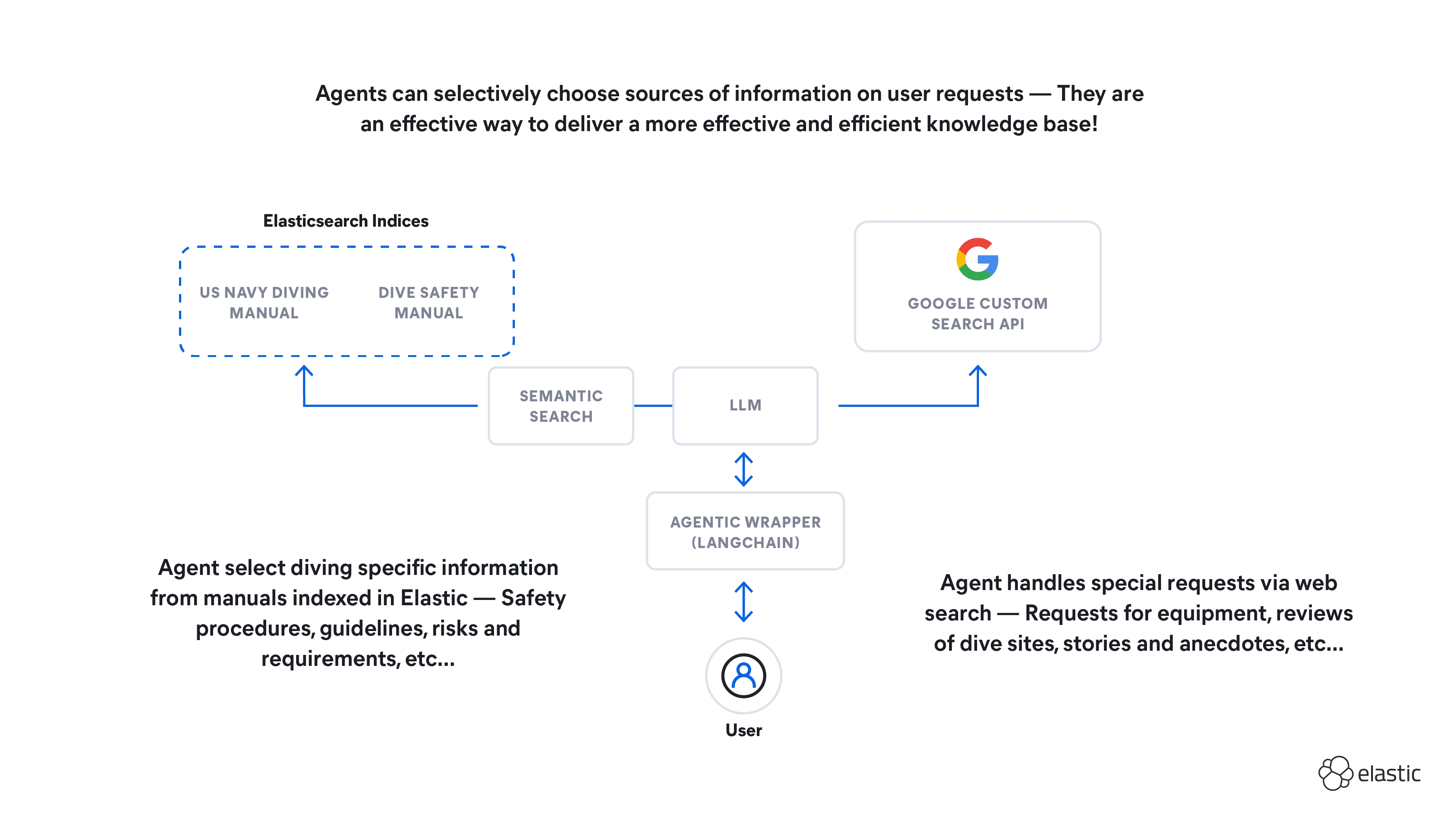

The advanced search experience begins with the user's voice. Azure Speech to Text converts the spoken request into text, which becomes the search query. Elasticsearch then processes this query with ELSER (Elastic Learned Sparse EncodeR), its model for semantic search, to retrieve relevant documents, such as TV guides listing “action movies featuring Nicolas Cage.” Matching on meaning rather than exact keywords helps keep the results relevant.
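As a rough illustration of the search step, the transcribed text can be placed into an Elasticsearch `text_expansion` query, which is one way to query an ELSER-backed field. The field name `ml.tokens`, the result size, and the helper name `build_elser_query` are assumptions made for this sketch; the demo's actual mapping and query may differ.

```python
from typing import Any, Dict


def build_elser_query(
    transcript: str,
    tokens_field: str = "ml.tokens",    # assumed name of the sparse-vector field
    model_id: str = ".elser_model_2",   # ELSER v2 model ID
    size: int = 5,                      # assumed number of hits to return
) -> Dict[str, Any]:
    """Build a search request body that runs the transcript through ELSER."""
    return {
        "size": size,
        "query": {
            "text_expansion": {
                tokens_field: {
                    "model_id": model_id,
                    "model_text": transcript,
                }
            }
        },
    }


body = build_elser_query("action movies featuring Nicolas Cage")
print(body)
```

The resulting dictionary could then be sent to the search endpoint of an index holding the TV guide documents. Recent Elasticsearch releases also offer a `sparse_vector` query for the same purpose.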

RAG & cache

In the enhanced search framework, merely fetching documents isn't enough. Azure OpenAI's GPT-4 turns the retrieved documents into a readable answer, which keeps the conversation flowing. Elasticsearch also serves as a GenAI caching layer: it stores generated answers and reuses them for semantically similar queries, which saves both time and LLM calls. For example, if there's a cached response for "action movies featuring Nicolas Cage," the caching API can reuse it for similar questions like “Nicolas Cage high-intensity movies,” accelerating the search experience.
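The caching idea can be sketched in a few lines. This is a simplified, in-memory stand-in rather than the demo's implementation: a real setup would store query embeddings in an Elasticsearch index and use vector search to find similar past queries. The bag-of-words `toy_embed` function, the 0.6 threshold, and all class and function names here are assumptions chosen only to keep the example self-contained.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple

Vector = Dict[str, float]


def toy_embed(text: str) -> Vector:
    """Toy bag-of-words embedding (assumption); a real system would use a model."""
    return dict(Counter(text.lower().split()))


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(weight * b.get(token, 0.0) for token, weight in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    """In-memory stand-in for a cache index keyed by query similarity."""

    def __init__(self, embed: Callable[[str], Vector] = toy_embed, threshold: float = 0.6):
        self.embed = embed
        self.threshold = threshold  # minimum similarity to count as a cache hit
        self.entries: List[Tuple[Vector, str]] = []

    def lookup(self, query: str) -> Optional[str]:
        query_vector = self.embed(query)
        best_score, best_answer = 0.0, None
        for vector, answer in self.entries:
            score = cosine(query_vector, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def store(self, query: str, answer: str) -> None:
        self.entries.append((self.embed(query), answer))


def answer_with_cache(query: str, cache: SemanticCache, generate: Callable[[str], str]) -> str:
    """Return a cached answer for a similar query, else generate and cache one."""
    cached = cache.lookup(query)
    if cached is not None:
        return cached
    answer = generate(query)
    cache.store(query, answer)
    return answer


calls: List[str] = []


def fake_llm(query: str) -> str:
    """Stub for the LLM call; records each invocation."""
    calls.append(query)
    return f"LLM answer for: {query}"


cache = SemanticCache()
first = answer_with_cache("action movies featuring Nicolas Cage", cache, fake_llm)
second = answer_with_cache("Nicolas Cage high-intensity movies", cache, fake_llm)
print(second == first, len(calls))
```

The threshold controls the trade-off: set it too low and unrelated questions receive a stale answer; set it too high and the cache rarely hits.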

Avatar response generation

The experience is further enriched with an avatar response feature, powered by Azure Synthesizer, which adds a visual and auditory dimension beyond traditional text-based interfaces. Combined with the speech, search, and generation steps, it makes the search experience more engaging and interactive.
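The post does not show how the generated answer is handed to the avatar. One plausible preparation step, since Azure's speech synthesis services accept SSML input, is to wrap the answer text in an SSML document. The helper name `build_ssml` and the default voice are assumptions for this sketch.

```python
from xml.sax.saxutils import escape


def build_ssml(answer: str, voice: str = "en-US-JennyNeural", lang: str = "en-US") -> str:
    """Wrap generated answer text in SSML; the voice name is an assumed default."""
    return (
        f'<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="{lang}">'
        f'<voice name="{voice}">{escape(answer)}</voice>'
        "</speak>"
    )


ssml = build_ssml("Face/Off & Con Air are two options.")
print(ssml)
```

Escaping the answer matters because LLM output can contain characters such as `&` or `<` that would otherwise produce invalid XML.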

Summary

The shift from traditional Google searches to platforms like ChatGPT for answering queries illustrates a broader trend: our preference for dialogue over static information retrieval. This predilection underscores the importance for enterprises to adopt a more intuitive and conversational approach in their search functionalities. By embracing this paradigm, businesses can better align with the natural human inclination towards dialogue, thereby enhancing the overall search and discovery process within their data ecosystems.

Demo assets

Still curious? Here is the link to the source code.