Elasticsearch has native integrations with the industry-leading Gen AI tools and providers. Check out our webinars on going Beyond RAG Basics, or building prod-ready apps with the Elastic vector database.

To build the best search solutions for your use case, start a free cloud trial or try Elastic on your local machine now.

There are a number of strategies to add domain specific knowledge to large language models (LLMs), and more approaches are being investigated as part of an active research field. Methods such as pre-training and fine-tuning on domain specific datasets allow the LLM to reason and generate domain specific language. However, using these LLMs as knowledge bases is still prone to hallucinations. If the domain language is similar to the LLM training data, using external information retrieval systems via Retrieval Augmented Generation (RAG) to provide contextual information to the LLM can improve factual responses. Ultimately, a combination of fine-tuning and RAG may provide the best result.

The blog attempts to describe some of the basic processes for storing and retrieving knowledge from LLMs. Followup blogs will describe different RAG strategies in more detail.

| Pre-training | Fine-tuning | Retrieval Augmented Generation | |

|---|---|---|---|

| Training duration | Days to weeks to months | Minutes to hours | Not required |

| Customisation | Requires large amount of domain training data Can customise model architecture, size. tokenizer etc. Creates new “foundation” LLM model | Add domain-specific data Tune for specific tasks. Updates LLM model. | No model weights. External information retrieval system can be tuned to align with LLM. Prompt can be optimised for task performance. |

| Objective | Next-token prediction | Increase task performance | Increase task performance for specific set of domain documents |

| Expertise | High | Medium | Low |

Introduction to domain specific generative AI

Generative AI technologies, built on large language models (LLMs), have substantially progressed our ability to develop tools for processing, comprehending, and generating text. Furthermore, these technologies have introduced an innovative information retrieval mechanism, wherein generative AI technologies directly respond to user queries using the stored (parametric) knowledge of the model.

However, it's important to note that the parametric knowledge of the model is a condensed representation of the entire training dataset. Thus, employing these technologies for a specific knowledge base or domain beyond the original training data does come with certain limitations, such as:

- The generative AI's responses might lack context or accuracy, as they won't have access to information that wasn't present in the training data.

- There is potential for generating plausible-sounding but incorrect or misleading information (hallucinations).

Different strategies exist to overcome these limitations, such as extending the original training data, fine-tuning the model, and integrating with an external source of domain-specific knowledge. These various approaches yield distinct behaviours and carry differing implementation costs.

Strategies for integrating domain-specific knowledge into LLMs

Domain specific pre-training for LLMs

LLMs are pre-trained on huge corpora of data that represent a wide range of natural language use cases:

| Model | Total dataset size | Data sources | Training cost |

|---|---|---|---|

| PaLM 540B | 780 billion tokens | Social media conversations (multilingual) 50%; Filtered web pages (multilingual) 27%; Books (English) 13%; GitHub (code) 5%; Wikipedia (multilingual) 4%; News (English) 1% | 8.4M TPU v2 hours |

| GPT-3 | 499 billion tokens | Common Crawl (filtered) 60%; WebText2 22%; Books1 8%; Books2 8%; Wikipedia 3% | 0.8M GPU hours |

| LLaMA 2 | 2 trillion tokens | “mix of data from publicly available sources” | 3.3M GPU hours |

The costs of this pre-training step are substantial, and there’s a significant amount of work required to curate and prepare the datasets. Both of these tasks require a high level of technical expertise.

In addition, pre-training is only one step in creating the model. Typically, the models are then fine-tuned on a narrower dataset that is carefully curated and tailored for specific tasks. This process also typically involves human reviewers that rank and review possible model outputs to improve the model’s performance and safety. This adds further complexity and cost to the process.

Examples of this approach applied to specific domains include:

- ESMFold, ProGen2 and others - LLM for protein sequences: protein sequences can be represented using language-like sequences but are not covered by natural language models

- Galactica - LLM for science: trained exclusively on a large collection of scientific datasets, and includes special processing to handle scientific notations

- BloombergGPT - LLM for finance: trained on 51% financial data, 49% public datasets

- StarCoder - LLM for code: trained on 6.4TB of permissively licensed source code in 384 programming languages, and included 54 GB of GitHub issues and repository-level metadata

The domain-specific models generally outperform generalist models within their respective domains, with the most significant improvements observed in domains that differ significantly from natural language (such as protein sequences and code). However, for knowledge-intensive tasks, these domain-specific models suffer from the same limitations due to their reliance on parametric knowledge. Therefore, while these models can understand the relationships and structure of the domain more effectively, they are still prone to inaccuracies and hallucinations.

Domain specific fine-tuning for LLMs

Fine-tuning for LLMs involves training a pre-trained model on a specific task or domain to enhance its performance in that area. It adapts the model's knowledge to a narrower context by updating its parameters using task-specific data, while retaining its general language understanding gained during pre-training. This approach optimises the model for specific tasks, saving significant time compared to training from scratch.

Examples

- Alpaca - fine-tuned LLaMA-7B model that behaves qualitatively similarly to OpenAI’s GPT-3.5

- xFinance - fine-tuned LLaMA-13B model for financial-specific tasks. Reportedly outperforms BloombergGPT

- ChatDoctor - fine-tuned LLaMA-7B model for medical chat.

- falcon-40b-code-alpaca - fine-tuned falcon-40b model for code generation from natural language

Costs: fine-tuning vs. pre-training LLMs

Costs for fine-tuning are significantly smaller than for pre-training. In addition, novel methods such as parameter-efficient fine-tuning (PEFT) methods (e.g. LoRA, adapters, prompt tuning, and in-context learning as described above) enable very efficient adaptation of pre-trained language models (PLMs) to various downstream applications without fine-tuning all the model's parameters. For example,

| Model | Fine-tuning method | Fine-tuning dataset | Cost |

|---|---|---|---|

| Alpaca | Self-Instruct | 52K unique instructions and the corresponding outputs | 3 hours on 8 80GB A100s:24 GPU hours |

| xFinance | Unsupervised fine-tuning and instruction fine-tuning using xTuring library | 493M token text dataset; 82K instruction dataset | 25 hours on 8 A100 80GB GPUs:200 GPU hours |

| ChatDoctor | Self-Instruct | 110K patient-doctor interactions | 3 hours on 6 A100 GPUS: 18 GPU hours |

| falcon-40b-code-alpaca | Self-Instruct | 52K instruction dataset; 20K instruction-input-code triplets | 4 hours on 4 A100 80GB GPUs: 16 GPU hours |

Similar to domain-specific pre-trained models, these models typically exhibit better performance within their respective domains, yet they still face the limitations associated with parametric knowledge.

Enhancing LLMs with Retrieval Augmented Generation (RAG)

LLMs store factual knowledge in their parameters, and their ability to access and precisely manipulate this knowledge is still limited. This can lead to LLMs providing non-factual but seemingly plausible predictions (hallucinations) - particularly for unpopular questions. Additionally, providing references for their decisions and updating their knowledge efficiently remain open research problems.

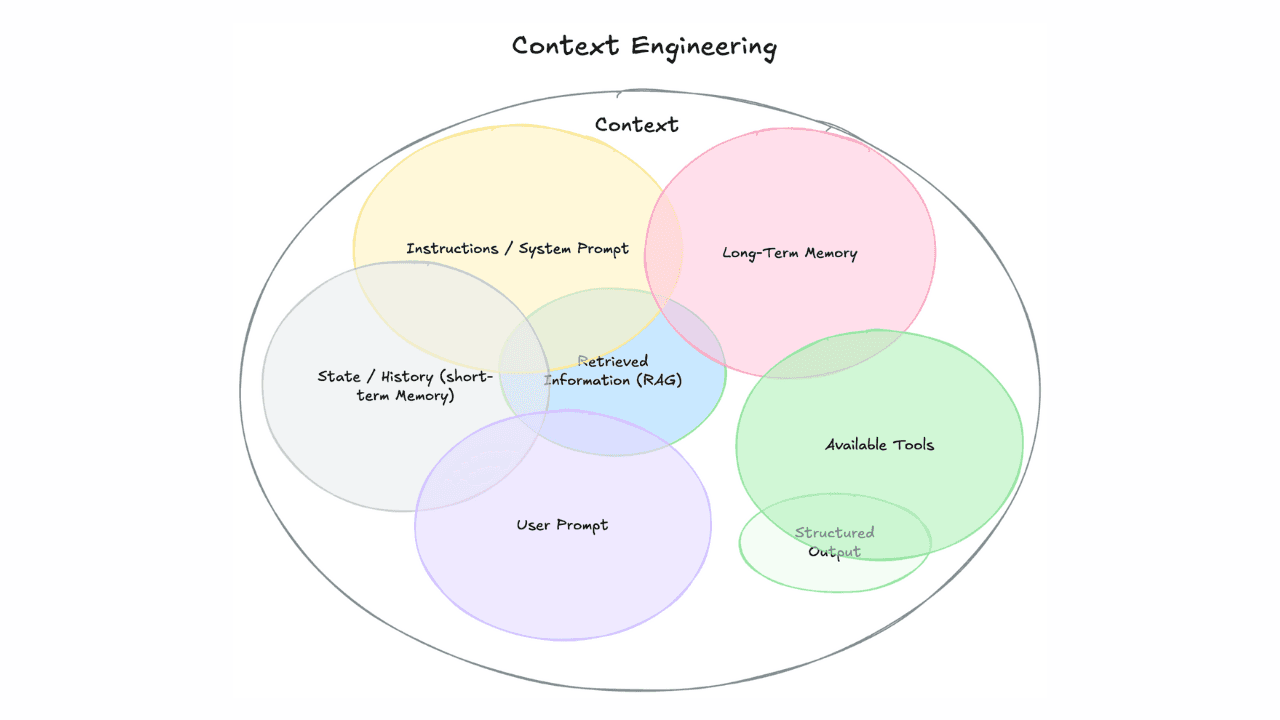

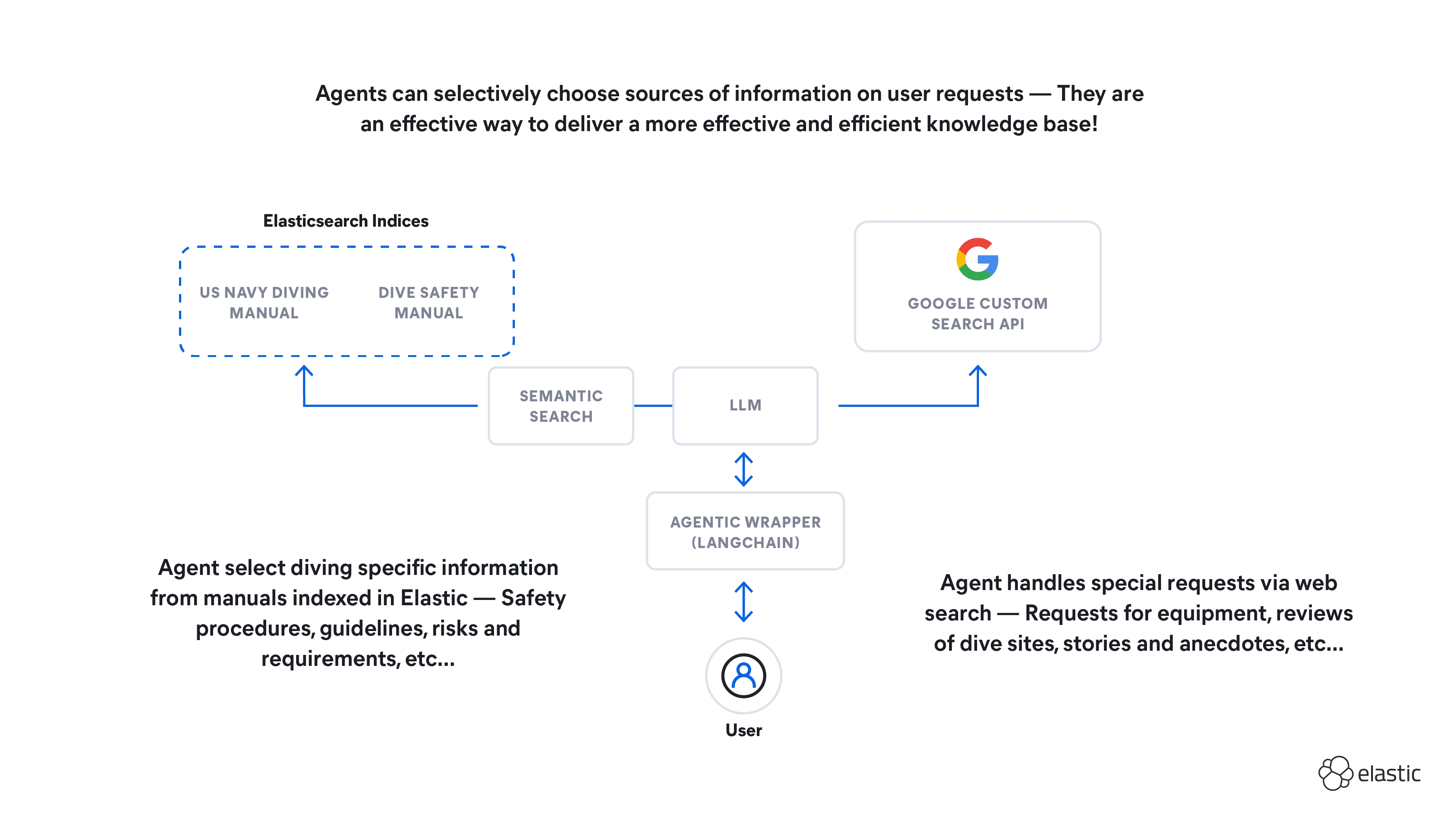

A general purpose recipe to address these limitations is RAG, where the LLM's parametric knowledge is grounded with external or non-parametric knowledge from an information retrieval system. This knowledge is passed as additional context in the prompt to the LLM and specific instructions are given to the LLM on how to use this contextual information. This keeps it more inline with the discussion so far about parametric knowledge. The advantages of this approach are:

- Unlike fine-tuning and pre-training, LLM parameters do not change and so there are no training costs

- Expertise required to simple implementation is low (although more advanced strategies exist)

- Response can be tightly constrained to context returned from the information retrieval system, limiting hallucinations

- Smaller task specific LLMs can be used - as the LLM is being used for a specific task rather than a knowledge base.

- Knowledge base is easily updatable as it requires no changes to the LLM

- Responses can cite sources for human verification and link outs

Strategies to combine this non-parametric knowledge (i.e. retrieved text) with an LLM’s parametric knowledge is an active area of research.

Some of these approaches involve modifying the LLM in conjunction with the retrieval strategy and so can not be classified as distinctly as the definitions in this blog. We will dive into more details in further blogs.

Example

In a simple example, we utilised a fine-tuned LLaMA2 13B model, which was based on the information from this blog. This model underwent fine-tuning using AWS blog posts published after the LLaMA2 pre-training and fine-tuning data cutoff date, specifically those from July 23rd, 2023. We also ingested these documents into a Elasticsearch and established a simple RAG pipeline. In this pipeline, model responses are generated based on the retrieved documents serving as context. Red highlights indicate incorrect responses, and blue highlights correct responses.

However, it's important to note that this is just a single example and does not constitute a comprehensive evaluation of fine-tuning versus RAG, but provides an example of fine-tuning before useful for form, not facts.. We plan to conduct more thorough comparisons in upcoming blogs.

Frequently Asked Questions

What strategies can be used to add domain-specific knowledge into large language models (LLMs)?

Several strategies can be employed to integrate domain-specific knowledge into large language models (LLMs). These include pre-training the model, fine-tuning it, and utilizing Retrieval Augmented Generation (RAG).

How does Retrieval Augmented Generation (RAG) enhance generative AI models?

Retrieval Augmented Generation (RAG) enhances generative AI models by providing contextual information from external sources, improving factual responses and reducing hallucinations.