Trained models

editTrained models

editThis functionality is in beta and is subject to change. The design and code is less mature than official GA features and is being provided as-is with no warranties. Beta features are not subject to the support SLA of official GA features.

When you use a data frame analytics job to perform classification or regression analysis, it creates a machine learning model that is trained and tested against a labelled data set. When you are satisfied with your trained model, you can use it to make predictions against new data. For example, you can use it in the processor of an ingest pipeline or in a pipeline aggregation within a search query. For more information about this process, see Introduction to supervised learning and Inference.

You can also supply trained models that are not created by data frame analytics job but adhere to the appropriate JSON schema. If you want to use these trained models in the Elastic Stack, you must store them in Elasticsearch documents by using the create trained models API.

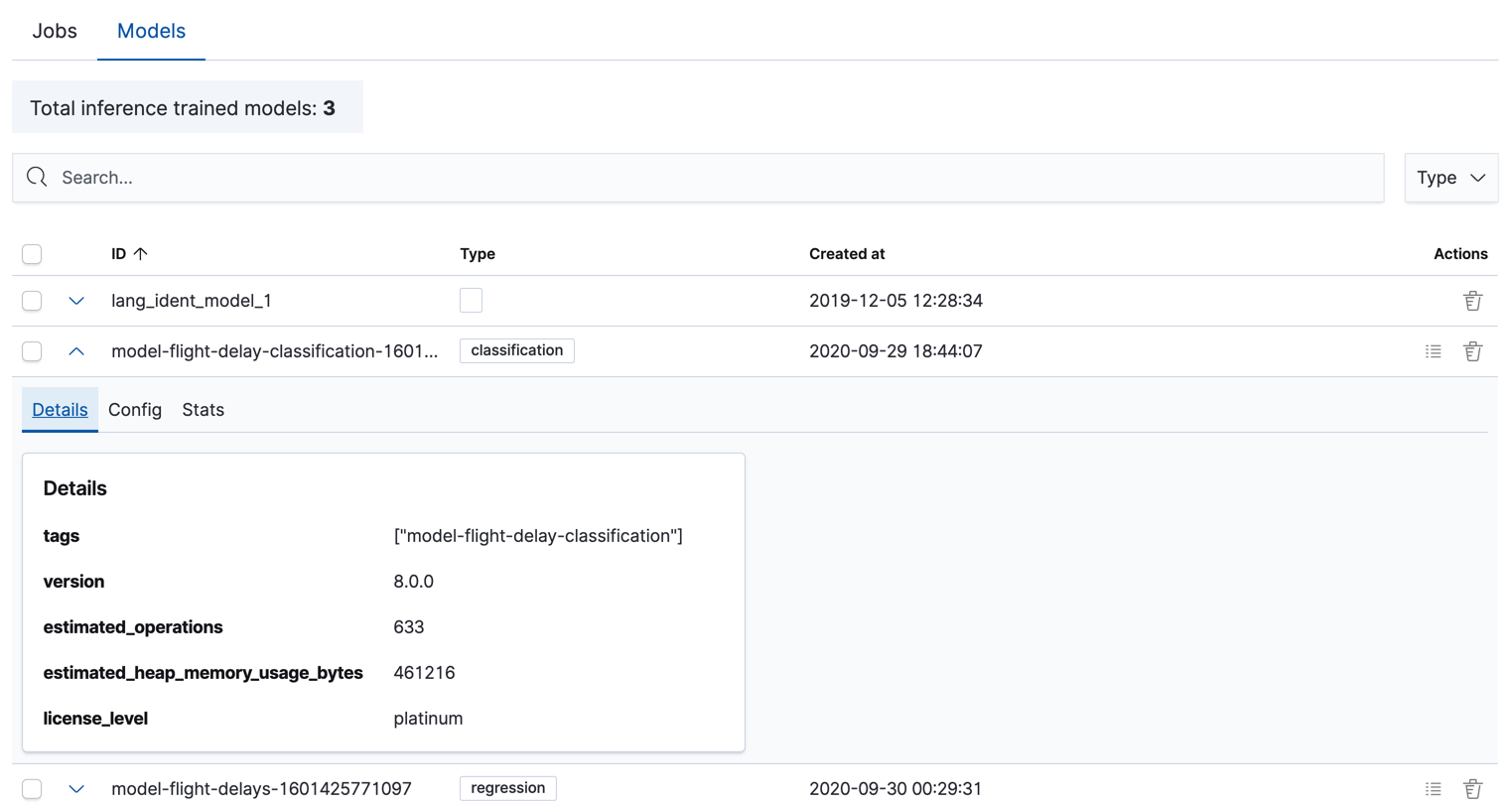

In Kibana, you can view and manage your trained models within Machine Learning > Data Frame Analytics:

Alternatively, you can use APIs like get trained models and delete trained models.

Exporting and importing models

editModels trained in Elasticsearch are portable and can be transferred between

clusters. This is particularly useful when models are trained in isolation from

the cluster where they are used for inference. The following instructions show

how to use curl and jq to

export a model as JSON and import it to another cluster.

-

Given a model name, find the model ID. You can use

curlto call the get trained model API to list all models with their IDs.curl -s -u username:password \ -X GET "http://localhost:9200/_ml/trained_models" \ | jq . -C \ | moreIf you want to show just the model IDs available, use

jqto select a subset.curl -s -u username:password \ -X GET "http://localhost:9200/_ml/trained_models" \ | jq -C -r '.trained_model_configs[].model_id'flights1-1607953694065 flights0-1607953585123 lang_ident_model_1

In this example, you are exporting the model with ID

flights1-1607953694065. -

Using

curlfrom the command line, again use the get trained models API to export the entire model definition and save it to a JSON file.curl -u username:password \ -X GET "http://localhost:9200/_ml/trained_models/flights1-1607953694065?exclude_generated=true&include=definition&decompress_definition=false" \ | jq '.trained_model_configs[0] | del(.model_id)' \ > flights1.jsonA few observations:

-

Exporting models requires using

curlor a similar tool that can stream the model over HTTP into a file. If you use the Kibana Console, the browser might be unresponsive due to the size of exported models. - Note the query parameters that are used during export. These parameters are necessary to export the model in a way that it can later be imported again and used for inference.

-

You must unnest the JSON object by one level to extract just the model

definition. You must also remove the existing model ID in order to not have

ID collisions when you import again. You can do these steps using

jqinline or alternatively it can be done to the resulting JSON file after downloading usingjqor other tools.

-

Exporting models requires using

-

Import the saved model using

curlto upload the JSON file to the created trained model API. When you specify the URL, you can also set the model ID to something new using the last path part of the URL.curl -u username:password \ -H 'Content-Type: application/json' \ -X PUT "http://localhost:9200/_ml/trained_models/flights1-imported" \ --data-binary @flights1.json

-

Models exported from the get trained models API

are limited in size by the

http.max_content_length

global configuration value in Elasticsearch. The default value is

100mband may need to be increased depending on the size of model being exported. -

Connection timeouts can occur when either the source or destination

cluster is under load, or when model sizes are very large. Increasing

timeout configurations for

curl(e.g.curl --max-time 600) or your client of choice will help alleviate the problem. In rare cases you may need to reduce load on the Elasticsearch cluster, for example by adding nodes.

Importing an external model to the Elastic Stack

editIt is possible to import a model to your Elasticsearch cluster even if the model is not trained by Elastic data frame analytics. Eland supports importing models directly through its APIs. Please refer to the latest Eland documentation for more information on supported model types and other details of using Eland to import models with.