Deploy and manage logs at petabyte scale

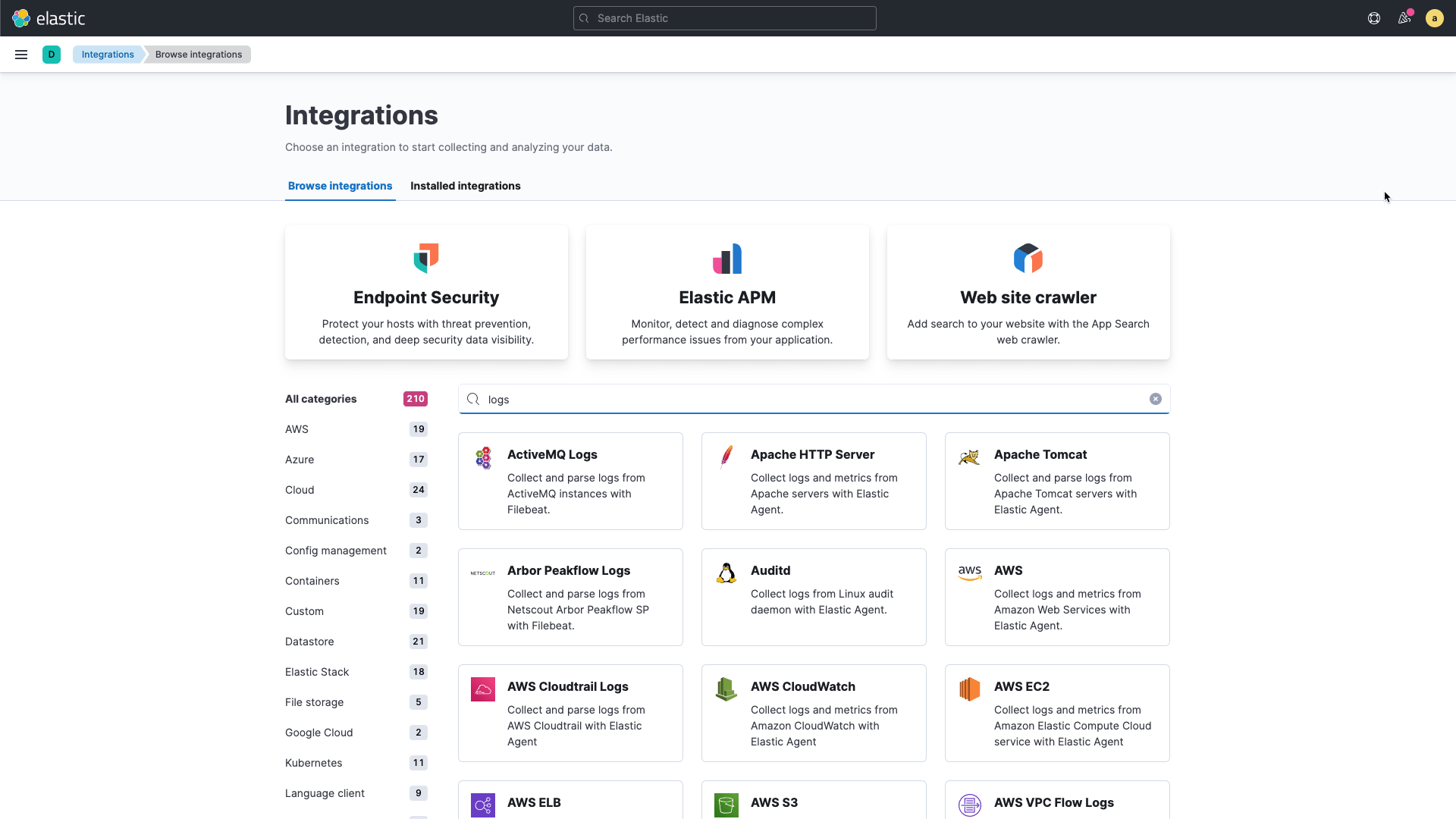

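With Elastic Agent, you can deploy log monitoring quickly and easily. Draw on a broad range of supported log data sources to bring infrastructure and application data together in one context. Elastic Observability supports common data sources out of the box, with no configuration required, so you can easily ship and visualize cloud service logs generated by Amazon, Microsoft Azure, Google Cloud Platform, and cloud-native technologies.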

Extract insights from structured and unstructured logs in minutes

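Whatever the source, you can parse, transform, and enrich logs to suit the use cases of every team and technology stack, turning unstructured data into a valuable asset. Use schema on write to boost query performance on structured log data, or take advantage of schema on read with runtime fields to extract, calculate, and transform fields at query time.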
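To make the trade-off concrete, here is a minimal conceptual sketch in plain Python. It is not how Elasticsearch implements either mode; the log format, the regular expression, and every function name are assumptions made up for illustration. Schema on write parses each line once at ingest time, while schema on read keeps the raw text and pays the extraction cost on every query.

```python
import re

# Hypothetical access-log lines and pattern, used only for illustration.
RAW_LOGS = [
    '203.0.113.7 GET /checkout 500 1240',
    '198.51.100.4 GET /home 200 35',
    '203.0.113.7 POST /checkout 200 310',
]
LINE_PATTERN = re.compile(
    r'(?P<client_ip>\S+) (?P<method>\S+) (?P<path>\S+) '
    r'(?P<status>\d{3}) (?P<duration_ms>\d+)'
)


def parse_line(line):
    """Parse one raw line into typed fields; return None if it does not match."""
    match = LINE_PATTERN.fullmatch(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields['status'] = int(fields['status'])
    fields['duration_ms'] = int(fields['duration_ms'])
    return fields


def index_schema_on_write(lines):
    """Schema on write: parse once at ingest and store structured documents."""
    docs = []
    for line in lines:
        fields = parse_line(line)
        if fields is not None:
            docs.append(fields)
    return docs


def query_schema_on_read(lines, field, predicate):
    """Schema on read: keep raw text and extract the field at query time."""
    hits = []
    for line in lines:
        fields = parse_line(line)  # extraction cost is paid on every query
        if fields is not None and predicate(fields[field]):
            hits.append(line)
    return hits


# Queries against pre-parsed documents only compare stored values.
structured_docs = index_schema_on_write(RAW_LOGS)
slow_on_write = [d for d in structured_docs if d['duration_ms'] > 1000]

# Queries against raw text can target any field without reindexing.
errors_on_read = query_schema_on_read(RAW_LOGS, 'status', lambda s: s >= 500)
```

The first style favors fields you query often; the second suits fields you only discover you need after the data has already been stored.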

Scale with your users and search everything

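To eliminate blind spots, you need to ingest all of your telemetry data so that no data critical to problem resolution is missed. Elastic Common Schema (ECS) makes unified data modeling possible: data from widely different sources can be normalized and brought together. Once your data is unified under ECS, use cross-cluster search to query across all of your data in data centers and clouds from a single console.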
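As a rough illustration of what normalizing to a common schema buys you, the sketch below renames fields from two differently shaped sources to shared ECS field names so that one query works across both. The source names (`nginx`, `app`), their original field names, and the `to_ecs` helper are hypothetical; the target names such as `source.ip`, `url.path`, and `http.response.status_code` are ECS fields. Flat dotted keys are used here for brevity.

```python
# Per-source mappings from original field names to ECS field names.
# The sources and their original field names are hypothetical examples.
FIELD_MAPPINGS = {
    'nginx': {
        'remote_addr': 'source.ip',
        'status': 'http.response.status_code',
        'request_uri': 'url.path',
        'time': '@timestamp',
    },
    'app': {
        'clientIp': 'source.ip',
        'httpStatus': 'http.response.status_code',
        'route': 'url.path',
        'ts': '@timestamp',
    },
}


def to_ecs(source, event):
    """Rename one event's fields to ECS names, tagging where it came from."""
    mapping = FIELD_MAPPINGS[source]
    doc = {'event.dataset': source}
    for key, value in event.items():
        # Unmapped fields are kept under labels.* rather than dropped
        # (a simplification chosen for this sketch).
        ecs_name = mapping.get(key, f'labels.{key}')
        doc[ecs_name] = value
    return doc


events = [
    to_ecs('nginx', {
        'remote_addr': '203.0.113.7',
        'status': 502,
        'request_uri': '/checkout',
        'time': '2024-01-01T10:00:00Z',
    }),
    to_ecs('app', {
        'clientIp': '203.0.113.7',
        'httpStatus': 200,
        'route': '/home',
        'ts': '2024-01-01T10:00:01Z',
        'tenant': 'acme',
    }),
]

# One filter now works across both sources because the field names agree.
server_errors = [e for e in events if e['http.response.status_code'] >= 500]
same_client = [e for e in events if e['source.ip'] == '203.0.113.7']
```

Once every source shares field names, a single query can span them all without per-source special cases.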

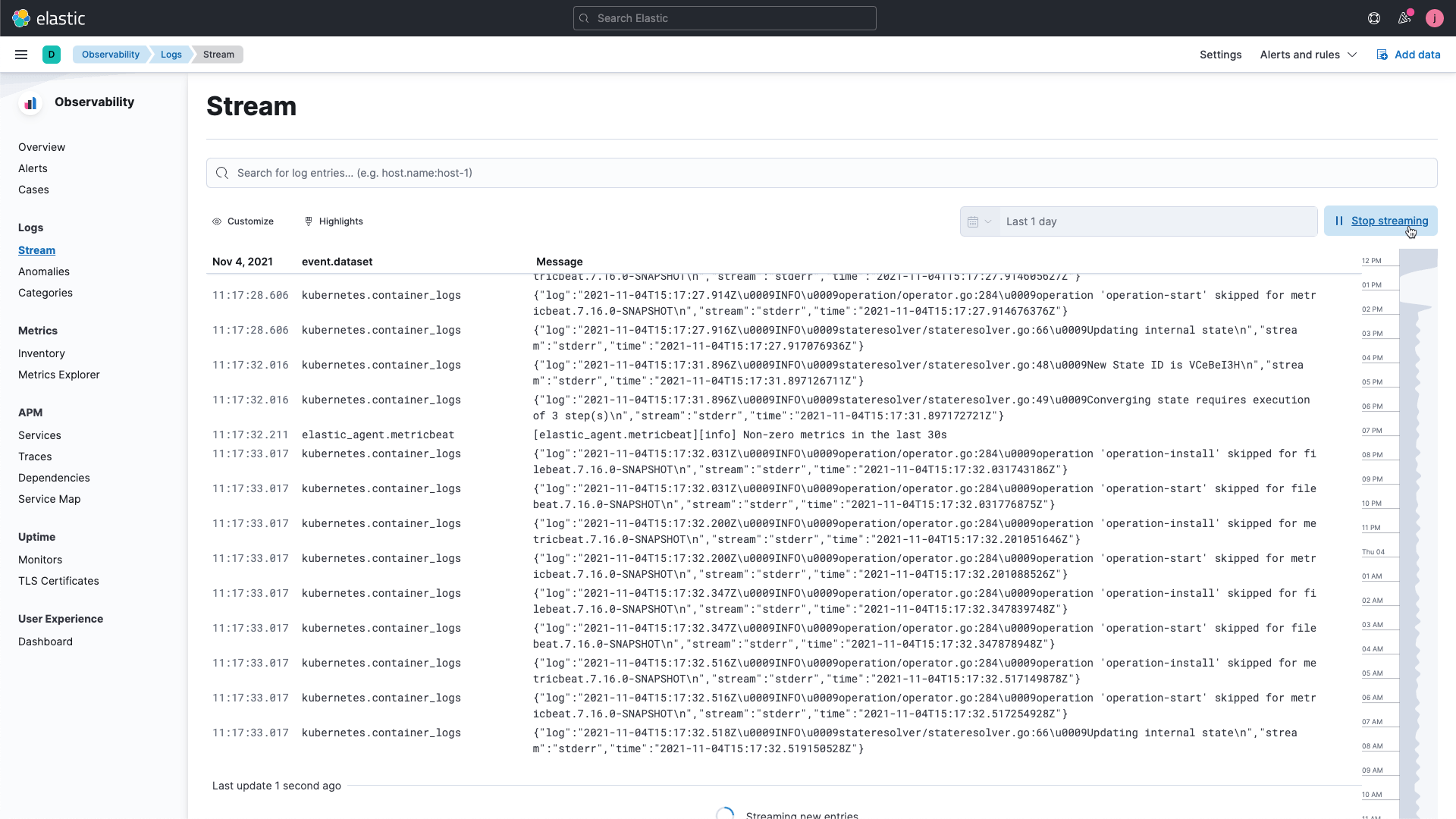

Troubleshoot in real time with live tail

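Collect every log file flowing through your servers, virtual machines, and containers, and monitor them in an intuitive interface purpose-built for viewing logs. Pin structured fields to explore related logs without leaving the current screen. Stream logs into Kibana in real time for a console-like experience.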

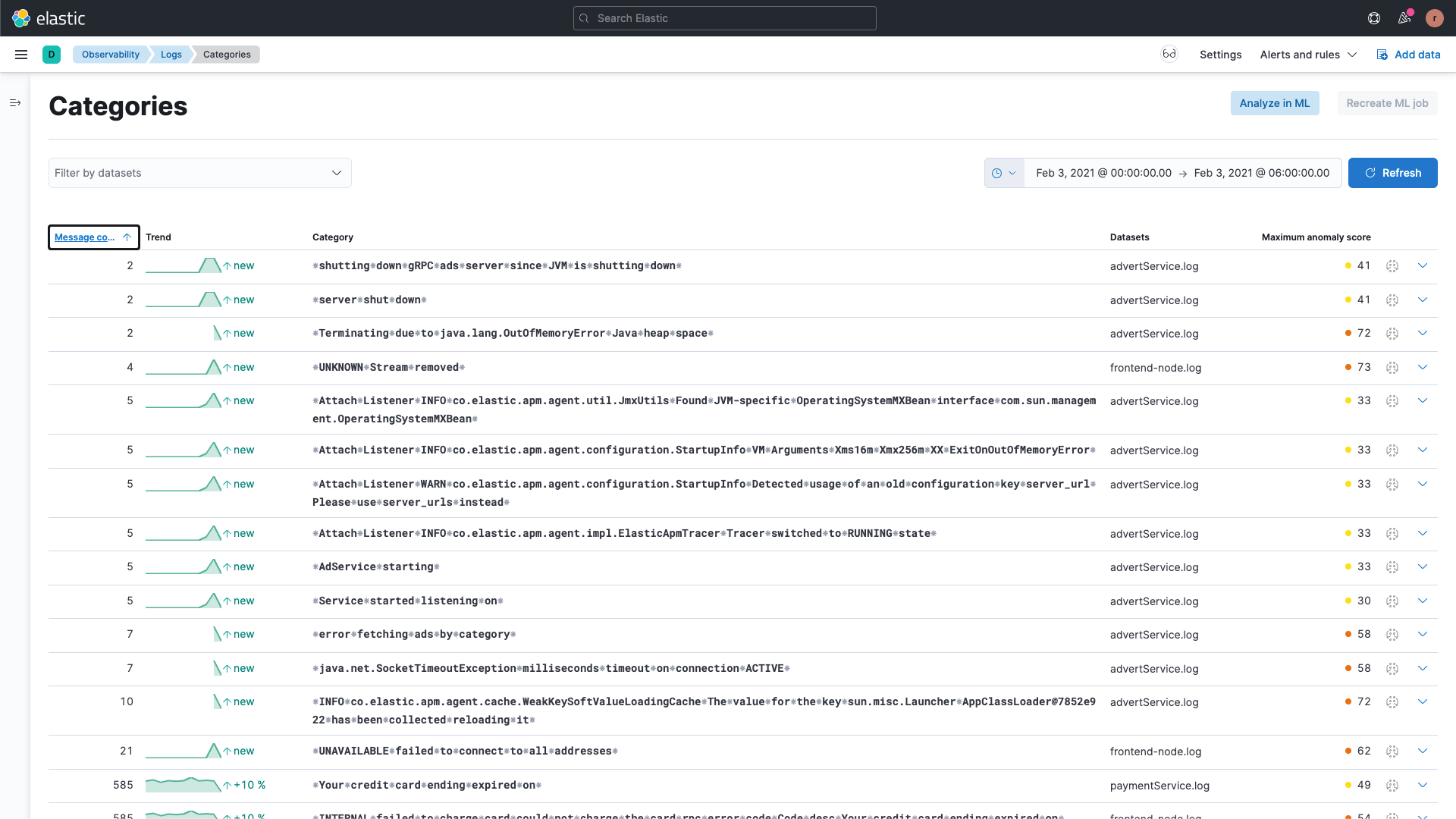

Detect patterns and outliers with log categorization and anomaly detection

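Identify common patterns, trends, and outliers to help isolate performance and availability issues. Automatically apply machine learning jobs that require no configuration to all of your log messages for fast detection and correlation, and resolve application issues faster than ever.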
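The idea behind log categorization can be sketched in a few lines of Python. This is a deliberately naive stand-in, not the machine learning that Elastic applies: it assumes that any token containing a digit is variable, masks it, and treats categories that make up a small share of all messages as outliers. The sample messages, the `<*>` placeholder, and the threshold are all illustrative assumptions.

```python
import re
from collections import Counter

# Assumption for this sketch: a token containing any digit is variable.
VARIABLE_TOKEN = re.compile(r'\d')


def categorize(message):
    """Mask variable tokens so messages with the same shape share a pattern."""
    tokens = message.split()
    return ' '.join('<*>' if VARIABLE_TOKEN.search(t) else t for t in tokens)


def find_rare_categories(messages, max_share):
    """Return patterns whose share of all messages is at most max_share."""
    counts = Counter(categorize(m) for m in messages)
    total = len(messages)
    return sorted(p for p, c in counts.items() if c / total <= max_share)


# Hypothetical log messages.
LOG_MESSAGES = [
    'User 42 logged in',
    'User 7 logged in',
    'User 981 logged in',
    'Request 17 took 35ms',
    'Request 18 took 40ms',
    'Request 19 took 38ms',
    'Request 20 took 41ms',
    'Disk failure on node-3',
]

pattern_counts = Counter(categorize(m) for m in LOG_MESSAGES)
rare_patterns = find_rare_categories(LOG_MESSAGES, max_share=0.2)
```

Grouping by pattern turns eight lines into three categories, and the single disk failure stands out as the rare one worth a closer look.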