Detecting anomalous locations in geographic data

If your data includes geographic fields, you can use machine learning features to detect anomalous behavior, such as a credit card transaction that occurs in an unusual location or a web request that has an unusual source location.

To run this type of anomaly detection job, you must have machine learning features set up. You must also have time series data that contains spatial data types. In particular, you must have:

- two comma-separated numbers of the form latitude,longitude,

- a geo_point field,

- a geo_shape field that contains point values, or

- a geo_centroid aggregation

The latitude and longitude must be in the range -180 to 180 and represent a point on the surface of the Earth.
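As a rough illustration of the string form described above, the following Python sketch parses a latitude,longitude value and applies the range stated in the text. The function name parse_lat_lon is hypothetical, and this is only a client-side sanity check, not how the machine learning features validate input.

```python
from typing import Tuple


def parse_lat_lon(value: str) -> Tuple[float, float]:
    """Parse a 'latitude,longitude' string and check the documented range."""
    parts = value.split(",")
    if len(parts) != 2:
        raise ValueError(f"expected 'latitude,longitude', got {value!r}")
    # float() raises ValueError for non-numeric text.
    lat, lon = (float(part.strip()) for part in parts)
    for name, number in (("latitude", lat), ("longitude", lon)):
        # Range taken from the text above (-180 to 180); NaN also fails this check.
        if not -180.0 <= number <= 180.0:
            raise ValueError(f"{name} {number} is outside -180 to 180")
    return lat, lon


print(parse_lat_lon("40.7128,-74.0060"))
```

Running a check like this before indexing can catch swapped separators or malformed values early, which is easier than diagnosing empty results after the job has run.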

This example uses the sample eCommerce orders and sample web logs data sets. For more information, see Add the sample data.

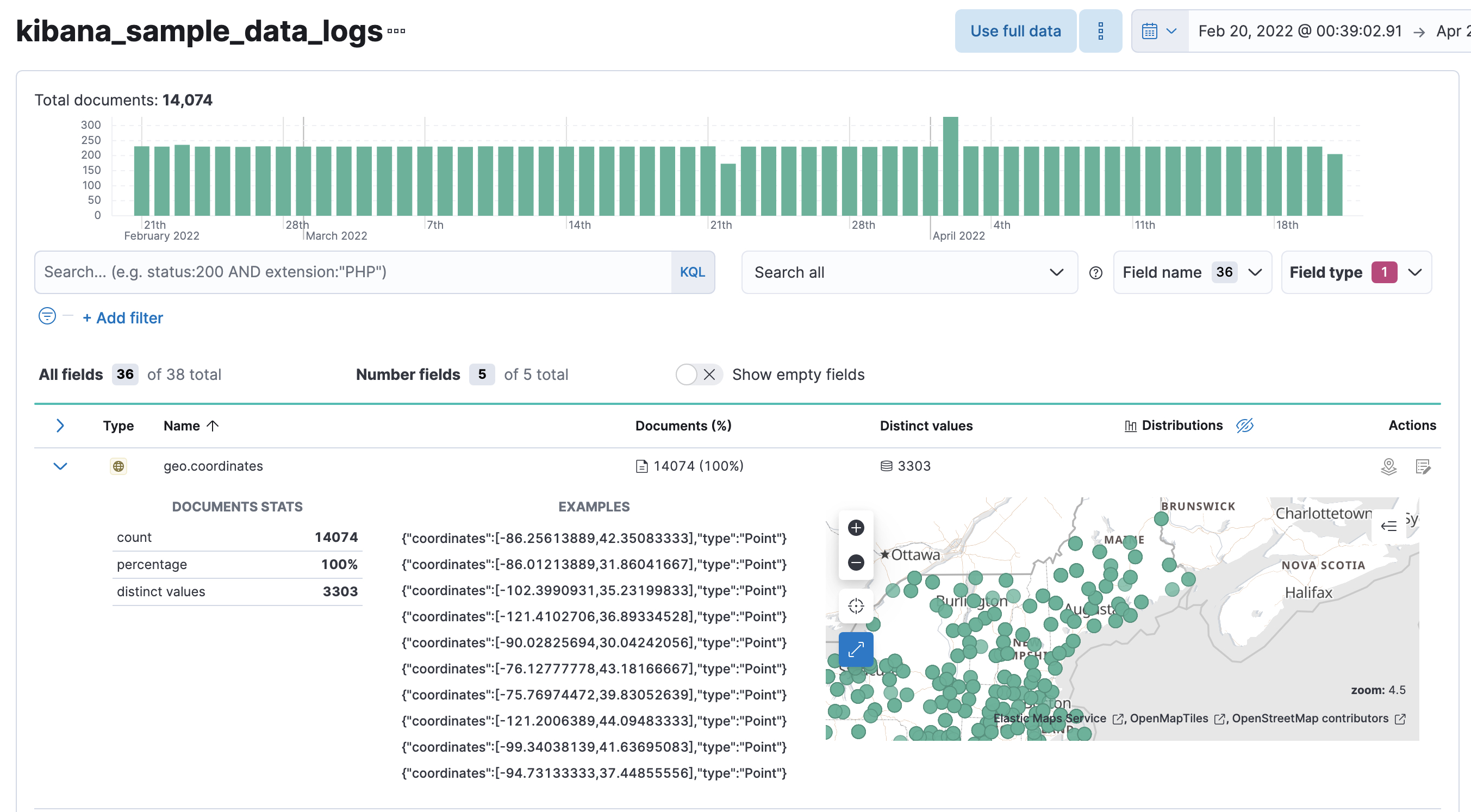

To get the best results from machine learning analytics, you must understand your data. You can use the Data Visualizer in the Machine Learning app for this purpose. Search for specific fields or field types, such as geo-point fields in the sample data sets. You can see how many documents contain those fields within a specific time period and sample size. You can also see the number of distinct values, a list of example values, and preview them on a map. For example:

There are a few limitations to consider before you create this type of job:

- You cannot create forecasts for anomaly detection jobs that contain geographic functions.

- You cannot add custom rules with conditions to detectors that use geographic functions.
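The second limitation can be checked before a job is submitted. The sketch below is a minimal example that scans a job configuration, held as a Python dictionary, for lat_long detectors that carry custom rules with conditions. It assumes the custom_rules and conditions property names from the create anomaly detection jobs API and treats lat_long as the geographic function, since it is the only one named on this page. The helper name geo_rule_conflicts is hypothetical.

```python
from typing import Any, Dict, List


def geo_rule_conflicts(job: Dict[str, Any]) -> List[str]:
    """Return one message per lat_long detector rule that has conditions."""
    problems: List[str] = []
    detectors = job.get("analysis_config", {}).get("detectors", [])
    for index, detector in enumerate(detectors):
        if detector.get("function") != "lat_long":
            continue  # Only geographic detectors are affected by this limitation.
        for rule in detector.get("custom_rules", []):
            if rule.get("conditions"):
                problems.append(
                    f"detector {index}: lat_long detector has a custom rule with conditions"
                )
    return problems


# Illustrative job body; the rule values are assumptions made for this example.
job = {
    "analysis_config": {
        "detectors": [
            {
                "function": "lat_long",
                "field_name": "geoip.location",
                "custom_rules": [
                    {
                        "actions": ["skip_result"],
                        "conditions": [
                            {"applies_to": "actual", "operator": "gt", "value": 10}
                        ],
                    }
                ],
            },
            {"function": "high_sum", "field_name": "bytes"},
        ]
    }
}
print(geo_rule_conflicts(job))
```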

If those limitations are acceptable, try creating an anomaly detection job that uses the lat_long function to analyze your own data or the sample data sets.

To create an anomaly detection job that uses the lat_long function, navigate to the Anomaly Detection Jobs page in the main menu, or use the global search field. Then click Create job and select the appropriate job wizard. Alternatively, use the create anomaly detection jobs API.

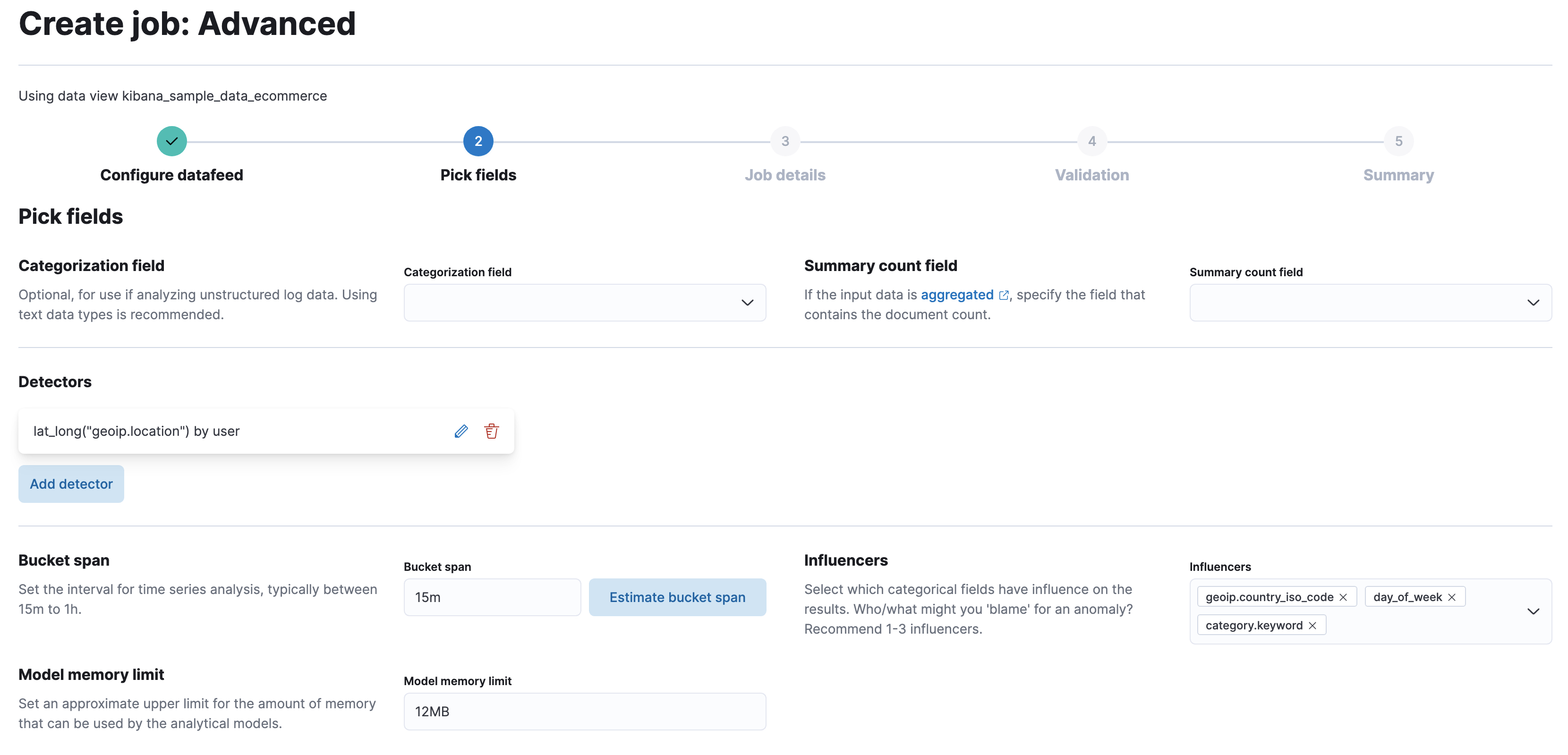

For example, create a job that analyzes the sample eCommerce orders data set to find orders with unusual coordinates (geoip.location values) relative to the past behavior of each customer (user ID):

API example

```console
PUT _ml/anomaly_detectors/ecommerce-geo
{
  "analysis_config" : {
    "bucket_span": "15m",
    "detectors": [
      {
        "detector_description": "Unusual coordinates by user",
        "function": "lat_long",
        "field_name": "geoip.location",
        "by_field_name": "user"
      }
    ],
    "influencers": [
      "geoip.country_iso_code",
      "day_of_week",
      "category.keyword"
    ]
  },
  "data_description" : {
    "time_field": "order_date"
  },
  "datafeed_config": {
    "datafeed_id": "datafeed-ecommerce-geo",
    "indices": ["kibana_sample_data_ecommerce"],
    "query": {
      "bool": {
        "must": [
          {
            "match_all": {}
          }
        ]
      }
    }
  }
}

POST _ml/anomaly_detectors/ecommerce-geo/_open

POST _ml/datafeeds/datafeed-ecommerce-geo/_start
{
  "end": "2022-03-22T23:00:00Z"
}
```

The requests in this example do the following:

- Create the anomaly detection job.

- Create the datafeed.

- Open the job.

- Start the datafeed. Because the sample data sets often contain timestamps that are later than the current date, specify an appropriate end date for the datafeed.
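One way to choose an end date is to use the current time, so that the datafeed stops before any future-dated sample documents. The following Python sketch builds the JSON body for the start datafeed request in the same timestamp format as the example. The helper name datafeed_start_body is hypothetical, and sending the request to the cluster is left out.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def datafeed_start_body(end: Optional[datetime] = None) -> str:
    """Return a JSON body with an ISO 8601 UTC 'end' value (defaults to now)."""
    end = end or datetime.now(timezone.utc)
    # Note: a naive datetime is interpreted as local time by astimezone().
    end_utc = end.astimezone(timezone.utc)
    return json.dumps({"end": end_utc.strftime("%Y-%m-%dT%H:%M:%SZ")})


print(datafeed_start_body(datetime(2022, 3, 22, 23, 0, tzinfo=timezone.utc)))
print(datafeed_start_body())  # Uses the current time as the end date.
```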

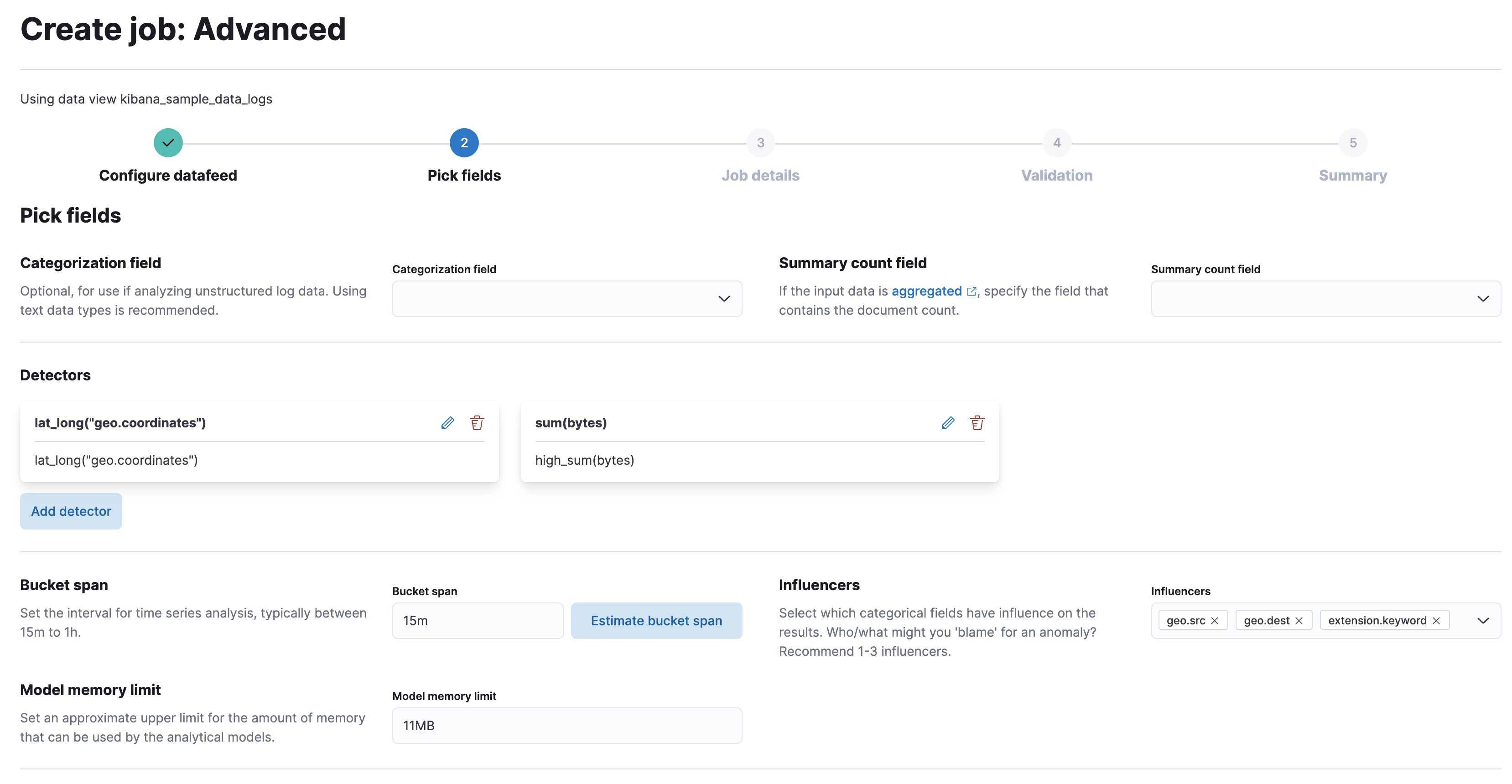

Alternatively, create a job that analyzes the sample web logs data set to detect events with unusual coordinates (geo.coordinates values) or unusually high sums of transferred data (bytes values):

API example

```console
PUT _ml/anomaly_detectors/weblogs-geo
{
  "analysis_config" : {
    "bucket_span": "15m",
    "detectors": [
      {
        "detector_description": "Unusual coordinates",
        "function": "lat_long",
        "field_name": "geo.coordinates"
      },
      {
        "function": "high_sum",
        "field_name": "bytes"
      }
    ],
    "influencers": [
      "geo.src",
      "extension.keyword",
      "geo.dest"
    ]
  },
  "data_description" : {
    "time_field": "timestamp",
    "time_format": "epoch_ms"
  },
  "datafeed_config": {
    "datafeed_id": "datafeed-weblogs-geo",
    "indices": ["kibana_sample_data_logs"],
    "query": {
      "bool": {
        "must": [
          {
            "match_all": {}
          }
        ]
      }
    }
  }
}

POST _ml/anomaly_detectors/weblogs-geo/_open

POST _ml/datafeeds/datafeed-weblogs-geo/_start
{
  "end": "2022-04-15T22:00:00Z"
}
```

The requests in this example do the following:

- Create the anomaly detection job.

- Create the datafeed.

- Open the job.

- Start the datafeed. Because the sample data sets often contain timestamps that are later than the current date, specify an appropriate end date for the datafeed.

After the anomaly detection jobs have processed some data, you can view the results in Kibana.

If you used APIs to create the jobs and datafeeds, you cannot see them in Kibana until you follow the prompts to synchronize the necessary saved objects.

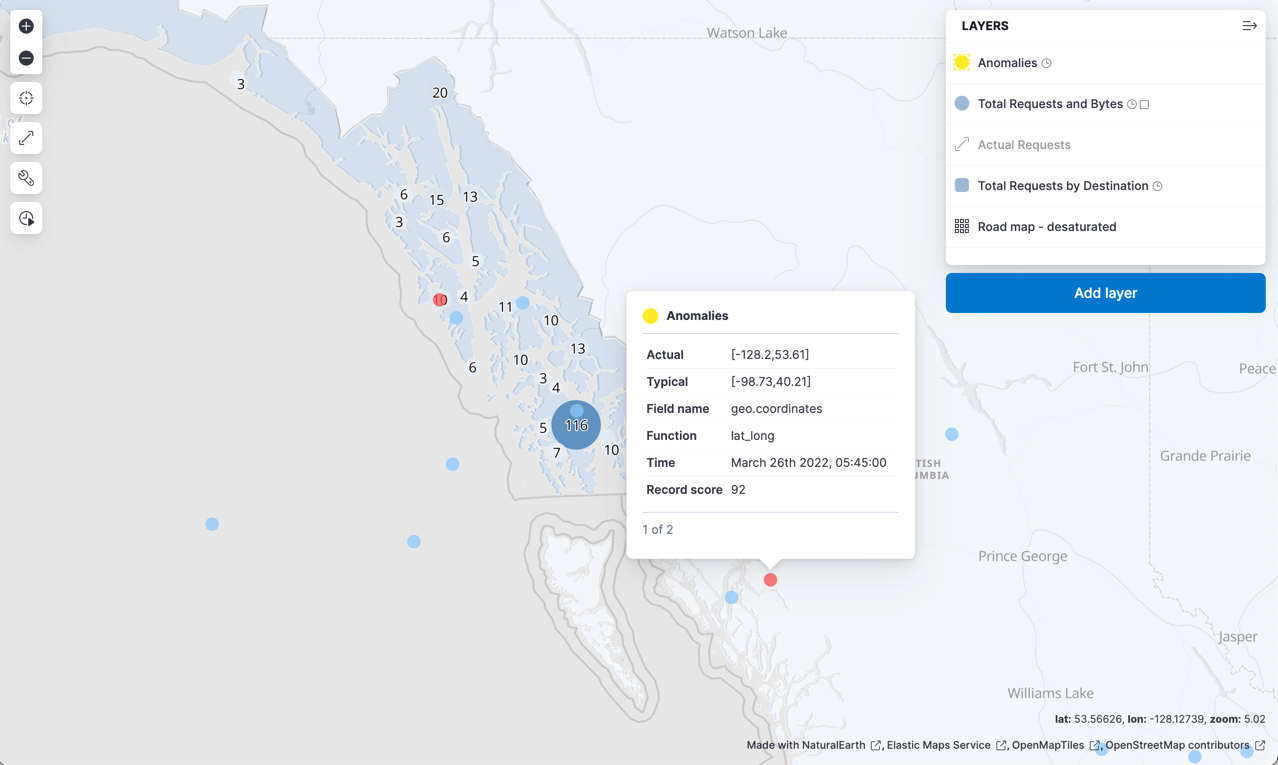

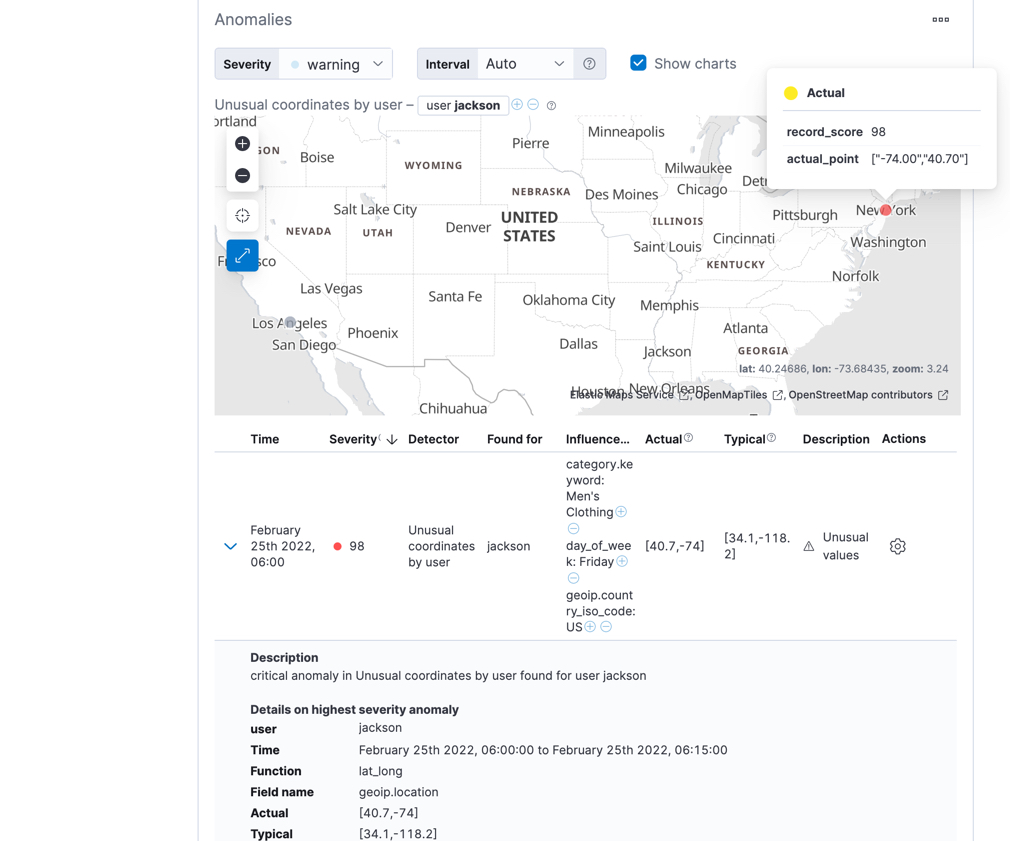

When you select a period that contains an anomaly in the Anomaly Explorer swim lane results, you can see a map of the typical and actual coordinates. For example, in the eCommerce sample data there is a user with anomalous shopping behavior:

A "typical" value is the centroid of the cluster of previously observed locations that is closest to the "actual" location at that time. For example, there may be one centroid near the user’s home and another near the user’s workplace, because many records are associated with each of these distinct locations.
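To make the idea of a closest centroid concrete, the sketch below picks, from a set of known centroids, the one nearest to an actual location by great-circle (haversine) distance. This is only an illustration of the concept under assumed inputs. The coordinates are invented, the function names are hypothetical, and the text does not say how the machine learning features cluster locations or measure distance.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]  # (latitude, longitude) in degrees

EARTH_RADIUS_KM = 6371.0  # Mean Earth radius, an approximation.


def haversine_km(a: Point, b: Point) -> float:
    """Great-circle distance between two points in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    # Clamp to 1.0 to guard against floating-point overshoot before asin().
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


def nearest_centroid(actual: Point, centroids: Iterable[Point]) -> Point:
    """Return the centroid with the smallest distance to the actual point."""
    return min(centroids, key=lambda centroid: haversine_km(actual, centroid))


# Invented centroids standing in for "home" and "workplace" clusters.
home, workplace = (40.71, -74.00), (40.75, -73.99)
print(nearest_centroid((40.76, -73.98), [home, workplace]))
```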

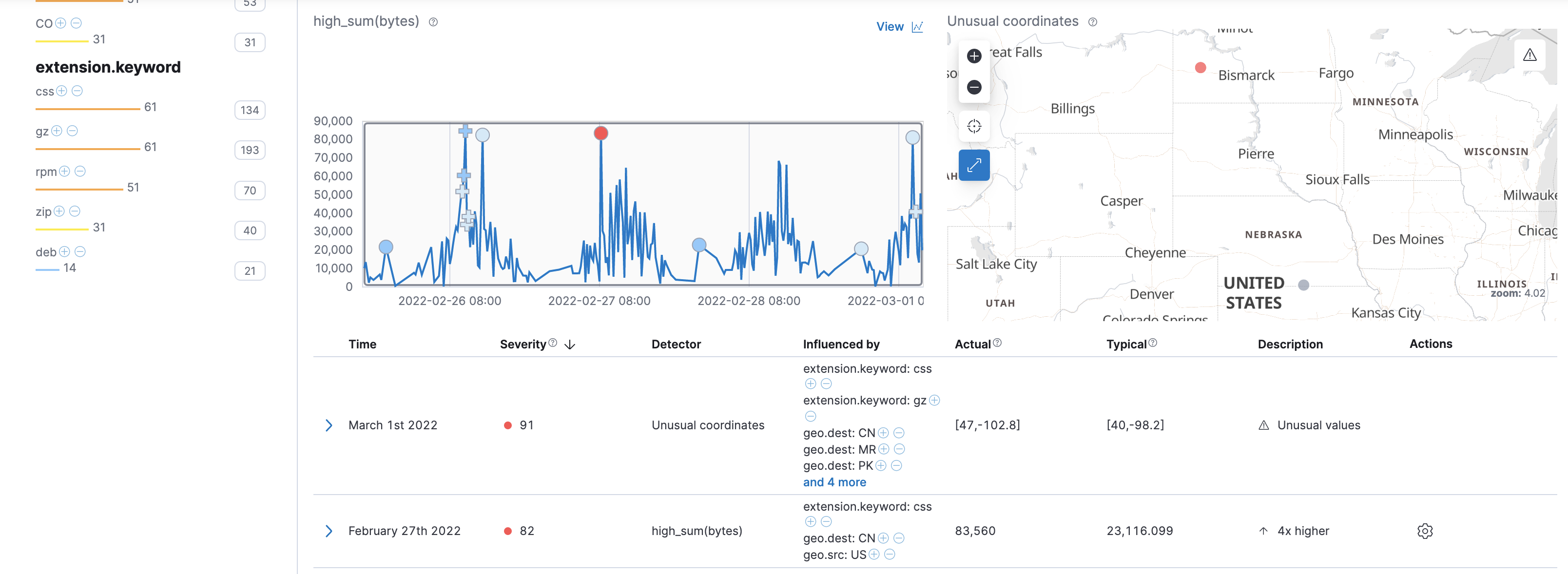

Likewise, there are time periods in the web logs sample data where there are both unusually high sums of data transferred and unusual geographical coordinates:

You can use the top influencer values to further filter your results and identify possible contributing factors or patterns of behavior.

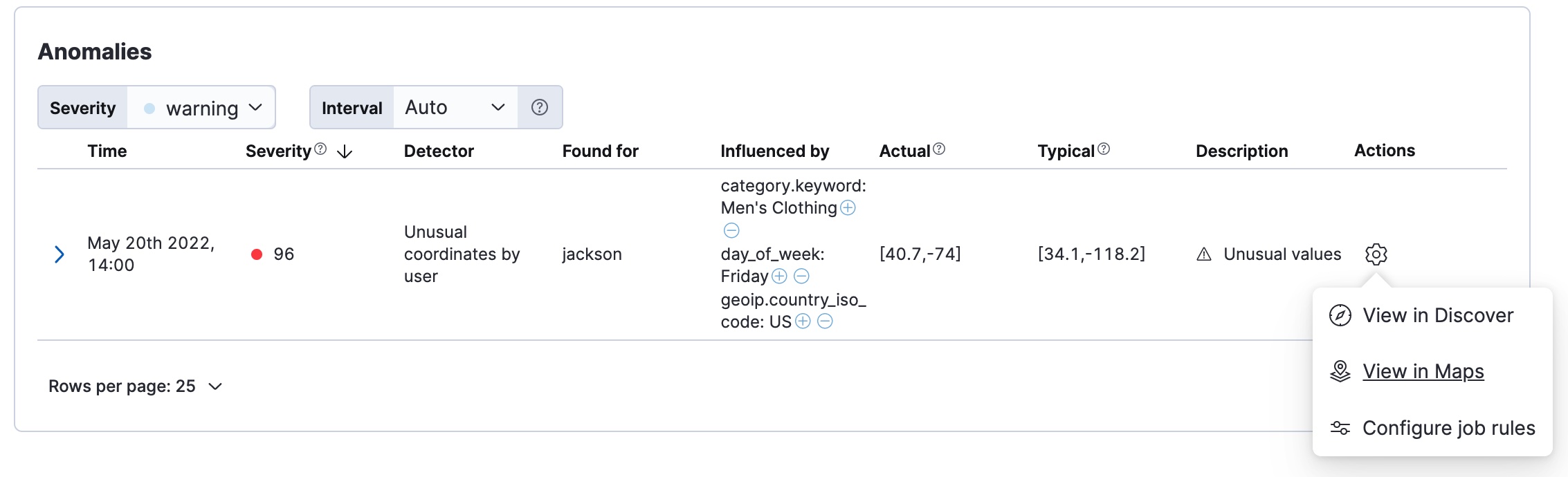

You can also view the anomaly in Maps by clicking View in Maps in the action menu in the anomaly table.

When you try this type of anomaly detection job with your own data, it might take some experimentation to find the best combination of buckets, detectors, and influencers to detect the type of behavior you’re seeking.
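When experimenting, it can help to generate several candidate job bodies from one template and compare their results. The Python sketch below builds create-job bodies that differ only in bucket span, reusing the field names from the eCommerce example. The helper name lat_long_job is hypothetical, and the 1h and 4h bucket spans are arbitrary illustrations rather than recommendations.

```python
import json
from typing import Any, Dict, Optional, Sequence


def lat_long_job(
    field_name: str,
    time_field: str,
    bucket_span: str = "15m",
    by_field_name: Optional[str] = None,
    influencers: Sequence[str] = (),
) -> Dict[str, Any]:
    """Build a job body with a single lat_long detector."""
    detector: Dict[str, Any] = {"function": "lat_long", "field_name": field_name}
    if by_field_name:
        # Model each value of this field separately, as in the eCommerce example.
        detector["by_field_name"] = by_field_name
    return {
        "analysis_config": {
            "bucket_span": bucket_span,
            "detectors": [detector],
            "influencers": list(influencers),
        },
        "data_description": {"time_field": time_field},
    }


# One candidate per bucket span; only the span changes between them.
candidates = [
    lat_long_job("geoip.location", "order_date", span, "user", ["geoip.country_iso_code"])
    for span in ("15m", "1h", "4h")
]
print(json.dumps(candidates[1], indent=2))
```

Each candidate would still need its own job ID and datafeed before it could be created.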

For more information about anomaly detection concepts, see Concepts. For the full list of functions that you can use in anomaly detection jobs, see Function reference. For more anomaly detection examples, see Examples.

To integrate the results from your anomaly detection job in Maps, click Add layer, then select ML Anomalies. You must then select or create an anomaly detection job that uses the lat_long function.

For example, you can extend the map example from Build a map to compare metrics by country or region to include a layer that uses your web logs anomaly detection job: