Create an Elastic Cloud Hosted deployment

An Elastic Cloud deployment includes Elastic Stack components such as Elasticsearch, Kibana, and other features, allowing you to store, search, and analyze your data. You can spin up a proof-of-concept deployment to learn more about what Elastic can do for you.

You can also create a deployment using the Elastic Cloud API. This is a useful alternative for more advanced needs, such as creating a deployment encrypted with your own key.
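As a rough illustration of the API route, the sketch below prepares (but does not send) a create-deployment request using only the Python standard library. The endpoint path, the `ApiKey` authorization scheme, and the payload field names reflect our understanding of the Elastic Cloud API and should be checked against the API reference. The region, version, and template ID values, and the `EC_API_KEY` environment variable name, are placeholders chosen for this example. A real request will likely need more detail, such as topology sizing and a Kibana resource.

```python
import json
import os
import urllib.request

# Assumed endpoint of the Elastic Cloud API; verify against the API reference.
API_URL = "https://api.elastic-cloud.com/api/v1/deployments"


def build_payload(name, region, version, template_id):
    """Return a minimal create-deployment body (field names are assumptions)."""
    return {
        "name": name,
        "resources": {
            "elasticsearch": [
                {
                    "ref_id": "main-elasticsearch",
                    "region": region,
                    "plan": {
                        "elasticsearch": {"version": version},
                        "deployment_template": {"id": template_id},
                    },
                }
            ]
        },
    }


def build_request(payload, api_key):
    """Prepare, but do not send, the POST request."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"ApiKey {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


payload = build_payload(
    name="dev",
    region="gcp-us-central1",             # placeholder region ID
    version="8.17.0",                     # placeholder stack version
    template_id="gcp-storage-optimized",  # placeholder template ID
)
request = build_request(payload, os.environ.get("EC_API_KEY", "placeholder-key"))

# To actually create the deployment, send it with:
#   urllib.request.urlopen(request)
print(json.dumps(payload, indent=2))
```

Keeping the payload builder separate from the request builder makes it easy to inspect or log the body before anything is sent.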

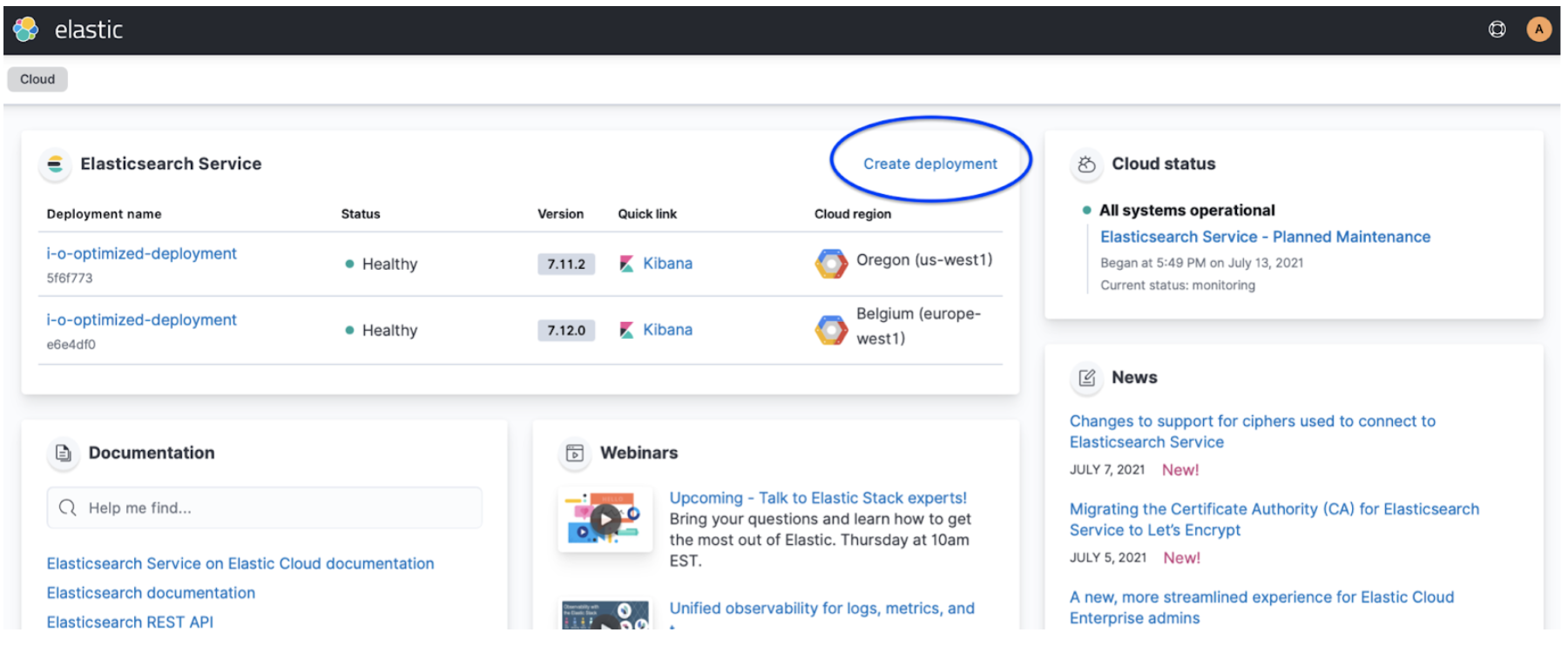

Log in to your cloud.elastic.co account and select Create deployment from the Elastic Cloud main page:

Select a solution view for your deployment. Solution views define the navigation and set of features that will be first available in your deployment. You can change it later, or create different spaces with different solution views within your deployment.

To learn more about what each solution offers, check Elasticsearch, Observability, and Security.

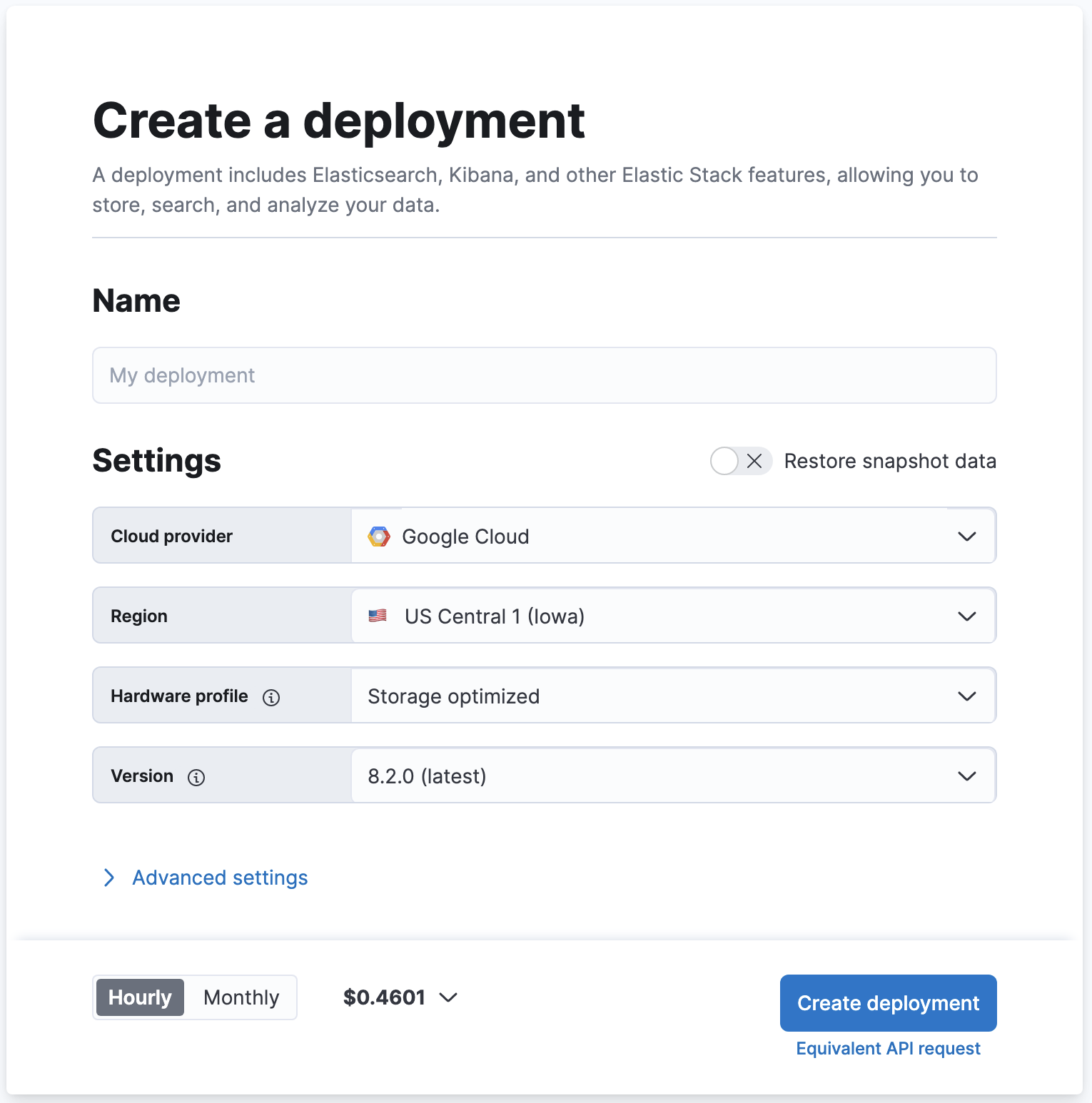

From the main Settings, you can change the cloud provider and region that host your deployment, the stack version, and the hardware profile, or restore data from another deployment (Restore snapshot data):

Cloud provider: The cloud platform that hosts your deployment. Supported providers are Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. You do not need to provide your own keys.

Region: The cloud platform’s region where your deployment will live. If you have compliance or latency requirements, you can create your deployment in any of our supported regions. The region should be as close as possible to the location of your data.

Hardware profile: The underlying virtual hardware that your Elastic Stack is deployed on. Each hardware profile provides a unique blend of storage, RAM, and vCPU sizes. Select the hardware profile best suited to your use case: for example, CPU Optimized if you have a search-heavy use case that’s bound by compute resources. For more details, check the hardware profiles section. You can also view the virtual hardware details that power hardware profiles. With the Advanced settings option, you can configure the underlying virtual hardware associated with each profile.

Version: The Elastic Stack version that will get deployed. Defaults to the latest version. Our version policy describes which versions are available to deploy.

Snapshot source: To create a deployment from a snapshot, select a snapshot source. You need to configure snapshots and establish a snapshot lifecycle management policy and repository before you can restore from a snapshot. The snapshot options depend on the stack version the deployment is running.

Name: A human-friendly name for your cluster, which is used to identify it in the Elastic Cloud Console. Common choices are dev, prod, test, or something more domain specific.

Expand Advanced settings to configure your deployment for encryption using a customer-managed key, autoscaling, storage, memory, and vCPU. Check Customize your deployment for more details.

Tip: Trial users won’t find the Advanced settings when they create their first deployment. This option is available on the deployment’s edit page once the deployment is created.

Select Create deployment. It takes a few minutes for your deployment to be created. While waiting, you are prompted to save the admin credentials for your deployment, which provide you with superuser access to Elasticsearch. Keep these credentials safe, as they are shown only once. These credentials also help you add data using Kibana. If you need to refresh these credentials, you can reset the password.

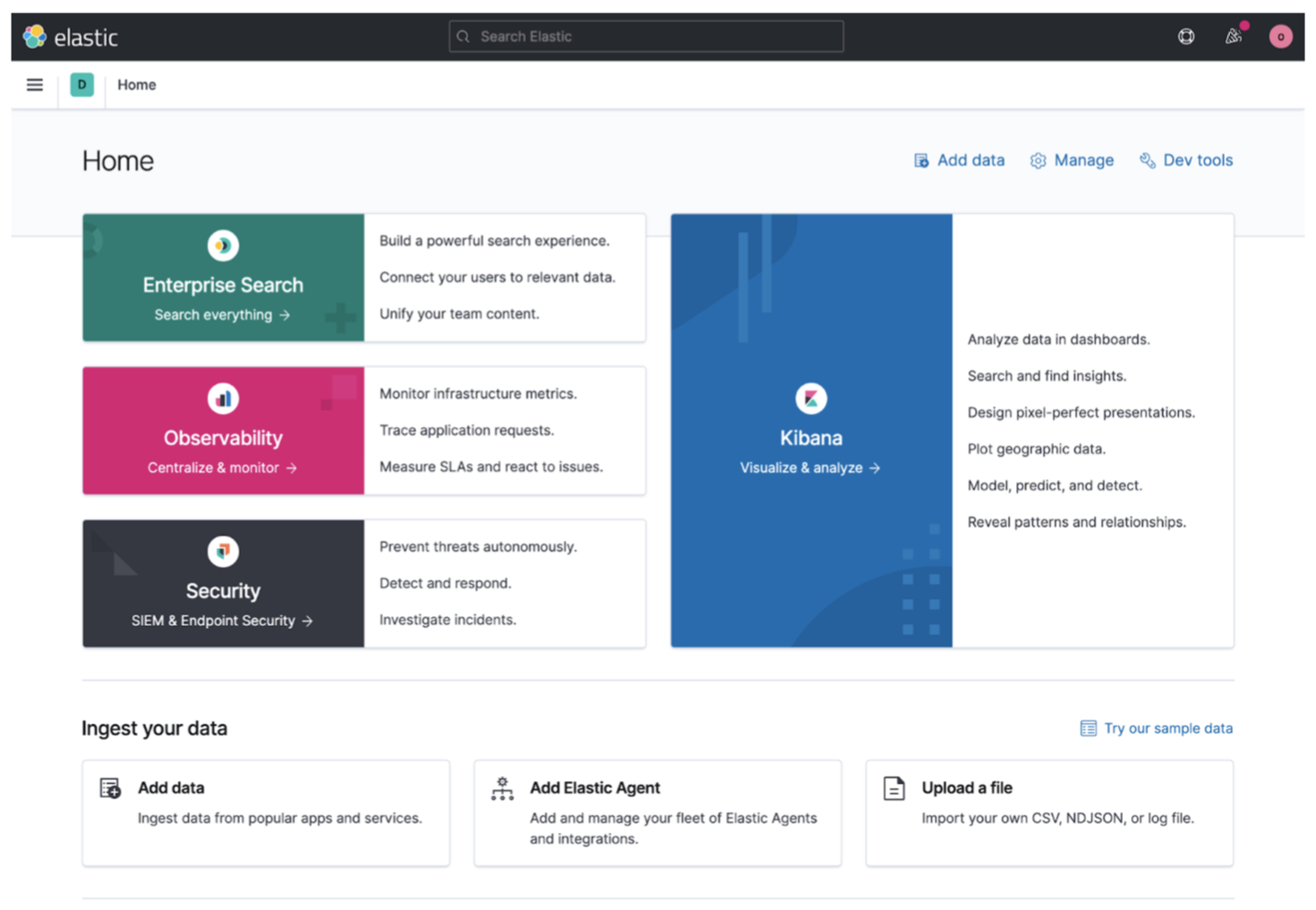
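Once you have saved the admin credentials, a quick way to confirm they work is to call the deployment's Elasticsearch endpoint with HTTP Basic authentication. The sketch below only builds the request. It assumes the admin username is `elastic`, and it reads the endpoint and password from the hypothetical environment variables `ES_ENDPOINT` and `ES_PASSWORD`, so the password never appears in source code.

```python
import base64
import os
import urllib.request


def basic_auth_header(username, password):
    """Encode credentials as an HTTP Basic Authorization header value."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_health_request(endpoint, username, password):
    """Prepare, but do not send, a GET request to the cluster health API."""
    url = endpoint.rstrip("/") + "/_cluster/health"
    return urllib.request.Request(
        url,
        headers={"Authorization": basic_auth_header(username, password)},
    )


# Hypothetical variable names; the fallback endpoint is a non-routable placeholder.
endpoint = os.environ.get("ES_ENDPOINT", "https://my-deployment.es.example.invalid")
password = os.environ.get("ES_PASSWORD", "changeme")

# "elastic" is assumed to be the admin username shown with the saved credentials.
health_request = build_health_request(endpoint, "elastic", password)

# To send the request once real values are set:
#   with urllib.request.urlopen(health_request) as response:
#       print(response.read().decode("utf-8"))
```

For anything beyond a one-off check, an API key with narrower privileges is usually a safer choice than the superuser password.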

Once the deployment is ready, select Continue to open the deployment’s main page. From here, you can start ingesting data or simply try a sample data set to get started.

At any time, you can manage and adjust the configuration of your deployment to your needs, add extra layers of security, or (highly recommended) set up health monitoring.

To make sure you’re all set for production, consider the following actions:

- Plan for your expected workloads and consider how many availability zones you’ll need.

- Create a deployment in the region you need and with a hardware profile that matches your use case.

- Change your configuration by turning on autoscaling, adding high availability, or adjusting components of the Elastic Stack.

- Add plugins and extensions to use Elastic-supported extensions or add your own custom dictionaries and scripts.

- Edit settings and defaults to fine-tune the performance of specific features.

- Manage your deployment as a whole to restart, upgrade, stop routing, or delete.

- Set up monitoring to learn how to configure your deployments for observability, which includes metric and log collection, troubleshooting views, and cluster alerts to automate performance monitoring.